Soft robots take the wheel: shared control between humans and robots

“When you pick a coffee cup from the table, you’ll first run your hand across the table to localize where you are. A traditional robot arm might approach the task similarly since it has a similar human-inspired shape,” said Laura Blumenschein, assistant professor of mechanical engineering. “But these new soft robots move through and perceive their environment differently. Their sensory feedback can’t easily be communicated through a joystick, or even a computer screen.”

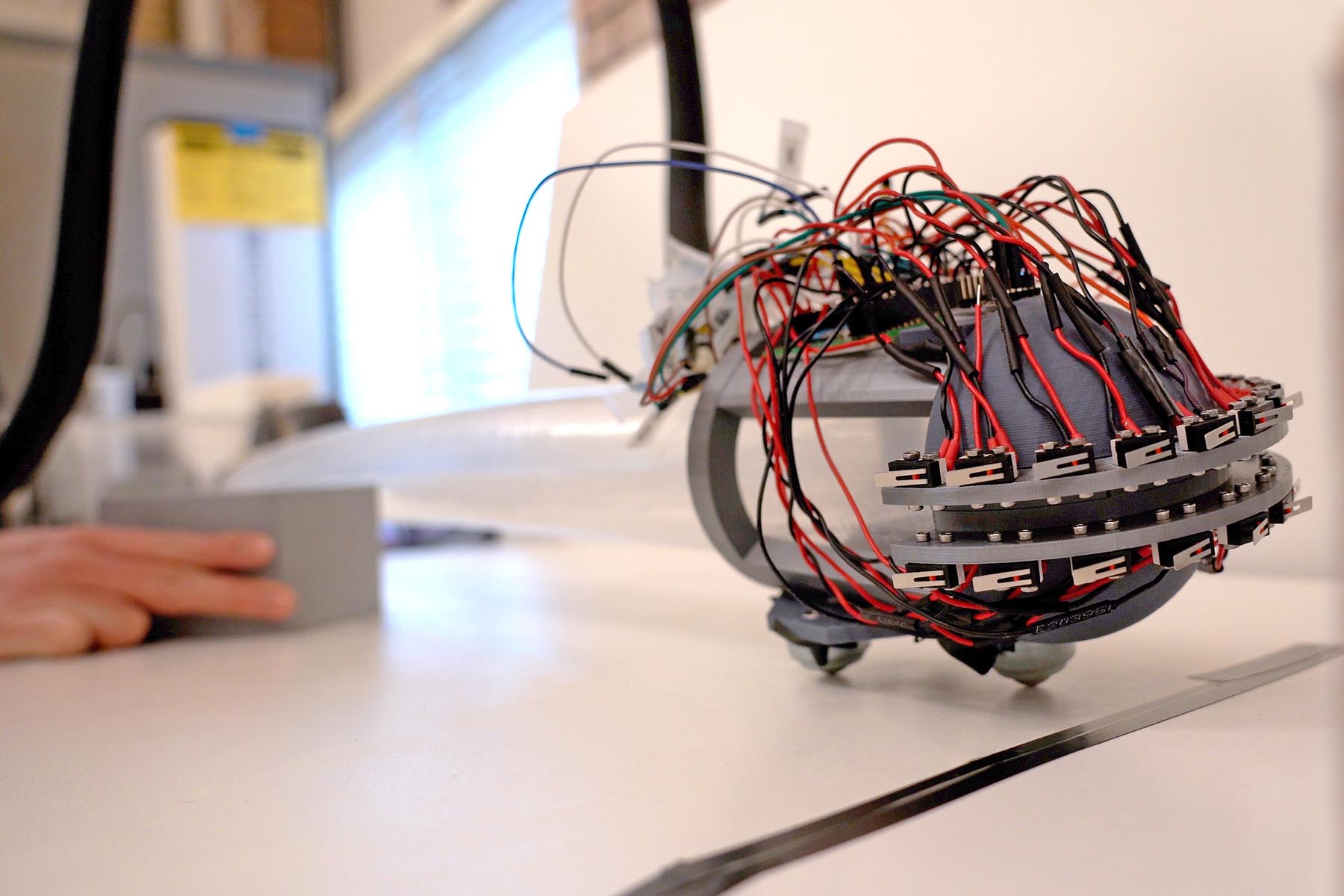

Blumenschein’s lab builds non-traditional soft robots, including a “vine” robot that uses inflatable segments to slither through openings that traditional robots (or humans) can’t traverse. “One classic example is search-and-rescue,” Blumenschein said. “If this vine robot is slithering through a hole and hits a wall, how does it tell the human that it’s hit a wall and can go no further in this direction? How do we decide when the robot should operate autonomously, and when the human should take control?”

To explore these issues, Blumenschein has teamed up with Denny Yu, associate professor of industrial engineering. They recently received a three-year, $549,000 grant from the National Science Foundation (NSF) as part of its Mind, Machine and Motor Nexus (M3X) program, which explores sensory interaction between human and synthetic actors.

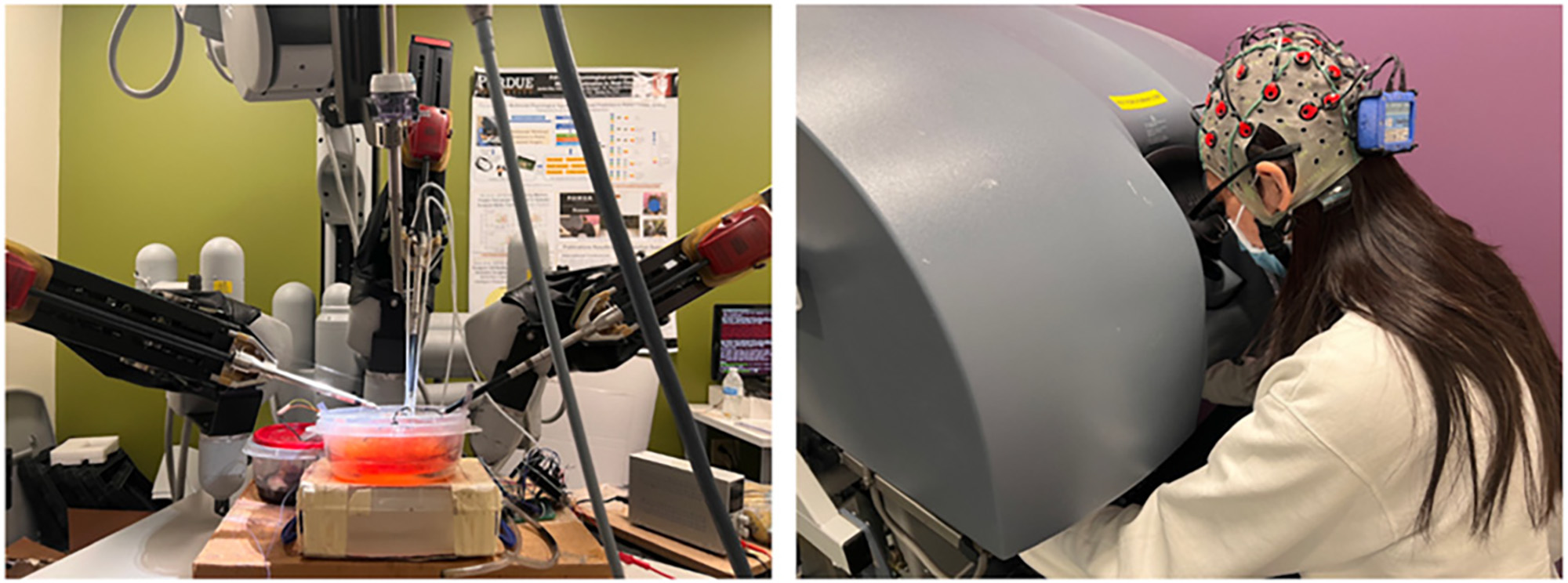

“Our research lab studies this interaction in the healthcare arena — for example, doctors conducting surgeries with the help of robots,” Yu said. “There is a fine balance between enabling highly effective procedures, managing the cognitive and physical workloads of the surgeons, and maintaining the safety of the patients. Our challenge is to discover the best possible interaction between the humans and the robotic systems.”

“There’s often a gap between how robots are designed to operate, and how humans think they should operate,” Blumenschein said. “That gap is the most interesting place for us to start, because we want to bridge those two systems of perception to best accomplish the task.”

The team will start with pick-and-place experiments, which are the simplest tasks for both robots and humans. But when that pick-and-place process is performed by a soft “vine” robot, which moves in three dimensions and has soft end-effectors rather than a hard claw, will a human start to think like a vine? Or will the vine have to tailor its operation to best suit the human operator?

“We need to start thinking outside the box of traditional joysticks and touchscreens,” Blumenschein said. “For example, we’ve been experimenting with haptic feedback on some of our robots. Rather than a beeping alarm or a warning light, the human operating the robot feels tactile feedback through their hands. You might have also seen this in your car, when the adaptive cruise control buzzes the steering wheel to let you know that you need to take back manual control. We’ve found that using multiple senses — not just vision — increases the ability of humans and robots to work together to sense their environment and function more efficiently.”

“The best-case scenario is a system where humans and autonomous systems work together,” Yu said. “A shared control scheme is our final goal, so that human intuition and robotic capabilities work seamlessly in cooperation.”
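For readers curious what a shared control scheme can look like in practice, one common textbook approach is linear blending: the command sent to the robot is a weighted mix of what the human asks for and what the autonomous controller proposes. The sketch below is purely illustrative and is not the researchers' method. The function names, the `authority` weight, and the contact-force rule for shifting control toward the human are all assumptions made for this example.

```python
def blend_commands(human_cmd, auto_cmd, authority):
    """Linearly blend two motion commands of equal dimension.

    authority = 1.0 gives the human full control;
    authority = 0.0 gives the autonomous controller full control.
    """
    if not 0.0 <= authority <= 1.0:
        raise ValueError("authority must be between 0 and 1")
    if len(human_cmd) != len(auto_cmd):
        raise ValueError("commands must have the same dimension")
    return [authority * h + (1.0 - authority) * a
            for h, a in zip(human_cmd, auto_cmd)]


def authority_from_contact(contact_force, force_limit):
    """Hypothetical hand-over rule (an assumption for illustration):
    give the human more authority as the sensed contact force
    approaches a chosen limit, e.g. when the robot presses on a wall.
    """
    if force_limit <= 0:
        raise ValueError("force_limit must be positive")
    ratio = contact_force / force_limit
    return min(max(ratio, 0.0), 1.0)  # clamp to the range [0, 1]


# Example: the robot senses moderate contact, so control is split evenly.
authority = authority_from_contact(contact_force=5.0, force_limit=10.0)
command = blend_commands([1.0, 0.0], [0.0, 1.0], authority)
```

In this toy setup, a robot in free space would largely follow its own plan, while one pressing hard against an obstacle would defer to the operator. A real system would also need to decide how that shift is communicated to the human, for instance through the haptic cues described above.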

Sources: Laura Blumenschein, lhblumen@purdue.edu | Denny Yu, dennyyu@purdue.edu

Writer: Jared Pike, jaredpike@purdue.edu, 765-496-0374