Autonomous grasping robots will help future astronauts maintain space habitats

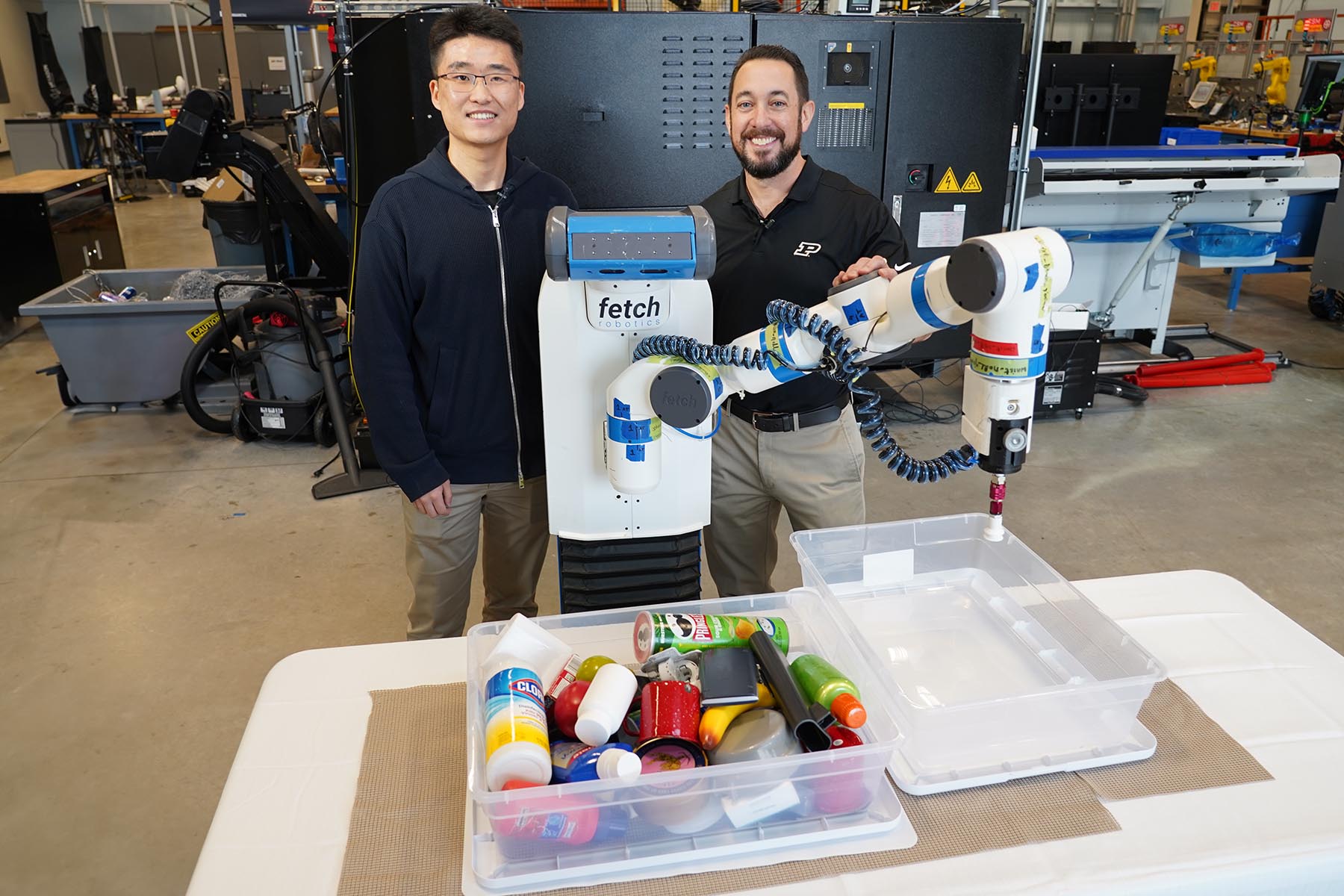

David Cappelleri, professor of mechanical engineering, works on robots at multiple scales, from agricultural robots powered by the Internet of Things to microrobots small enough to travel through the human body.

His latest challenge? Designing a robot that can autonomously perform maintenance on a space station.

“It’s easy for robots to manipulate things if the environment is set up perfectly, like at an Amazon warehouse,” Cappelleri said. “But in a dynamic environment like a human habitat, objects will sometimes be all over the place, or hidden underneath other objects. A robot needs to be smart enough to not only locate an object, but also position itself to successfully grasp and manipulate the object.”

Humans do this easily. We see an egg and a brick, and we instantly know that these two objects require different amounts of force to pick up, so we adjust our grip strength accordingly. Robots, however, often need a different grasping tool for each kind of job (“end-effectors,” in robot-speak). So a robot needs to be able to recognize different items, determine which end-effector to use, and calculate the orientation and location that will give it the best grip on each object.

And do it all in an environment with little or no gravity.

That’s where AI comes in. “The best way to tackle this challenge is to simulate the environment in a machine learning model,” said Juncheng Li, a Ph.D. student in Cappelleri’s lab. “We created numerous virtual objects of different sizes, shapes, and textures. Then we scattered them randomly in the model, so the virtual robot had to learn the best way to grasp each item. Repeat that simulation thousands of times with thousands of different objects, and your robot becomes very smart very quickly.”

Their research has been published in IEEE Transactions on Robotics.

They call their system Sim-Suction because it simulates a robot that uses a suction-cup end-effector to grasp and manipulate items. Such robots, common in industrial settings like factories and warehouses, use a small vacuum pump to create a seal between the suction-cup gripper and the object. Holding that vacuum requires a sufficiently flat surface and a suitable approach angle between the cup and the object.
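To make the two seal conditions concrete, here is a simplified geometric check in Python. It is a hypothetical sketch, not the analytical suction model used in Sim-Suction: it assumes the surface normal at the contact point and a ring of surface heights under the cup rim are already known, and the 20-degree and 2 mm thresholds are illustrative values only.

```python
import math
from typing import Sequence

Vector = tuple[float, float, float]


def angle_between_deg(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle between two 3D vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp before acos to guard against tiny floating-point overshoot.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def seal_is_plausible(
    surface_normal: Vector,
    approach: Vector,
    rim_heights: Sequence[float],
    max_angle_deg: float = 20.0,       # hypothetical tolerance
    max_height_spread: float = 0.002,  # hypothetical tolerance, metres
) -> bool:
    """Hypothetical two-part suction-seal check.

    surface_normal: outward normal of the object surface at the contact point.
    approach: direction in which the cup moves toward the object.
    rim_heights: surface heights (metres) sampled around the cup rim along the
        approach axis; a small spread means the rim can sit flush.
    """
    # Condition 1: the cup should travel roughly against the outward normal.
    inward = tuple(-c for c in surface_normal)
    well_aligned = angle_between_deg(inward, approach) <= max_angle_deg
    # Condition 2: the patch under the rim should be close to flat.
    flat_enough = max(rim_heights) - min(rim_heights) <= max_height_spread
    return well_aligned and flat_enough


# Flat box top, cup coming straight down: the check passes.
print(seal_is_plausible((0, 0, 1), (0, 0, -1), [0.100, 0.1005, 0.1002, 0.0999]))  # True
# Same surface, cup tilted 45 degrees: rejected by the angle test.
print(seal_is_plausible((0, 0, 1), (1, 0, -1), [0.100, 0.1005, 0.1002, 0.0999]))  # False
```

A real system would estimate the normal and rim heights from a point cloud and would also model factors this toy ignores, such as surface porosity and object weight.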

Li wanted the robot to face a deliberately difficult setting, so he generated virtual scenes in which 20 objects at a time were chosen at random: an apple, a coffee mug, a cardboard box, or an irregularly shaped 3D-printed object, for example. The objects were then dropped at random to form a cluttered pile. The robot’s virtual cameras captured the scene from multiple viewpoints, building a virtual point cloud of each object. Finally, the virtual suction-cup robot attempted to grasp each item at random locations and orientations. A physics simulation determined whether each grasp succeeded, and those outcomes let the model begin to “learn” what works and what doesn’t.
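The core of that data-generation loop, trying random grasps and labeling each one by its simulated outcome, can be sketched in a few lines of Python. This is a toy illustration, not the Sim-Suction-API code: the object names, the sampling ranges, and the `simulate_grasp` stand-in (which simply accepts near-perpendicular approaches instead of running a physics engine) are all hypothetical, each grasp is reduced to a single tilt angle, and the dropping and point-cloud steps are omitted.

```python
import random
from dataclasses import dataclass

# Hypothetical catalog; the real dataset uses 3D object models, not names.
OBJECT_CATALOG = ["apple", "coffee_mug", "cardboard_box", "printed_part", "banana", "remote"]


@dataclass
class GraspSample:
    object_name: str
    tilt_deg: float  # angle between the approach and the surface normal
    success: bool    # label stored in the dataset


def simulate_grasp(tilt_deg: float) -> bool:
    """Stand-in for a physics rollout: accept near-perpendicular approaches only."""
    return tilt_deg < 25.0


def generate_scene(
    rng: random.Random, objects_per_scene: int = 20, grasps_per_object: int = 50
) -> list[GraspSample]:
    """Pick objects at random, try random grasps on each, and label the outcomes."""
    # Sampling with replacement, so a small catalog can still fill a 20-object scene.
    chosen = rng.choices(OBJECT_CATALOG, k=objects_per_scene)
    samples = []
    for name in chosen:
        for _ in range(grasps_per_object):
            tilt = rng.uniform(0.0, 90.0)  # random grasp orientation
            samples.append(GraspSample(name, tilt, simulate_grasp(tilt)))
    return samples


scene = generate_scene(random.Random(42))
success_rate = sum(s.success for s in scene) / len(scene)
print(f"{len(scene)} labeled grasps, {success_rate:.0%} successful")
```

Repeating this over many scenes yields a labeled dataset; a learning model is then trained to predict the success label from what the cameras see, rather than from the tilt angle handed to it here.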

Both components have been released open-source on GitHub: the synthetic dataset of 500 cluttered virtual environments with 3.2 million annotated suction grasp poses, and the machine learning model that learns how best to grip objects through suction.

“We are very proud of this dataset,” Li said. “It’s the only one available with six degrees of freedom, analytical models, physics simulation, multiple gripper sizes, and dynamics evaluation. We want the robotics community to be able to use this dataset to facilitate many different applications.”

But they weren’t done. To test their machine learning model, they conducted an experiment with an actual suction cup robot equipped with depth-sensing cameras. They started with a plastic tub of 20 simple objects: cubes, spheres, and cylinders. The robot successfully identified and grabbed individual items 97% of the time. A second test involved 40 more complex objects: bananas, remote controls, and irregular 3D-printed parts. That test’s success rate was 94%. Finally, they mixed the two together in a completely full tub of 60 items, which resulted in a 92% success rate.

“This is a great result,” Li said. “We would give the robot a text command to find one specific object, like an apple. It would move other objects out of the way until it could grasp the apple using an ideal orientation. Or we would tell the robot to move all the objects in the tub; it would determine which item has the most successful orientation, and it would move that one first, followed by the next easiest, and so on until all the objects were moved.”
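The picking order Li describes can be read as a greedy loop: grasp whichever object currently scores highest, then re-score the rest, since removing one object changes what is reachable. The Python sketch below illustrates that idea under stated assumptions and is not the published policy: each object is reduced to a single hypothetical "best grasp score," `rescore` is a caller-supplied stand-in for re-running perception on the new scene, and the 0.8 threshold in `fetch_target` is an arbitrary illustrative value.

```python
from typing import Callable

# Hypothetical scene summary: object label -> best predicted grasp score (0 to 1).
Scene = dict[str, float]
Rescorer = Callable[[Scene], Scene]


def clear_scene(scene: Scene, rescore: Rescorer) -> list[str]:
    """Pick the easiest object first, re-score the rest, and repeat until empty."""
    order: list[str] = []
    remaining = dict(scene)
    while remaining:
        best = max(remaining, key=lambda label: remaining[label])
        order.append(best)
        del remaining[best]
        # Removing an object can expose or block others, so scores are refreshed.
        remaining = rescore(remaining)
    return order


def fetch_target(target: str, scene: Scene, rescore: Rescorer, good_enough: float = 0.8) -> list[str]:
    """Move the easiest obstacles aside until the target itself is easy to grasp."""
    order: list[str] = []
    remaining = dict(scene)
    while remaining[target] < good_enough and len(remaining) > 1:
        obstacle = max((k for k in remaining if k != target), key=lambda k: remaining[k])
        order.append(obstacle)
        del remaining[obstacle]
        remaining = rescore(remaining)
    order.append(target)
    return order


# Toy rescorer: every removal makes each remaining object a little easier to grasp.
def easier(s: Scene) -> Scene:
    return {k: min(1.0, v + 0.15) for k, v in s.items()}


tub = {"apple": 0.55, "mug": 0.9, "box": 0.7, "banana": 0.4}
print(fetch_target("apple", tub, easier))  # ['mug', 'box', 'apple']
print(clear_scene(tub, easier))            # ['mug', 'box', 'apple', 'banana']
```

Re-scoring after every pick is the important design choice: a fixed ranking computed once would go stale as soon as the first object leaves the tub.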

Cappelleri initiated this project as part of the Resilient Extra-Terrestrial Habitats Institute (RETHi), a collaboration between NASA and Purdue University. RETHi’s goal is to design space habitats that are suited to humans yet resilient to both known and unknown threats, and capable of learning about and diagnosing problems autonomously.

So where do suction cup robots fit into that vision?

“When we go back to the Moon or Mars, we know that astronauts will not always be there to occupy the habitats,” Cappelleri said. “Robots need to be able to set everything up for them. They need to do maintenance and repair tasks. And they need to be able to do this autonomously, as well as in collaboration with humans. But we also envision these robots helping with the science experiments: sorting moon rocks, or performing other tasks that are too dangerous for humans.”

To accomplish this, Li and Cappelleri are already working on next steps. “We want to extend this functionality to other grippers and end-effectors,” Li said. “And then we want to empower the robot to automatically determine which end-effector to use. So if it sees an apple, it knows which gripper will work best, and automatically swap out a different end-effector. Then it will autonomously grasp and manipulate the item based on our machine learning model.”

“The examples we use are a muffin and a flat plate of aluminum,” Cappelleri said. “The best way to move a flat plate of aluminum is with a suction cup. But something delicate like a muffin requires a two-fingered soft gripper. Soon our robots will be smart enough to recognize these two different objects, even if they’re in a cluttered environment, and know exactly how to move each of them. This is essential for operating future habitats in space.”
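Until a learned selector exists, the muffin-versus-plate distinction can be illustrated with a simple rule. The Python sketch below is a hypothetical stand-in, not the researchers’ planned machine learning approach: the trait names are assumptions, and the rule encodes only the reasoning in the quote plus the well-known fact that porous surfaces leak air and so defeat a suction seal.

```python
from dataclasses import dataclass
from enum import Enum


class EndEffector(Enum):
    SUCTION_CUP = "suction cup"
    SOFT_TWO_FINGER = "two-fingered soft gripper"


@dataclass
class ObjectTraits:
    # Hypothetical properties that a perception model would have to estimate.
    name: str
    has_flat_face: bool
    is_porous: bool
    is_fragile: bool


def choose_end_effector(obj: ObjectTraits) -> EndEffector:
    """Rule-of-thumb selector: suction only where a vacuum seal is likely to hold."""
    # A suction cup needs a flat, airtight face; fragile items go to the soft gripper.
    if obj.has_flat_face and not obj.is_porous and not obj.is_fragile:
        return EndEffector.SUCTION_CUP
    return EndEffector.SOFT_TWO_FINGER


plate = ObjectTraits("aluminum plate", has_flat_face=True, is_porous=False, is_fragile=False)
muffin = ObjectTraits("muffin", has_flat_face=False, is_porous=True, is_fragile=True)
for item in (plate, muffin):
    print(f"{item.name}: {choose_end_effector(item).value}")
# aluminum plate: suction cup
# muffin: two-fingered soft gripper
```

A learned selector would replace the hand-written rule with a model that scores each available end-effector on the observed object, but the inputs and output would play the same roles.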

Writer: Jared Pike, jaredpike@purdue.edu, 765-496-0374

Source: David Cappelleri, dcappell@purdue.edu

A portion of this work was supported by a Space Technology Research Institutes grant (#80NSSC19K1076) from NASA’s Space Technology Research Grants Program.

Sim-Suction: Learning a Suction Grasp Policy for Cluttered Environments Using a Synthetic Benchmark

Juncheng Li, David J. Cappelleri

https://doi.org/10.1109/TRO.2023.3331679

ABSTRACT: This paper presents Sim-Suction, a robust object-aware suction grasp policy for mobile manipulation platforms with dynamic camera viewpoints, designed to pick up unknown objects from cluttered environments. Suction grasp policies typically employ data-driven approaches, necessitating large-scale, accurately-annotated suction grasp datasets. However, the generation of suction grasp datasets in cluttered environments remains underexplored, leaving uncertainties about the relationship between the object of interest and its surroundings. To address this, we propose a benchmark synthetic dataset, Sim-Suction-Dataset, comprising 500 cluttered environments with 3.2 million annotated suction grasp poses. The efficient Sim-Suction-Dataset generation process provides novel insights by combining analytical models with dynamic physical simulations to create fast and accurate suction grasp pose annotations. We introduce Sim-Suction-Pointnet to generate robust 6D suction grasp poses by learning point-wise affordances from the Sim-Suction-Dataset, leveraging the synergy of zero-shot text-to-segmentation. Real-world experiments for picking up all objects demonstrate that Sim-Suction-Pointnet achieves success rates of 96.76%, 94.23%, and 92.39% on cluttered level 1 objects (prismatic shape), cluttered level 2 objects (more complex geometry), and cluttered mixed objects, respectively. The codebase can be accessed at https://github.com/junchengli1/Sim-Suction-API