Autonomous corn robot monitors and samples crops that humans can't reach

Imagine you’re a farmer who has to monitor miles of corn rows. Not only is the work repetitive and time-consuming, but the rows are also too narrow to walk through. Perfect job for an autonomous robot! Purdue University researchers have created a small autonomous robot that helps farmers monitor crops and regularly collect physical samples, saving them time and effort.

Internet-of-Things for Precision Agriculture (IoT4Ag), a National Science Foundation (NSF) engineering research center, was launched in 2020 with five participating universities: Purdue University, University of Pennsylvania, University of California Merced, University of Florida, and Arizona State University. Purdue’s Agronomy Center for Research and Education (ACRE) is one of three real-world testbeds and the only one that focuses on row crops such as corn and sorghum. Aarya Deb, a PhD student in mechanical engineering at Purdue, said, “IoT4Ag’s mission is to utilize internet-of-things technologies to digitize the agriculture industry in order to meet the growing demand for food, energy, and water security as the population grows.”

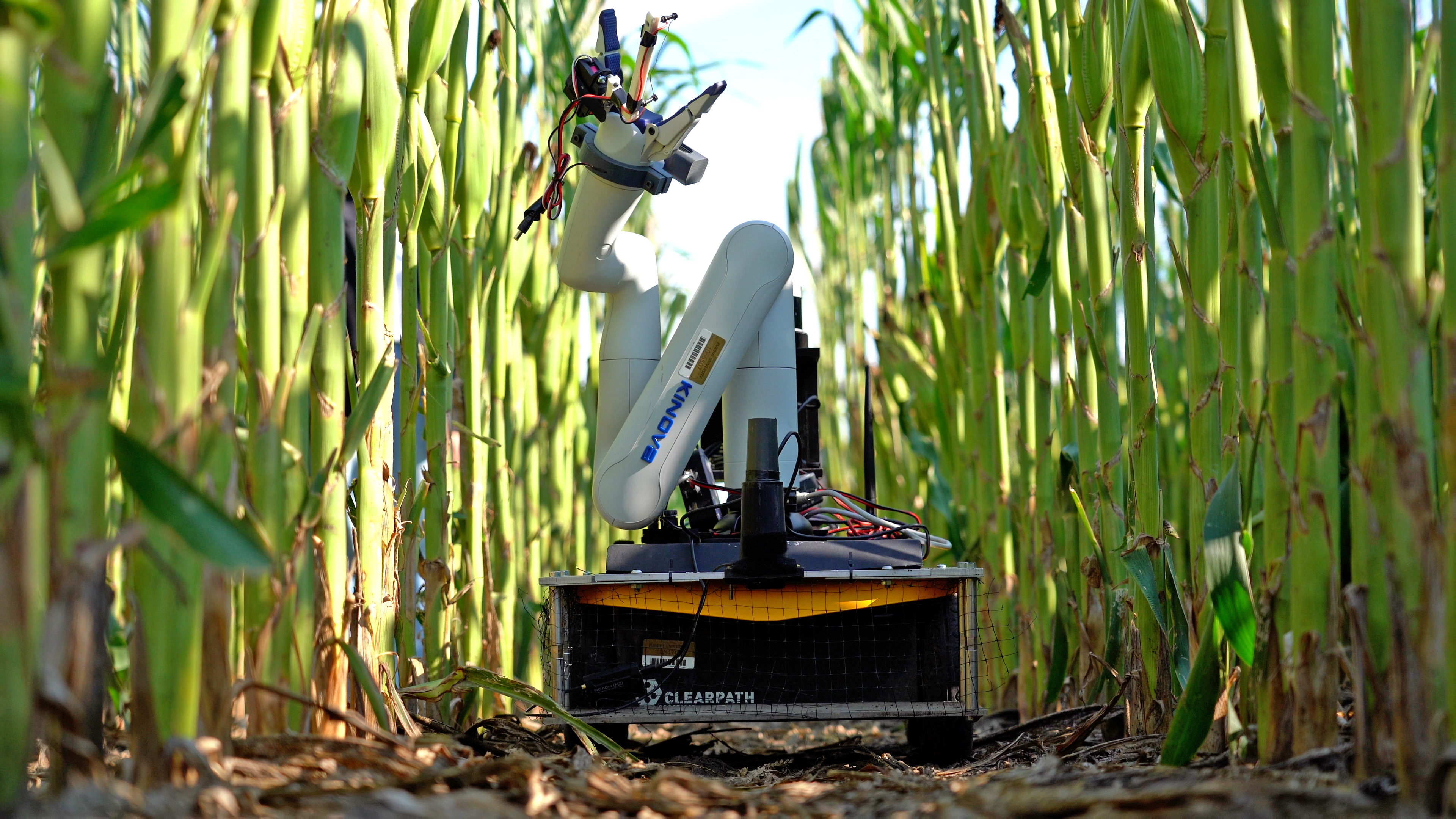

Deb works alongside Kitae Kim, another PhD student in mechanical engineering, at Purdue's Herrick Labs. They both work under the supervision of David Cappelleri, professor of mechanical engineering and assistant vice president for research innovation. Together, they’ve developed an agricultural robot dubbed the Purdue AgBot (P-AgBot) that can be used for in-row and under-canopy crop monitoring and physical sampling.

“When designing the robot, we had to account for many challenges,” said Kim. “There is limited space between corn rows, and the robot also navigates under the canopy, where GPS signals no longer work.” The team overcame these challenges by using 2D light detection and ranging (LiDAR), a remote sensing method that emits pulsed laser light and times its reflections to measure distances to surrounding surfaces. This allows the robot both to navigate through the rows and to determine its location relative to other objects in the field.
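To make the LiDAR idea concrete, here is a minimal sketch of two steps a row-following robot could perform with a 2D scan: turning a pulse's round-trip time into a range (distance equals the speed of light times the time, halved), and fitting a straight line to the returns from each crop row to estimate how far the robot sits from the row centerline. The function names (`tof_range`, `row_center_offset`) and the straight-row, least-squares approach are illustrative assumptions, not a description of P-AgBot's actual navigation code.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def tof_range(round_trip_s: float) -> float:
    """Range from a pulsed-laser time of flight: the pulse travels out and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def scan_to_points(scan):
    """Convert (angle_rad, range_m) pairs into (x, y): x forward, y to the robot's left."""
    return [(r * math.cos(a), r * math.sin(a)) for a, r in scan]

def _fit_line(points):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    slope = sxy / sxx
    return slope, my - slope * mx

def row_center_offset(scan, max_range=3.0):
    """Hypothetical row-following helper returning (lateral_offset_m, heading_error_rad).

    Assumes straight rows on either side of the robot. A positive offset means
    the row centerline lies to the robot's left.
    """
    pts = [p for p in scan_to_points(scan) if p[0] > 0 and math.hypot(*p) <= max_range]
    left = [p for p in pts if p[1] > 0]
    right = [p for p in pts if p[1] < 0]
    if len(left) < 2 or len(right) < 2:
        raise ValueError("need returns from both crop rows")
    slope_l, icpt_l = _fit_line(left)
    slope_r, icpt_r = _fit_line(right)
    # The centerline lies midway between the two fitted row lines (evaluated at x = 0).
    offset = (icpt_l + icpt_r) / 2.0
    heading = math.atan((slope_l + slope_r) / 2.0)
    return offset, heading
```

A controller would steer to drive both values toward zero. Real cornfields add leaves, gaps, and weeds, so a practical system would need outlier rejection on top of this.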

The team then created a simultaneous localization and mapping (SLAM) framework called P-AgSLAM for robot pose estimation and agricultural monitoring in cornfields. It is primarily based on a 3D LiDAR sensor and is designed to extract the unique morphological features of cornfields, which differ significantly from structured indoor and outdoor urban environments. Cornfields are cluttered, full of repetitive patterns, and often have rugged, slippery terrain; P-AgSLAM is designed to remain robust under these conditions.
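As a rough illustration of what a cornfield-specific "morphological feature" can look like, the sketch below bins a 3D point cloud into a horizontal grid and keeps cells whose points span a large vertical range, a simple cue for upright stalks as opposed to flat ground. The function `stalk_candidates` and its thresholds (`cell`, `min_points`, `min_height`) are hypothetical; P-AgSLAM's actual feature extraction is not described in this article and is likely more sophisticated.

```python
import math
from collections import defaultdict

def stalk_candidates(points, cell=0.1, min_points=5, min_height=0.5):
    """Hypothetical heuristic for finding vertical, stalk-like structures.

    points: iterable of (x, y, z) in metres with z pointing up.
    Returns sorted (x, y) centroids of grid cells that contain at least
    `min_points` returns spanning at least `min_height` vertically.
    """
    cells = defaultdict(list)
    for x, y, z in points:
        # Group points by the horizontal grid cell they fall into.
        cells[(math.floor(x / cell), math.floor(y / cell))].append((x, y, z))
    found = []
    for pts in cells.values():
        zs = [p[2] for p in pts]
        if len(pts) >= min_points and max(zs) - min(zs) >= min_height:
            cx = sum(p[0] for p in pts) / len(pts)
            cy = sum(p[1] for p in pts) / len(pts)
            found.append((cx, cy))
    return sorted(found)
```

Stalk positions found this way form a sparse set of landmarks that could, in principle, be matched between scans, which is one reason row-crop SLAM systems favor structure-specific features over generic ones.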

The P-AgSLAM framework is built on an upgraded version of the P-AgBot platform, which the team configured with two LiDARs mounted perpendicular to each other to broaden its sensing field of view. P-AgSLAM estimates the robot's state and generates a 3D point cloud map while P-AgBot navigates under the canopy. When the robot reaches areas with reliable GPS reception, the system also optimizes its global poses. Combining local and global pose estimation in this way reduces the drift that accumulates in challenging agricultural environments.
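Why occasional GPS fixes curb drift can be shown with a one-dimensional least-squares pose graph: LiDAR odometry supplies relative steps between consecutive poses, GPS supplies absolute positions for a few of them, and the solver finds positions that best satisfy both. The sketch below is a simplified stand-in under stated assumptions (motion along a single row, scalar weights `w_odom` and `w_gps`, a hypothetical function name); the article does not say how P-AgSLAM formulates its global optimization.

```python
def fuse_odometry_with_gps(odom_steps, gps_fixes, w_odom=1.0, w_gps=100.0):
    """Hypothetical 1D pose-graph smoother.

    odom_steps: relative displacements between consecutive poses (n - 1 values).
    gps_fixes:  dict {pose_index: absolute_position} for poses with usable GPS.
    Minimises sum w_odom*(x[i+1]-x[i]-u_i)^2 + sum w_gps*(x[k]-z_k)^2.
    """
    if not gps_fixes:
        raise ValueError("need at least one GPS fix to anchor the trajectory")
    n = len(odom_steps) + 1
    diag = [0.0] * n
    off = [0.0] * (n - 1)  # H[i][i+1] == H[i+1][i]: the system is symmetric tridiagonal
    rhs = [0.0] * n
    for i, u in enumerate(odom_steps):  # relative (odometry) constraints
        diag[i] += w_odom
        diag[i + 1] += w_odom
        off[i] -= w_odom
        rhs[i] -= w_odom * u
        rhs[i + 1] += w_odom * u
    for k, z in gps_fixes.items():  # absolute (GPS) constraints
        diag[k] += w_gps
        rhs[k] += w_gps * z
    # Thomas algorithm: forward elimination ...
    c = [0.0] * (n - 1)
    d = [0.0] * n
    for i in range(n):
        a = off[i - 1] if i > 0 else 0.0
        m = diag[i] - a * (c[i - 1] if i > 0 else 0.0)
        if i < n - 1:
            c[i] = off[i] / m
        d[i] = (rhs[i] - a * (d[i - 1] if i > 0 else 0.0)) / m
    # ... then back substitution.
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x
```

For example, if odometry over-reports each 1 m step as 1.05 m, dead reckoning ends about 0.5 m off after ten steps, while anchoring the first and last poses with GPS pulls the whole trajectory back to within millimetres of the true positions. A full SLAM back end solves the same kind of problem in 2D or 3D with rotations, typically via a nonlinear solver.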

The other key component of P-AgBot is its deep learning-based approach to detecting and segmenting crop leaves for robotic physical sampling. Leaf sampling is important because leaves are good indicators of nutrient deficiencies, nitrogen use, and stress levels. P-AgBot uses 3D LiDAR to capture entire plants at various heights for mapping and for analyzing morphological measurements. It also carries an RGB-D (depth-sensing) camera that detects crop leaves in real time and extracts their positions. After a leaf is detected, the robotic arm reaches up, grasps it, and cuts the sample off with a nichrome wire end-effector. A second camera, mounted on the end-effector link of the arm, provides vision-based guidance by detecting the position of and distance to the leaf. A sample counts as successful only if it is cut close to the stalk, which maximizes the sampled leaf area.
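Extracting a leaf's position from an RGB-D camera usually comes down to back-projecting the pixels of its segmentation mask through the pinhole camera model: a pixel (u, v) at depth Z maps to X = (u − cx)·Z/fx and Y = (v − cy)·Z/fy, where fx, fy, cx, cy are the camera intrinsics. The sketch below averages those 3D points into a leaf centroid. The helper `leaf_position`, the callable depth lookup, and the intrinsic values in the usage example are assumptions for illustration only; the article does not describe P-AgBot's exact procedure.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with metric depth into the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def leaf_position(mask_pixels, depth, intrinsics):
    """Hypothetical helper: 3D centroid of a detected leaf.

    mask_pixels: iterable of (u, v) pixels inside the leaf's segmentation mask.
    depth:       callable depth(u, v) -> metres (0 or None where depth is missing).
    intrinsics:  (fx, fy, cx, cy) of the depth-aligned color camera.
    """
    pts = []
    for u, v in mask_pixels:
        z = depth(u, v)
        if z:  # skip holes in the depth image
            pts.append(deproject(u, v, z, *intrinsics))
    if not pts:
        return None
    n = len(pts)
    return tuple(sum(p[i] for p in pts) / n for i in range(3))
```

With made-up intrinsics (600, 600, 320, 240), a leaf whose mask is centered on pixel column 380 at 0.5 m depth lands about 5 cm to the right of the optical axis. The resulting point is in the camera frame and would still need the camera-to-arm transform before the arm can reach it.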

Cappelleri and his team developed a method for collecting a physical dataset of agricultural crops such as corn and sorghum during the growing season, augmenting and labeling the data, and then training machine-learning models on the results. “The issue with leaves on a corn stalk is that they don’t stick straight out; they grow at different angles,” said Deb. “So the goal here is to help the robot identify the leaf in a 3D space so it can grasp it better and get a more successful sample.”
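Because leaves grow at different angles, one simple way to reason about a leaf "in 3D space" is to find its dominant direction with principal component analysis, orient that axis away from the stalk, and choose the leaf point nearest the stalk along the axis as a cut location. This is a generic sketch, not the team's published leaf pose estimation algorithm. The function names are hypothetical, and it assumes the leaf's 3D points and an approximate stalk point are already known.

```python
import math

def principal_axis(points, iters=100):
    """Mean and dominant direction of a 3D point set (power iteration on the covariance)."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    centered = [[p[i] - mean[i] for i in range(3)] for p in points]
    cov = [[sum(q[r] * q[c] for q in centered) / n for c in range(3)] for r in range(3)]
    v = [1.0, 1.0, 1.0]
    for _ in range(iters):
        w = [sum(cov[r][c] * v[c] for c in range(3)) for r in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # degenerate cloud (all points identical)
            break
        v = [x / norm for x in w]
    return mean, v

def leaf_axis_and_cut_point(leaf_points, stalk_point):
    """Hypothetical sketch: orient the leaf axis away from the stalk and return
    (axis, cut_point), where cut_point is the leaf point closest to the stalk end."""
    mean, axis = principal_axis(leaf_points)
    outward = [mean[i] - stalk_point[i] for i in range(3)]
    if sum(a * o for a, o in zip(axis, outward)) < 0:
        axis = [-a for a in axis]  # make the axis point from stalk toward leaf tip

    def along(p):
        # Signed position of p along the leaf axis, relative to the leaf centroid.
        return sum((p[i] - mean[i]) * axis[i] for i in range(3))

    return axis, min(leaf_points, key=along)
```

Cutting at the stalk end of the principal axis is one way to act on the article's success criterion (cut close to the stalk to maximize sample area); a real system would also have to account for leaf curvature and occlusion.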

In the future, they hope to deploy more robots in the field and design a multi-robot system in which the robots communicate with one another. They hope farmers will use the robot as an assistant, making routine tasks such as collecting samples and measuring crop heights more efficient and hassle-free.

Source: Aarya Deb, deb8@purdue.edu, Kitae Kim, kim3686@purdue.edu

Writer: Julia Davis, juliadavis@purdue.edu

P-AgSLAM: In-Row and Under-Canopy SLAM for Agricultural Monitoring in Cornfields

Kitae Kim, Aarya Deb, and David J. Cappelleri

https://doi.org/10.1109/LRA.2024.3386466

ABSTRACT: In this letter, we present an in-row and under-canopy Simultaneous Localization and Mapping (SLAM) framework called the Purdue AgSLAM or P-AgSLAM which is designed for robot pose estimation and agricultural monitoring in cornfields. Our SLAM approach is primarily based on a 3D light detection and ranging (LiDAR) sensor and it is designed for the extraction of unique morphological features of cornfields which have significantly different characteristics from structured indoor and outdoor urban environments. The performance of the proposed approach has been validated with experiments in simulation and in real cornfield environments. P-AgSLAM outperforms existing state-of-the-art LiDAR-based state estimators in robot pose estimations and mapping.
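Claims that one state estimator outperforms another in pose estimation are commonly backed by absolute trajectory error (ATE), the root-mean-square distance between estimated and ground-truth positions. The abstract does not state which metric the paper uses, so the sketch below only illustrates the general idea. It assumes the two trajectories are already time-associated and expressed in the same frame; standard evaluation tools first align them, a step omitted here.

```python
import math

def ate_rmse(estimated, ground_truth):
    """Absolute trajectory error as the RMSE of per-pose position differences.

    Both arguments are equal-length lists of (x, y, z) positions that are
    already time-associated and in the same frame (no alignment is performed).
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    sq = [sum((e - g) ** 2 for e, g in zip(pe, pg))
          for pe, pg in zip(estimated, ground_truth)]
    return math.sqrt(sum(sq) / len(sq))
```

Lower is better: an estimator whose positions are each 0.5 m off has an ATE of 0.5 m.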

Deep Learning-based Leaf Detection for Robotic Physical Sampling with P-AgBot

Aarya Deb, Kitae Kim, and David J. Cappelleri

https://doi.org/10.1109/IROS55552.2023.10341516

ABSTRACT: Automating leaf detection and physical leaf sample collection using Internet of Things (IoT) technologies is a crucial task in precision agriculture. In this paper, we present a deep learning-based approach for detecting and segmenting crop leaves for robotic physical sampling. We discuss a method for generating a physical dataset of agricultural crops. Our proposed pipeline incorporates using an RGB-D camera for dataset collection, fusing the depth frame along with RGB images to train Mask R-CNN and YOLOv5 models. We also propose our novel leaf pose estimating algorithm for physical sampling and maximizing leaf sample area while using a robotic arm integrated into the P-AgBot platform. The proposed approach has been experimentally validated on corn and sorghum, in both indoor and outdoor environments. Our method has achieved a best-case detection rate of 90.6%, a 9% smaller error compared to our previous method, and approximately 80% smaller error compared to other state-of-the-art methods in estimating the leaf position.
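Segmentation models such as Mask R-CNN are usually scored by matching predicted masks to ground-truth masks via intersection over union (IoU). The sketch below computes a detection rate as the fraction of ground-truth leaves matched one-to-one by a prediction with IoU of at least 0.5. Both the 0.5 threshold and the greedy matching are assumptions: the abstract reports a best-case detection rate of 90.6% but does not define it, so this is not necessarily how that figure was computed.

```python
def mask_iou(a, b):
    """Intersection over union of two masks given as sets of (u, v) pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0

def detection_rate(predicted, ground_truth, iou_thresh=0.5):
    """Fraction of ground-truth masks matched by a distinct prediction.

    Hypothetical metric: greedy one-to-one matching, where each ground-truth
    mask takes the unused prediction with the highest IoU >= iou_thresh.
    """
    unused = list(predicted)
    hits = 0
    for gt in ground_truth:
        best, best_iou = None, iou_thresh
        for i, pred in enumerate(unused):
            iou = mask_iou(pred, gt)
            if iou >= best_iou:
                best, best_iou = i, iou
        if best is not None:
            unused.pop(best)  # a prediction may match only one ground-truth leaf
            hits += 1
    return hits / len(ground_truth) if ground_truth else 0.0
```

For instance, if one leaf's mask is predicted with 80% overlap and another with only 30%, the detection rate at the 0.5 threshold is 50%.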