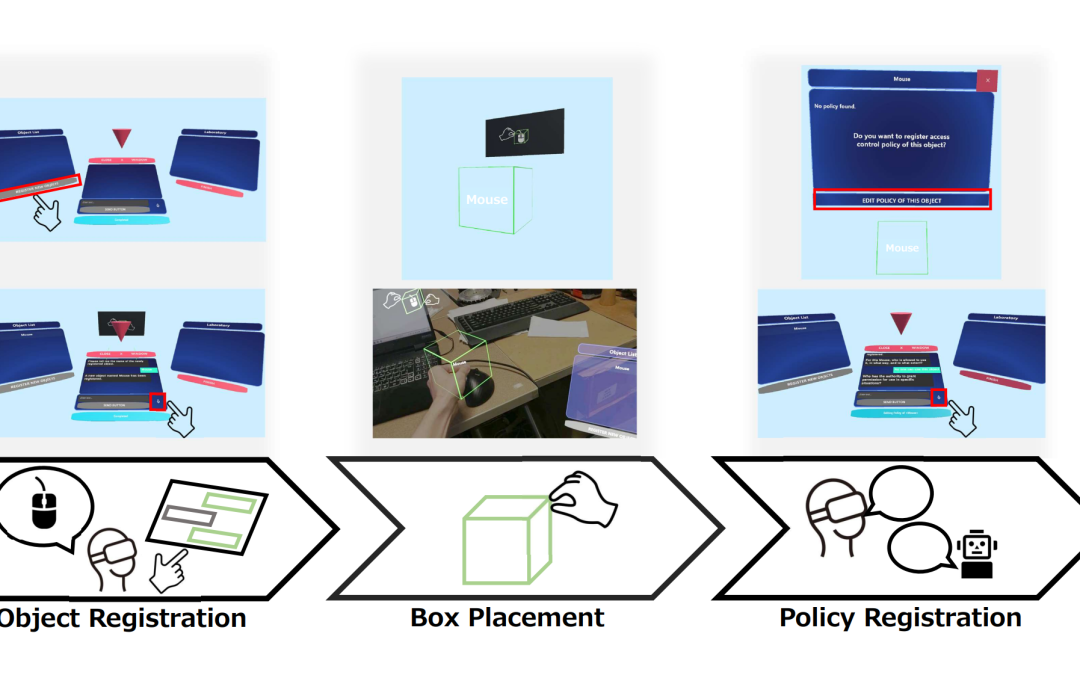

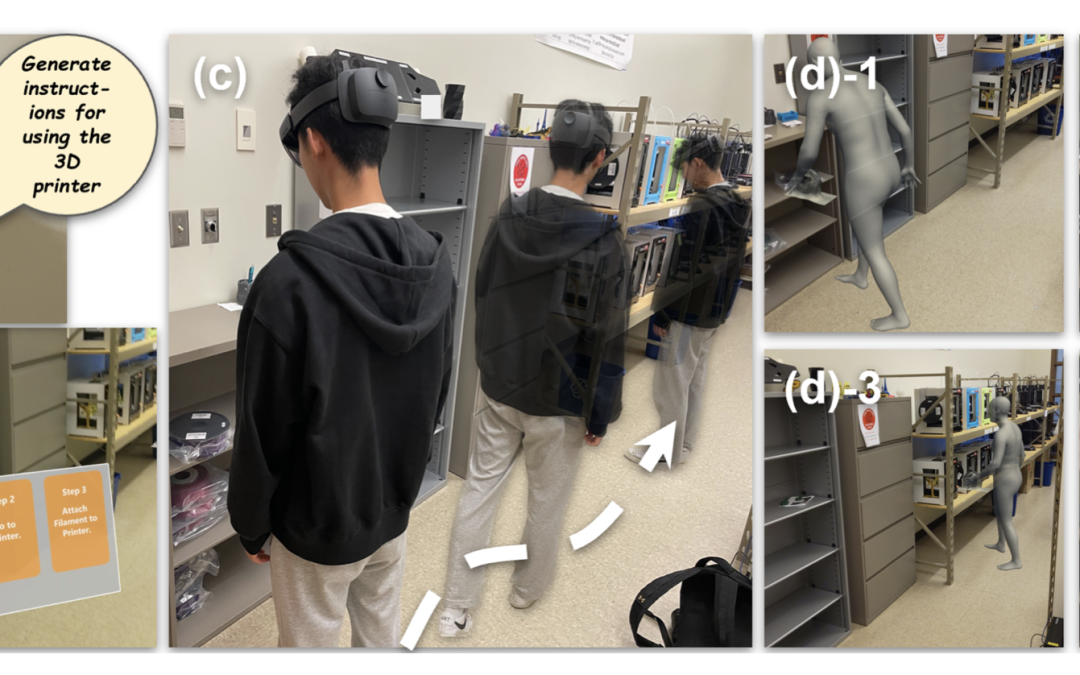

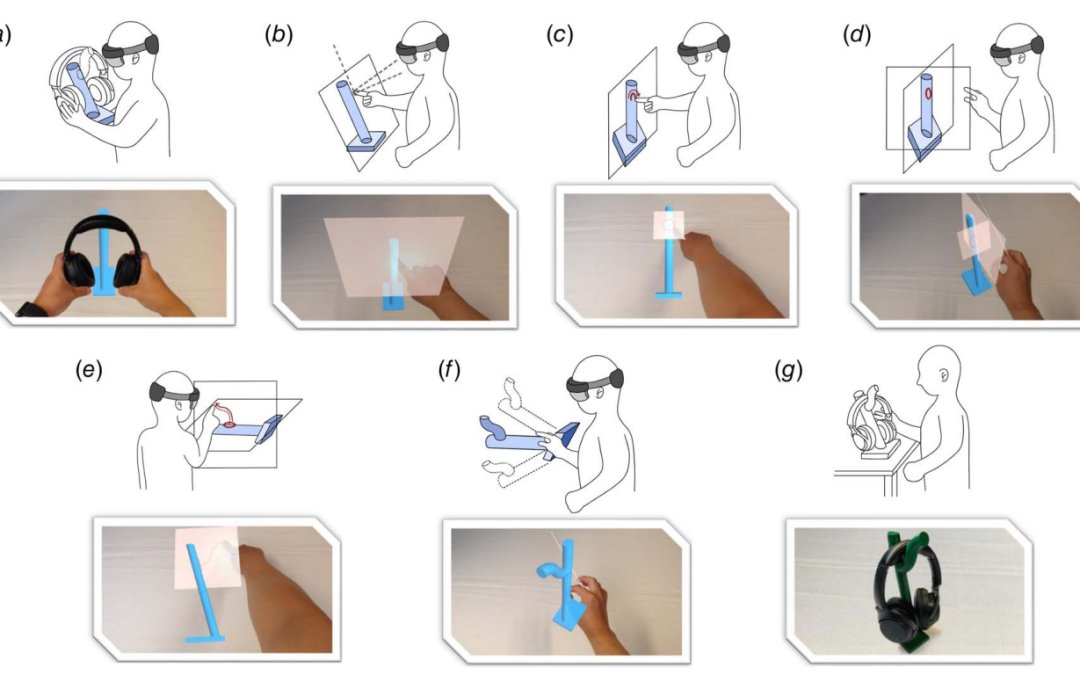

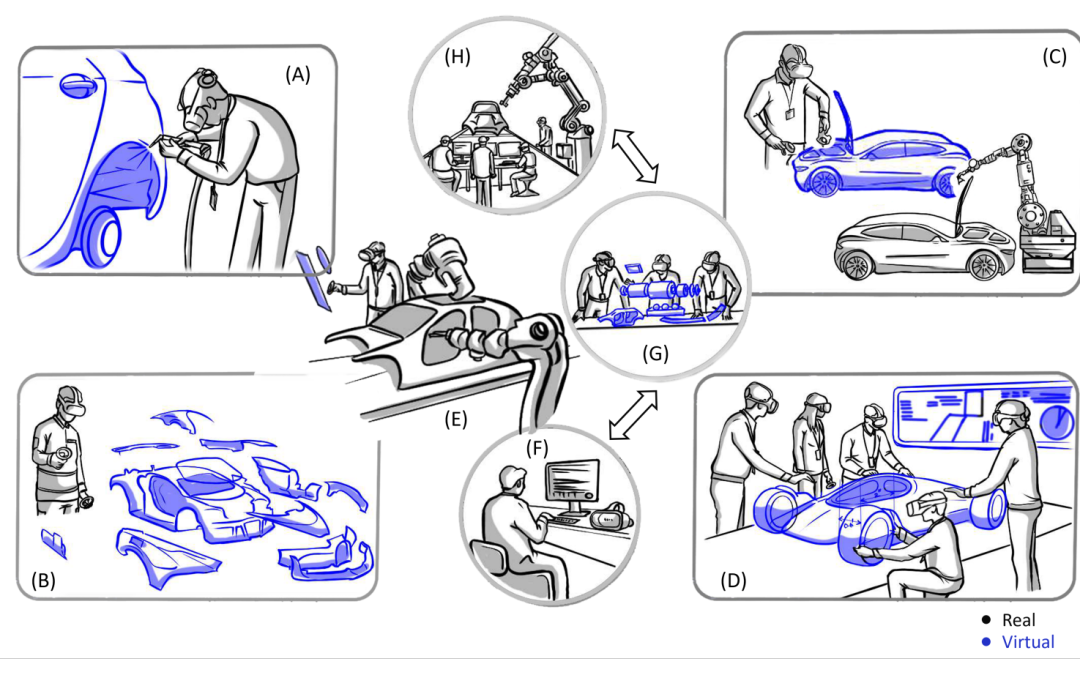

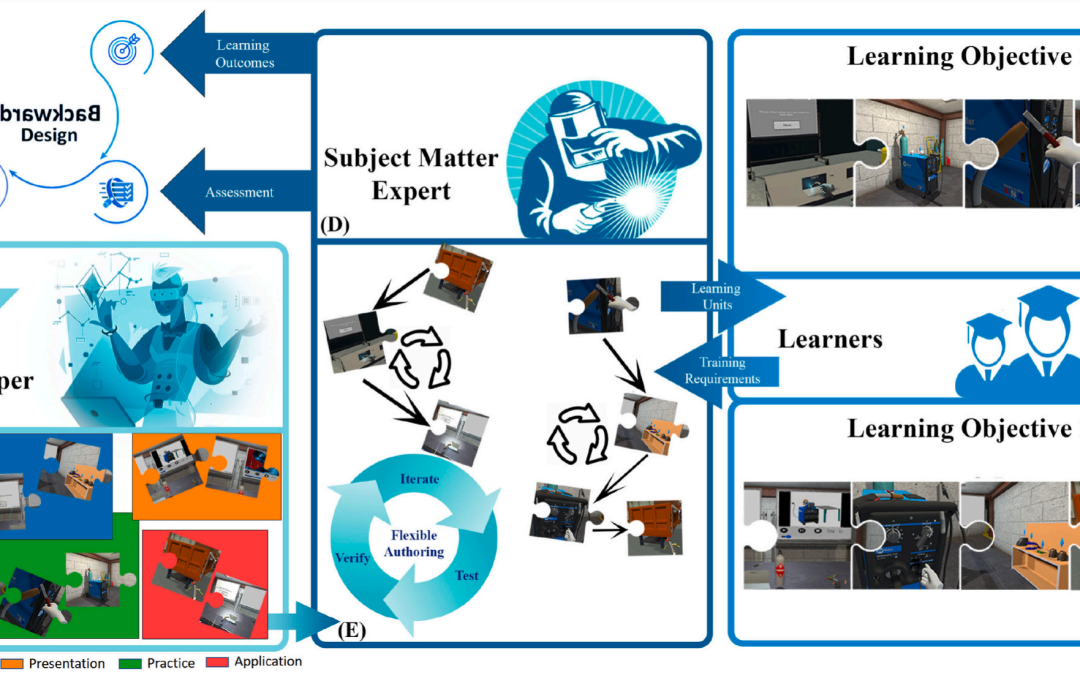

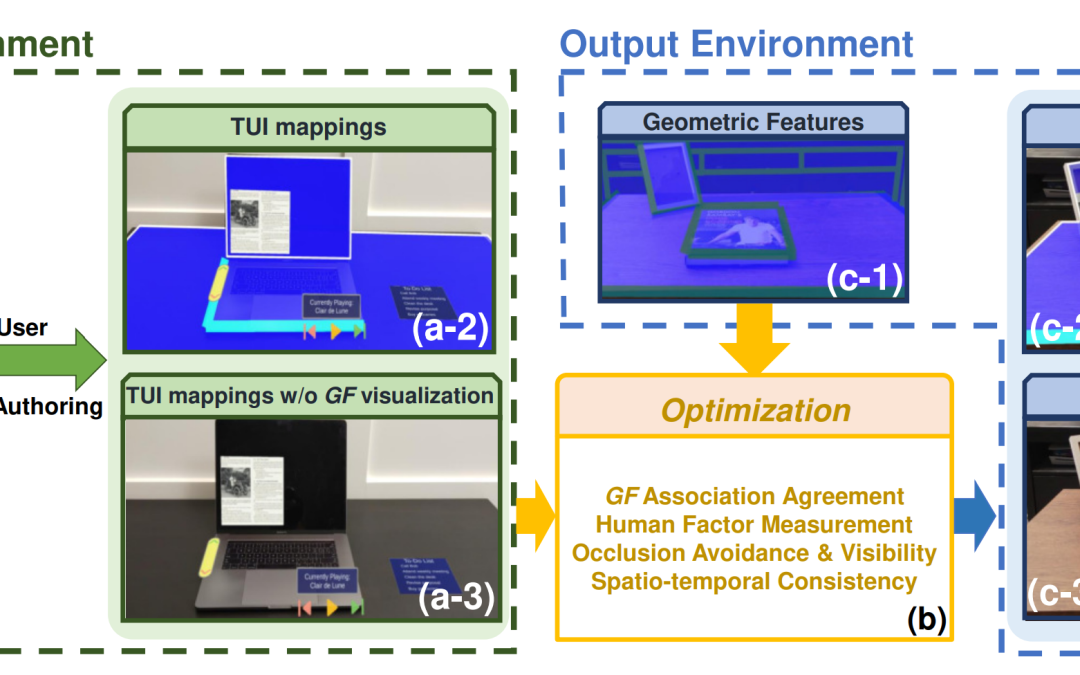

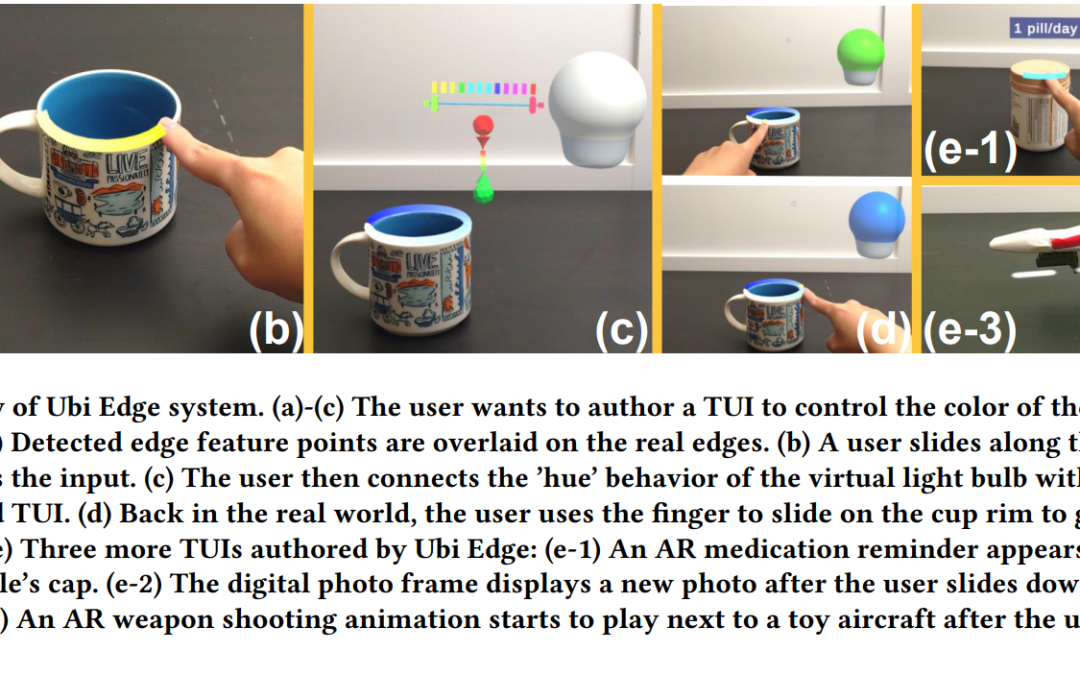

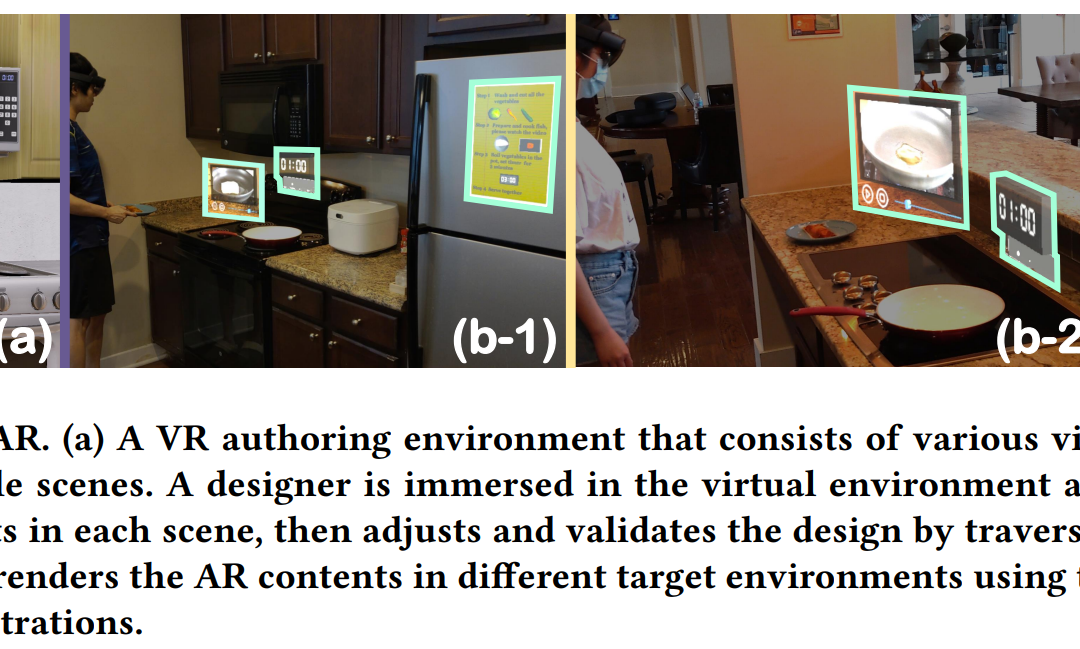

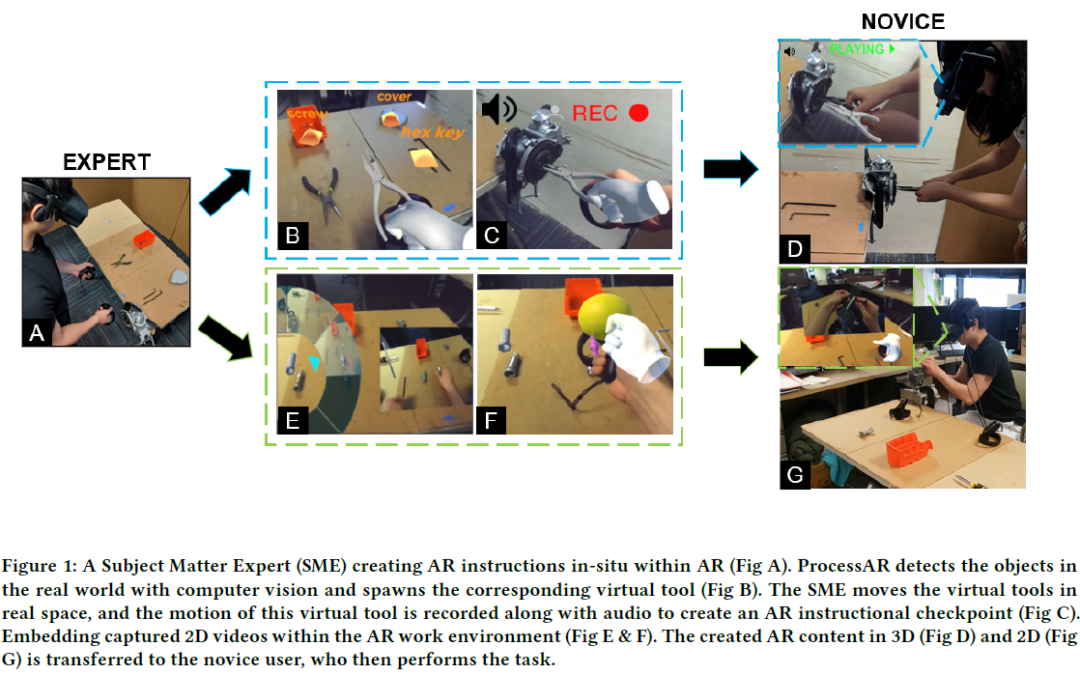

Creating Augmented Reality (AR) applications requires expertise in both design and implementation, posing significant barriers to entry for non-expert users. While existing methods reduce some of this burden, they often fall short in flexibility or...