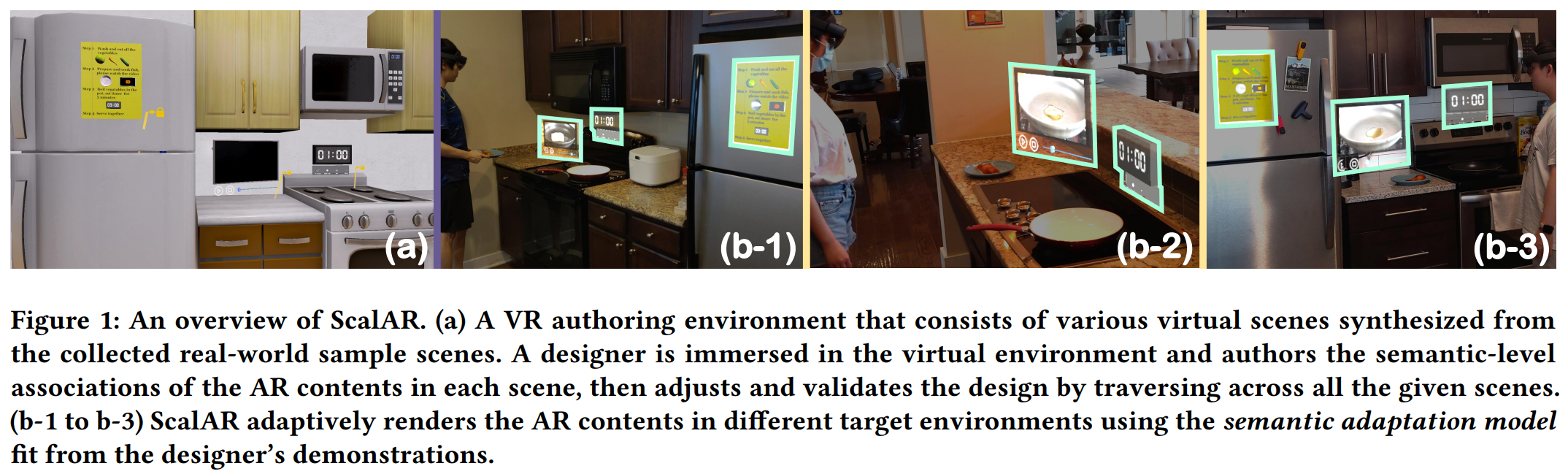

Augmented Reality (AR) experiences tightly associate virtual content with environmental entities. However, differences between environments limit how well AR content can adapt its behavior under large-scale deployment. We propose ScalAR, an integrated workflow that enables designers to author semantically adaptive AR experiences in Virtual Reality (VR). First, potential AR consumers collect local scenes with a semantic understanding technique. ScalAR then synthesizes numerous similar scenes. In VR, a designer authors the AR content’s semantic associations and validates the design while immersed in the provided scenes. We adopt a decision-tree-based algorithm to fit the designer’s demonstrations as a semantic adaptation model, which is used to deploy the authored AR experience in a physical scene. We further showcase two application scenarios authored with ScalAR and conduct a two-session user study, in which the quantitative results demonstrate the accuracy of the AR content rendering and the qualitative results show the usability of ScalAR.

ScalAR: Authoring Semantically Adaptive Augmented Reality Experiences in Virtual Reality

Authors: Xun Qian, Fengming He, Xiyun Hu, Tianyi Wang, Ananya Ipsita, and Karthik Ramani

In the Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems

https://doi.org/10.1145/3491102.3517665

Xun Qian

Xun Qian has been a Ph.D. student in the School of Mechanical Engineering at Purdue University since Fall 2018. Before joining the C Design Lab, he received his Master's degree in Mechanical Engineering from Cornell University and his Bachelor's degree in Mechanical Engineering from the University of Science and Technology Beijing. His current research interests lie in the development of novel human-computer interactions leveraging AR/VR/MR, Deep Learning, and Cloud Computing. For more details, please visit his personal website at xun-qian.com.