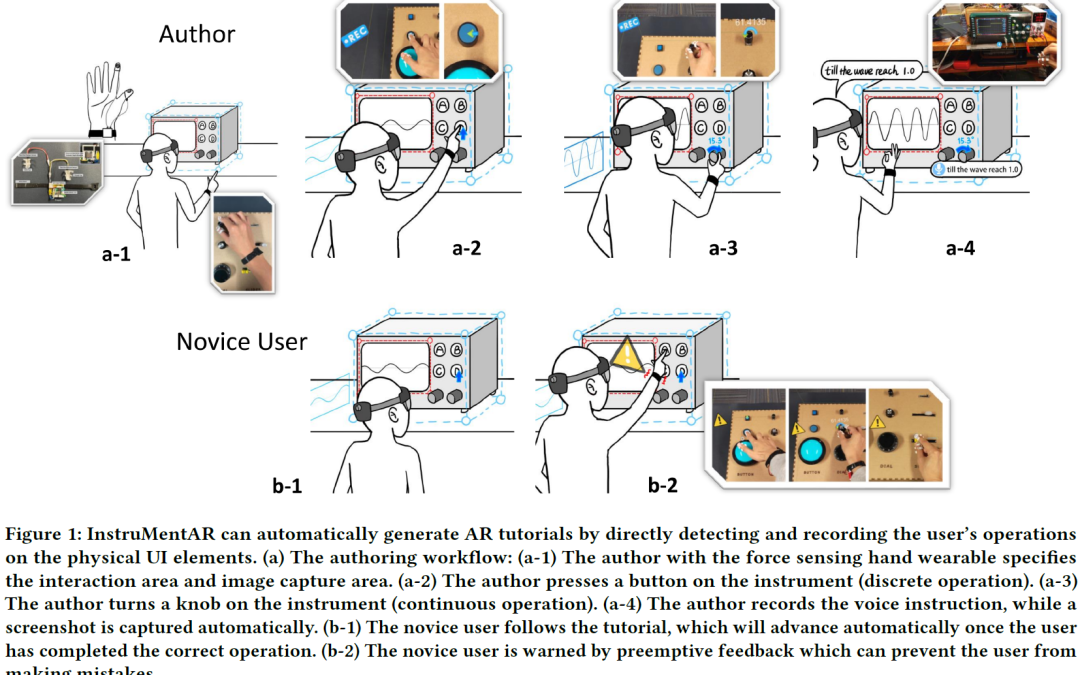

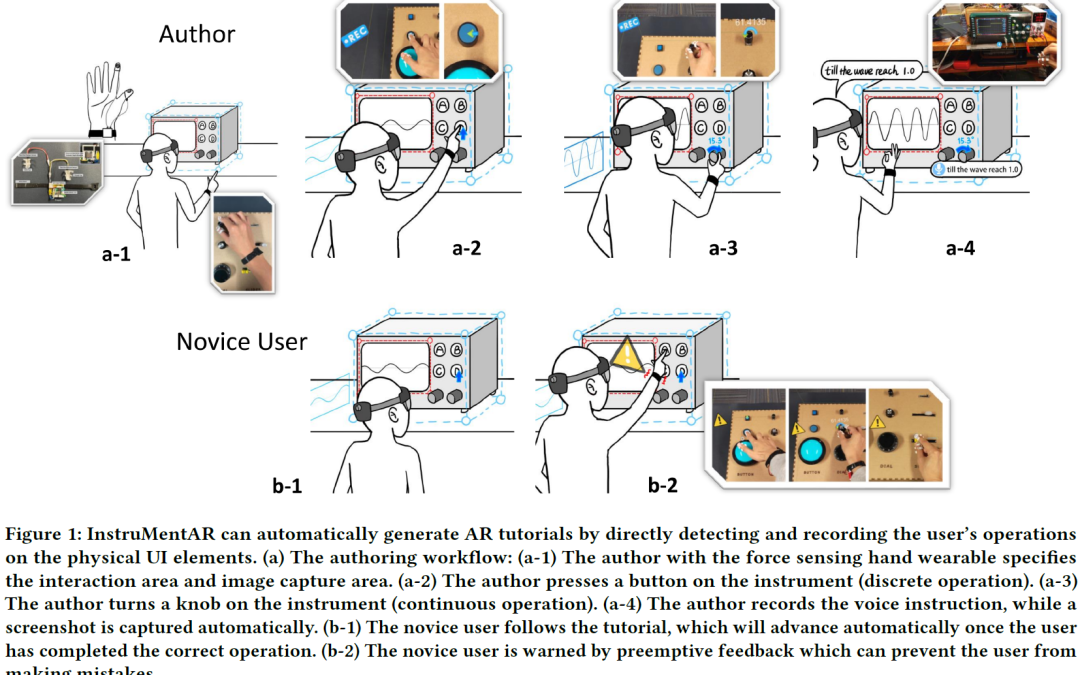

Augmented Reality tutorials, which provide necessary context by directly superimposing visual guidance on the physical referent, represent an effective way of scaffolding complex instrument operations. However, current AR tutorial authoring...

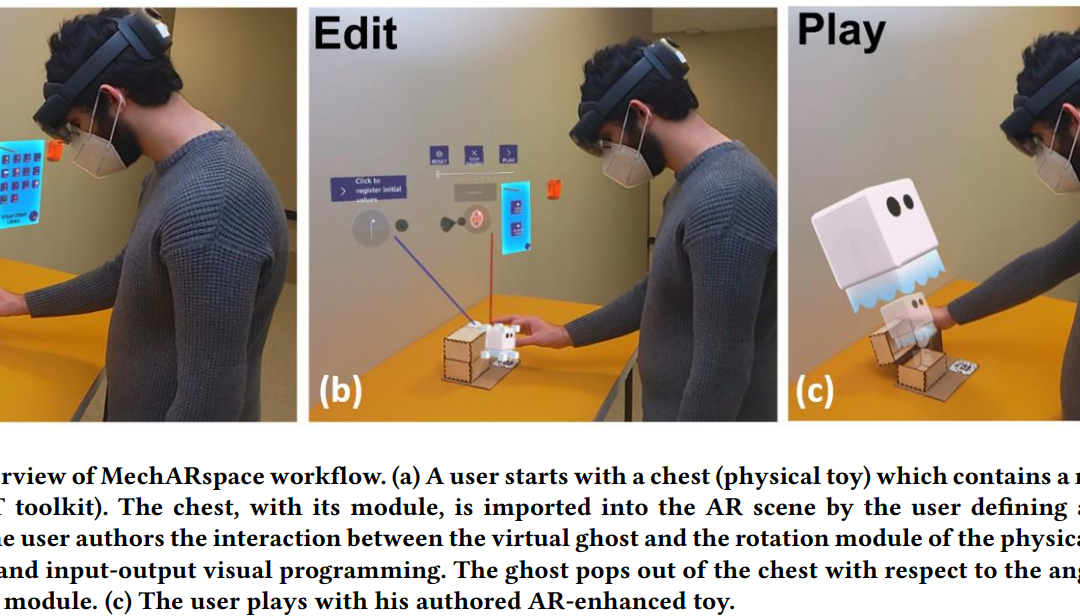

Augmented Reality (AR), which blends physical and virtual worlds, presents the possibility of enhancing traditional toy design. By leveraging bidirectional virtual-physical interactions between humans and the designed artifact, such AR-enhanced...

Vision-based 3D pose estimation has substantial potential in hand-object interaction applications and requires user-specified datasets to achieve robust performance. We propose ARnnotate, an Augmented Reality (AR) interface enabling end-users to...

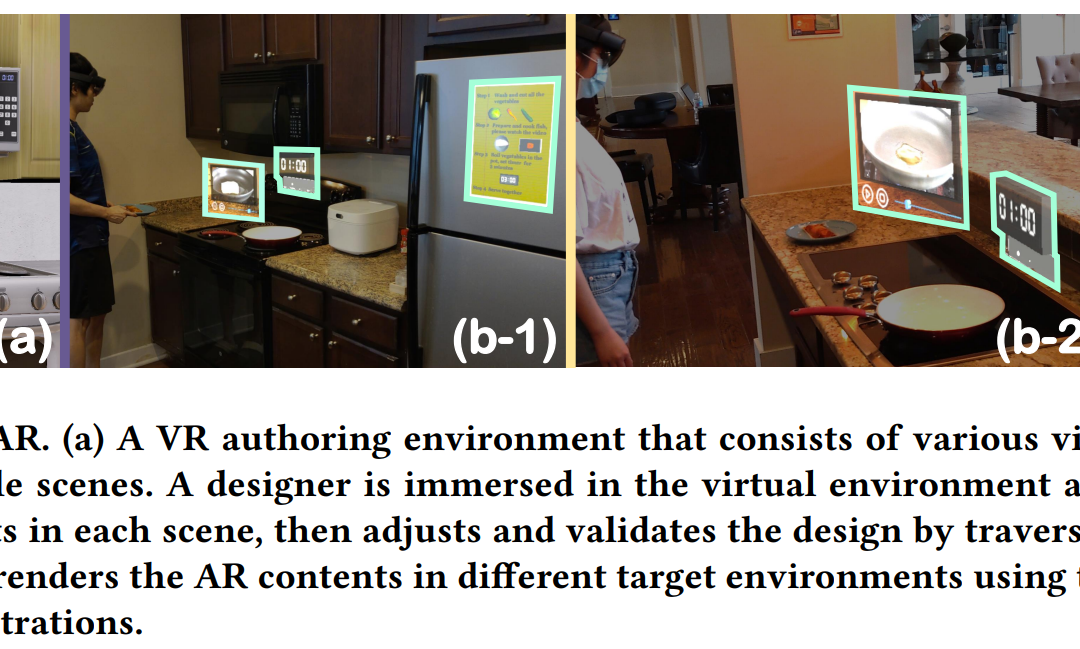

Augmented Reality (AR) experiences tightly associate virtual contents with environmental entities. However, the dissimilarity of different environments limits the adaptive AR content behaviors under large-scale deployment. We propose ScalAR, an...

Freehand gesture is an essential input modality for modern Augmented Reality (AR) user experiences. However, developing AR applications with customized hand interactions remains a challenge for end-users. Therefore, we propose GesturAR, an...

Light painting photos are created by moving light sources in mid-air while taking a long exposure photo. However, it is challenging for novice users to leave accurate light traces without any spatial guidance. Therefore, we present LightPaintAR, a...

Modern manufacturing processes are in a state of flux, as they adapt to increasing demand for flexible and self-configuring production. This poses challenges for training workers to rapidly master new machine operations and processes, i.e. machine...

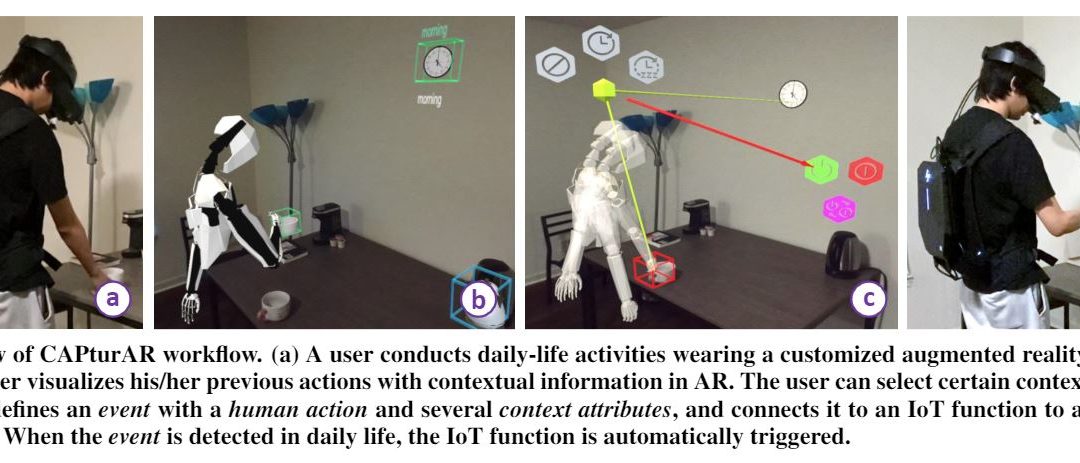

Recognition of human behavior plays an important role in context-aware applications. However, it is still a challenge for end-users to build personalized applications that accurately recognize their own activities. Therefore, we present CAPturAR,...

Machine tasks in workshops or factories are often a compound sequence of local, spatial, and body-coordinated human-machine interactions. Prior works have shown the merits of video-based and augmented reality (AR) tutoring systems for local tasks....

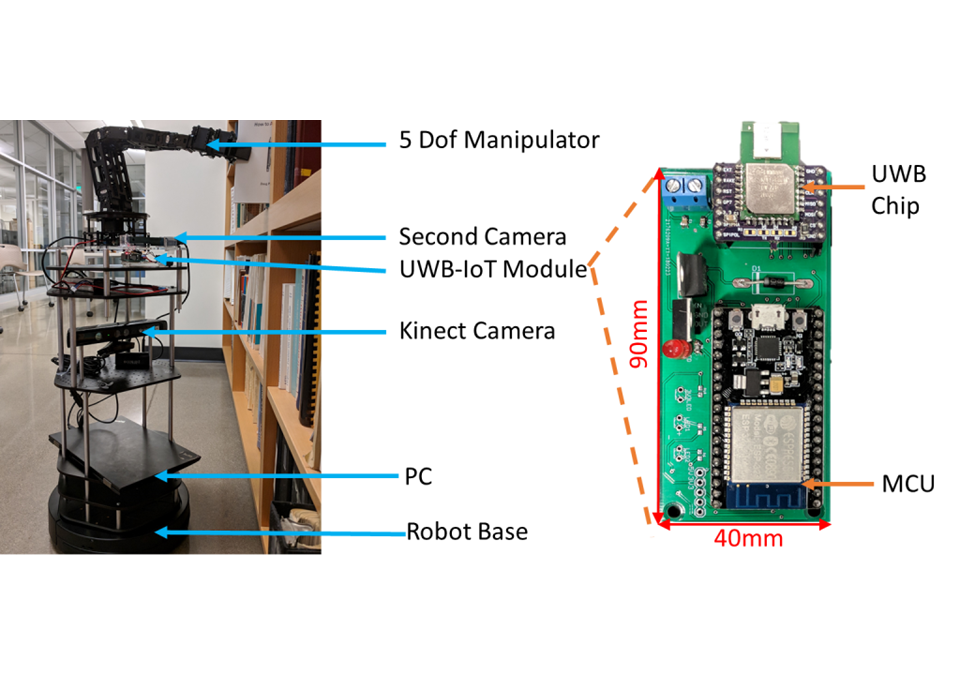

Emerging simultaneous localization and mapping (SLAM) techniques give robots spatial awareness of the physical world. However, such awareness remains at a geometric level. We propose an approach for quickly constructing a smart...