With the advances in geometry perception and Augmented Reality (AR), end-users can customize Tangible User Interfaces (TUIs) that control digital assets using intuitive and comfortable interactions with physical geometries (e.g., edges and...

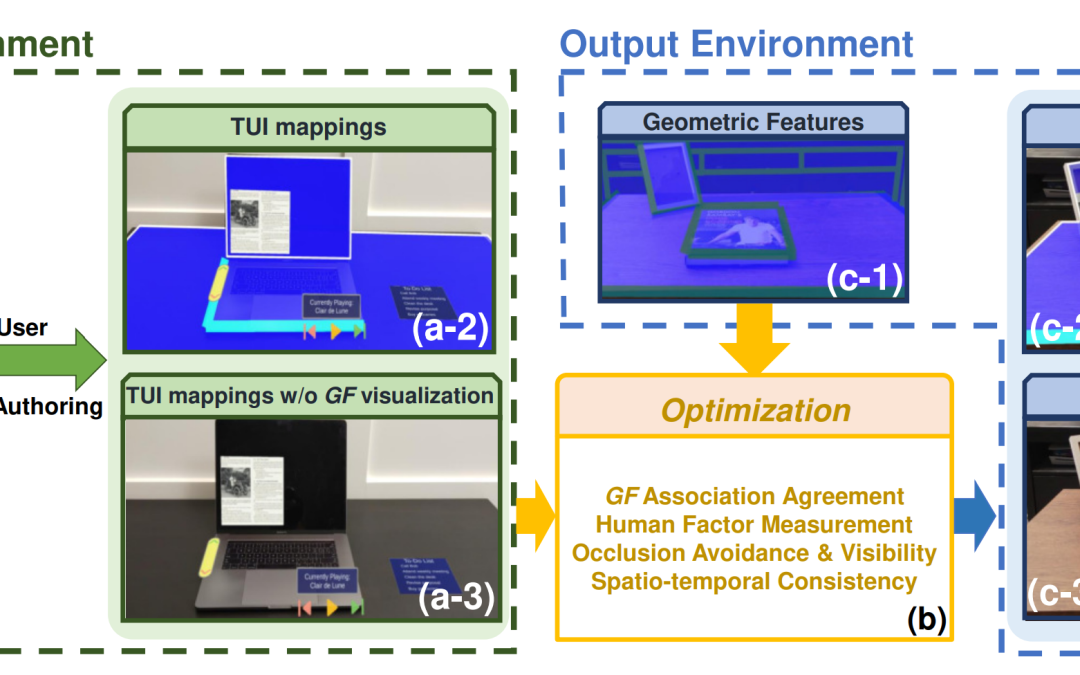

Utilizing everyday objects as tangible proxies for Augmented Reality (AR) provides users with haptic feedback while interacting with virtual objects. Yet, existing methods focus on the attributes of the objects, constraining the possible proxies...

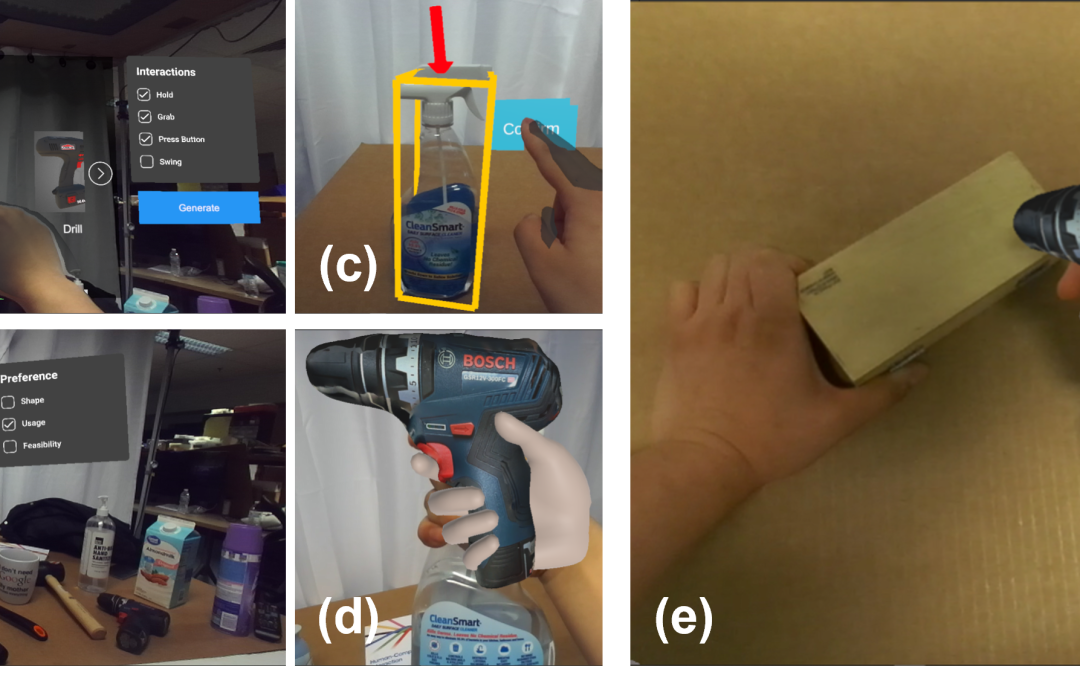

Augmented Reality tutorials, which provide necessary context by directly superimposing visual guidance on the physical referent, represent an effective way of scaffolding complex instrument operations. However, current AR tutorial authoring...

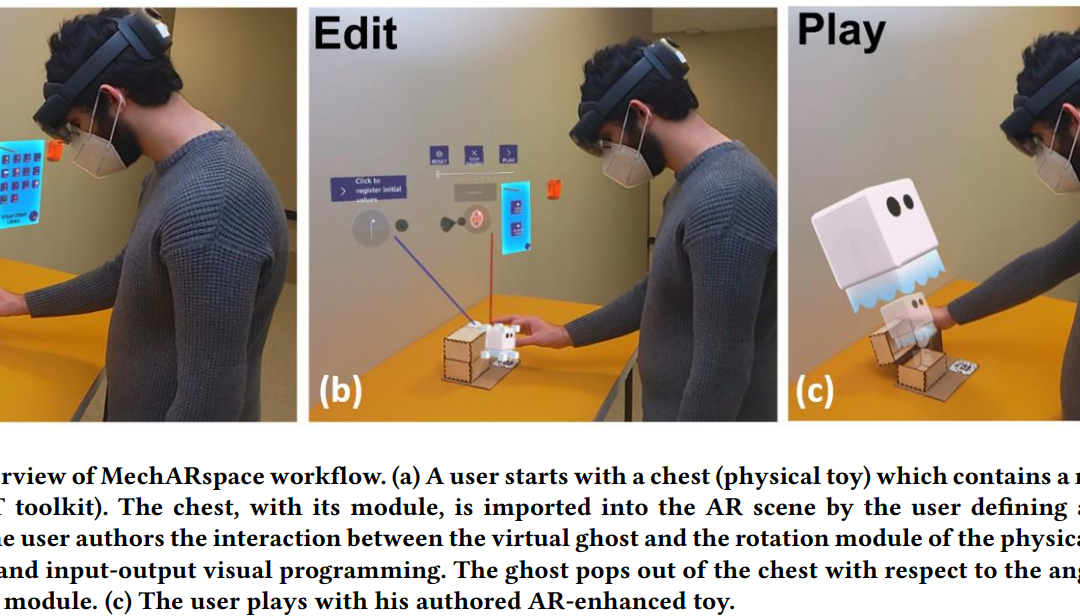

Augmented Reality (AR), which blends physical and virtual worlds, presents the possibility of enhancing traditional toy design. By leveraging bidirectional virtual-physical interactions between humans and the designed artifact, such AR-enhanced...

Vision-based 3D pose estimation has substantial potential in hand-object interaction applications and requires user-specified datasets to achieve robust performance. We propose ARnnotate, an Augmented Reality (AR) interface enabling end-users to...

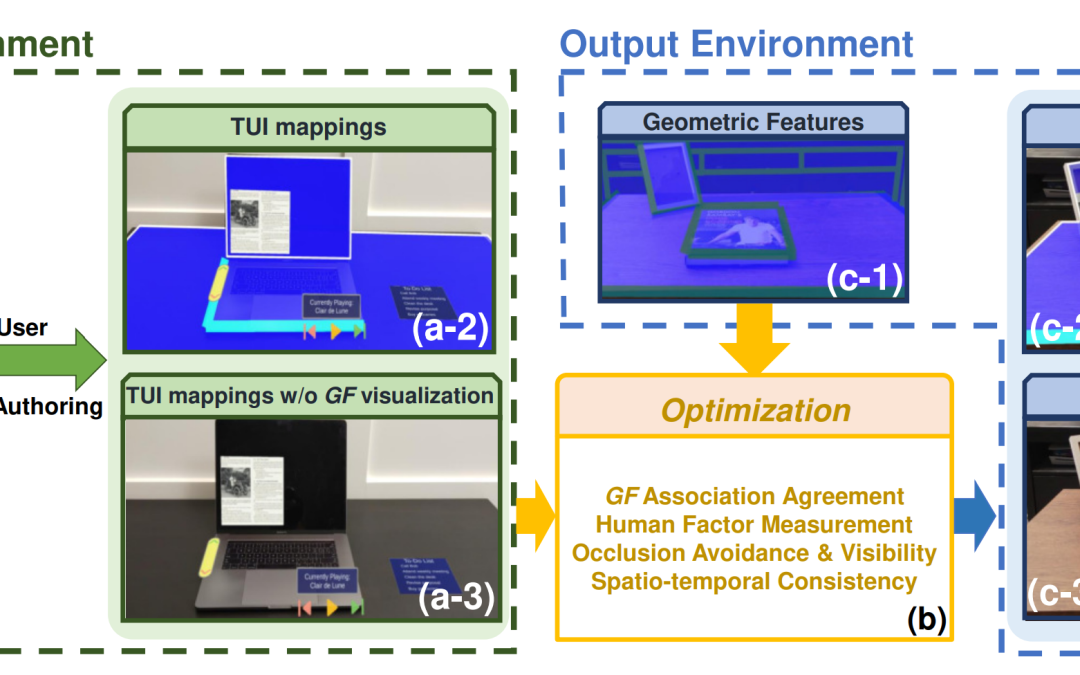

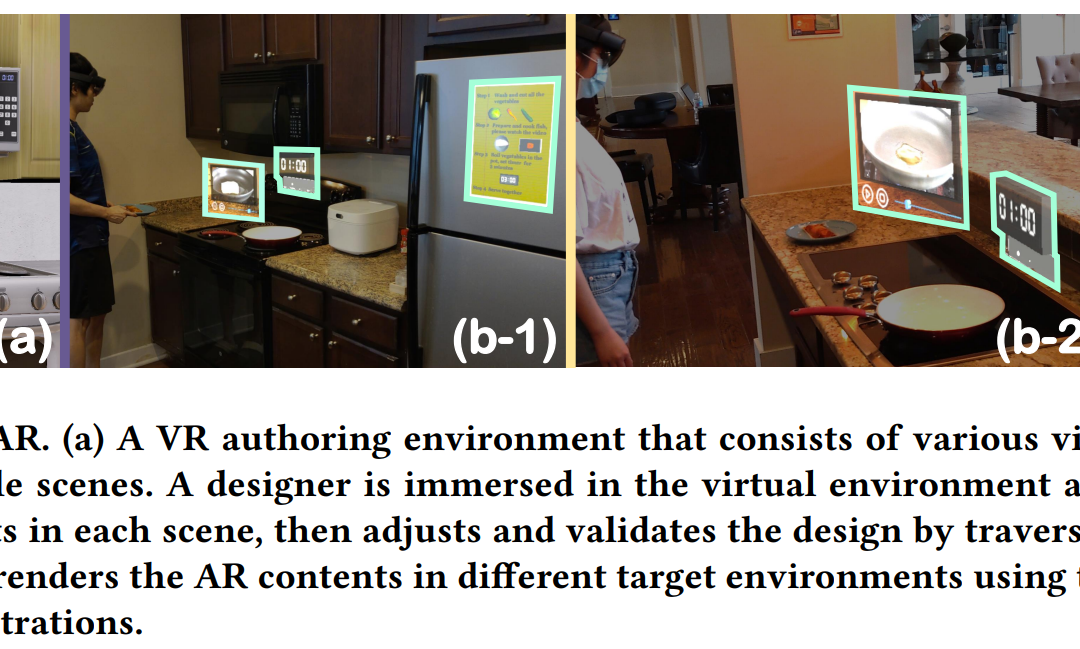

Augmented Reality (AR) experiences tightly associate virtual content with environmental entities. However, the dissimilarity between environments limits adaptive AR content behaviors in large-scale deployments. We propose ScalAR, an...

"GesturAR: An Authoring System for Creating Freehand Interactive Augmented Reality Applications" received the Best Paper Honorable Mention Award (top 5% of accepted papers) at the 34th Annual ACM Symposium on User Interface Software &...

Freehand gesture is an essential input modality for modern Augmented Reality (AR) user experiences. However, developing AR applications with customized hand interactions remains a challenge for end-users. Therefore, we propose GesturAR, an...

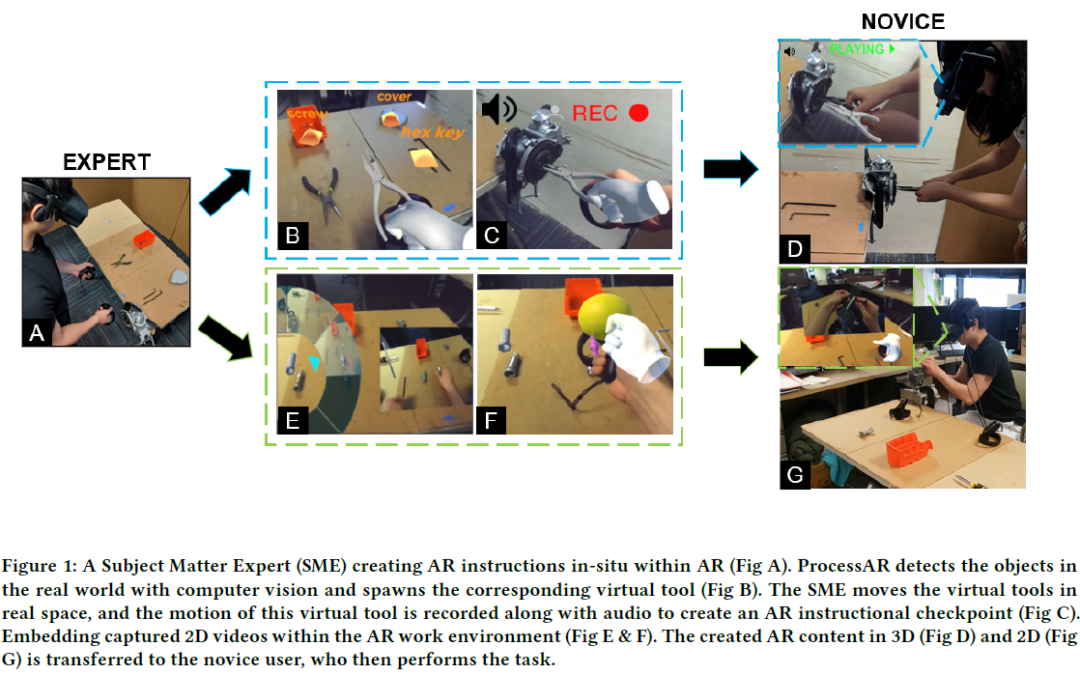

Augmented Reality (AR) is an efficient way of delivering spatial information and has great potential for training workers. However, AR is still not widely used for such scenarios due to the technical skills and expertise required to create...

Light painting photos are created by moving light sources in mid-air while taking a long exposure photo. However, it is challenging for novice users to leave accurate light traces without any spatial guidance. Therefore, we present LightPaintAR, a...