by Xiyun Hu | May 14, 2025 | 2025, Dizhi Ma, Fengming He, Karthik Ramani, Recent Publications, Shao-Kang Hsia, Xiyun Hu, Zhengzhe Zhu, Ziyi Liu

Large Language Model (LLM)-based copilots have shown great potential in Extended Reality (XR) applications. However, users face challenges when describing 3D environments to these copilots due to the complexity of conveying spatial-temporal information through...

by Rahul Jain | Apr 25, 2025 | 2025, Augmented Reality, Featured Publications, Hyunggun Chi, Hyungjun Doh, Jingyu Shi, Karthik Ramani, Rahul Jain, Recent Publications, Seunggeun Chi

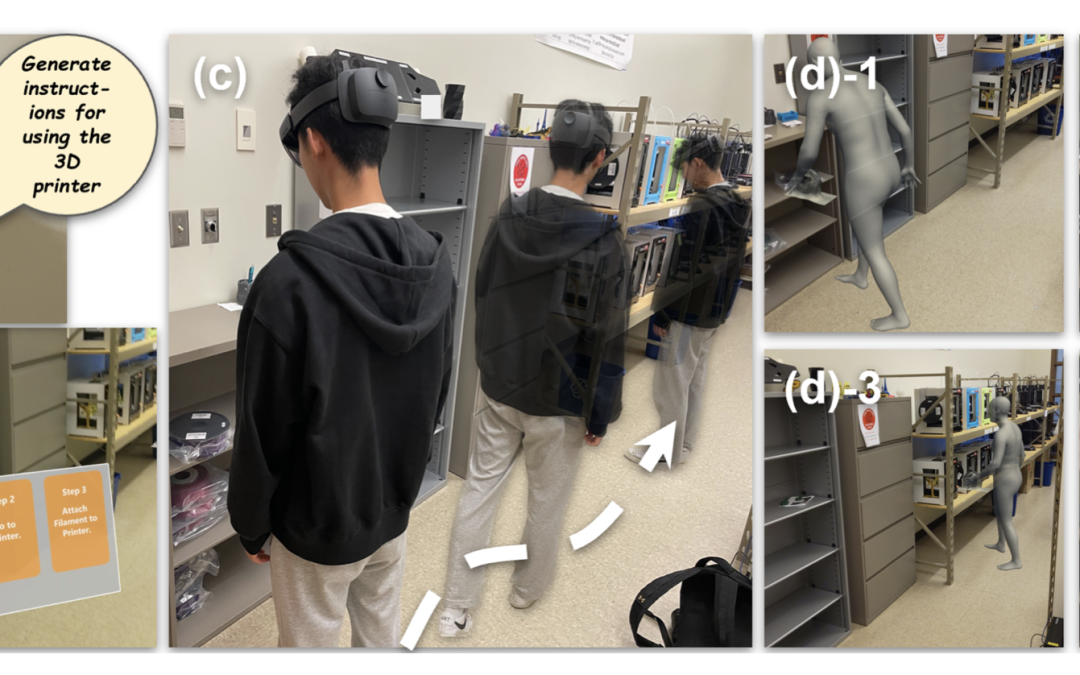

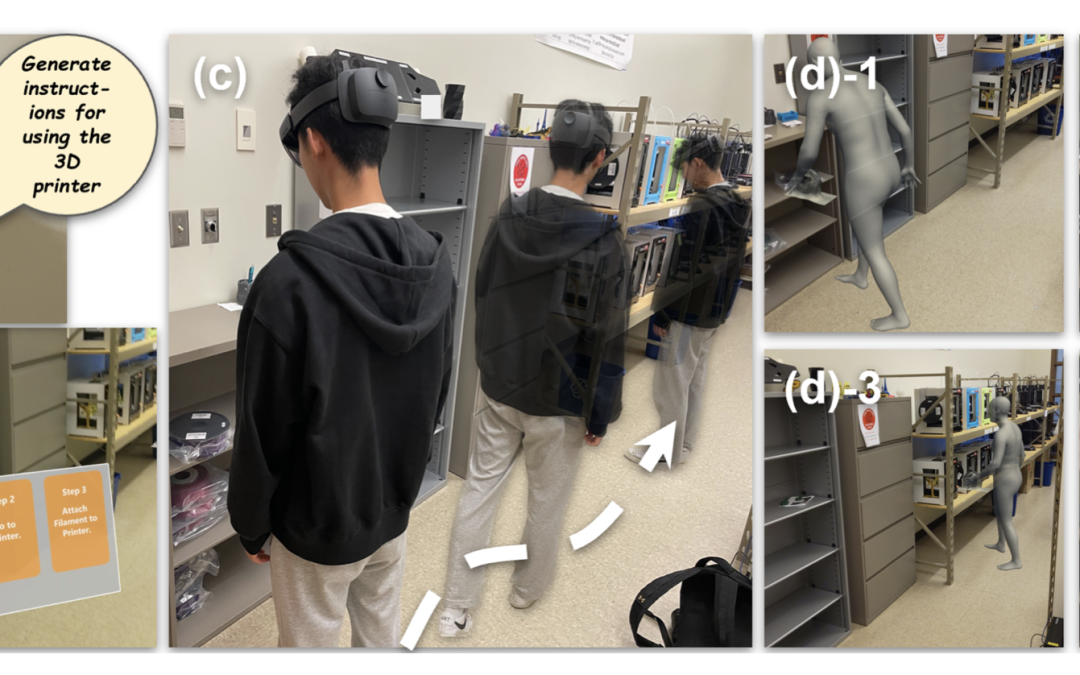

Context-aware AR instruction enables adaptive and in-situ learning experiences. However, hardware limitations and expertise requirements constrain the creation of such instructions. With recent developments in Generative Artificial Intelligence (Gen-AI), current...

by Rahul Jain | Feb 24, 2025 | 2025, Augmented Reality, Featured Publications, Hyungjun Doh, Jingyu Shi, Karthik Ramani, Rahul Jain, Recent Publications, Subramanian Chidambaram

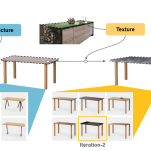

Mixed Reality (MR) is gaining prominence in manual task skill learning due to its in-situ, embodied, and immersive experience. To teach manual tasks, current methodologies break the task into hierarchies (tasks into subtasks) and visualize not only the current...

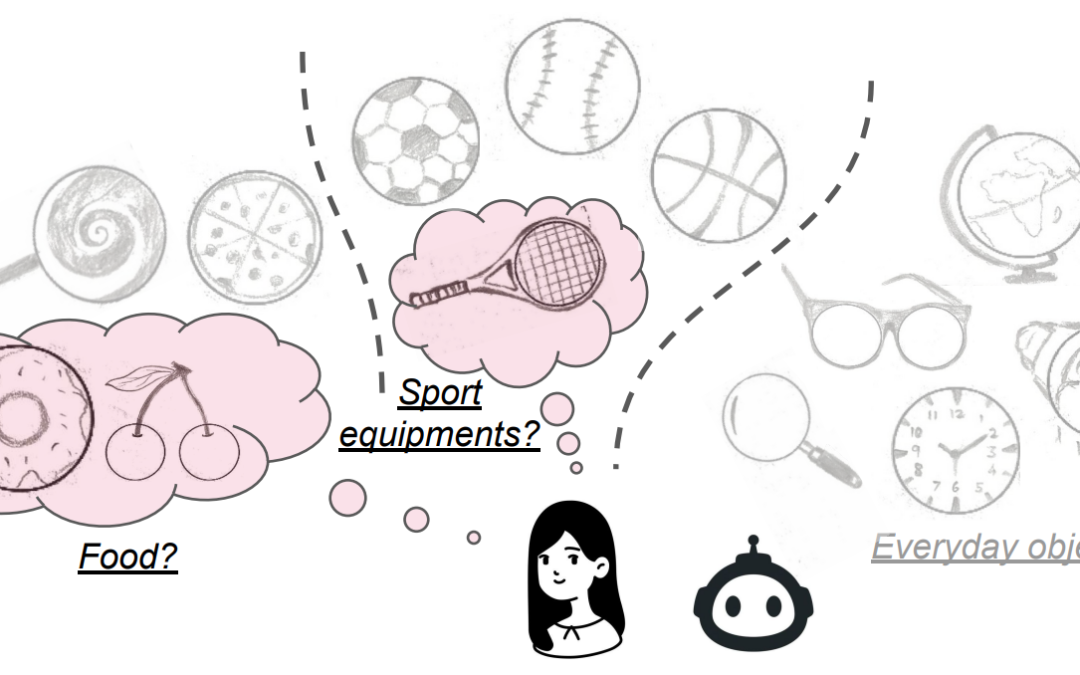

by Karthik Ramani | Feb 11, 2025 | 2025, Karthik Ramani, Runlin Duan

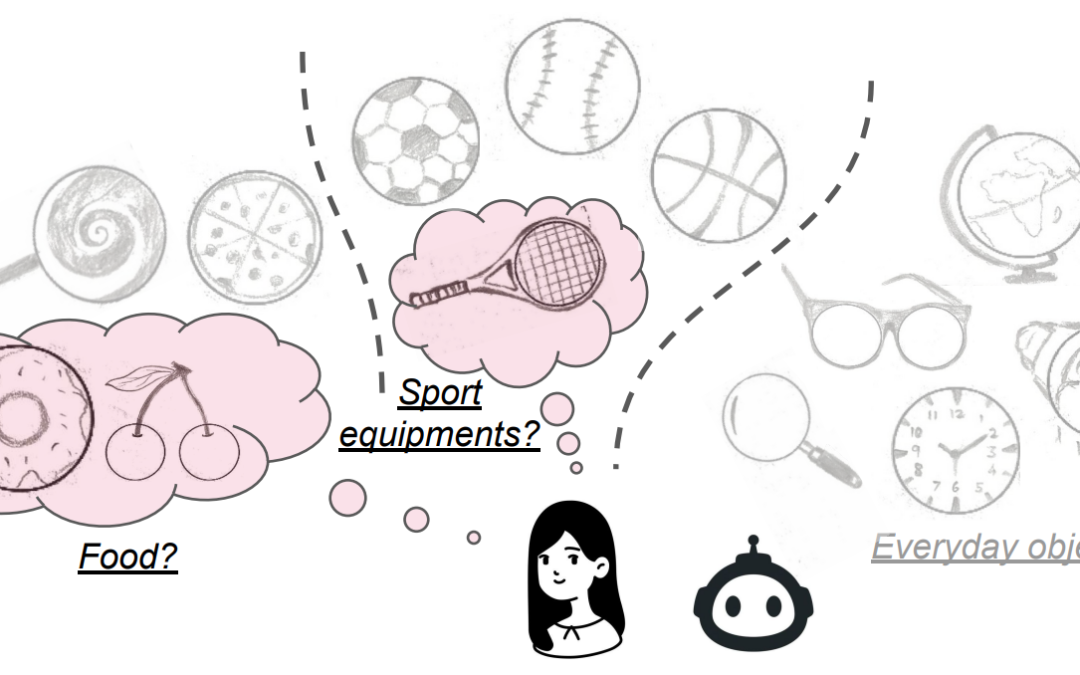

Generative AI (GenAI) is transforming the creative process. However, as this paper shows, GenAI encounters “narrow creativity” barriers. We observe that both humans and GenAI focus on limited subsets of the design space. We investigate this...

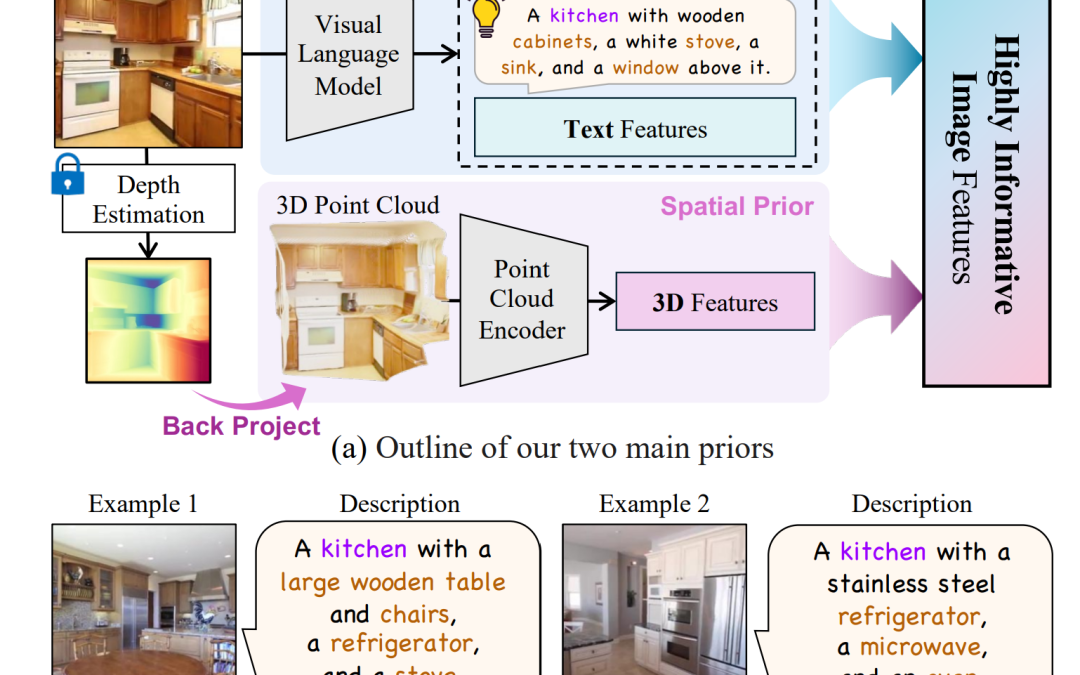

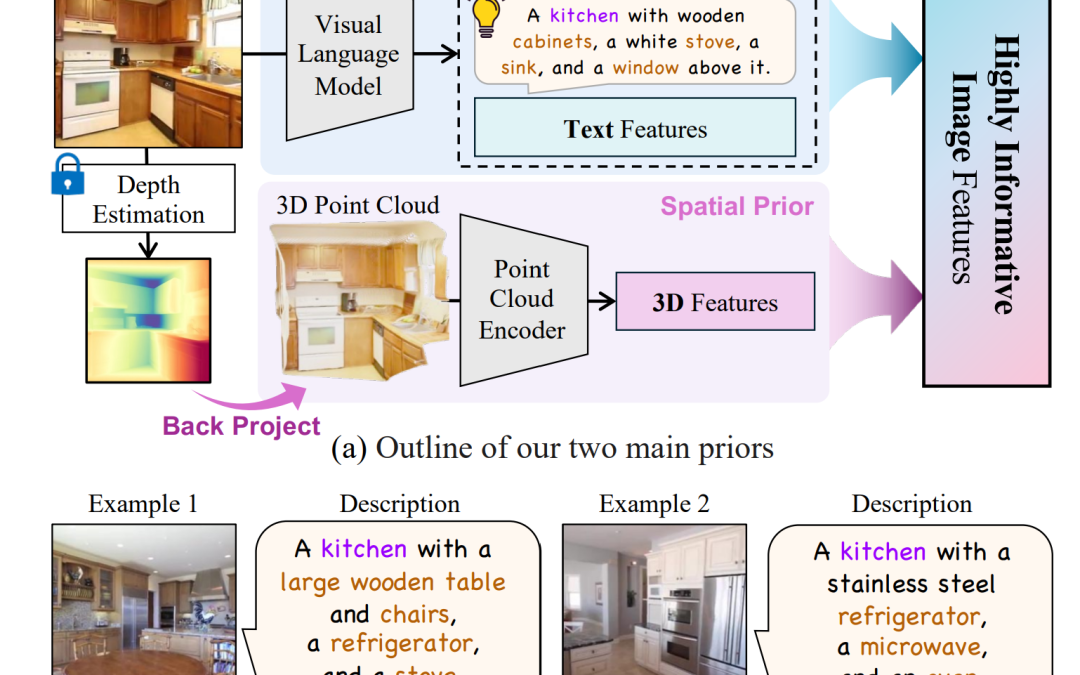

by Seunggeun Chi | Feb 3, 2025 | 2025, Karthik Ramani, Sangpil Kim, Seunggeun Chi

Recently, generalizable feed-forward methods based on 3D Gaussian Splatting have gained significant attention for their potential to reconstruct 3D scenes using finite resources. These approaches create a 3D radiance field, parameterized by per-pixel 3D Gaussian...

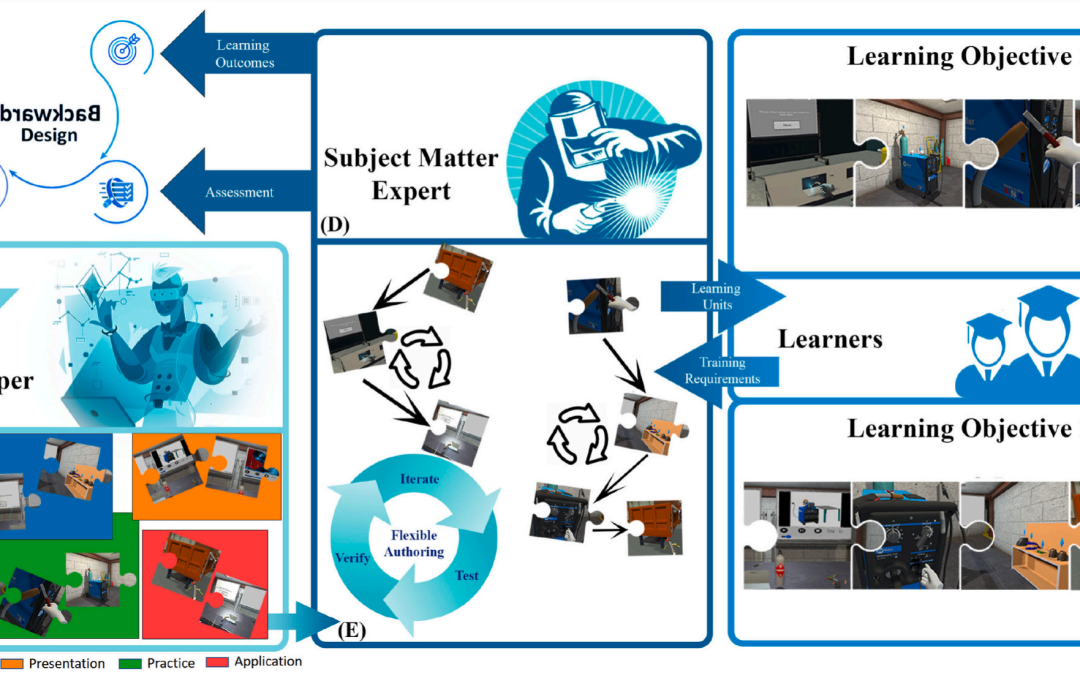

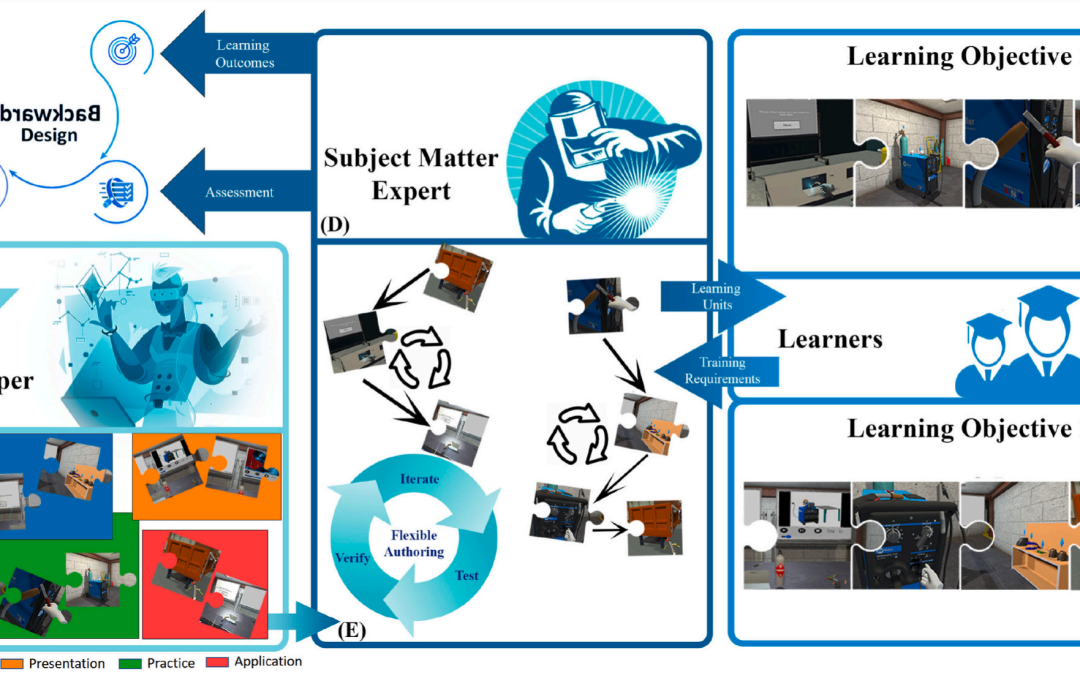

by Ananya Ipsita | Dec 1, 2024 | 2024, Ananya Ipsita, Asim Unmesh, Karthik Ramani, Recent Publications

Despite the recognized efficacy of immersive Virtual Reality (iVR) in skill learning, the design of iVR-based learning units by subject matter experts (SMEs) based on target requirements is severely restricted. This is partly due to a lack of flexible ways of...