Purdue research earns Best Paper Award runner-up at top networking conference

A Purdue University research team led by Christopher Brinton, Elmore Associate Professor in the Elmore Family School of Electrical and Computer Engineering, has earned the Best Paper Award Runner-Up at the ACM International Symposium on Theory, Algorithmic Foundations, and Protocol Design for Mobile Networks and Mobile Computing (MobiHoc 2025).

Purdue ECE PhD student Liangqi Yuan is first author on the paper, titled “Local-Cloud Inference Offloading for LLMs in Multi-Modal, Multi-Task, Multi-Dialogue Settings.” The recognition places the team’s work among the top three papers accepted to MobiHoc 2025, one of the premier international conferences in networking.

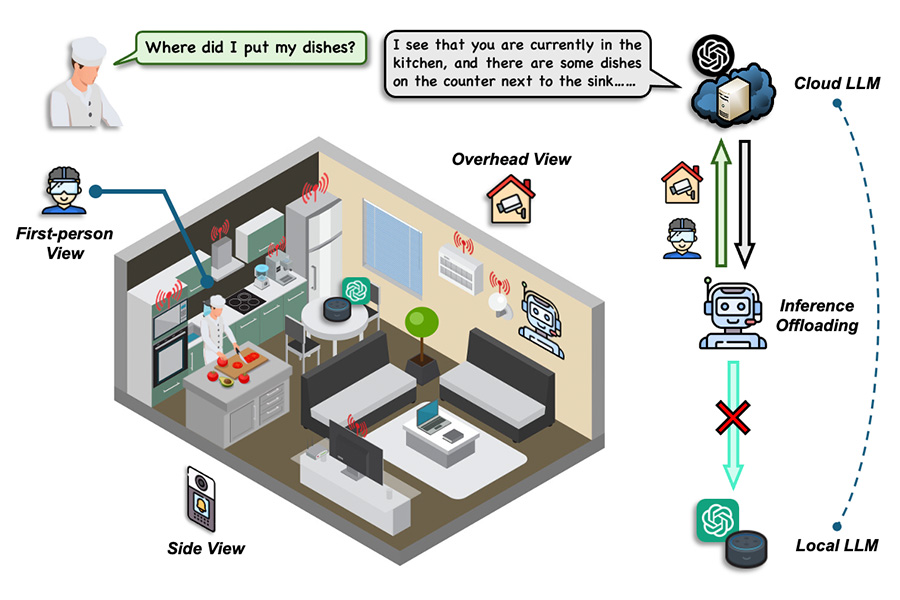

Large language models (LLMs), such as those behind ChatGPT, can handle a wide range of tasks, from writing and translation to analyzing images. But deploying these massive models efficiently is a growing challenge.

“Running LLMs entirely on local devices like smartphones or laptops can overwhelm their processing power, while running them in the cloud can introduce high costs and slow response times,” said Brinton. “Our work looks at how to balance those trade-offs intelligently.”

The team’s proposed system, called TMO (Three-M Offloading), decides when tasks should be handled locally and when they should be offloaded to the cloud. The “Three-M” in the name refers to the system’s ability to handle multi-modal data (like text and images), multi-task environments (different types of problems), and multi-dialogue interactions (ongoing conversations).

At the heart of TMO is a reinforcement learning algorithm, a type of machine learning that improves its decisions through trial and error. In this case, it learns when to process a request locally and when to send it to the cloud, weighing factors such as task complexity, response quality, latency, and cost.
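To give a rough sense of what learning an offloading decision by trial and error can look like, here is a minimal Python sketch. It is not the TMO algorithm, whose details are not described in this article. It is a toy epsilon-greedy learner with only two task types, and the names `simulated_reward`, `train`, and `policy`, along with every quality, latency, and cost number, are assumptions made up for illustration.

```python
import random

ACTIONS = ("local", "cloud")
TASK_TYPES = ("simple", "complex")


def simulated_reward(complexity, action):
    """Hypothetical reward: response quality minus latency and cost penalties.

    All numbers are illustrative assumptions, not measurements from the paper.
    """
    if action == "local":
        # Assume the small on-device model handles simple tasks well
        # but struggles with complex ones; it is fast and free to run.
        quality = 0.9 if complexity == "simple" else 0.4
        latency, cost = 0.1, 0.0
    else:
        # Assume the cloud model is strong on every task,
        # but slower to respond and billed per request.
        quality = 0.95
        latency, cost = 0.3, 0.2
    return quality - 0.5 * latency - 0.5 * cost


def train(episodes=5000, epsilon=0.1, lr=0.1, seed=0):
    """Learn a value estimate for each (task type, action) pair."""
    rng = random.Random(seed)
    q = {(c, a): 0.0 for c in TASK_TYPES for a in ACTIONS}
    for _ in range(episodes):
        complexity = rng.choice(TASK_TYPES)
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.choice(ACTIONS)
        else:
            # Exploit: pick the action with the best current estimate.
            action = max(ACTIONS, key=lambda a: q[(complexity, a)])
        reward = simulated_reward(complexity, action)
        # Move the estimate a small step toward the observed reward.
        q[(complexity, action)] += lr * (reward - q[(complexity, action)])
    return q


def policy(q, complexity):
    """Return the action the learned estimates currently prefer."""
    return max(ACTIONS, key=lambda a: q[(complexity, a)])


if __name__ == "__main__":
    q = train()
    for task_type in TASK_TYPES:
        print(task_type, "->", policy(q, task_type))
```

Under these made-up rewards, the learner ends up keeping simple tasks on the device and sending complex ones to the cloud. A real system would face a far richer set of inputs, including modality, task type, and dialogue history, and would need rewards based on measured rather than assumed performance.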

To support this system, the researchers created a new dataset called M4A1, which contains real-world measurements of how different AI models perform across various data types, tasks, and dialogue settings.

“Our approach achieved significant improvements in response quality, speed, and cost efficiency compared to other methods,” said Yuan. “It shows how AI systems can be both high-performing and resource-aware.”

The implications of this research go beyond the lab. Smarter local-cloud coordination could make advanced AI tools more responsive and accessible on everyday devices, which is an important step toward scalable, sustainable AI deployment.

“Our work bridges the gap between local and cloud AI computing,” Brinton said. “It’s a move toward more adaptive systems that can deliver top-tier performance while staying practical for real-world use.”

The research was supported in part by the National Science Foundation, the Office of Naval Research, and the Air Force Office of Scientific Research.