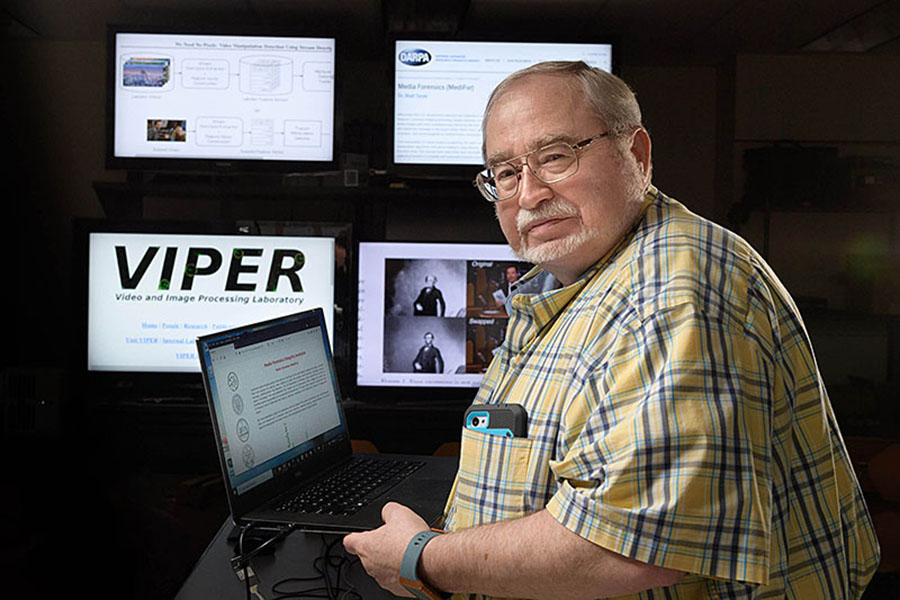

Prof. Edward J. Delp leads team developing advanced methods of identifying manipulated media

Tools to generate manipulated media have been around for decades and are becoming more and more sophisticated. However, manipulation techniques based on deep learning are quickly outpacing existing detection research, making deep learning detection approaches necessary. A project led by Purdue University’s Elmore Family School of Electrical and Computer Engineering is developing advanced methods for identifying and attributing manipulated and fake media. Edward J. Delp, Charles William Harrison Distinguished Professor of ECE, is leading the project, which is funded by the Defense Advanced Research Projects Agency (DARPA) as part of the Semantic Forensics (SemaFor) Program.

The goal of SemaFor is to develop a set of forensics tools to determine the integrity, semantic consistency, source, and intent of media assets such as images, videos, audio, and documents.

“There’s a lot going on in the world in terms of misinformation and disinformation,” says Delp, who directs the Video and Image Processing Laboratory (VIPER) at Purdue. “It’s becoming more and more necessary to develop advanced tools to detect this manipulated media.”

SemaFor technologies could help to identify, understand, and deter campaigns featuring adversarial disinformation. The project brings together technical experts from six universities, with complementary skills and backgrounds in computer vision and biometrics, machine learning, digital forensics, and signal processing and information theory. Purdue and the University of Notre Dame are the only U.S. universities involved in the project. The remaining four are the University of Siena, Politecnico di Milano, University Federico II of Naples, and the University of Campinas in Brazil.

The team is also examining articles that are passed off as originating from specific newspapers, as well as social media posts such as tweets. Delp says this work, called style detection, examines the writing style guides of a number of national newspapers and social media platforms.

Delp says there are four questions to consider when working with manipulated media, which can include images, text, audio, and video:

- Has this media asset been altered?

- Where has it been modified?

- What tools were used to manipulate the asset?

- What is the intent of the people who manipulated the asset?

Delp says that while most of the research is conducted at the direction of the U.S. Department of Defense, the Department of Health and Human Services has also contributed funding to examine manipulation in scientific papers.

“We designed a system that actually runs at Purdue that people can submit scientific papers to and it will examine the papers to determine if the images and text have been manipulated,” he says. “For example, say the scientific paper has an image with a caption. Do the image and the caption match? Do they make sense together? Does the caption talk about something that doesn’t make any sense in relation to the image?”

In addition to this work for DARPA’s SemaFor program, Delp’s lab is wrapping up five years of research for the Media Forensics (MediFor) program, which developed automated technologies to detect manipulation in digital imagery, such as images from digital cameras and mobile phones.