ECE researchers to study adversarial attacks on deep neural networks

Purdue researchers recently received an award from the Army Research Office (ARO) to conduct theoretical and experimental studies on the adversarial robustness of deep neural networks. Over the past decade, deep neural networks have made substantial progress in areas such as computer vision and speech recognition. However, studies have also shown that these networks are highly vulnerable to adversarial attacks: artificial patterns created intentionally to fool the networks. This has raised concerns that if the networks can fail so easily, these powerful machine learning methods may be unreliable.

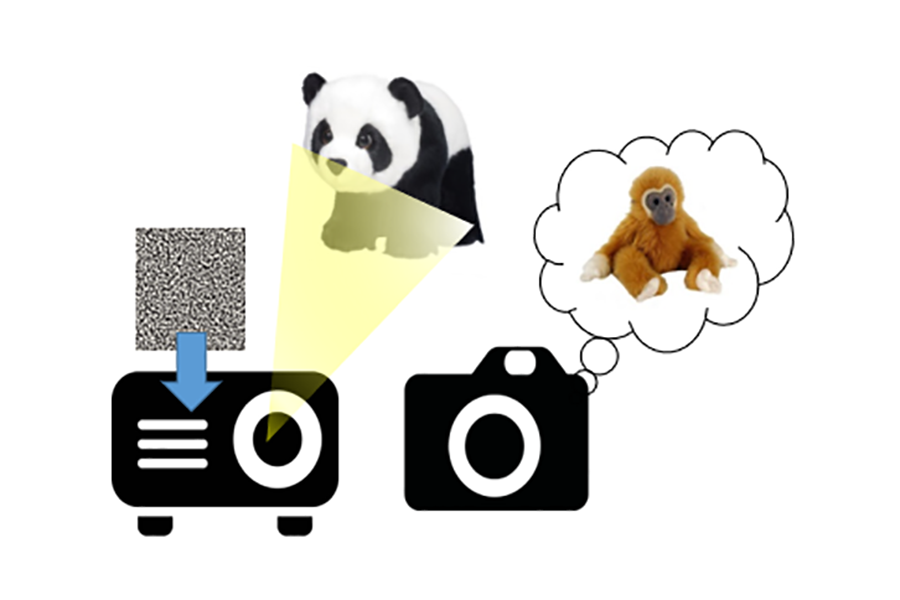

Purdue’s research will focus on adversarial attacks in real physical environments, using computational imaging techniques to perturb the appearance of a 3D object so that a camera system misclassifies it. Stanley Chan, assistant professor of electrical and computer engineering and statistics, is the Principal Investigator on the project.

“While most of the existing results in the literature are focusing on attack and defense in the digital domain such as images and videos, only a handful of studies have shown results in real physical environments,” says Chan. “Our goal is to fill the gap by theoretically analyzing the interactions between the attack and the environment. We hope that our conclusions can shed new light on the future research in adversarial robustness.”

The Army Research Office competitively selects and funds basic research proposals from educational institutions, nonprofit organizations and private industry that will enable crucial future Army technologies and capabilities.