by Ananya Ipsita | Dec 1, 2024 | 2024, Ananya Ipsita, Asim Unmesh, Karthik Ramani, Recent Publications

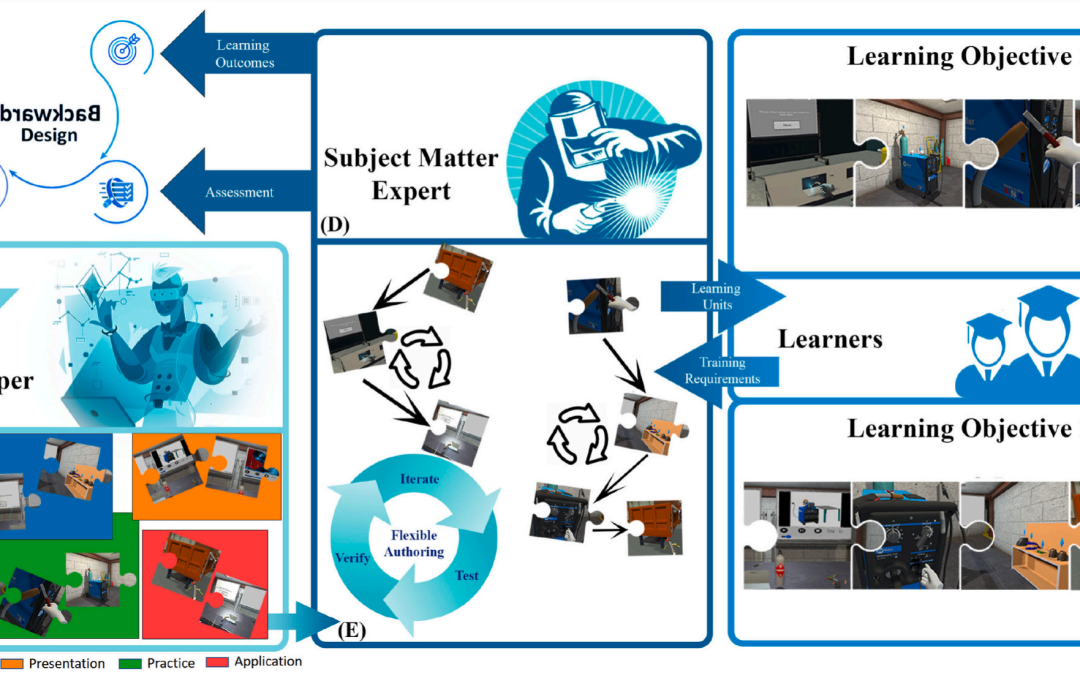

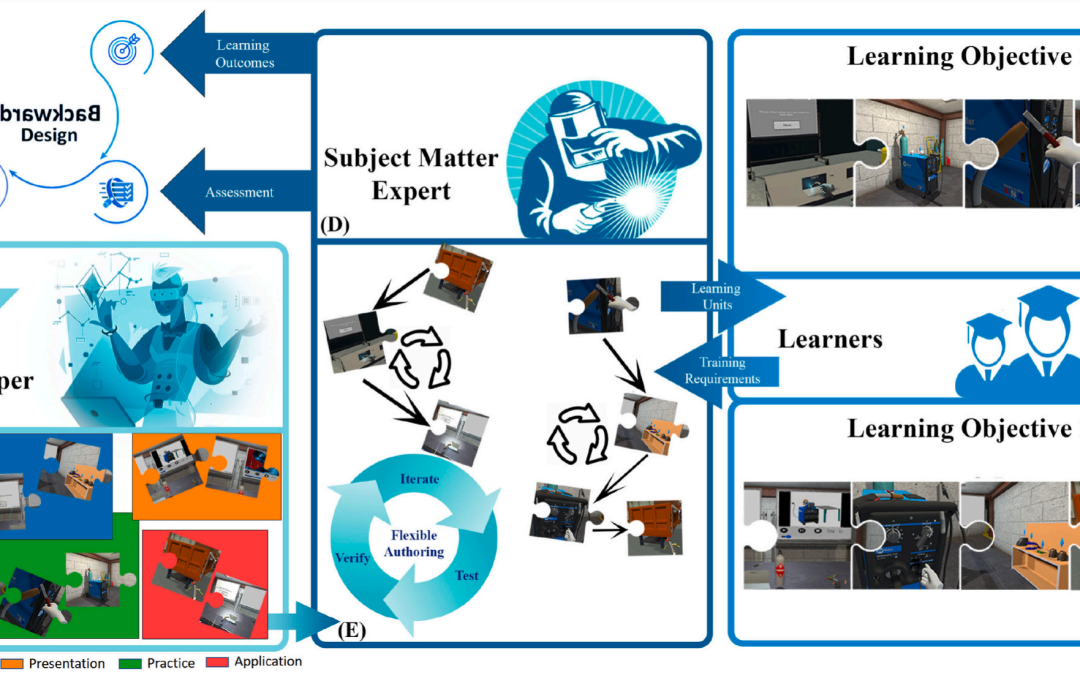

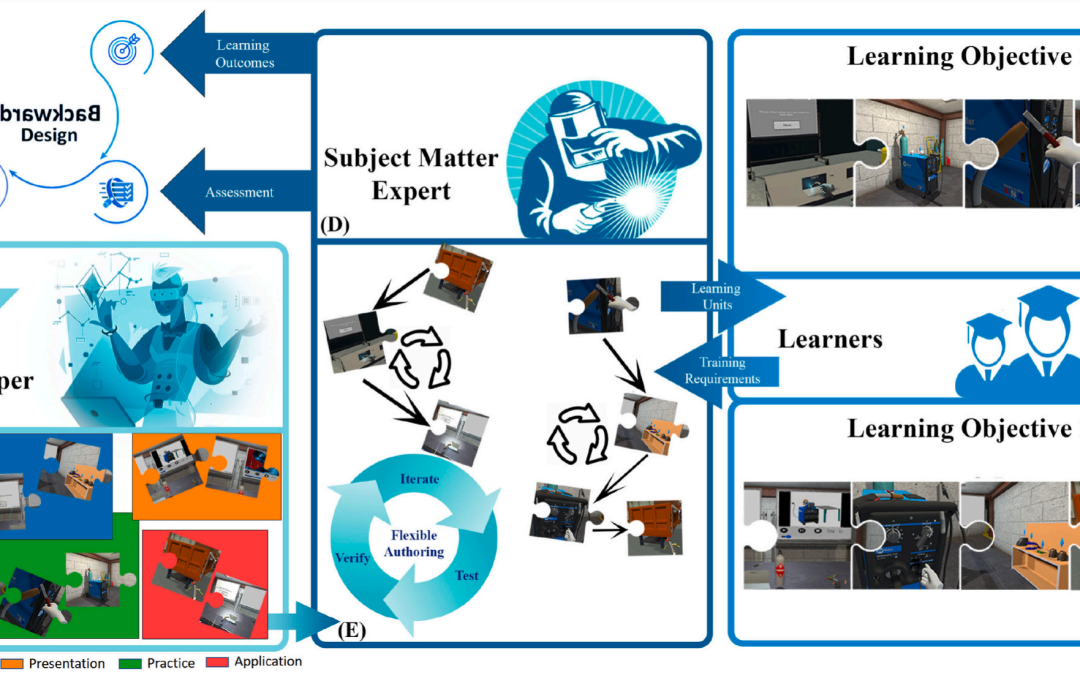

Despite the recognized efficacy of immersive Virtual Reality (iVR) in skill learning, the design of iVR-based learning units by subject matter experts (SMEs) based on target requirements is severely restricted. This is partly due to a lack of flexible ways of...

by Rahul Jain | Dec 1, 2024 | 2024, Asim Unmesh, Karthik Ramani, Karthik Ramani, Rahul Jain, Recent Publications, Subramanian Chidambaram

Computer vision (CV) algorithms require large annotated datasets that are often labor-intensive and expensive to create. We propose AnnotateXR, an extended reality (XR) workflow to collect various high-fidelity data and auto-annotate it in a single demonstration....

by Fengming He | Oct 24, 2024 | 2024, Fengming He, Karthik Ramani, Recent Publications, Xiyun Hu, Xun Qian, Zhengzhe Zhu

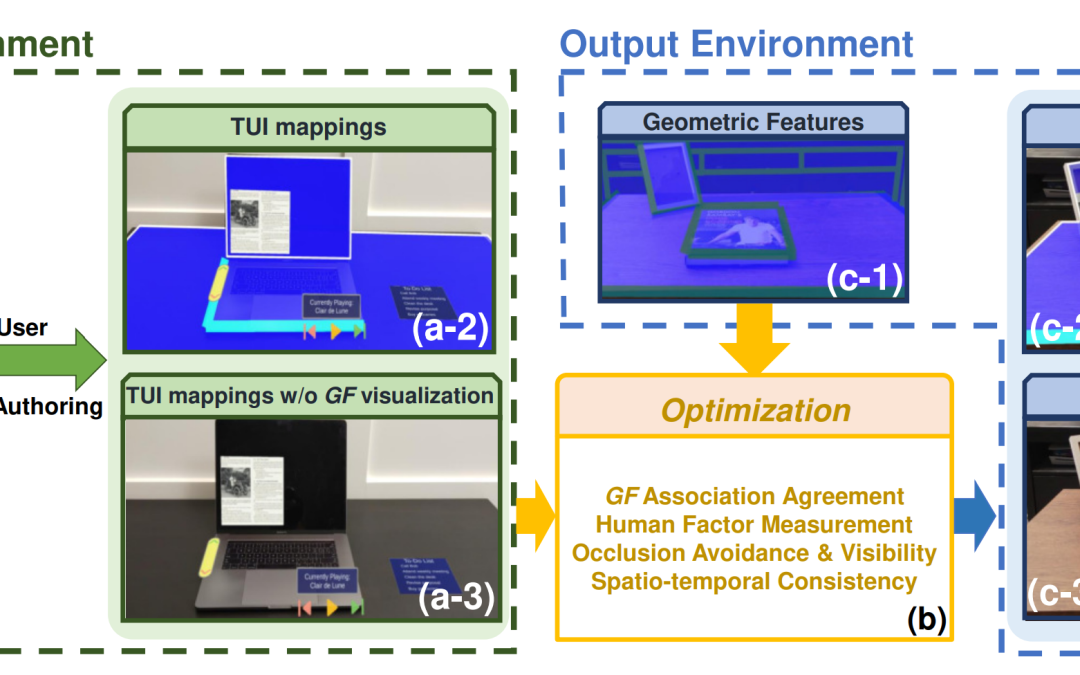

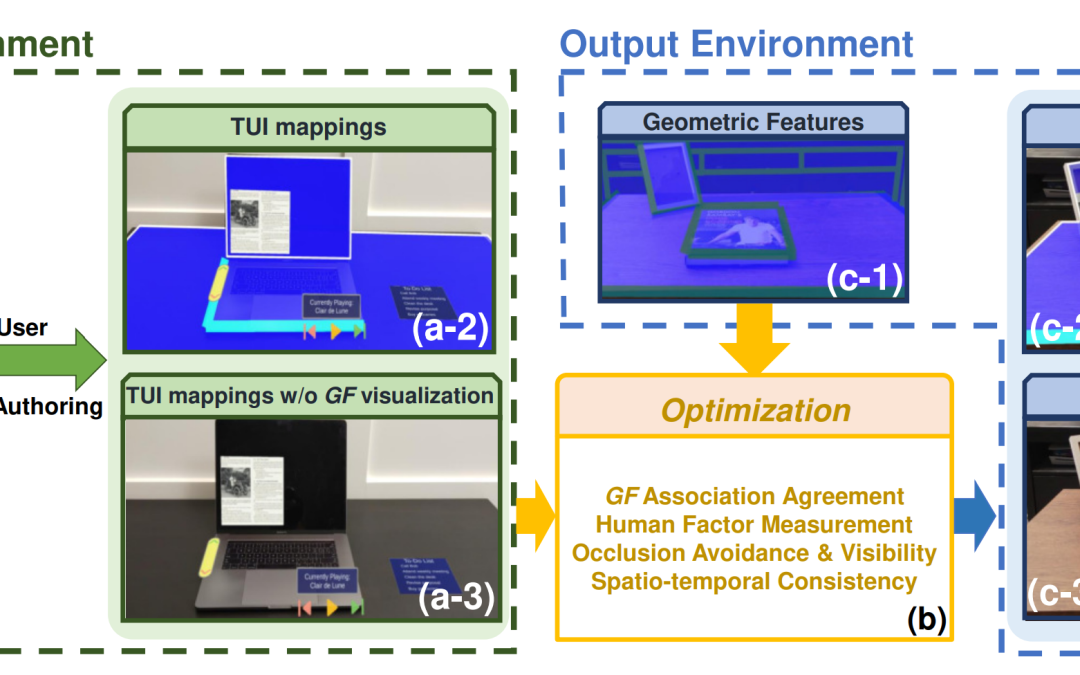

With the advents in geometry perception and Augmented Reality (AR), end-users can customize Tangible User Interfaces (TUIs) that control digital assets using intuitive and comfortable interactions with physical geometries (e.g., edges and surfaces). However, it...

by Hyung-gun Chi | Oct 24, 2024 | 2024, Hyunggun Chi, Karthik Ramani, Recent Publications

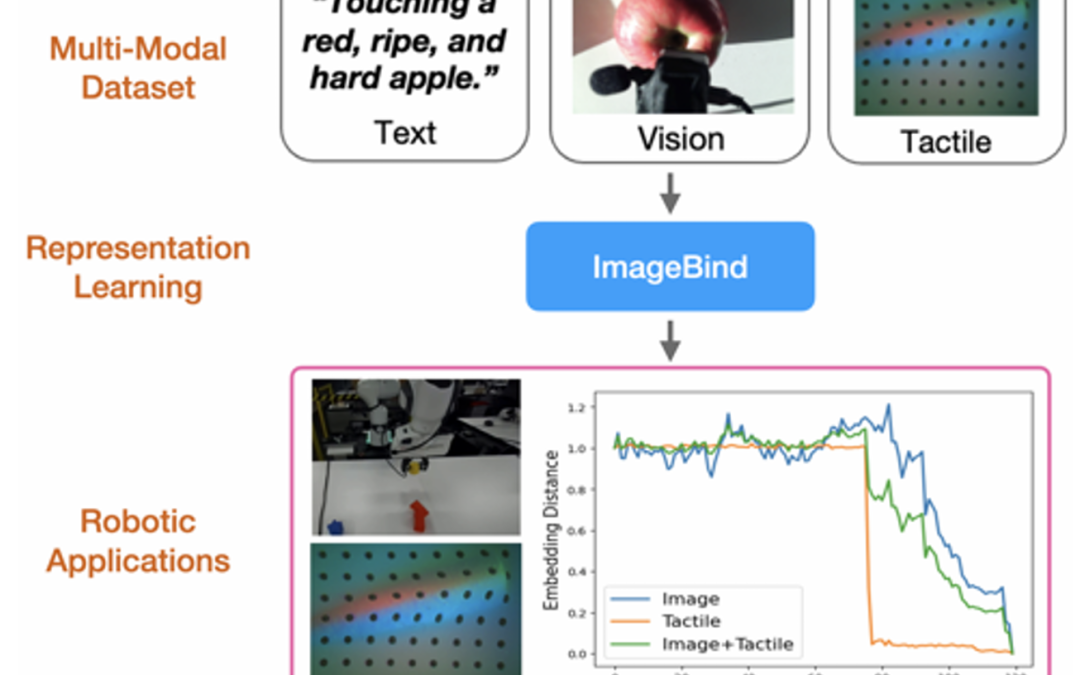

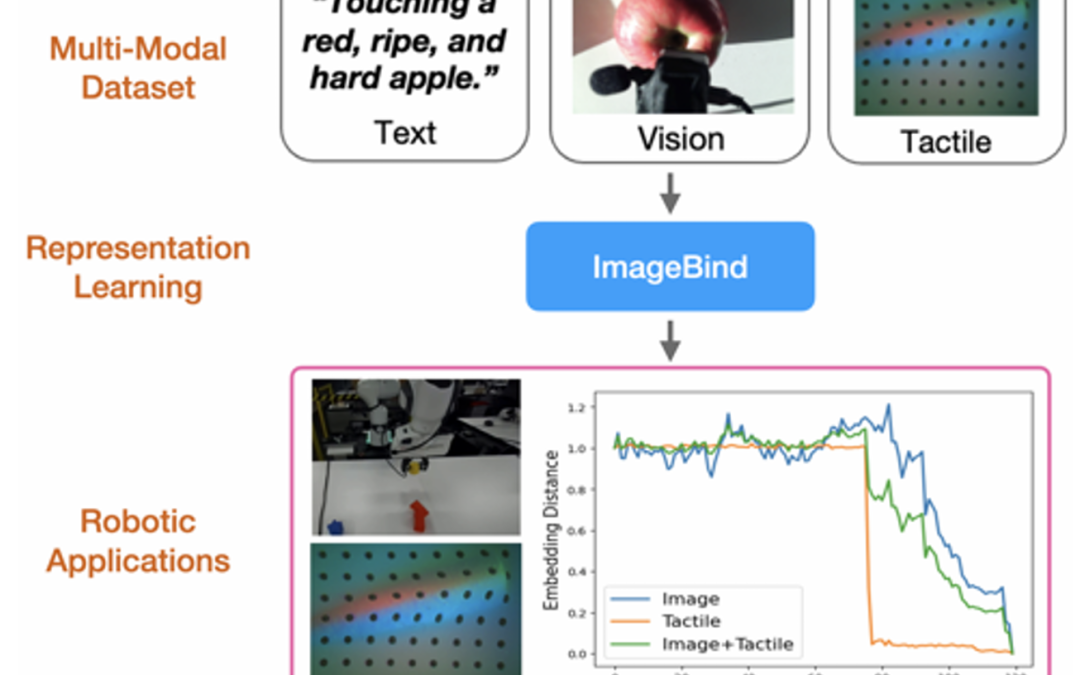

Advancements in embodied language models like PALM-E and RT-2 have significantly enhanced language-conditioned robotic manipulation. However, these advances remain predominantly focused on vision and language, often overlooking the pivotal role of tactile feedback...

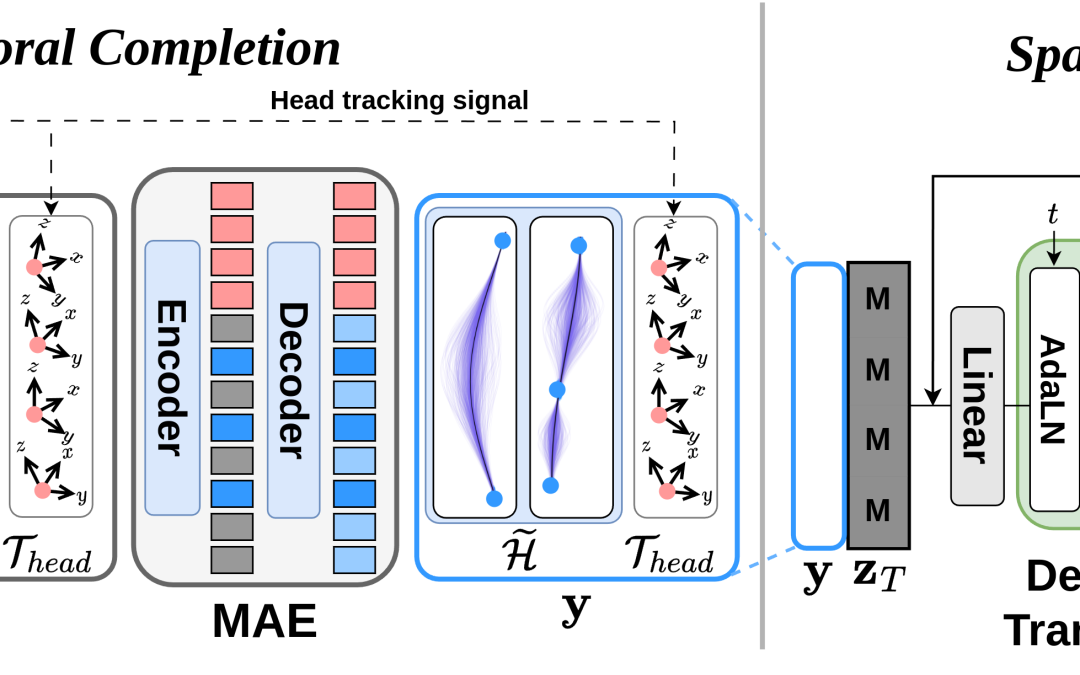

by Seunggeun Chi | Sep 27, 2024 | 2024, Karthik Ramani, Recent Publications, Seunggeun Chi

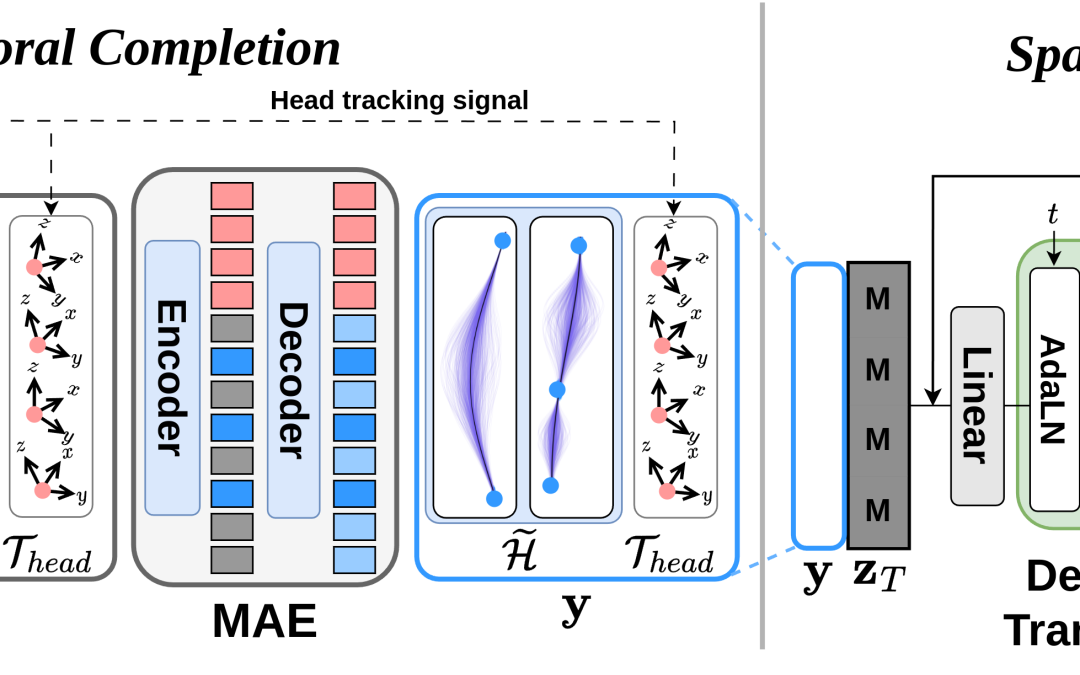

We study the problem of estimating the body movements of a camera wearer from egocentric videos. Current methods for ego-body pose estimation rely on temporally dense sensor data, such as IMU measurements from spatially sparse body parts like the head and hands....

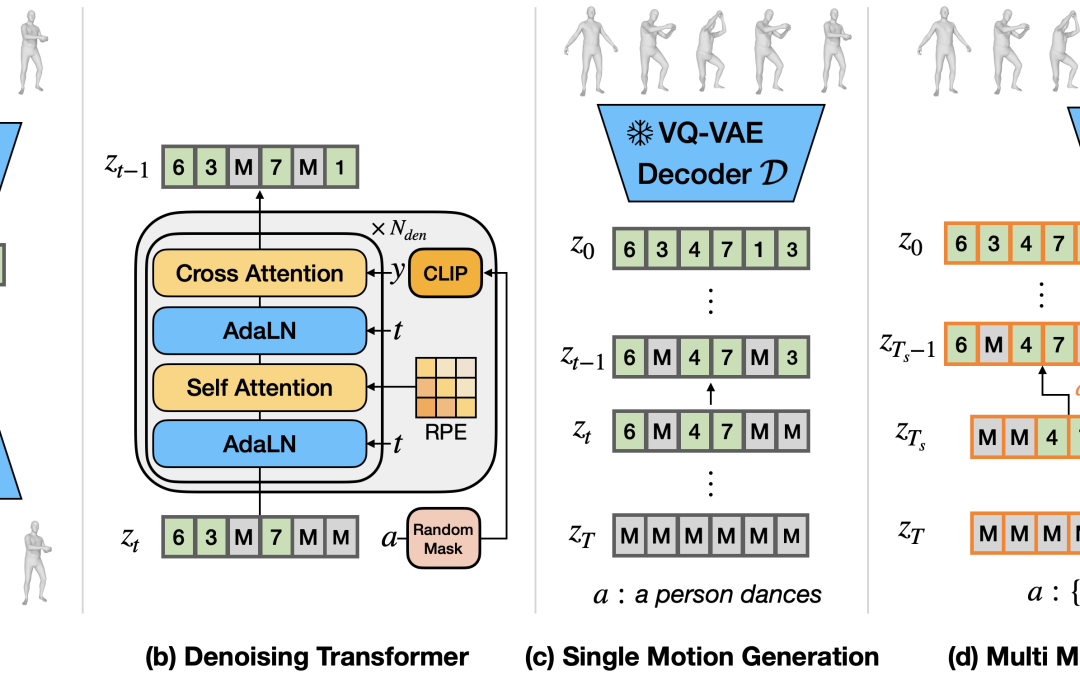

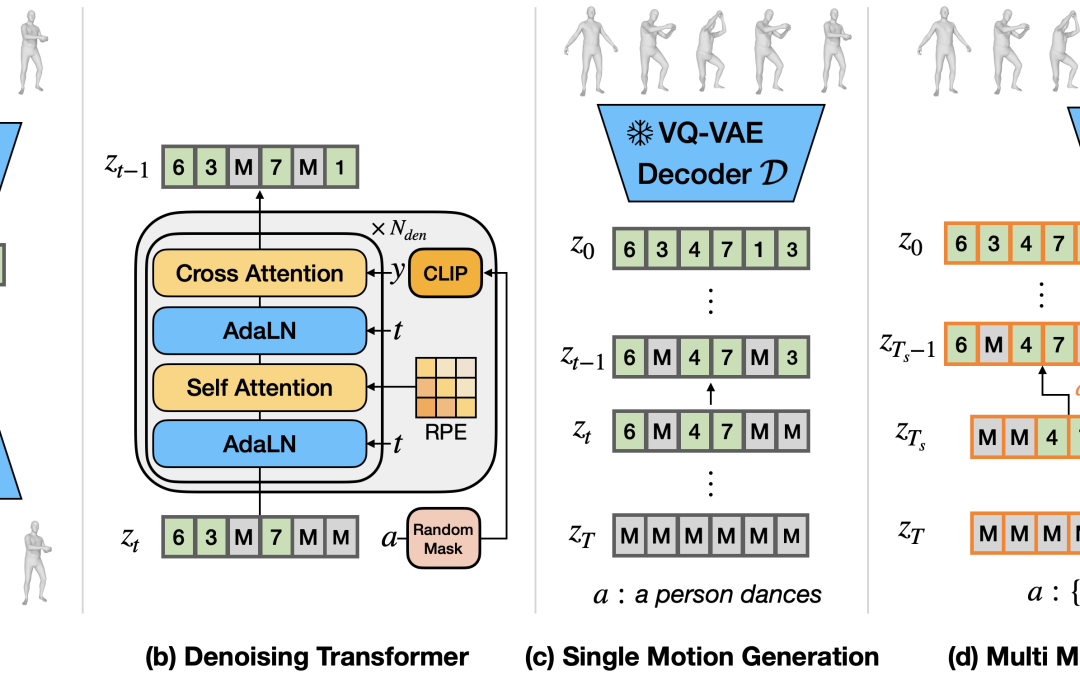

by Seunggeun Chi | Sep 26, 2024 | 2024, Featured Publications, Hyunggun Chi, Karthik Ramani, Karthik Ramani, Publications, Recent Publications, Seunggeun Chi

We introduce the Multi-Motion Discrete Diffusion Models (M2D2M), a novel approach for human motion generation from textual descriptions of multiple actions, utilizing the strengths of discrete diffusion models. This approach adeptly addresses the challenge of...