Edgar Rojas-Munoz's paper, "The AI-Medic: An Artificial Intelligent Mentor for Trauma Surgery," received the Best Paper Award. It was submitted to the 14th Augmented Environments for Computer Assisted Interventions workshop (AE-CAI), part of the 23rd International Conference on Medical Image Computing & Computer Assisted Intervention (MICCAI).

He is studying under Professor Juan Wachs in the Intelligent Systems and Assistive Technologies (ISAT) lab in industrial engineering.

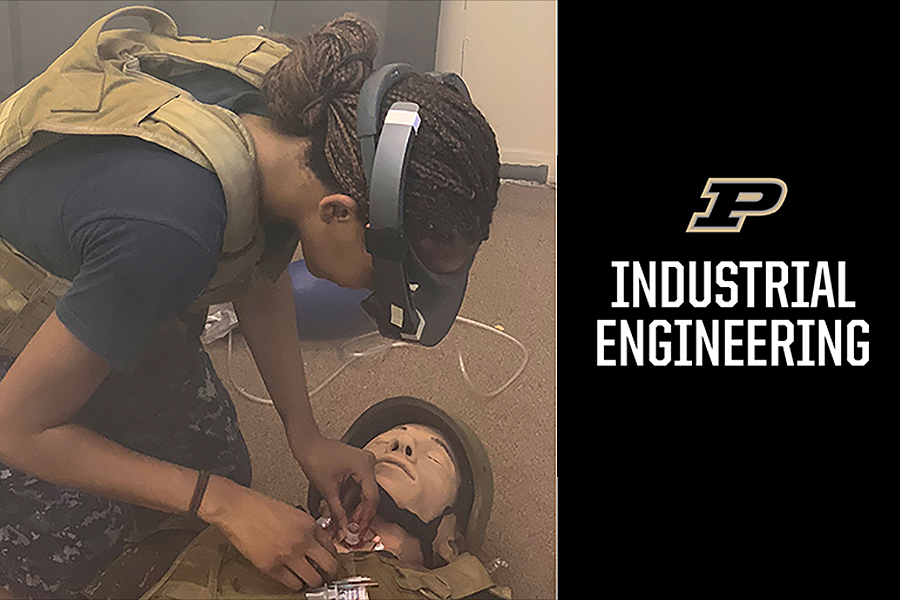

The technology, AI-Medic, has the potential to assist remote medical specialists when no expert is available to help. This can greatly improve how medical care is delivered in austere and rural regions. For instance, a rural medic can use our platform to review his or her medical knowledge during a surgery. In addition, the dataset compiled during this work can be a powerful baseline for future applications related to autonomous medical mentoring. Other researchers can use our dataset to train their own machine learning modules, which can have a positive impact on the field. Next steps for this research include improving the robustness of the approach via novel machine learning techniques (e.g., state-of-the-art deep learning modules). Moreover, the size and distribution of the dataset can be expanded by including more repetitions of each medical procedure. Both of these improvements will allow the AI-Medic to provide better medical guidance.

Congratulations, Edgar and Dr. Wachs!

Related Link: Wachs and Rojas-Munoz Demo VR for in-Field Medical Coaching


Abstract

Telementoring generalist surgeons as they treat patients can be essential when in situ expertise is not available. However, unreliable network conditions, poor infrastructure, and the limited availability of remote mentors can significantly hinder remote intervention. To guide medical practitioners when mentors are unavailable, we present the AI-Medic, the initial steps towards an intelligent artificial system for autonomous medical mentoring. A deep learning model is used to predict medical instructions from images of surgical procedures. The model was trained using the Dataset for AI Surgical Instruction (DAISI), a dataset of images and instructions that provide step-by-step demonstrations of surgical procedures. The dataset includes one repetition of 290 different procedures from 20 medical disciplines, for a total of 17,339 color images and their associated text descriptions. Both images and text descriptions were compiled with input from expert surgeons at five medical facilities and from academic textbooks. DAISI was used to train an encoder-decoder neural network to predict medical instructions given a view of the surgery. Afterwards, the predicted instructions were evaluated using cumulative BLEU scores and input from expert physicians. The evaluation was performed under two settings: with and without providing the model with prior information from test set procedures. According to the BLEU scores, the similarity between the predicted and ground truth instructions was as high as 86±1%. Additionally, expert physicians subjectively assessed the algorithm via Likert scale questions and considered the predicted descriptions to be related to the images. This work provides a baseline for AI algorithms to assist in autonomous medical mentoring.