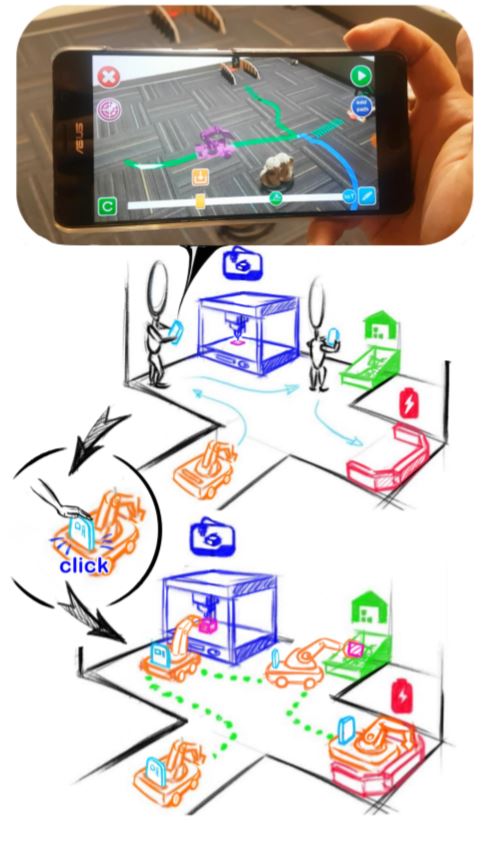

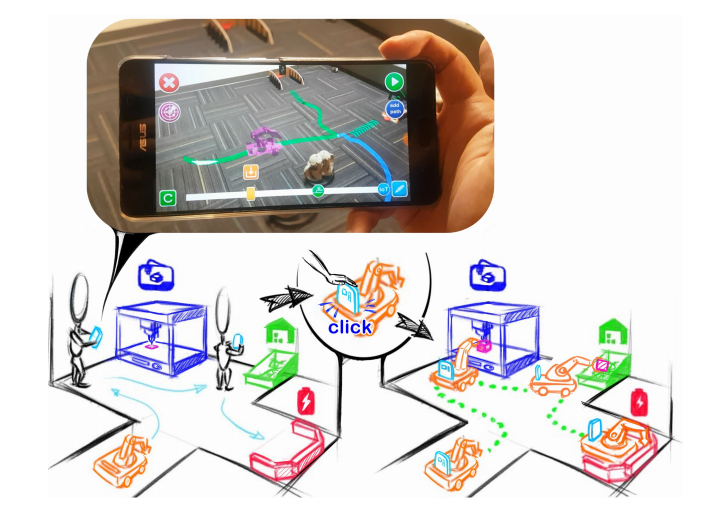

We present V.Ra, a visual and spatial programming system for robot-IoT task authoring. In V.Ra, programmable mobile robots serve as binding agents that link stationary IoT devices and perform collaborative tasks. We establish an ecosystem that coherently connects the three key elements of robot task planning (human, robot, and IoT) through a single AR-SLAM device. Users author tasks in an analogous manner through the Augmented Reality (AR) interface. Placing the device on the mobile robot then transfers the task plan directly, in a what-you-do-is-what-robot-does (WYDWRD) manner. The mobile device mediates the interactions among the user, the robot, and IoT-oriented tasks, and uses its SLAM capability to guide path planning and execution.