Purdue Collaboration Awarded NSF Grant

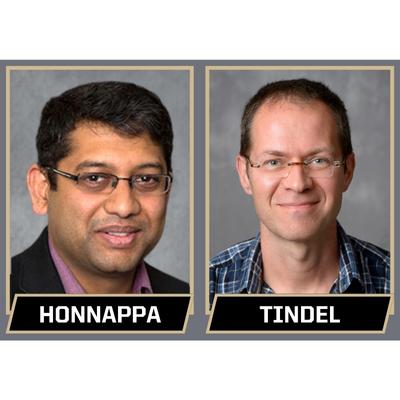

Purdue's Harsha Honnappa, professor of industrial engineering, and Samy Tindel, professor of mathematics, have teamed up with Prakash Chakraborty, associate professor in Penn State's Harold and Inge Marcus Department of Industrial and Manufacturing Engineering, to develop reinforcement learning (RL) methods for continuous-time and non-Markovian settings.

"Reinforcement learning (RL) methods have been embraced, in both academic and industrial settings, for solving a range of science and engineering problems involving dynamic system optimization. These problems run the gamut and include optimal resource allocation in, for example, ride-sharing, healthcare management and energy systems, pricing and trading risky assets in finance, autonomous vehicles and robots. RL is also increasingly being used in the physical sciences, for instance for discovering new materials and/or exploring the properties of known materials. Despite the growing importance and breadth of applications, RL methods are often found to perform poorly in actuality. RL methods have been primarily developed for discrete-time and so-called Markovian settings, while most real-world problems are better modeled in continuous time, with non-Markovian dynamics. The broad adoption of RL methods across science and engineering necessitates the investigation of how to develop RL methods for continuous-time and non-Markovian settings."

The project also includes provisions for national and international networking, mentoring of junior researchers and of graduate and undergraduate students, dissemination of discoveries, and interdisciplinary research.

Related link: Award & Abstract