- Josh (Zhao) got his work accepted into CVPR for the second year running. The work addresses the privacy of Federated Learning (FL) models, especially when they run on constrained devices such as embedded and mobile devices. The paper shows that the data leaked by our prior linear layer leakage attacks can be used for downstream training tasks. Training on the leaked data improves the accuracy of the trained model beyond vanilla FL training and, in some cases, almost reaches the holy grail of centralized training.

Ahaan and Atul, fellow DCSL researchers, helped Josh in this feat. Notably, one of the three reviewers moved from borderline to accept after the rebuttal and the ensuing discussion.

Joshua C. Zhao, Ahaan Dabholkar, Atul Sharma, and Saurabh Bagchi, “Leak and Learn: An Attacker’s Cookbook to Train Using Leaked Data from Federated Learning,” Accepted to the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1-10, 2024. (Acceptance rate: Not known yet)

- Taylor (Schorlemmer) led the work that was accepted into the IEEE Symposium on Security and Privacy (S&P, also known as Oakland). This was joint work with faculty members James (Taylor’s advisor) and Santiago. Four graduate researchers aided Taylor in this work: Kelechi, Luke, Myung, and Eman.

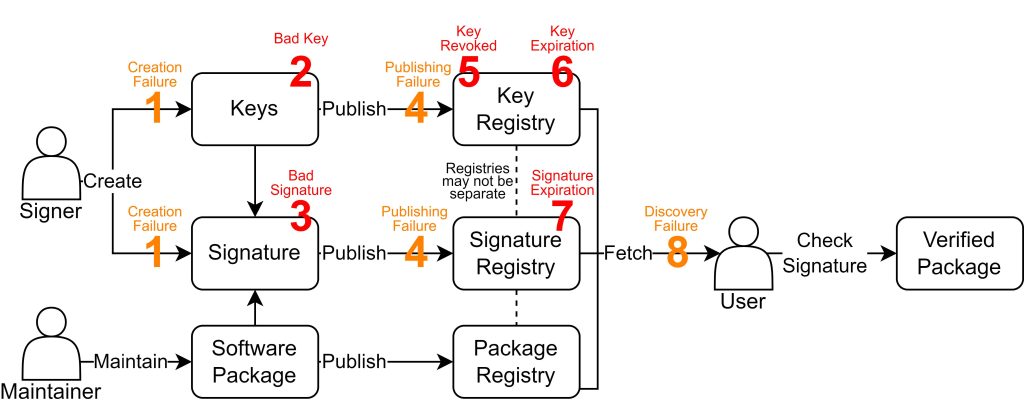

Many software applications incorporate open-source third-party packages distributed by third-party package registries. Guaranteeing authorship along this supply chain is a challenge. Package maintainers can guarantee package authorship through software signing. However, it is unclear how common this practice is and whether the resulting signatures are created properly. Our work is a systematic study that answers these questions. The study covers four package registries of three kinds: traditional software (Maven, PyPI), container images (DockerHub), and machine learning models (HuggingFace).

Taylor R. Schorlemmer, Kelechi G. Kalu, Luke Chigges, Kyung Myung Ko, Eman Abdul-Muhd Abu Ishgair, Saurabh Bagchi, Santiago Torres-Arias, and James C. Davis, “Signing in Four Public Software Package Registries: Quantity, Quality, and Influencing Factors,” Accepted to appear at the 45th IEEE Symposium on Security and Privacy (S&P), pp. 1-16, May 2024. (Acceptance rate: 261/1463 = 17.8%)