Facilities and Equipment at DCSL

Last updated: July 13, 2021

EMBEDDED AI TESTBED:

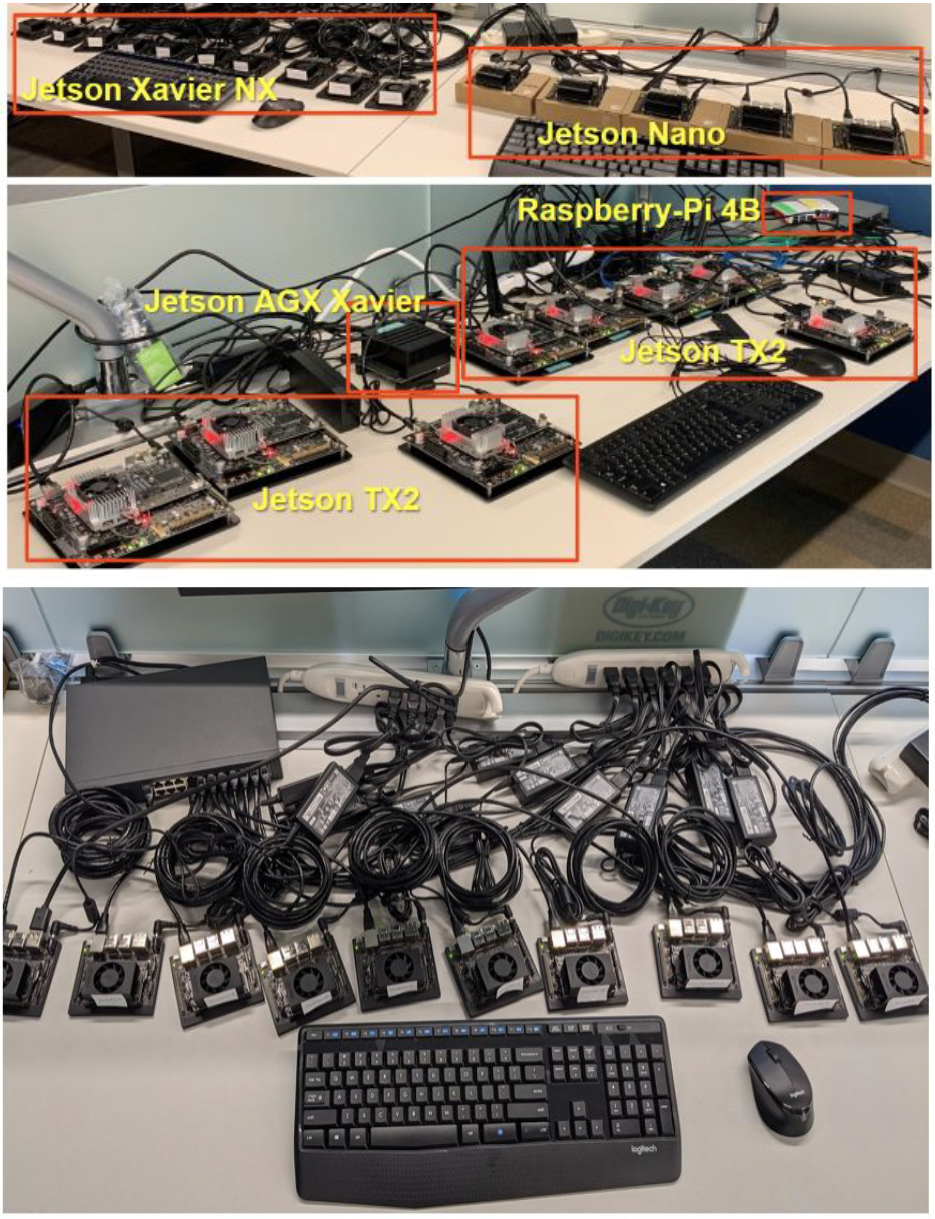

In DCSL, we maintain a leading-edge testbed of embedded devices with on-board GPUs. The testbed is heterogeneous and has four different classes of nodes (Jetson Nano, Jetson TX2, Jetson Xavier NX, and Jetson AGX Xavier). They are distributed across three locations on the Purdue campus, which adds another degree of heterogeneity. The testbed is set up for remote access and is routinely used by our external collaborators. The testbed devices in each subnet are connected to an external monitor and keyboard, allowing for facilities like macroprogramming and monitoring of the loads on the devices.

Embedded AI testbed site

GPU CLUSTERS:

In DCSL, we have two server clusters to perform computation-intensive tasks. For CPU-intensive tasks, like high-performance computing, we have multi-core machines with up to 48 CPUs. For GPU-intensive tasks, like training machine learning models, we have a small cluster of machines with GPU capability, each equipped with high-end NVIDIA P100 GPU cards. Apart from the in-house machines, our researchers regularly run their tasks on public cloud services, like Amazon AWS, Google Compute, and Microsoft Azure. We have an annual research award from Amazon which allows all our researchers to use AWS compute credits.

IOT TESTBEDS:

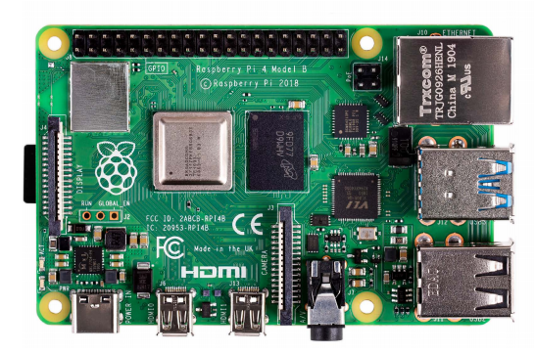

Researchers at DCSL have access to many lightweight, energy-saving IoT devices that are used to evaluate the algorithms we develop. The latest version of the Raspberry Pi, the Raspberry Pi 4 Model B, is available in the lab. Researchers at DCSL have used the Raspberry Pi as an edge device that collects data from sensors through the IoT network and analyzes the data on the edge, which can minimize the latency of data processing.

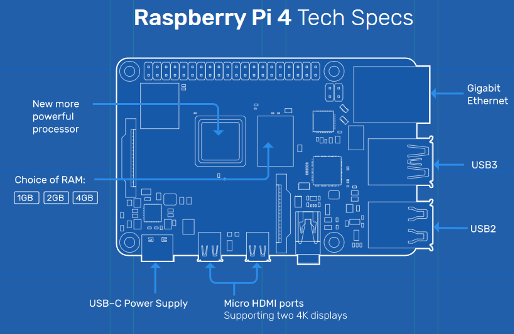

Figure 1. Hardware Architecture of Raspberry Pi 4 Model B [1]

In addition, DCSL offers a testbed of ARM development boards which allows us to build, test, and evaluate various protocols. Currently, the testbed is being used to evaluate the energy consumption of consensus protocols.

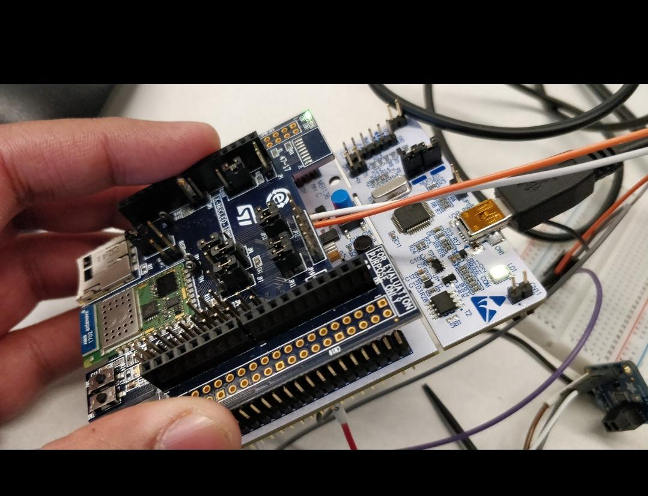

Figure 2. STM32F401RE with BLE Extension Board, used for our distributed embedded security testbed

Table 1. IoT Devices Used in the Testbeds at DCSL

| Vendor | Model | CPU / Memory | Additional Features |
| --- | --- | --- | --- |
| Raspberry Pi | Raspberry Pi 4 | 64-bit quad-core processor, 4GB of RAM | Dual-display support at resolutions up to 4K via a pair of micro-HDMI ports, Dual-band 2.4/5.0 GHz Wireless LAN, Bluetooth 5.0, Gigabit Ethernet, USB 3.0, and PoE capability |
| STM | NUCLEO-F401RE | ARM® 32-bit Cortex®-M4 CPU with FPU, 512 KB Flash, 96 KB SRAM | External BLE and WiFi Boards are available, Arduino Uno Revision 3 connectivity |
| STM | STM32F407VG | 32-bit ARM® Cortex®-M4 with FPU core, 1-Mbyte Flash memory, 192-Kbyte RAM | BLE extension available, LIS302DL or LIS3DSH ST MEMS 3-axis accelerometer |
| Saleae | LOGIC PRO 8 | | Sampling digital and analog signals from embedded boards, compatible with current sensor, TI-INA169 that can be used to measure energy. |

Figure 3. Raspberry Pi 4 Model B [2]

Reference:

[1] https://www.raspberrypi.org/products/raspberry-pi-4-model-b/specifications/

DIGITAL AG TESTBEDS:

Researchers at DCSL have access to edge devices such as the Jetson TX2 for implementing Deep Neural Networks (DNNs). NVIDIA describes the Jetson TX2 as the fastest, most power-efficient embedded AI computing device. This 7.5-watt supercomputer on a module brings true AI computing to the edge. It’s built around an NVIDIA Pascal™-family GPU and loaded with 8GB of memory and 59.7GB/s of memory bandwidth. It features a variety of standard hardware interfaces that make it easy to integrate into a wide range of products and form factors. [1]

The specifications of the TX2 are as follows:

- 2+4 CPUs (Dual-Core NVIDIA Denver 2 64-Bit CPU, Quad-Core ARM® Cortex®-A57 MPCore)

- 8GB RAM (128-bit LPDDR4 @ 1866 MHz | 59.7 GB/s)

- 256-core NVIDIA Pascal GPU, Ubuntu 18.04, CUDA 10.0.326 + tensorflow-gpu 1.14
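The 59.7 GB/s figure in the memory specification above follows from the bus width and clock rate. The sketch below reproduces that arithmetic, assuming LPDDR4 transfers data twice per clock cycle (double data rate) and that 1 GB means 10^9 bytes. The function name `peak_bandwidth_gb_s` is illustrative only.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, clock_mhz: float,
                        transfers_per_clock: int = 2) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes).

    transfers_per_clock defaults to 2 because LPDDR4 is double data rate.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Values taken from the TX2 specification list: 128-bit LPDDR4 @ 1866 MHz.
tx2_bandwidth = peak_bandwidth_gb_s(bus_width_bits=128, clock_mhz=1866)
print(f"{tx2_bandwidth:.1f} GB/s")  # prints "59.7 GB/s"
```

This is a theoretical peak; sustained bandwidth in practice is lower.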

Figure 4. Jetson TX2 [1]

Reference:

[1]: https://developer.nvidia.com/embedded/jetson-tx2

Prof. Bagchi leads the IoT and Data Analytics thrust of the $39M WHIN project (2018-23). We have IoT deployments in experimental farms as well as production farms around Purdue, plus a deployment on the Purdue campus. The farm deployments are measuring temperature, humidity, soil nutrient quality, etc., while the campus deployments are measuring temperature, barometric pressure, and humidity. These are a unique resource for us to experiment with our protocols at scale and under real-world conditions.

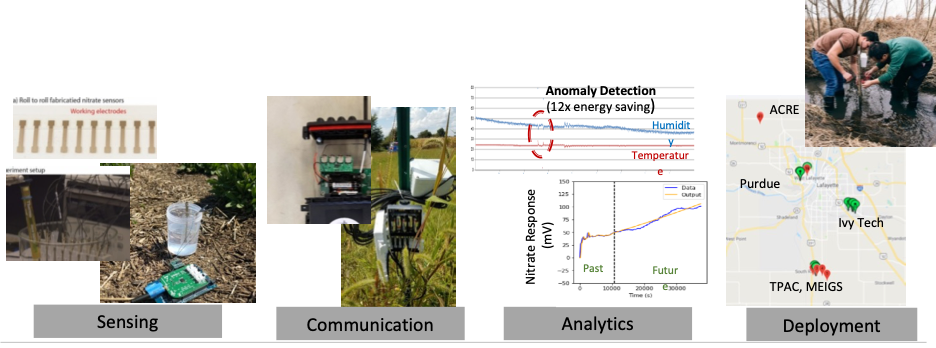

Figure 5. IoT workflow for our digital agriculture theme of the WHIN project

Sensing: Nitrate sensor scale-up manufacturing (+ phosphate, pesticides, microbial activity, …), soil moisture, temperature Decagon sensors.

Communication: Optimized long-range wireless networking using LoRa, optimized short-range wireless networking using BLE, mesh routing

Analytics: On-sensor such as threshold matching, on-edge device such as ARIMA, on-backend server such as LSTM

Deployment: Farms in Tippecanoe, Benton counties, including TPAC and ACRE
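As an illustration of the lightest analytics tier listed above, on-sensor threshold matching, here is a minimal sketch. It assumes a node forwards only the readings that fall outside a configured range. The `Reading` type, the `threshold_matches` helper, the sample values, and the limits are all hypothetical and do not describe the deployed firmware.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Reading:
    timestamp_s: float  # seconds since the start of sampling
    value: float        # sensor value, e.g. temperature in degrees Celsius


def threshold_matches(readings: Iterable[Reading],
                      low: float, high: float) -> List[Reading]:
    """Return only the readings that fall outside the range [low, high]."""
    return [r for r in readings if r.value < low or r.value > high]


# Hypothetical samples and limits, for illustration only.
samples = [
    Reading(0.0, 21.5),
    Reading(60.0, 22.0),
    Reading(120.0, 35.2),
    Reading(180.0, 4.1),
]
alerts = threshold_matches(samples, low=5.0, high=30.0)
for r in alerts:
    print(f"t={r.timestamp_s:.0f}s value={r.value}")
```

Filtering on the sensor in this way means only out-of-range readings need to be transmitted, which is one reason such checks suit low-power nodes.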

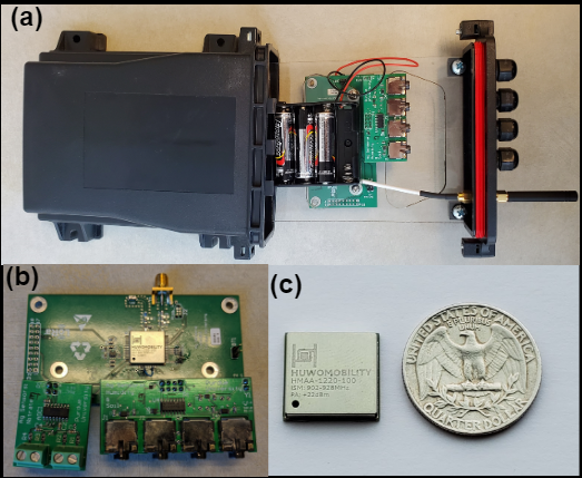

Figure 6. The hardware platform designed by us: (a) Motherboard PCB and battery holder with its packaging, (b) Motherboard PCB with two daughter boards, (c) the HMAA-1220 module

PURDUE CAMPUS-WIDE TESTBED:

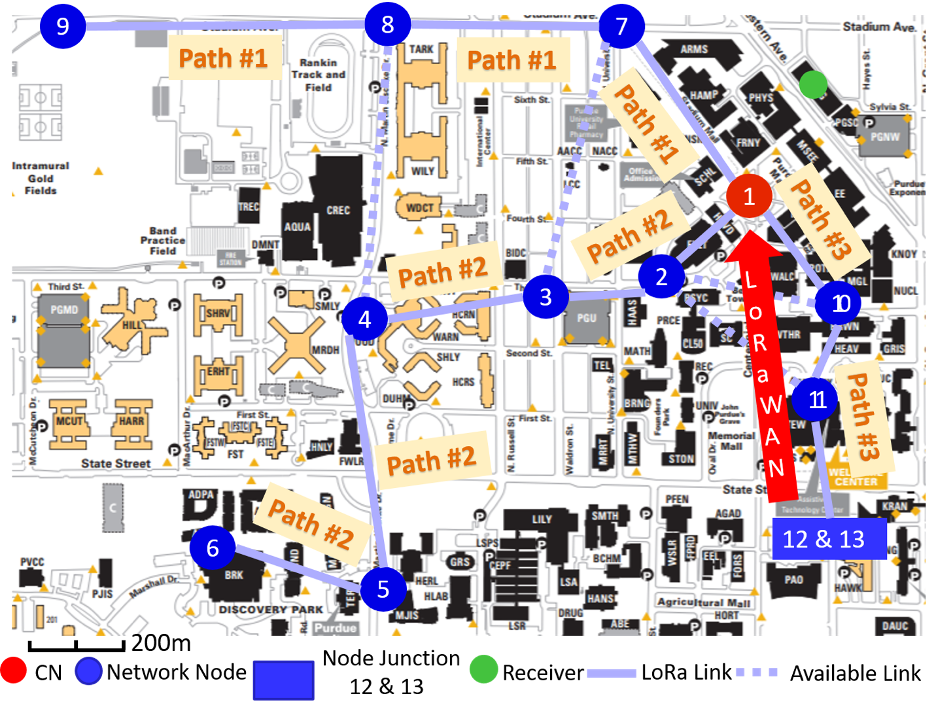

Figure 7. Campus-wide live experimentation testbed of a wireless mesh network

In the campus-scale deployment, we placed 13 LoRa nodes, distributed over a 1.1 km by 1.8 km area of the Purdue University campus. All 13 nodes are deployed and are operated continuously whenever experimentation is needed. We have received authorization from campus police for this deployment, and there is signage at all the nodes to indicate that they are for research. Figure 7 shows the complete map of our campus-scale experiment, where a complete mesh network is established. Each blue dot represents a network node; the nodes are randomly and evenly distributed across the entire Purdue campus. The red node represents the location of the center node. The green dot represents the receiver, a laptop connected to an SX1272DVK1CAS (LoRa development kit) from Semtech. Each solid line represents an actual LoRa link in the deployed network, and each dotted line represents an available LoRa link that was not being used. The node junction represents two nodes (node 12 and node 13) that were deliberately deployed at close range and were communicating via ANT instead of LoRa. In addition, the three highlighted paths represent three distinct data flows formed by the mesh network. For instance, path number 1 represents the path 9 → 8 → 7 → 1. All nodes (including the center node) are located 1 meter above ground level, and the receiver is placed on the 3rd floor of an office building, facing south-east. The furthest node is placed 1.5 km away from the receiver, with more than 18 buildings in between.

ADVANCED MANUFACTURING TESTBEDS:

Researchers at DCSL have access to piezoelectric sensors and MEMS sensors that measure changes in pressure, acceleration, temperature, and strain. The sensors vary in sampling rate (10 Hz with the MEMS sensor, 3.2 kHz with the piezoelectric sensor). DCSL has been conducting experiments with a motor testbed (shown in Figure 8) to collect machine condition data (i.e., acceleration) for different health conditions. This data is currently being used for defect-type classification and transfer learning tasks in our research work.
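The two sampling rates quoted above bound the vibration frequencies each sensor can capture. By the Nyquist criterion, a signal sampled at rate f_s can only represent frequency content below f_s / 2. The short sketch below works this out for both sensors; the dictionary keys and the helper name `nyquist_hz` are illustrative only.

```python
def nyquist_hz(sampling_rate_hz: float) -> float:
    """Highest frequency representable without aliasing at this sampling rate."""
    return sampling_rate_hz / 2.0


# Sampling rates as stated in the text: 10 Hz (MEMS), 3.2 kHz (piezoelectric).
sampling_rates_hz = {"MEMS": 10.0, "piezoelectric": 3200.0}
limits = {name: nyquist_hz(rate) for name, rate in sampling_rates_hz.items()}

for name, limit in limits.items():
    print(f"{name}: content below {limit:g} Hz can be captured")
```

Under these rates, the MEMS sensor covers content below 5 Hz and the piezoelectric sensor below 1.6 kHz, so the two are complementary rather than interchangeable.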

Figure 8. A motor testbed equipped with sensors for measuring vibration at all frequencies

Figure 9. Piezoelectric and MEMS sensors mounted on the motor testbed

WEARABLE DEVICES:

The laboratory is equipped with a variety of high-end mobile and wearable devices, running Android and Wear OS, respectively. These devices help us in our ongoing mission to improve the overall reliability of mobile and wearable platforms. Table 2 and Table 3 provide details on the mobile and wearable device models available in the lab.

Table 2. Smartphone Models.

| Vendor | Model | CPU / Memory | Sensors |
| --- | --- | --- | --- |
| Huawei | Nexus 6P | Snapdragon 810, 3GB RAM, 32 GB storage | Fingerprint (rear-mounted), accelerometer, gyro, proximity, compass, barometer. |
| LG | Nexus 5X | Snapdragon 808, 2GB RAM, 32 GB storage | Fingerprint (rear-mounted), accelerometer, gyro, proximity, compass, barometer. |
| Motorola | G6 | Snapdragon 450, 3GB RAM, 32 GB storage | Fingerprint (front-mounted), accelerometer, gyro, proximity, compass. |

Table 3. Wearable Device Models.

| Vendor | Model | CPU / Memory | Sensors |
| --- | --- | --- | --- |
| Motorola | Moto 360 | Quad-core 1.2 GHz Cortex-A7, 4GB | Accelerometer, gyro, heart rate |
| Huawei | Watch 2 | Quad-core 1.1 GHz Cortex-A7, 4GB | Accelerometer, gyro, heart rate, barometer, compass |
| Fossil | Sport Smartwatch | Snapdragon Wear 3100 SoC, 4GB | Accelerometer, gyro, heart rate, light, compass/magnetometer, vibration/haptics |