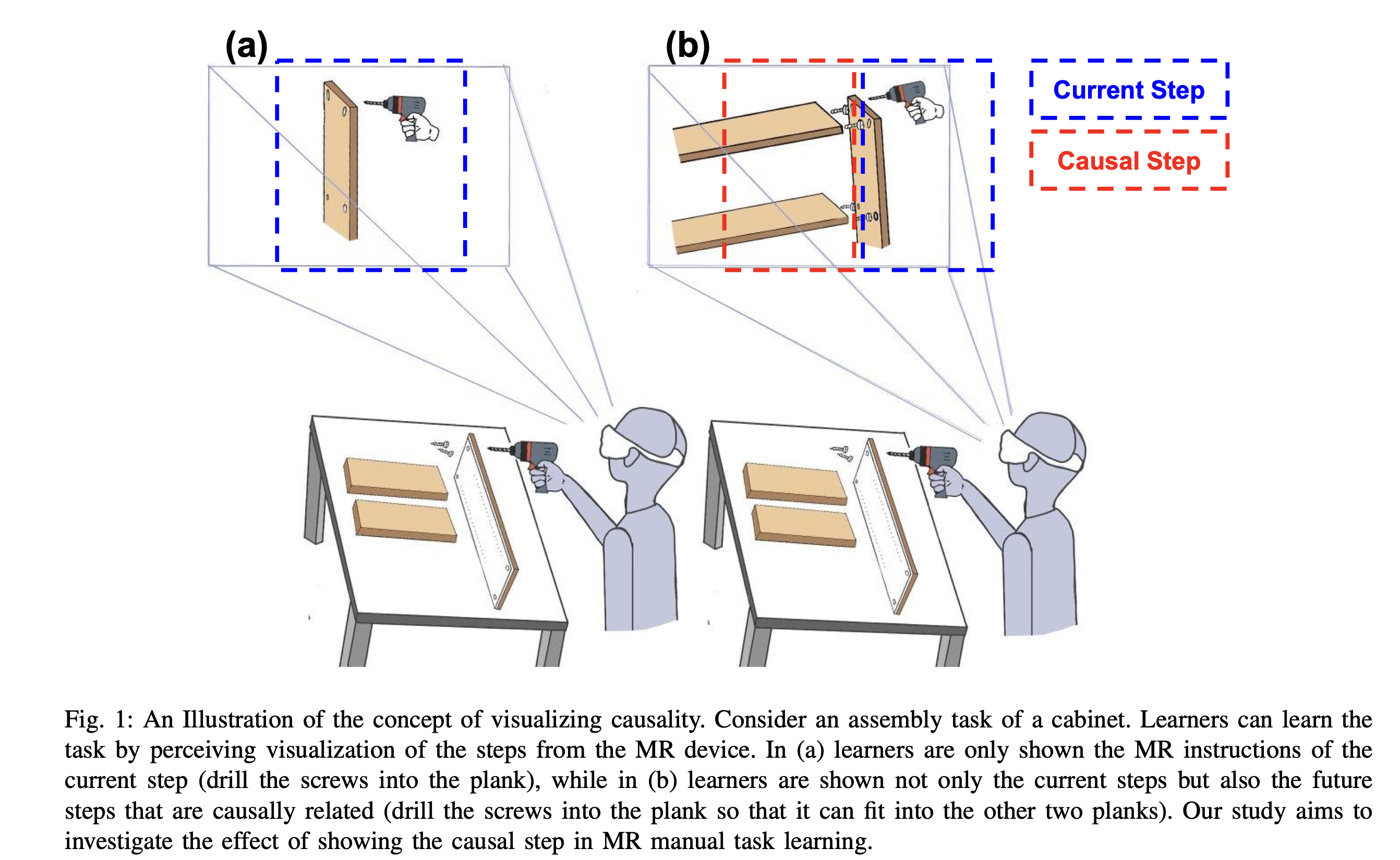

Mixed Reality (MR) is gaining prominence in manual task skill learning because it offers an in-situ, embodied, and immersive experience. To teach manual tasks, current methods break a task into a hierarchy of subtasks and visualize not only the current subtask but also the future subtasks that are causally related to it. We investigate how visualizing this causality within an MR framework affects manual task skill learning. We conducted a user study with 48 participants to examine how presenting tasks at different hierarchical causality levels (no causality, event-level, interaction-level, and gesture-level causality) affects user comprehension and performance in a complex assembly task. We find that displaying all causality levels improves user understanding and task execution, at some cost in learning time. Based on these results, we provide design recommendations and an in-depth discussion for future manual task learning systems.

Visualizing Causality in Mixed Reality for Manual Task Learning: A Study

Authors: Rahul Jain*, Jingyu Shi*, Andrew Benton, Moiz Rasheed, Hyungjun Doh, Subramanian Chidambaram, and Karthik Ramani

IEEE Transactions on Visualization and Computer Graphics

10.1109/TVCG.2025.3542949

Rahul Jain

Rahul Jain has been a Ph.D. student in the School of Electrical and Computer Engineering at Purdue University since Spring 2022. He conducts research in Professor Karthik Ramani’s Convergence Design Lab. He received his Master’s degree in Electrical and Computer Engineering from Purdue University and his Bachelor’s degree in Civil Engineering from the Indian Institute of Technology (IIT) Patna. His current research focuses on computer vision, machine learning, and human-computer interaction using AR/VR.