Understanding the intent behind human gestures is a critical problem in the design of gestural interactions. A common method for observing and understanding how users express gestures is the elicitation study. However, these studies require time-consuming analysis of user data to identify gesture patterns. Moreover, manual analysis cannot describe gestures in as much detail as data-based representations of motion features. In this paper, we present GestureAnalyzer, a system that supports exploratory analysis of gesture patterns by applying interactive clustering and visualization techniques to motion tracking data. GestureAnalyzer enables rapid categorization of similar gestures and visual investigation of various geometric and kinematic properties of user gestures. We describe the system components and then demonstrate the system's utility through a case study on mid-air hand gestures obtained from elicitation studies.

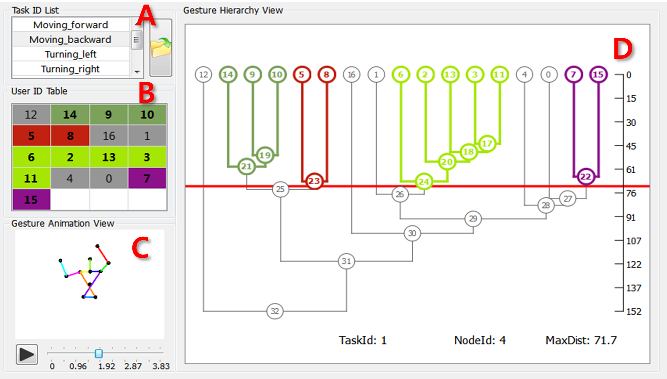

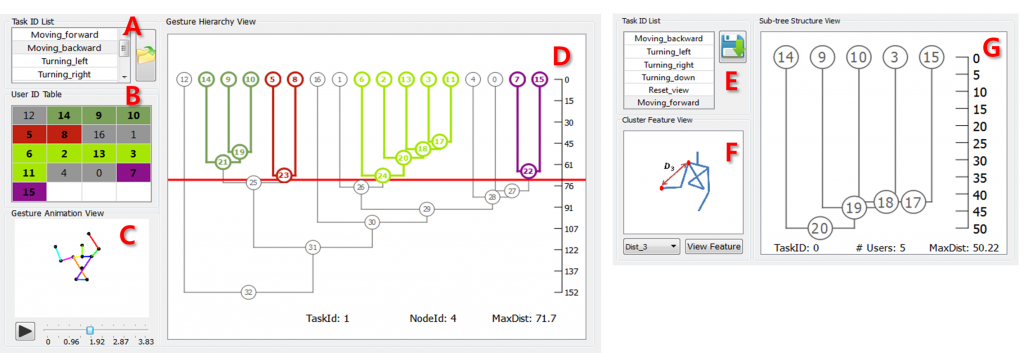

The GestureAnalyzer interface. (A) is a list of tasks loaded from the database. (B) shows a table of user IDs. (C) shows the animation of user gestures. (D) is a panel that shows the interactive hierarchical clustering of gesture data; information about the currently selected task and cluster node is given at the bottom. (E) is a list of output clusters generated from the interactive hierarchical clustering. (F) provides a visual definition of a gesture feature. (G) shows a tree diagram of gesture clusters.
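
To make the idea of hierarchically clustering motion tracking data concrete, the following minimal sketch groups gestures by trajectory similarity. It is an illustration under stated assumptions, not the GestureAnalyzer implementation: the distance measure (mean per-frame Euclidean distance), the average-linkage rule, the `cutoff` parameter, the function names, and the sample data are all hypothetical and are not taken from the system described here. The fixed `cutoff` stands in loosely for the point at which an analyst would stop merging clusters interactively.

```python
import math
from itertools import combinations


def trajectory_distance(a, b):
    """Mean per-frame Euclidean distance between two equal-length trajectories."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def agglomerative_clusters(gestures, cutoff):
    """Merge the closest clusters (average linkage) until none is within `cutoff`."""
    clusters = [[name] for name in gestures]

    def linkage(c1, c2):
        # Average pairwise trajectory distance between members of two clusters.
        total = sum(
            trajectory_distance(gestures[u], gestures[v]) for u in c1 for v in c2
        )
        return total / (len(c1) * len(c2))

    while len(clusters) > 1:
        # Find the pair of clusters with the smallest linkage distance.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        if linkage(clusters[i], clusters[j]) > cutoff:
            break  # remaining clusters are too dissimilar to merge
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return [sorted(c) for c in clusters]


# Hypothetical hand positions (x, y, z) over three frames for three users.
gestures = {
    "user1": [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0)],    # moves along x
    "user2": [(0.0, 0.0, 0.0), (0.1, 0.01, 0.0), (0.2, 0.02, 0.0)],  # moves along x
    "user3": [(0.0, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, 0.2, 0.0)],    # moves along y
}

clusters = agglomerative_clusters(gestures, cutoff=0.05)
print(sorted(clusters))
```

In this toy data, the two gestures that move along the x axis end up in one cluster and the gesture that moves along the y axis stays separate. A real analysis would also need to handle trajectories of different lengths and multiple tracked joints, which this sketch deliberately leaves out.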