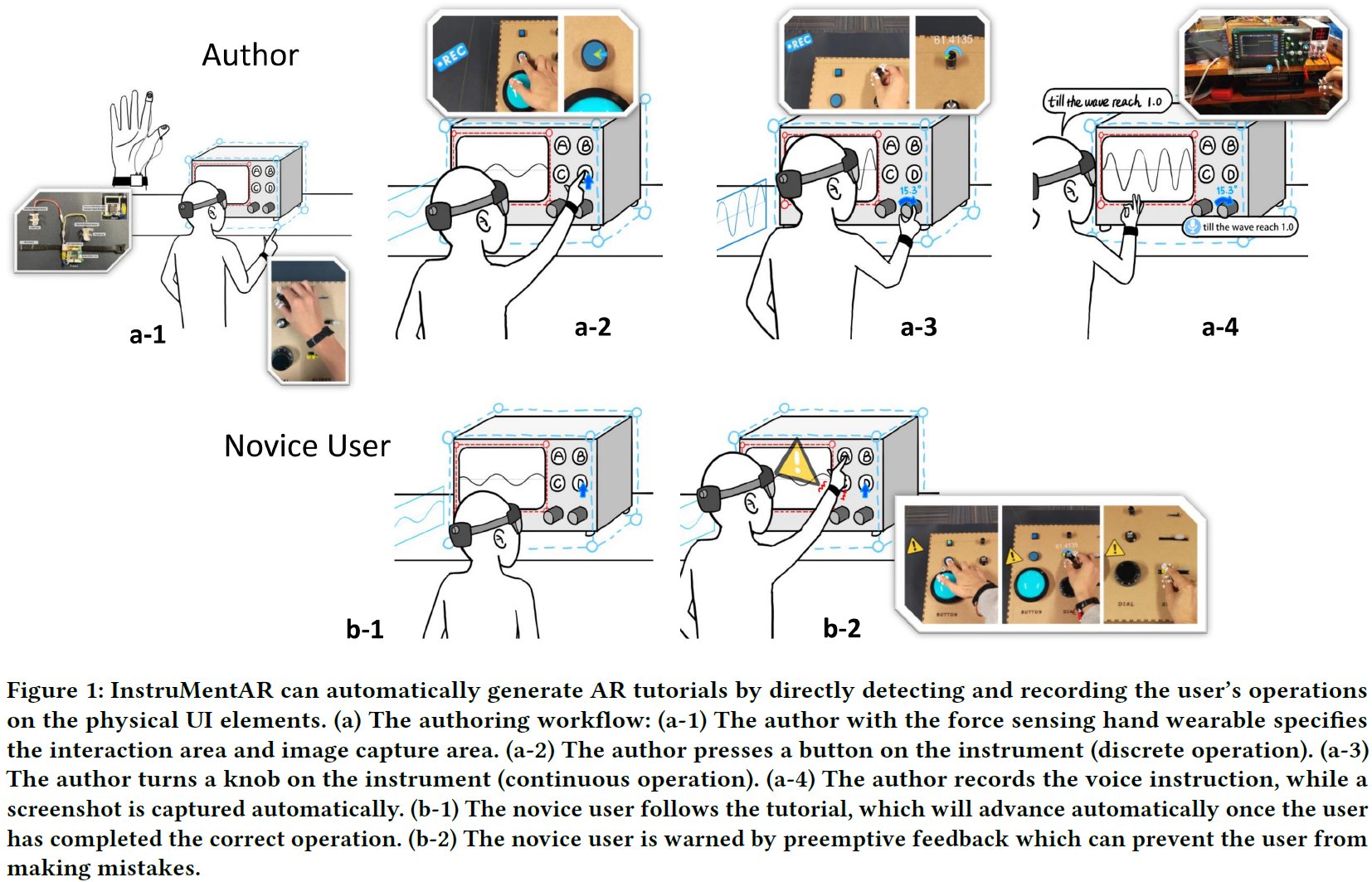

Augmented Reality (AR) tutorials, which provide necessary context by directly superimposing visual guidance on the physical referent, are an effective way of scaffolding complex instrument operations. However, current AR tutorial authoring processes are not seamless, as they require users to continuously alternate between operating instruments and interacting with virtual elements. We present InstruMentAR, a system that automatically generates AR tutorials by recording user demonstrations. We design a multimodal approach that fuses gestural information and hand-worn pressure sensor data to detect and register the user’s step-by-step manipulations on the control panel. With this information, the system autonomously generates virtual cues at the appropriate scale and places them at the corresponding location for each step. Voice recognition and background capture are employed to automate the creation of text and images as AR content. For novice users receiving the authored AR tutorials, we provide immediate feedback through haptic modules. We compared InstruMentAR with traditional systems in a user study.