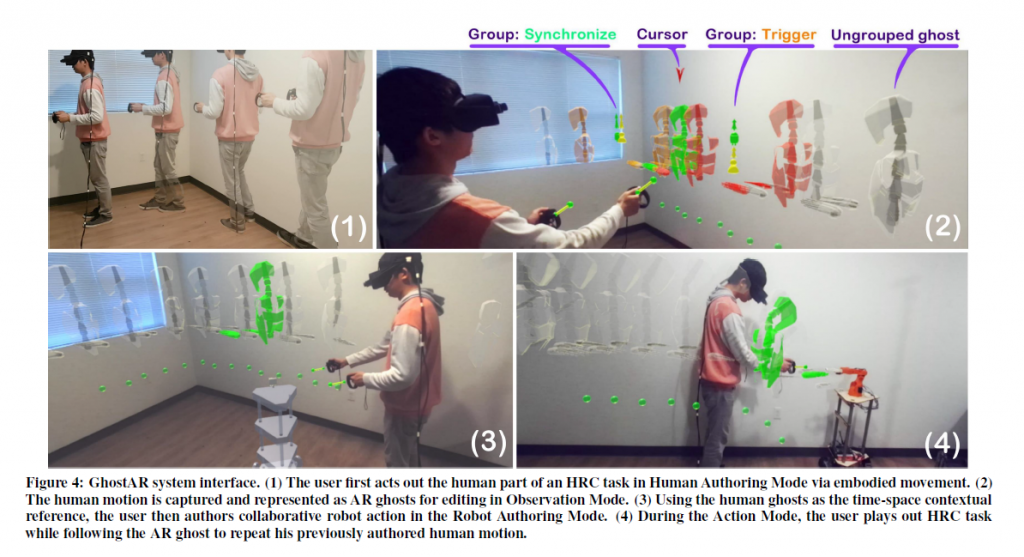

We present GhostAR, a time-space editor for authoring and acting out Human-Robot Collaborative (HRC) tasks in situ. Our system adopts an embodied authoring approach in Augmented Reality (AR) for spatially editing actions and programming robots through demonstrative role-playing. We propose a novel HRC workflow that externalizes the user's authoring as demonstrative and editable AR ghosts, allowing for spatially situated visual referencing, realistic animated simulation, and collaborative action guidance. We develop a collaboration model based on dynamic time warping (DTW) that takes real-time captured motion as input, maps it to the previously authored human actions, and outputs the corresponding robot actions to achieve adaptive collaboration. We emphasize in-situ authoring and rapid iteration of joint plans without an offline training process. Further, we demonstrate and evaluate the effectiveness of our workflow through HRC use cases and a three-session user study.
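To make the DTW-based mapping concrete, here is a minimal sketch of the general idea under stated assumptions. It is not GhostAR's actual model. It assumes that each authored human action is stored as a sequence of 3D positions paired with a robot action label, and that a live motion window is matched to the nearest authored action by DTW distance, with a rejection threshold. The names `dtw_distance`, `match_robot_action`, `AUTHORED`, and the robot action strings are hypothetical and exist only for illustration.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical representation: one 3D hand/body position per captured frame.
Point = Tuple[float, float, float]


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Classic O(n*m) dynamic time warping with Euclidean per-frame cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def match_robot_action(
    live: Sequence[Point],
    authored: Dict[str, Tuple[List[Point], str]],
    threshold: float,
) -> Optional[str]:
    """Return the robot action paired with the closest authored human action,
    or None if no authored action is within `threshold` (assumed rejection rule)."""
    best_name, best_dist = None, float("inf")
    for name, (template, _robot_action) in authored.items():
        d = dtw_distance(live, template)
        if d < best_dist:
            best_name, best_dist = name, d
    if best_name is None or best_dist > threshold:
        return None
    return authored[best_name][1]


# Hypothetical authored demonstrations: human motion template -> robot action.
AUTHORED: Dict[str, Tuple[List[Point], str]] = {
    "reach_left": ([(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0)], "robot_hand_over_part"),
    "reach_right": ([(0.0, 0.0, 0.0), (-0.1, 0.0, 0.0), (-0.2, 0.0, 0.0)], "robot_hold_fixture"),
}

# A live window captured at a different speed than the authored template.
live_window: List[Point] = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0)]

print(match_robot_action(live_window, AUTHORED, threshold=1.0))
```

DTW is used here because a live demonstration rarely matches the authored one frame for frame: the four-frame live window above still aligns with the three-frame `reach_left` template at low cost, whereas a plain frame-wise comparison would require equal lengths.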

GhostAR: A Time-space Editor for Embodied Authoring of Human-Robot Collaborative Task with Augmented Reality

Authors: Yuanzhi Cao*, Tianyi Wang*, Xun Qian, Pawan S. Rao, Manav Wadhawan, Ke Huo, Karthik Ramani

Proceedings of the 32nd Annual Symposium on User Interface Software and Technology. ACM, 2019.

https://doi.org/10.1145/3332165.3347902

Yuanzhi Cao

As a senior Ph.D. student and a researcher in Human-Computer Interaction (HCI), I specialize in designing interactive systems that provide novel Augmented Reality (AR) user experiences for smart-thing applications such as machines, robots, and IoT devices.