Pattern analysis of human motions, which is useful in many research areas, requires understanding and comparing different styles of motion patterns. However, working with human motion tracking data to support such analysis poses significant challenges. In this paper, we propose MotionFlow, a visual analytics system that provides an effective overview of various motion patterns based on an interactive flow visualization. This visualization formulates a motion sequence as transitions between static poses and aggregates these sequences into a tree diagram to construct a set of motion patterns. The system also lets users directly reflect the context of the data and their own perception of pose similarity when generating representative pose states, through local and global controls over the partition-based clustering process. To support users in organizing unstructured motion data into pattern groups, we designed a set of interactions that enable searching the data for similar motion sequences, exploring data subsets in detail, and creating and modifying groups of motion patterns. To evaluate the usability of MotionFlow, we conducted a user study with six researchers with expertise in gesture-based interaction design, who used MotionFlow to explore and organize unstructured motion tracking data. The results show that the researchers learned to use MotionFlow easily and that the system effectively supported their pattern analysis activities, including leveraging their perception and domain knowledge.
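The idea of treating a motion as transitions between static poses and aggregating many motions into a tree can be illustrated with a minimal Python sketch. It rests on several assumptions not stated in the abstract: each frame is a numeric pose vector, the representative pose states are already available as centroids (for example, from a partition-based method such as k-means), each frame is labeled with its nearest centroid, and consecutive repeated labels are collapsed so that only transitions remain. The resulting state sequences are merged into a prefix tree whose node counts record how many sequences share each path. The names `PoseNode`, `nearest_state`, `to_state_sequence`, and `build_pose_tree` are hypothetical and do not come from MotionFlow itself.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence

Pose = Sequence[float]  # one frame: a flattened joint-position vector (assumed)


@dataclass
class PoseNode:
    """A node in the (hypothetical) pose tree; `count` = sequences passing through it."""
    state: Optional[int]
    count: int = 0
    children: Dict[int, "PoseNode"] = field(default_factory=dict)


def nearest_state(pose: Pose, centroids: List[Pose]) -> int:
    """Index of the closest representative pose (Euclidean distance, assumed)."""
    return min(range(len(centroids)), key=lambda k: math.dist(pose, centroids[k]))


def to_state_sequence(frames: List[Pose], centroids: List[Pose]) -> List[int]:
    """Label each frame with a pose state and keep only the state changes."""
    states: List[int] = []
    for pose in frames:
        s = nearest_state(pose, centroids)
        if not states or states[-1] != s:
            states.append(s)
    return states


def build_pose_tree(sequences: List[List[int]]) -> PoseNode:
    """Merge state sequences into a prefix tree so shared transitions aggregate."""
    root = PoseNode(state=None)
    for seq in sequences:
        root.count += 1
        node = root
        for s in seq:
            child = node.children.get(s)
            if child is None:
                child = PoseNode(state=s)
                node.children[s] = child
            child.count += 1
            node = child
    return root


# Toy data: three 2-D "poses" as centroids and three short motions.
centroids = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
motions = [
    [(0.1, 0.0), (0.0, 0.1), (0.9, 1.1), (2.1, 0.1)],
    [(0.0, 0.0), (1.1, 0.9), (1.0, 1.0)],
    [(2.0, 0.1), (1.0, 0.9)],
]
sequences = [to_state_sequence(m, centroids) for m in motions]
print(sequences)  # [[0, 1, 2], [0, 1], [2, 1]]
tree = build_pose_tree(sequences)
```

In this toy run, the first two motions share the prefix 0 → 1, so that branch of the tree carries a count of 2, which is the kind of aggregation a flow visualization can encode as edge thickness. A prefix tree is only one plausible structure for this; the actual system may aggregate differently.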

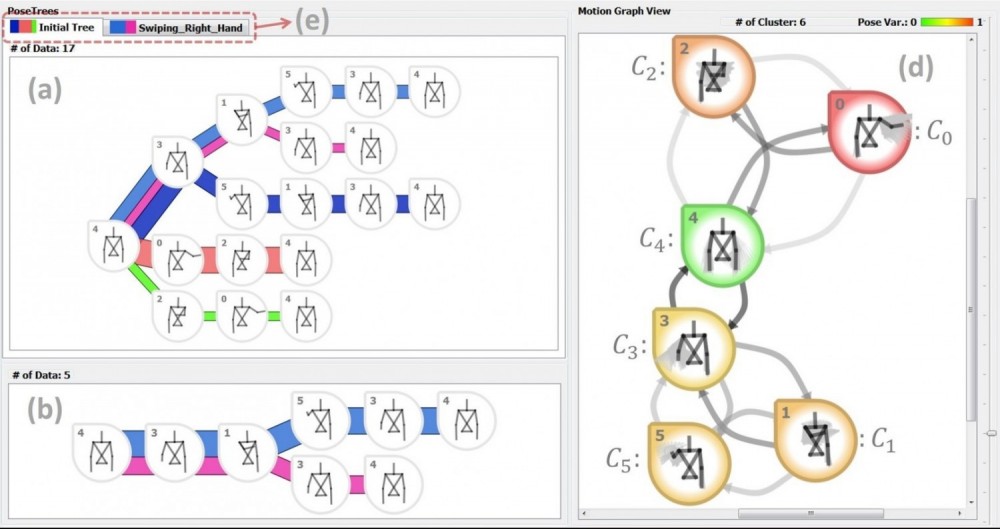

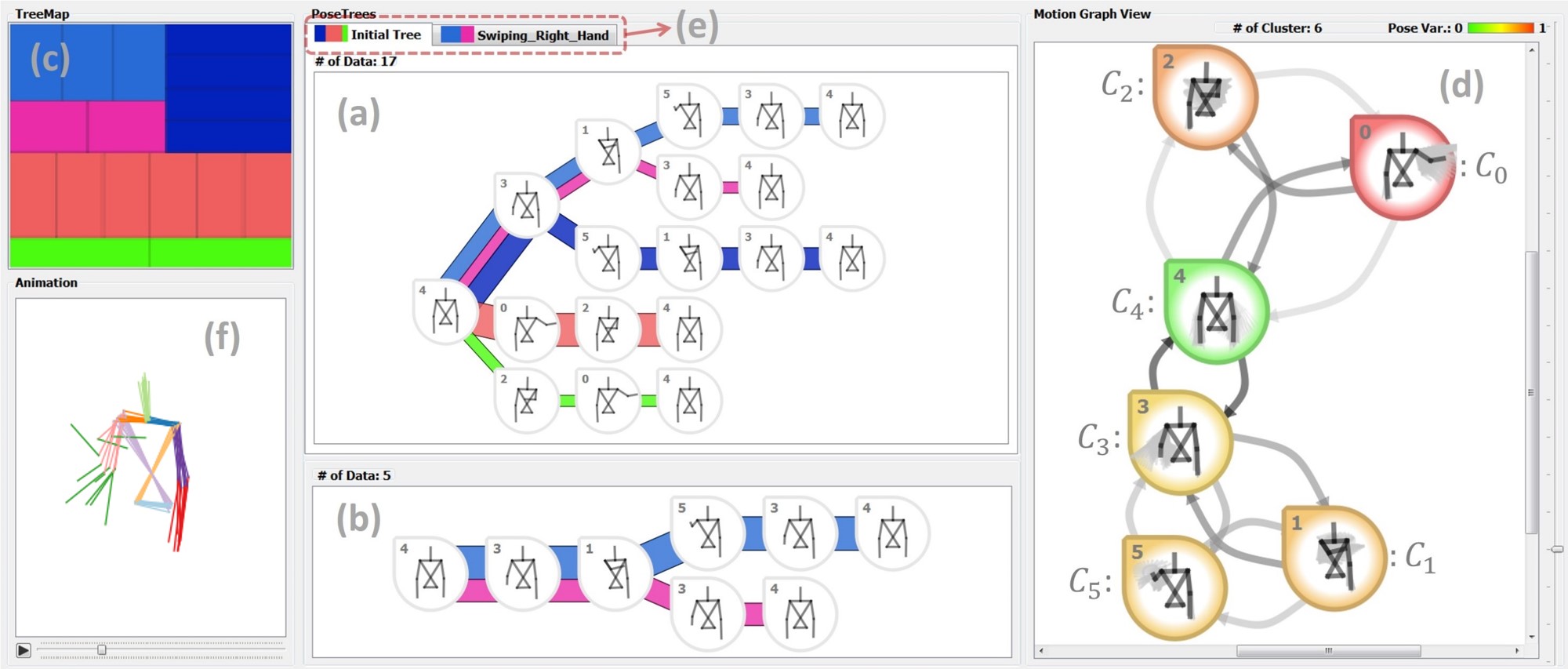

Screen capture of MotionFlow for pattern analysis of human motion data. (a) Pose tree: a simplified representation of multiple motion sequences that aggregates identical transitions into a tree diagram. (b) A window dedicated to showing a subtree structure based on a query. (c) Space-filling treemap representation of the motion sequence data using a slice-and-dice layout. (d) Node-link diagram of pose clusters (nodes) and transitions (links) between them. This view supports interactive partition-based pose clustering. (e) Multi-tab interface for storing unique motion patterns. (f) Animations of one or more selected human motions.
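Panel (c) uses a slice-and-dice treemap, a layout that subdivides a parent rectangle among its children and alternates the split direction at each tree level. The sketch below is a minimal illustration under stated assumptions: node weights stand for something like sequence counts, `TreeNode` and `slice_and_dice` are hypothetical names, and children fill their parent's rectangle in proportion to their weights (no space is reserved for sequences that end at the parent itself).

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Rect = Tuple[float, float, float, float]  # (x, y, width, height)


@dataclass
class TreeNode:
    name: str
    weight: float  # e.g., number of motion sequences through this node (assumed)
    children: List["TreeNode"] = field(default_factory=list)


def slice_and_dice(node: TreeNode, rect: Rect, depth: int = 0,
                   out: Optional[List[Tuple[str, Rect]]] = None) -> List[Tuple[str, Rect]]:
    """Return (name, rect) for every node; even depths split width, odd depths split height."""
    if out is None:
        out = []
    out.append((node.name, rect))
    x, y, w, h = rect
    total = sum(c.weight for c in node.children)
    if total <= 0:
        return out  # leaf (or only zero-weight children): nothing to subdivide
    offset = 0.0  # fraction of the parent rectangle already assigned
    for child in node.children:
        frac = child.weight / total
        if depth % 2 == 0:
            child_rect = (x + offset * w, y, frac * w, h)  # slice: side-by-side strips
        else:
            child_rect = (x, y + offset * h, w, frac * h)  # dice: stacked strips
        offset += frac
        slice_and_dice(child, child_rect, depth + 1, out)
    return out


root = TreeNode("root", 4, [
    TreeNode("A", 3, [TreeNode("A1", 1), TreeNode("A2", 2)]),
    TreeNode("B", 1),
])
for name, r in slice_and_dice(root, (0.0, 0.0, 100.0, 50.0)):
    print(name, r)
```

Slice-and-dice preserves the order of siblings and is simple to compute, at the cost of producing thin, elongated rectangles for deep or wide trees; whether MotionFlow applies further refinements is not stated here.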