Photon-Limited Imaging

Generative Quanta Color Imaging

IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024

Vishal Purohit, Junjie Luo, Yiheng Chi, Qi Guo, Stanley H Chan, Qiang Qiu

Manuscript: https://arxiv.org/abs/2403.19066

The astonishing development of single-photon cameras has created an unprecedented opportunity for scientific and industrial imaging. However, the high data throughput generated by these 1-bit sensors creates a significant bottleneck for low-power applications. In this paper, we explore the possibility of generating a color image from a single binary frame of a single-photon camera. We find this problem to be particularly difficult for standard colorization approaches due to the substantial degree of exposure variation. The core innovation of our paper is an exposure synthesis model framed under a neural ordinary differential equation (Neural ODE) that allows us to generate a continuum of exposures from a single observation. This innovation ensures consistent exposure in the binary images that colorizers take as input, resulting in notably enhanced colorization. We demonstrate applications of the method in single-image and burst colorization and show superior generative performance over baselines.

Project Page: https://vishal-s-p.github.io/projects/2023/generative_quanta_color.html

Spatially varying exposure with 2-by-2 multiplexing: Optimality and universality

IEEE Transactions on Computational Imaging

Xiangyu Qu, Yiheng Chi, Stanley H Chan

Manuscript: https://arxiv.org/abs/2306.17367

The advancement of new digital image sensors has enabled the design of exposure multiplexing schemes where a single image capture can have multiple exposures and conversion gains in an interlaced format, similar to that of a Bayer color filter array. In this article, we ask the question of how to design such multiplexing schemes for adaptive high-dynamic range (HDR) imaging where the multiplexing scheme can be updated according to the scenes. We present two new findings. 1) We address the problem of design optimality. We show that given a multiplex pattern, the conventional optimality criteria based on the input/output-referred signal-to-noise ratio (SNR) of the independently measured pixels can lead to flawed decisions because they cannot encapsulate the location of the saturated pixels. We overcome the issue by proposing a new concept known as the spatially varying exposure risk (SVE-Risk), which is a pseudo-idealistic quantification of the amount of recoverable pixels. We present an efficient enumeration algorithm to select the optimal multiplex patterns. 2) We report a design universality observation that the design of the multiplex pattern can be decoupled from the image reconstruction algorithm. This is a significant departure from the recent literature, which holds that the multiplex pattern should be jointly optimized with the reconstruction algorithm. Our finding suggests that in the context of exposure multiplexing, end-to-end training may not be necessary.

PCH-EM: A solution to information loss in the photon transfer method

IEEE Transactions on Electron Devices, 2024

Aaron J. Hendrickson, David P. Haefner, Stanley H. Chan, Nicholas R. Shade, Eric R. Fossum

Manuscript: https://arxiv.org/abs/2403.04498

Working from a Poisson-Gaussian noise model, a multi-sample extension of the Photon Counting Histogram Expectation Maximization (PCH-EM) algorithm is derived as a general-purpose alternative to the Photon Transfer (PT) method. This algorithm is derived from the same model, requires the same experimental data, and estimates the same sensor performance parameters as the time-tested PT method, all while obtaining lower uncertainty estimates. It is shown that as read noise becomes large, multiple data samples are necessary to capture enough information about the parameters of a device under test, justifying the need for a multi-sample extension. An estimation procedure is devised consisting of initial PT characterization followed by repeated iteration of PCH-EM to demonstrate the improvement in estimate uncertainty achievable with PCH-EM; particularly in the regime of Deep Sub-Electron Read Noise (DSERN). A statistical argument based on the information theoretic concept of sufficiency is formulated to explain how PT data reduction procedures discard information contained in raw sensor data, thus explaining why the proposed algorithm is able to obtain lower uncertainty estimates of key sensor performance parameters such as read noise and conversion gain. Experimental data captured from a CMOS quanta image sensor with DSERN is then used to demonstrate the algorithm's usage and validate the underlying theory and statistical model.

The Secrets of Non-Blind Poisson Deconvolution

IEEE Transactions on Computational Imaging, 2024

Abhiram Gnanasambandam, Yash Sanghvi, Stanley H Chan

Manuscript: https://arxiv.org/abs/2309.03105

Non-blind image deconvolution has been studied for several decades but most of the existing work focuses on blur instead of noise. In photon-limited conditions, however, the excessive amount of shot noise makes traditional deconvolution algorithms fail. In searching for reasons why these methods fail, we present a systematic analysis of the Poisson non-blind deconvolution algorithms reported in the literature, covering both classical and deep learning methods. We compile a list of five “secrets” highlighting the do's and don'ts when designing algorithms. Based on this analysis, we build a proof-of-concept method by combining the five secrets. We find that the new method performs on par with some of the latest methods while outperforming some older ones.

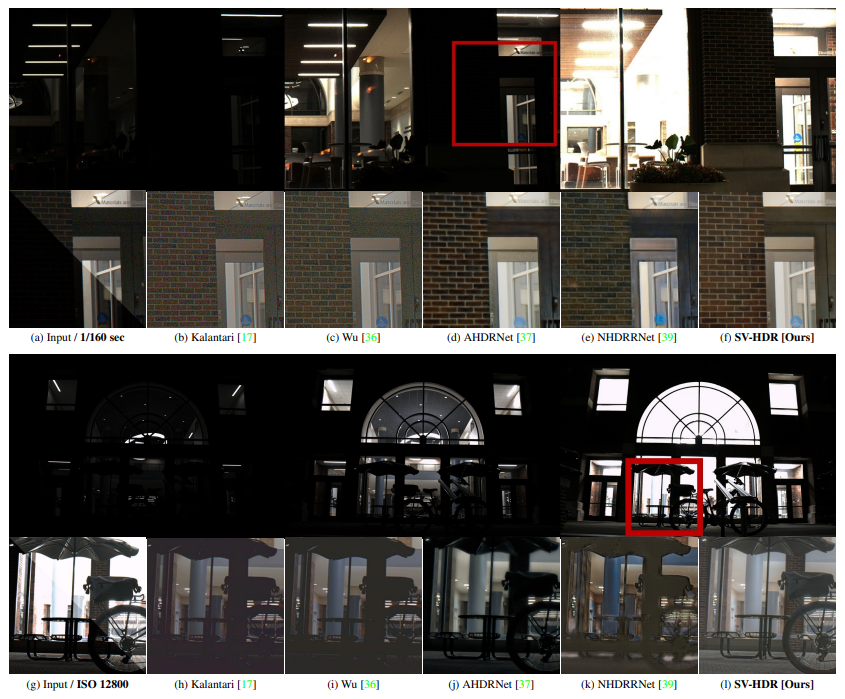

HDR Imaging with Spatially Varying Signal-to-Noise Ratios

IEEE Computer Vision and Pattern Recognition, 2023

Yiheng Chi, Xingguang Zhang, and Stanley H. Chan

Manuscript: https://arxiv.org/abs/2303.17253

While today's high dynamic range (HDR) image fusion algorithms are capable of blending multiple exposures, the acquisition is often controlled so that the dynamic range within one exposure is narrow. For HDR imaging in photon-limited situations, the dynamic range can be enormous and the noise within one exposure is spatially varying. Existing image denoising algorithms and HDR fusion algorithms both fail to handle this situation, leading to severe limitations in low-light HDR imaging.

This paper presents two contributions. Firstly, we identify the source of the problem. We find that the issue is associated with the co-existence of (1) spatially varying signal-to-noise ratio, especially the excessive noise due to very dark regions, and (2) a wide luminance range within each exposure. We show that while the issue can be handled by a bank of denoisers, the complexity is high. Secondly, we propose a new method called the spatially varying high dynamic range (SV-HDR) fusion network to simultaneously denoise and fuse images. We introduce a new exposure-shared block within our custom-designed multi-scale transformer framework. In a variety of testing conditions, the performance of the proposed SV-HDR is better than the existing methods.

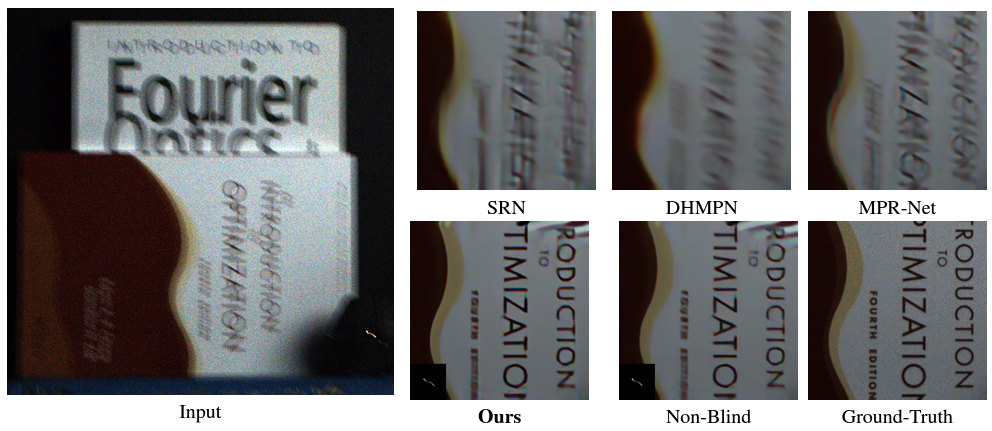

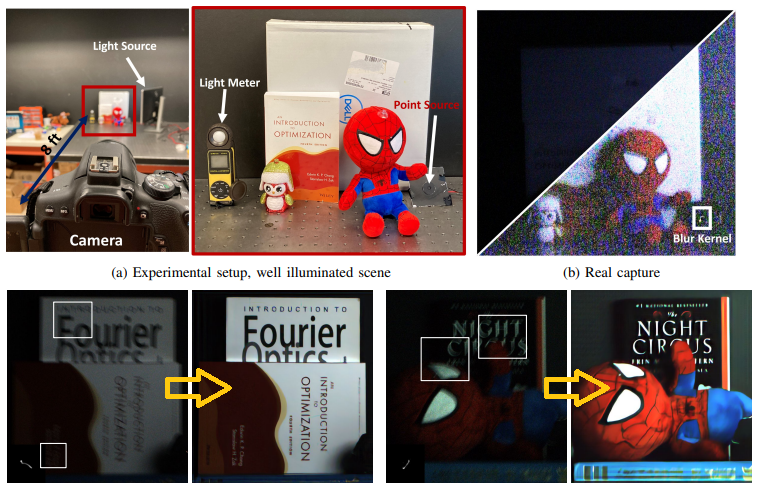

Structured Kernel Estimation for Photon-Limited Deconvolution

IEEE Computer Vision and Pattern Recognition, 2023

Yash Sanghvi, Zhiyuan Mao, Stanley H. Chan

Manuscript: https://arxiv.org/abs/2303.03472

Images taken in a low light condition with the presence of camera shake suffer from motion blur and photon shot noise. While state-of-the-art image restoration networks show promising results, they are largely limited to well-illuminated scenes and their performance drops significantly when photon shot noise is strong. In this paper, we propose a new blur estimation technique customized for photon-limited conditions. The proposed method employs a gradient-based backpropagation method to estimate the blur kernel. By modeling the blur kernel using a low-dimensional representation with the key points on the motion trajectory, we significantly reduce the search space and improve the regularity of the kernel estimation problem. When plugged into an iterative framework, our novel low-dimensional representation provides improved kernel estimates and hence significantly better deconvolution performance when compared to end-to-end trained neural networks.

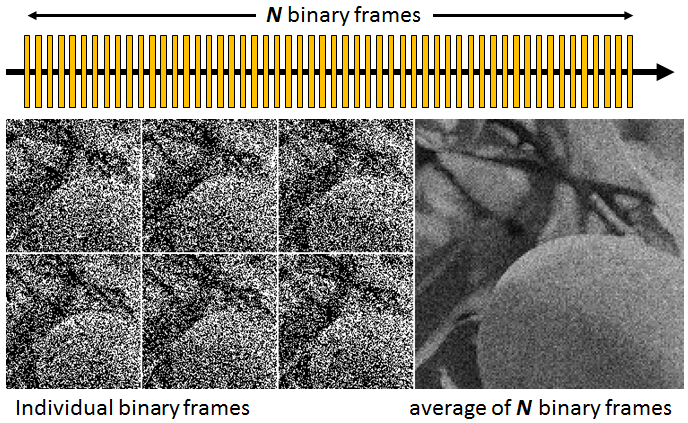

What Does a One-Bit Quanta Image Sensor Offer?

IEEE Transactions on Computational Imaging, 2022

Stanley H. Chan

Manuscript: https://arxiv.org/abs/2208.10350

The one-bit quanta image sensor (QIS) is a photon-counting device that captures image intensities using binary bits. Assuming that the analog voltage generated at the floating diffusion of the photodiode follows a Poisson-Gaussian distribution, the sensor produces either a “1” if the voltage is above a certain threshold or “0” if it is below the threshold. The concept of this binary sensor has been proposed for more than a decade, and physical devices have been built to realize the concept. However, what benefits does a one-bit QIS offer compared to a conventional multi-bit CMOS image sensor? Besides the known empirical results, are there theoretical proofs to support these findings?

The goal of this paper is to provide new theoretical support from a signal processing perspective. In particular, it is theoretically found that the sensor can offer three benefits: (1) Low-light: One-bit QIS performs better in low light because it has a low read noise, and its one-bit quantization can produce an error-free measurement. However, this requires the exposure time to be appropriately configured. (2) Frame rate: One-bit sensors can operate at a much higher speed because a response is generated as soon as a photon is detected. However, in the presence of read noise, there exists an optimal frame rate beyond which the performance will degrade. A closed-form expression of the optimal frame rate is derived. (3) Dynamic range: One-bit QIS offers a higher dynamic range. The benefit is brought by two complementary characteristics of the sensor: nonlinearity and exposure bracketing. The decoupling of the two factors is theoretically proved, and closed-form expressions are derived.
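
The binary measurement model described in this abstract can be illustrated with a minimal Python sketch. It assumes a Poisson photon count with mean `theta`, additive zero-mean Gaussian read noise with standard deviation `read_noise`, and a comparison against `threshold`. The function names, the default threshold of 0.5 electrons, and the truncation of the Poisson sum are illustrative assumptions, not taken from the paper.

```python
import math


def poisson_pmf(k, theta):
    """Probability of observing k photons when the mean count is theta."""
    if theta == 0:
        return 1.0 if k == 0 else 0.0
    # Work in the log domain to avoid overflow for large k.
    return math.exp(-theta + k * math.log(theta) - math.lgamma(k + 1))


def bit_one_probability(theta, threshold=0.5, read_noise=0.0):
    """P(bit = 1) for a one-bit sensor under a Poisson-Gaussian model.

    The bit is 1 when (photon count + Gaussian read noise) >= threshold.
    The Poisson sum is truncated far into the tail (an assumption of
    this sketch, adequate for moderate theta).
    """
    k_max = int(theta + 10 * math.sqrt(theta) + 20)
    total = 0.0
    for k in range(k_max + 1):
        if read_noise > 0:
            # Gaussian tail probability that k + noise exceeds the threshold.
            z = (k - threshold) / (read_noise * math.sqrt(2))
            p_exceed = 0.5 * (1.0 + math.erf(z))
        else:
            p_exceed = 1.0 if k >= threshold else 0.0
        total += poisson_pmf(k, theta) * p_exceed
    return total


if __name__ == "__main__":
    for theta in (0.1, 1.0, 5.0):
        print(theta, bit_one_probability(theta, read_noise=0.2))
```

Without read noise and with a threshold between 0 and 1, the probability reduces to 1 - exp(-theta), which is why the response saturates as the exposure grows.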

Review of Quanta Image Sensors

IEEE Journal of Electron Devices, 2022

Jiaju Ma, Stanley H. Chan, Eric R. Fossum

Paper: https://ieeexplore.ieee.org/document/9768129

The quanta image sensor (QIS) is a photon-counting image sensor that has been implemented using different electron devices, including impact ionization-gain devices, such as the single-photon avalanche detectors (SPADs), and low-capacitance, high conversion-gain devices, such as modified CMOS image sensors (CIS) with deep subelectron read noise and/or low noise readout signal chains. This article primarily focuses on CIS QIS, but recent progress of both types is addressed. Signal processing progress, such as denoising, critical to improving apparent signal-to-noise ratio, is also reviewed as an enabling coinnovation.

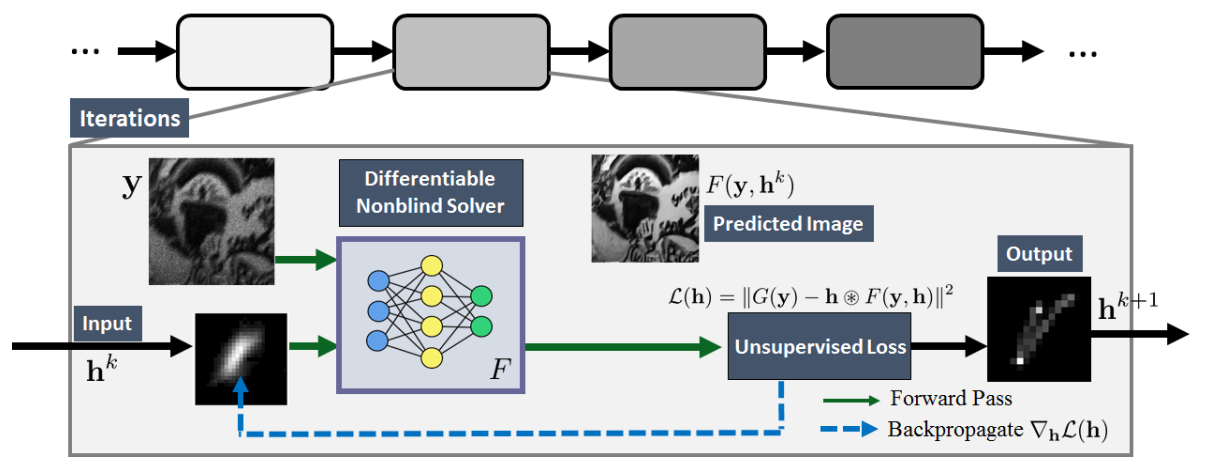

Photon-Limited Blind Deconvolution using Unsupervised Iterative Kernel Estimation

Yash Sanghvi, Abhiram Gnanasambandam, Zhiyuan Mao, Stanley H. Chan

Manuscript: https://arxiv.org/abs/2208.00451

Blind deconvolution in low light is one of the more challenging problems in image restoration because of the photon shot noise. However, existing algorithms – both classical and deep-learning based – are not designed for this condition. When the shot noise is strong, conventional deconvolution methods fail because (1) the presence of noise makes the estimation of the blur kernel difficult; (2) generic deep-restoration models rarely model the forward process explicitly; (3) there are currently no iterative strategies to incorporate a non-blind solver in a kernel estimation stage. This paper addresses these challenges by presenting an unsupervised blind deconvolution method. At the core of this method is a reformulation of the general blind deconvolution framework from the conventional image-kernel alternating minimization to a purely kernel-based minimization. This kernel-based minimization leads to a new iterative scheme that backpropagates an unsupervised loss through a pre-trained non-blind solver to update the blur kernel. Experimental results show that the proposed framework achieves better results than state-of-the-art blind deconvolution algorithms in low-light conditions.

Photon-Limited Non-Blind Deblurring Using Algorithm Unrolling

Yash Sanghvi, Abhiram Gnanasambandam, Stanley H. Chan

Manuscript: https://arxiv.org/abs/2110.15314

Project Page: https://sanghviyashiitb.github.io/nb-deblur-webpage/

Image deblurring in photon-limited conditions is ubiquitous in a variety of low-light applications such as photography, microscopy and astronomy. However, the presence of photon shot noise due to low-illumination and/or short exposure makes the deblurring task substantially more challenging than the conventional deblurring problems. In this paper we present an algorithm unrolling approach for the photon-limited deblurring problem by unrolling a Plug-and-Play algorithm for a fixed number of iterations. By introducing a three-operator splitting formation of the Plug-and-Play framework, we obtain a series of differentiable steps which allows the fixed iteration unrolled network to be trained end-to-end. The proposed algorithm demonstrates significantly better image recovery compared to existing state-of-the-art deblurring approaches. We also present a new photon-limited deblurring dataset for evaluating the performance of algorithms.

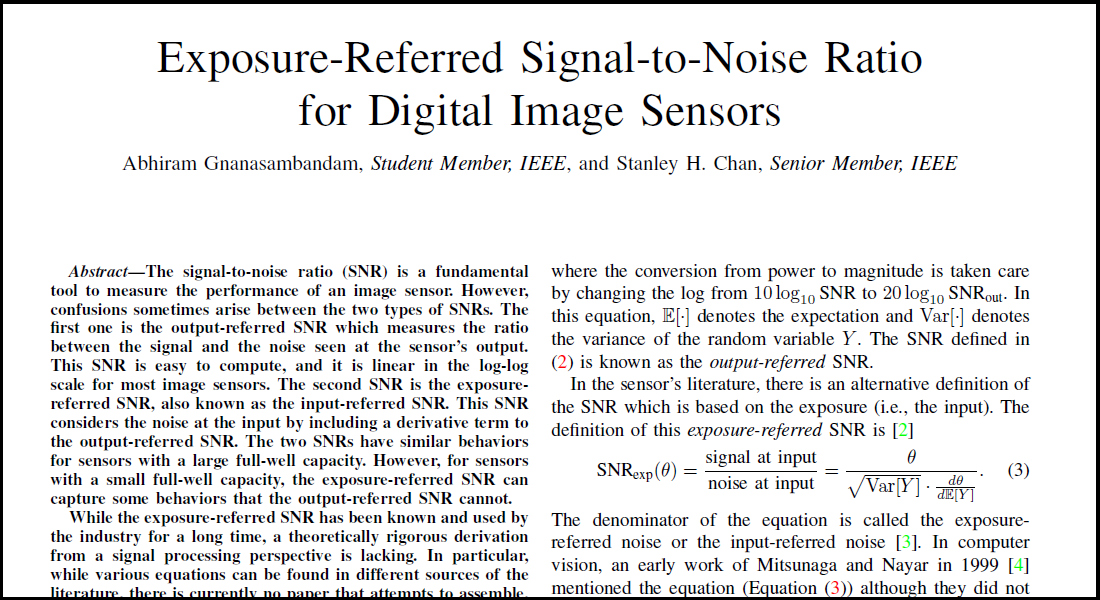

Exposure referred Signal-to-Noise Ratio for Digital Image Sensors

IEEE Transactions on Computational Imaging, 2022

Abhiram Gnanasambandam and Stanley H. Chan

Manuscript: https://arxiv.org/abs/2112.05817

The signal-to-noise ratio (SNR) is a fundamental tool to measure the performance of an image sensor. However, confusion sometimes arises between the two types of SNR. The first one is the output-referred SNR, which measures the ratio between the signal and the noise seen at the sensor's output. This SNR is easy to compute, and it is linear in the log-log scale for most image sensors. The second SNR is the exposure-referred SNR, also known as the input-referred SNR. This SNR considers the noise at the input by including a derivative term in the output-referred SNR. The two SNRs have similar behaviors for sensors with a large full-well capacity. However, for sensors with a small full-well capacity, the exposure-referred SNR can capture some behaviors that the output-referred SNR cannot.

While the exposure-referred SNR has been known and used by the industry for a long time, a theoretically rigorous derivation from a signal processing perspective is lacking. In particular, while various equations can be found in different sources of the literature, there is currently no paper that attempts to assemble, derive, and organize these equations in one place. This paper aims to fill the gap by answering four questions: (1) How is the exposure-referred SNR derived from first principles? (2) Is the output-referred SNR a special case of the exposure-referred SNR, or are they completely different? (3) How to compute the SNR efficiently? (4) What utilities can the SNR bring to solving imaging tasks? New theoretical results are derived for image sensors of any bit-depth and full-well capacity.
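
The difference between the two SNRs can be made concrete with a short Python sketch. It assumes the commonly used definitions SNR_out(theta) = mu(theta) / sigma(theta) and SNR_exp(theta) = theta * mu'(theta) / sigma(theta), where mu and sigma are the mean and standard deviation of the sensor output at exposure theta. The one-bit sensor model without read noise, the function names, and the finite-difference step are assumptions made for illustration and are not taken from the paper.

```python
import math


def output_referred_snr(mean_fn, std_fn, theta):
    """Ratio of the output mean to the output standard deviation."""
    return mean_fn(theta) / std_fn(theta)


def exposure_referred_snr(mean_fn, std_fn, theta, step=1e-5):
    """Output noise referred back to the exposure via d(mean)/d(theta).

    The derivative is approximated with a central difference.
    """
    slope = (mean_fn(theta + step) - mean_fn(theta - step)) / (2 * step)
    return theta * slope / std_fn(theta)


def one_bit_mean(theta):
    """Mean of a one-bit measurement: P(at least one photon)."""
    return 1.0 - math.exp(-theta)


def one_bit_std(theta):
    """Standard deviation of the corresponding Bernoulli variable."""
    p = one_bit_mean(theta)
    return math.sqrt(p * (1.0 - p))


if __name__ == "__main__":
    for theta in (0.1, 1.0, 10.0):
        print(theta,
              output_referred_snr(one_bit_mean, one_bit_std, theta),
              exposure_referred_snr(one_bit_mean, one_bit_std, theta))
```

For this small-capacity sensor, the output-referred SNR keeps increasing as the pixel saturates, while the exposure-referred SNR falls off, which is the kind of behavior the second paragraph of the abstract refers to.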

Electronic Imaging 2022 Short Course

Signal Processing for Photon-Limited Imaging

Stanley Chan

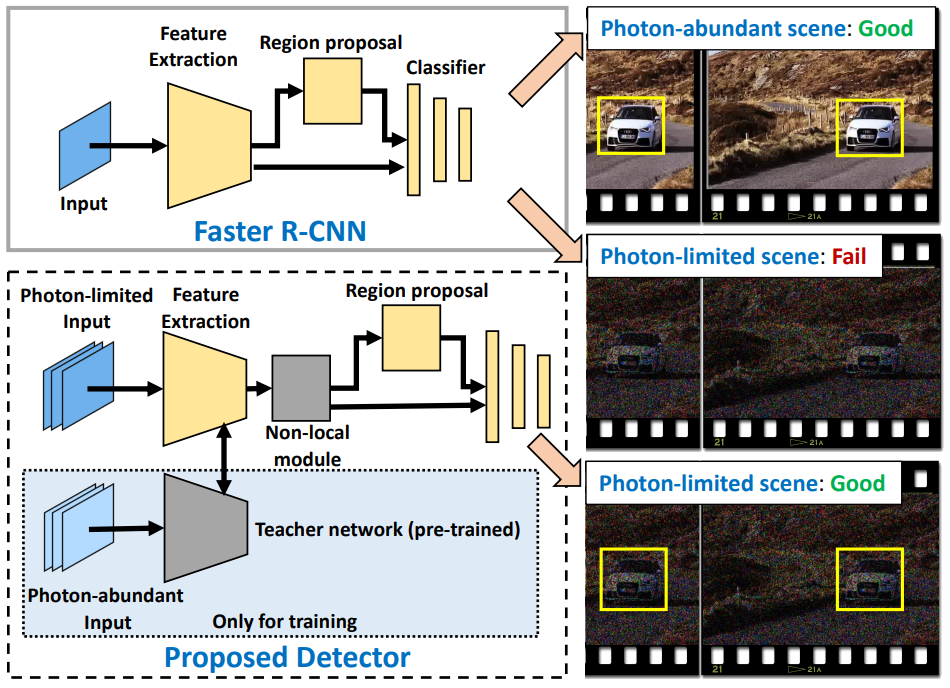

Photon-Limited Object Detection using Non-local Feature Matching and Knowledge Distillation

IEEE International Conference on Computer Vision Workshop, 2021

Chengxi Li, Xiangyu Qu, Abhiram Gnanasambandam, Omar A. Elgendy, Jiaju Ma, Stanley H. Chan

Manuscript: https://bit.ly/3vT7eum

Robust object detection under photon-limited conditions is crucial for applications such as night vision, surveillance, and microscopy, where the number of photons per pixel is low due to a dark environment and/or a short integration time. While the mainstream “low-light” image enhancement methods have produced promising results that improve the image contrast between the foreground and background through advanced coloring techniques, the more challenging problem of mitigating the photon shot noise inherited from the random Poisson process remains open. In this paper, we present a photon-limited object detection framework by adding two ideas to state-of-the-art object detectors: 1) a space-time non-local module that leverages the spatial-temporal information across an image sequence in the feature space, and 2) knowledge distillation in the form of student-teacher learning to improve the robustness of the detector’s feature extractor against noise. Experiments are conducted to demonstrate the improved performance of the proposed method in comparison with state-of-the-art baselines. When integrated with the latest photon counting devices, the algorithm achieves more than 50% mean average precision at a photon level of 1 photon per pixel.

Low-Light Demosaicking and Denoising for Small Pixels Using Learned Frequency Selection

IEEE Transactions on Computational Imaging, 2021

Omar Elgendy, Abhiram Gnanasambandam, Stanley H. Chan, and Jiaju Ma

Manuscript: https://ieeexplore.ieee.org/abstract/document/9335264

Low-light imaging is a challenging task because of the excessive photon shot noise. Color imaging in low light is even more difficult because one needs to demosaick and denoise simultaneously. Existing demosaicking algorithms are mostly designed for well-illuminated scenarios and fail in low light. Recognizing the recent development of small pixels and low read noise image sensors, we propose a learning-based joint demosaicking and denoising algorithm for low-light color imaging. Our method combines the classical theory of color filter arrays and modern deep learning. We use an explicit carrier to demodulate the color from the input Bayer pattern image. We integrate trainable filters into the demodulation scheme to improve flexibility. We introduce a guided filtering module to transfer knowledge from the luma channel to the chroma channels, thus offering substantially more reliable denoising. Extensive experiments are performed to evaluate the performance of the proposed method, using both synthetic datasets and real data. Results indicate that the proposed method offers consistently better performance over the current state-of-the-art, across several standard evaluation metrics.

HDR Imaging with Quanta Image Sensors: Theoretical Limits and Optimal Reconstruction

IEEE Transactions on Computational Imaging, 2020

Abhiram Gnanasambandam and Stanley H. Chan

Manuscript: https://arxiv.org/abs/2011.03614

Code: https://github.itap.purdue.edu/StanleyChanGroup/QIS_HDR_TCI20

An earlier version of the paper was presented at the International Image Sensor Workshop (IISW) 2019.

Manuscript: PDF

High dynamic range (HDR) imaging is one of the biggest achievements in modern photography. Traditional solutions to HDR imaging are designed for and applied to CMOS image sensors (CIS). However, the mainstream one-micron CIS cameras today generally have a high read noise and low frame-rate. These, in turn, limit the acquisition speed and quality, making the cameras slow in the HDR mode. In this paper, we propose a new computational photography technique for HDR imaging. Recognizing the limitations of CIS, we use the Quanta Image Sensor (QIS) to trade the spatial-temporal resolution with bit-depth. QIS is a single-photon image sensor that has comparable pixel pitch to CIS but substantially lower dark current and read noise. We provide a complete theoretical characterization of the sensor in the context of HDR imaging, by proving the fundamental limits in the dynamic range that QIS can offer and the trade-offs with noise and speed. In addition, we derive an optimal reconstruction algorithm for single-bit and multi-bit QIS. Our algorithm is theoretically optimal for all linear reconstruction schemes based on exposure bracketing. Experimental results confirm the validity of the theory and algorithm, based on synthetic and real QIS data.

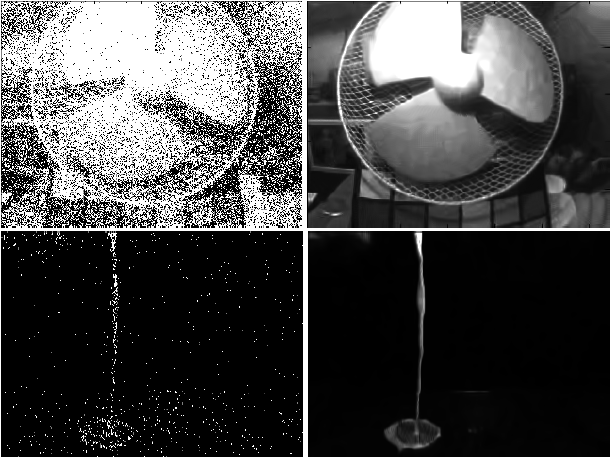

Dynamic Low-light Imaging with Quanta Image Sensors

European Conference on Computer Vision (ECCV), 2020.

Manuscript: https://arxiv.org/abs/2007.08614

Yiheng Chi, Abhiram Gnanasambandam, Vladlen Koltun, and Stanley H. Chan

Code: https://github.itap.purdue.edu/StanleyChanGroup/ECCV2020_Dynamic

Imaging in low light is difficult because the number of photons arriving at the sensor is low. Imaging dynamic scenes in low-light environments is even more difficult because as the scene moves, pixels in adjacent frames need to be aligned before they can be denoised. Conventional CMOS image sensors (CIS) are at a particular disadvantage in dynamic low-light settings because the exposure cannot be too short lest the read noise overwhelms the signal. We propose a solution using Quanta Image Sensors (QIS) and present a new image reconstruction algorithm. QIS are single-photon image sensors with photon counting capabilities. Studies over the past decade have confirmed the effectiveness of QIS for low-light imaging but reconstruction algorithms for dynamic scenes in low light remain an open problem. We fill the gap by proposing a student-teacher training protocol that transfers knowledge from a motion teacher and a denoising teacher to a student network. We show that dynamic scenes can be reconstructed from a burst of frames at a photon level of 1 photon per pixel per frame. Experimental results confirm the advantages of the proposed method compared to existing methods.

Image Classification in the Dark using Quanta Image Sensors

European Conference on Computer Vision (ECCV), 2020

Manuscript: https://arxiv.org/abs/2006.02026

Abhiram Gnanasambandam and Stanley H. Chan

Code: https://github.itap.purdue.edu/StanleyChanGroup/QIS_ImageClassification_ECCV20

Abstract:

State-of-the-art image classifiers are trained and tested using well-illuminated images. These images are typically captured by CMOS image sensors with at least tens of photons per pixel. However, in dark environments when the photon flux is low, image classification becomes difficult because the measured signal is suppressed by noise. In this paper, we present a new low-light image classification solution using Quanta Image Sensors (QIS). QIS are a new type of image sensors that possess photon counting ability without compromising on pixel size and spatial resolution. Numerous studies over the past decade have demonstrated the feasibility of QIS for low-light imaging, but their usage for image classification has not been studied. This paper fills the gap by presenting a student-teacher learning scheme which allows us to classify the noisy QIS raw data. We show that with student-teacher learning, we are able to achieve image classification at a photon level of one photon per pixel or lower. Experimental results verify the effectiveness of the proposed method compared to existing solutions.

Color Filter Arrays Design for Quanta Image Sensors

IEEE Trans. Computational Imaging, 2019.

Manuscript: https://arxiv.org/abs/1903.09823

Omar A. Elgendy and Stanley H. Chan

Quanta image sensor (QIS) is envisioned to be the next generation image sensor after CCD and CMOS. In this paper, we discuss how to design color filter arrays for QIS. Designing color filter arrays for small pixels such as QIS is challenging because maximizing the light efficiency while suppressing aliasing and crosstalk are conflicting tasks. We present an optimization-based framework which unifies several mainstream color filter array design methodologies and offers greater generality and flexibility. Compared to existing methods, the new framework can simultaneously handle luminance sensitivity, chrominance sensitivity, cross-talk, anti-aliasing, manufacturability and orthogonality. Extensive experimental comparisons demonstrate the effectiveness and generality of the framework.

Megapixel photon-counting color imaging using quanta image sensor

OSA Optics Express 2019

Manuscript: https://arxiv.org/abs/1903.09036

Abhiram Gnanasambandam, Omar A. Elgendy, Jiaju Ma, and Stanley H. Chan

Code: https://github.itap.purdue.edu/StanleyChanGroup/ColorRecon_OpEx19

Quanta Image Sensor (QIS) is a single-photon detector designed for extremely low light imaging conditions. The majority of existing QIS prototypes are monochrome and based on single-photon avalanche diodes (SPAD). Passive color imaging has not been demonstrated with single-photon detectors due to the intrinsic difficulty of shrinking the pixel size and increasing the spatial resolution while maintaining acceptable intra-pixel cross-talk. In this paper, we present image reconstruction of the first color QIS with a resolution of 1024-by-1024 pixels, supporting both single-bit and multi-bit photon counting capability. Our color image reconstruction is enabled by a customized joint demosaicing-denoising algorithm, leveraging truncated Poisson statistics and variance stabilizing transforms. Experimental results of the new sensor and algorithm demonstrate superior color imaging performance for very low-light conditions with a mean exposure of as low as a few photons per pixel in both real and simulated images.

Photon-counting Imaging with Multi-bit Quanta Image Sensors

International Image Sensor Workshop (IISW), 2019

Manuscript: PDF

Jiaju Ma, Yu-Wing Chung, Abhiram Gnanasambandam, Stanley H. Chan, and Saleh Masoodian

Image Reconstruction for Quanta Image Sensors using Deep Neural Networks

IEEE ICASSP, 2019

Manuscript: PDF

Joon Hee Choi, Omar A. Elgendy and Stanley H. Chan

Quanta Image Sensor (QIS) is a single-photon image sensor that oversamples the light field to generate binary measurements. Its single-photon sensitivity makes it an ideal candidate for the next generation image sensor after CMOS. However, image reconstruction of the sensor remains a challenging issue. Existing image reconstruction algorithms are largely based on optimization. In this paper, we present the first deep neural network approach for QIS image reconstruction. Our deep neural network takes the binary bit stream of QIS as input and learns the nonlinear transformation and denoising simultaneously. Experimental results show that the proposed network produces significantly better reconstruction results compared to existing methods.

Optimal Threshold Design for Quanta Image Sensors

(ICIP 2016 Best Paper Award)

IEEE Trans. Computational Imaging, 2018

Manuscript: https://arxiv.org/abs/1704.03886

Omar A. Elgendy and Stanley H. Chan

Also presented in IEEE ICIP 2016

Manuscript: PDF

MATLAB Implementation (1.1MB)

Quanta Image Sensor (QIS) has been envisioned as a candidate solution for next generation image sensors. We provide two new contributions to the signal processing aspects of QIS. First, we develop an image reconstruction algorithm to recover the underlying images from the QIS data, which is a massive array of binarized Poisson random variables. The new algorithm supersedes existing methods by enabling arbitrary threshold levels. Second, we present a threshold design scheme to adaptively update the threshold level for optimal image reconstruction. We discuss the existence of a phase transition in determining the optimal threshold. Experimental results on tone-mapped high dynamic range images validate the effectiveness of the threshold scheme and the image reconstruction algorithm.

Non-Iterative Image Reconstruction

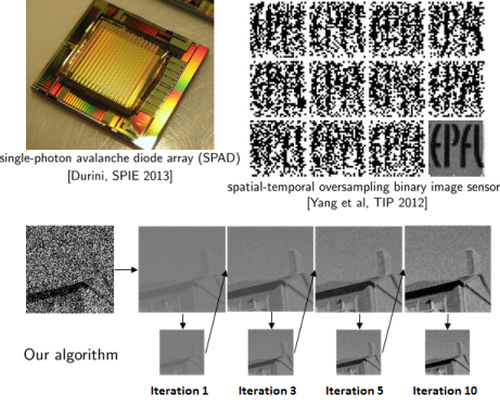

Quanta image sensor (QIS) is a class of single photon imaging devices that measure light intensity using oversampled binary observations. Because of the stochastic nature of the photon arrivals, data acquired by QIS is a massive stream of random binary bits. The goal of image reconstruction is to recover the underlying image from these bits. In this paper, we present a non-iterative image reconstruction algorithm for QIS. Unlike existing reconstruction methods that formulate the problem from an optimization perspective, the new algorithm directly recovers the images through a pair of nonlinear transformations and an off-the-shelf image denoising algorithm. By skipping the usual optimization procedure, we achieve orders of magnitude improvement in speed and even better image reconstruction quality. We validate the new algorithm on synthetic datasets as well as real videos collected by 1-bit SPAD cameras.

Code: https://github.itap.purdue.edu/StanleyChanGroup/SingleBitRecon_MDPI_16
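
The non-iterative pipeline described in the abstract above (a nonlinear transform, an off-the-shelf denoiser, and an inverse mapping) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the bits are summed over `k` binary frames per pixel, the variance-stabilizing step uses one common form of the Anscombe binomial transform, a 1-D box filter (`box_denoise`) stands in for the real image denoiser, and the final mapping is the maximum-likelihood inversion theta = -log(1 - S / k) for a threshold of one photon without read noise. All function names are hypothetical and none of this is copied from the released code.

```python
import math


def anscombe_binomial(s, k):
    """Variance-stabilizing transform for a Binomial(k, p) count s."""
    return math.sqrt(k + 0.5) * math.asin(math.sqrt((s + 0.375) / (k + 0.75)))


def inverse_anscombe_binomial(z, k):
    """Algebraic inverse of anscombe_binomial."""
    return (k + 0.75) * math.sin(z / math.sqrt(k + 0.5)) ** 2 - 0.375


def box_denoise(values, radius=1):
    """Stand-in denoiser: moving average over a 1-D signal."""
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - radius), min(len(values), i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out


def reconstruct(bit_sums, k, radius=1):
    """Estimate the exposure at each pixel from per-pixel sums of k bits."""
    stabilized = [anscombe_binomial(s, k) for s in bit_sums]
    smoothed = box_denoise(stabilized, radius)
    estimates = []
    for z in smoothed:
        s_hat = inverse_anscombe_binomial(z, k)
        # Keep the count strictly below k so the logarithm stays finite.
        s_hat = min(max(s_hat, 0.0), k - 0.5)
        estimates.append(-math.log(1.0 - s_hat / k))
    return estimates


if __name__ == "__main__":
    # Number of ones observed over k = 100 binary frames at 8 pixels.
    print(reconstruct([48, 52, 50, 47, 53, 50, 49, 51], k=100))
```

Every step is a closed-form, per-pixel operation apart from the denoiser, so no iterative optimization is involved.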

ADMM Image Reconstruction

Recent advances in materials, devices and fabrication technologies have motivated a strong momentum in developing solid-state sensors that can detect individual photons in space and time. It has been envisioned that such sensors can eventually achieve very high spatial resolutions as well as high frame rates. In this paper, we present an efficient algorithm to reconstruct images from the massive binary bit-streams generated by these sensors. Based on the concept of alternating direction method of multipliers (ADMM), we transform the computationally intensive optimization problem into a sequence of subproblems, each of which has efficient implementations in the form of polyphase-domain filtering or pixel-wise nonlinear mappings. Moreover, we reformulate the original maximum likelihood estimation as maximum a posteriori estimation by introducing a total variation prior. Numerical results demonstrate the strong performance of the proposed method, which achieves several dB of improvement in PSNR and requires a shorter runtime as compared to standard gradient-based approaches.
