Robust Machine Learning

Optical Adversarial Attack

Abhiram Gnanasambandam, Alex M. Sherman, and Stanley H. Chan,

‘‘Optical Adversarial Attack’’,

IEEE International Conference on Computer Vision Workshop (ICCV-W), 2021.

2nd Workshop on Adversarial Robustness in the Real World

Paper (PDF): https://arxiv.org/abs/2108.06247

News coverage:

https://cacm.acm.org/news/254919-optical-adversarial-attack-can-change-the-meaning-of-road-signs/fulltext

https://www.databreachtoday.in/researchers-demonstrate-ai-be-fooled-a-17366

https://www.unite.ai/optical-adversarial-attack-can-change-the-meaning-of-road-signs/

https://www.hackread.com/optical-adversarial-attack-low-cost-projector-trick-ai/

https://news.ycombinator.com/item?id=28204077

https://www.reddit.com/r/technology/comments/p5eyfm/optical_adversarial_attack_can_change_the_meaning/

https://thenextweb.com/news/researchers-tricked-ai-ignoring-stop-signs-using-cheap-projector

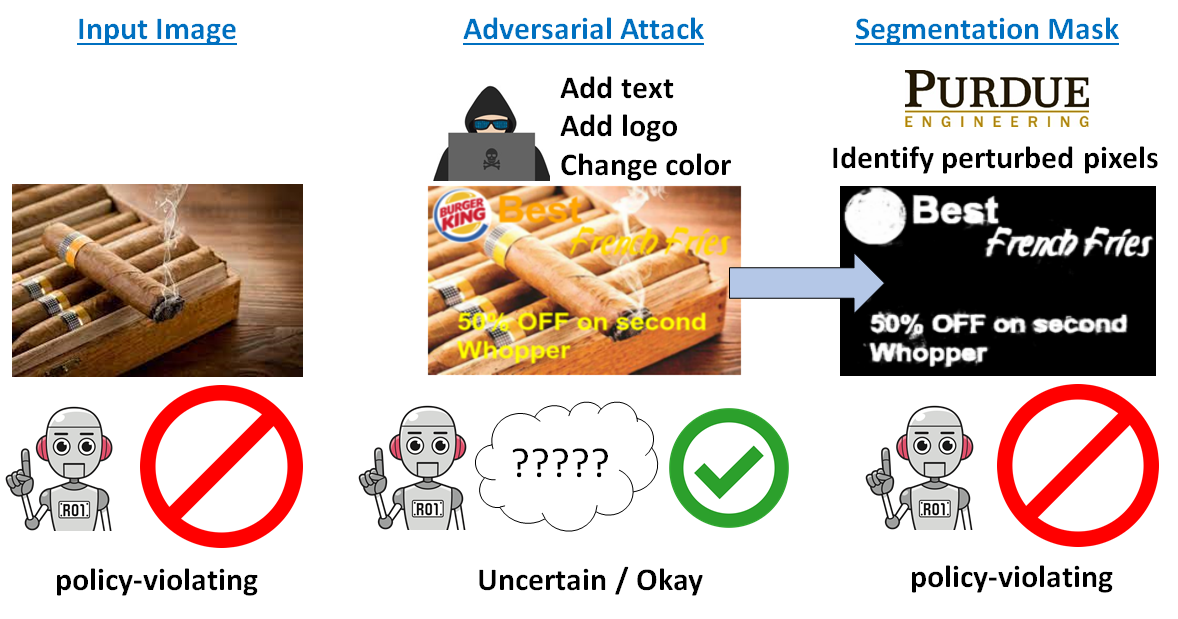

Detecting and Segmenting Adversarial Graphics Patterns from Images

Xiangyu Qu and Stanley H. Chan

‘‘Detecting and Segmenting Adversarial Graphics Patterns from Images’’,

IEEE International Conference on Computer Vision Workshop (ICCV-W), 2021.

2nd Workshop on Adversarial Robustness in the Real World

Paper (PDF): https://arxiv.org/abs/2108.09383

Code: TBA

Student-Teacher Learning from Clean Inputs to Noisy Inputs

Guanzhe Hong, Zhiyuan Mao, Xiaojun Lin, and Stanley H. Chan,

‘‘Student-Teacher Learning from Clean Inputs to Noisy Inputs’’,

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021. (Acceptance rate = 27%)

Paper (PDF): https://arxiv.org/abs/2103.07600

Slides (PDF): Preview

One Size Fits All: Can We Train One Denoiser for All Noise Levels?

Abhiram Gnanasambandam and Stanley H. Chan,

‘‘One Size Fits All: Can We Train One Denoiser for All Noise Levels?’’,

International Conference on Machine Learning (ICML), 2020. (Acceptance rate = 21%)

Paper (PDF): https://arxiv.org/abs/2005.09627

ConsensusNet: Optimal Combination of Image Denoisers

Joon Hee Choi, Omar A. Elgendy, and Stanley H. Chan,

‘‘Optimal Combination of Image Denoisers’’,

IEEE Trans. Image Process., vol. 28, no. 8, pp. 4016-4031, Aug. 2019.

Paper (PDF): https://arxiv.org/abs/1711.06712

Supplementary Material (PDF): https://arxiv.org/src/1711.06712v2/anc/Supplementary_arxiv.pdf