Citation: “CAMILA: Context-Aware Masking for Image Editing with Language Alignment,” Hyunseung Kim (Purdue); Chiho Choi, Srikanth Malla, Sai Prahladh Padmanabhan (Samsung USA); Saurabh Bagchi (Purdue), Joon Hee Choi (Samsung). Accepted to appear at NeurIPS 2025 (5,290/21,575 = 24.5%)

Motivation

We are getting used to editing images or videos using text instructions. For example, services like Canva, Pincel App, and Microsoft Designer use AI to understand your text instructions, eliminating the need for complex manual editing tools. However, all existing image editing techniques naively attempt to follow all user instructions, even if those instructions are inherently infeasible or contradictory, often resulting in nonsensical output. For example, you can say “Color the river in the image blue” when there is no river in the image. This will lead to counter-intuitive results, which also vary widely across repeated queries. This happens increasingly frequently as we are performing more complex operations on images and consequently, the complexity of the images as well as the text instructions are growing. Further, the text instructions are increasingly multi-instruction scenarios, i.e., multiple complete sentences of instructions.

Our Contribution

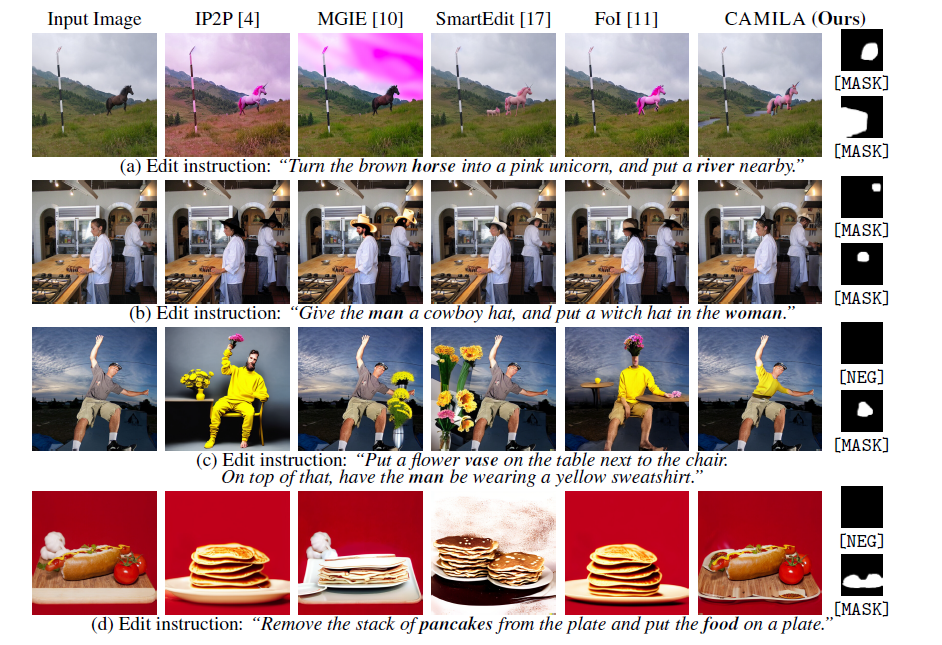

Hyunseung led the work that resulted in a tool called CAMILA, which solves this problem. The work was started during summer 2024 when Hyunseung went to Samsung in California and working with a team of Samsung researchers, led the solution development for this problem. Our solution CAMILA makes the key contribution that it explicitly assesses the executability of the instruction throughout the editing process. Building on pioneering research in the domain of natural language processing and more recently in multimodal large language models (MLLM), we leverage the MLLM to jointly interpret both text instructions and images, then we extend its capabilities to enable image editing with context awareness. Here, context refers to the model’s ability to interpret the relevance of various instructions within a given image, allowing it to focus on applicable regions while ignoring irrelevant areas. We achieve this through the use of specialized tokens — CAMILA assigns [MASK] tokens to editable regions and [NEG] tokens to suppress irrelevant edits. A following broadcasting module then consistently aligns token assignments with user prompts.

How do we know if this works?

To evaluate CAMILA, we extend the conventional single- and multi-instruction image editing tasks by introducing the possibility of non-executable prompts. This results in new evaluation scenarios: Context-Aware Image Editing that evaluate how the model handles the number of instructions and the presence of infeasible requests within the same sequence. We compare our method against five different state-of-the-art image editing methods: IP2P [CVPR-23], EMILIE [WACV-24], MGIE [ICLR-24], SmartEdit (SE) [CVPR-24], and FoI [CVPR-24]. We observe substantial improvements in editing accuracy, particularly L1 and L2 distances, as well as enhanced performance on CLIP and DINO scores, with a human preference-based evaluation also indicating strong performance. CAMILA outperforms the strongest baseline, FoI, by 18.1% and 24.0% in Multi-instruction and Context-Aware image editing tasks.

pretrained GPT model before running the model. Furthermore, due to inaccuracies in the attention

map of diffusion model, FoI often fails to make precise modifications. In the case of context-aware

instructions, CAMILA accurately identifies applicable instructions by generating [MASK] and [NEG]

tokens from MLLM. We present the decoded mask results for each instruction of the [MASK] token.

We plan to release the multi-instruction image editing dataset after Samsung review.