Seminars in Hearing Research at Purdue

PAST: Abstracts

Talks in 2014-2015

[LYLE 1150: 10:30-11:20 am]

August 25, 2014

Organization & Tour of LYLE research labs

September 4, 2014

Dr. Bob Carlyon (video talk)

Programme leader: Hearing, speech, and language group

MRC Cognition and Brain Sciences Unit

Cambridge, UK

“Science: How not to do it”

This pre-recorded British Society of Audiology ‘Lunch and Learn’ eSeminar offers significant food for thought about how scientific research is done, both in hearing science and in science generally. An entirely non-mathematical, example-based, and sometimes even entertaining discussion covers many common mistakes, simple and subtle, that have been (and still are) made in published statistical analyses of hearing-science data.

https://connect.sonova.com/p1q15w788pc/?launcher=false&fcsContent=true&pbMode=normal

September 11, 2014

Ximena Bernal, Ph.D. (BIO)

Lack of auditory induction and tone-deaf females in túngara frogs, plus some preliminary data on frog-biting midge hearing to whet your appetite

This talk presents two studies that investigate auditory induction and the role of harmonically related frequencies in túngara frogs (Engystomops pustulosus). In the first study, we examined auditory induction in this species, whose advertisement call is naturally continuous and thus similar to the tonal sweeps used in human psychophysical studies of auditory induction. We tested female frogs using a set of synthetic stimuli with silent gaps filled with band-limited, spectrum-level-matched noise. Our results showed a lack of auditory induction in this species. Combined with previous research, these findings suggest that anuran receivers have evolved alternative mechanisms to cope with interrupted or masked acoustic stimulation. In the second study, we tested the response of the frogs to variation in the frequency ratios of their harmonically structured mating call to determine whether this vertebrate, which lacks a cochlea, is preferentially attracted to harmonic consonance. The ratio of frequencies present in acoustic stimuli did not influence female response. Instead, the amount of inner ear stimulation explained female preference behavior. We conclude that the harmonic relationships that characterize the vocalizations of these frogs did not evolve in response to a preference for consonant sounds. Finally, I present preliminary data on the hearing organs of frog-biting midges (Corethrella spp.), a group of flies specialized in eavesdropping on anuran mating calls.

September 18, 2014

Brandon Coventry (PhD student, BME, Bartlett Lab)

The Use of Biophysically Inspired Particle Swarm Social Networks in Understanding Single Neuron Dynamics in the Inferior Colliculus

The inferior colliculus (IC) is a major integrative center in the auditory system, receiving excitatory input from the cochlear nucleus and superior olivary complex as well as inhibitory input from the lateral lemniscus and superior paraolivary nucleus. Complex IC neural responses arise from fine tuning of the balance between excitation and inhibition. When this balance is upset, as by the marked reduction in inhibition seen in aging, IC responses become compromised. A major obstacle to understanding how normal and pathological responses are generated is estimating the strength and tuning of the excitatory and inhibitory inputs that are integrated to create IC output firing. To this end, a biophysically accurate IC model was used to recreate IC input-output firing and explore input integration properties. A new social network modeling visual cortical winner-take-all coding was developed and adapted to particle swarm optimization in order to recreate IC frequency tuning responses. This talk will present preliminary data on recreating IC frequency tuning responses in young and aged ICs using particle swarm optimization, and discuss the general use of social networks and particle swarm optimization to solve inverse problems in neuroscience.
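To make the optimization step concrete, here is a minimal sketch of textbook global-best particle swarm optimization (PSO) applied to a toy inverse problem. It is not the winner-take-all social network described in the talk: the two-Gaussian excitation/inhibition tuning model, the frequency grid, the "true" gains, and the parameters `w`, `c1`, `c2` are all hypothetical choices made for illustration.

```python
import math
import random


def pso_minimize(cost, bounds, n_particles=30, n_iters=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize cost(x) inside a box using global-best particle swarm optimization.

    bounds: list of (low, high) pairs, one per parameter.
    w: inertia weight; c1 / c2: pull toward each particle's own best
    position and toward the swarm's best position, respectively.
    """
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Move the particle, then clamp it back into the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
    return gbest, gbest_cost


# Toy inverse problem (hypothetical): a unit's tuning curve is modeled as a
# narrow excitatory Gaussian minus a broader inhibitory Gaussian around CF.
FREQS_KHZ = [0.5 * k for k in range(1, 21)]


def tuning(params, cf=5.0):
    g_exc, g_inh = params
    return [g_exc * math.exp(-((f - cf) / 1.0) ** 2)
            - g_inh * math.exp(-((f - cf) / 3.0) ** 2) for f in FREQS_KHZ]


target = tuning([2.0, 0.8])  # "recorded" curve generated from known gains


def cost(params):
    return sum((a - b) ** 2 for a, b in zip(tuning(params), target))


best, best_cost = pso_minimize(cost, [(0.0, 5.0), (0.0, 5.0)])
print(best, best_cost)  # recovered gains should land close to [2.0, 0.8]
```

Swapping in a different cost, for example the mismatch between a biophysical model's output and recorded responses, leaves the optimizer unchanged; only `cost` and `bounds` change.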

September 25, 2014

Kaidi Zhang (PhD student, BIO, Fekete Lab)

Expression and misexpression of the miR-183 family in the developing hearing organ of the chicken

The miR-183 family is expressed in sensory cells of the inner ear and retina, and the precise level of its three miRNAs was previously found to be positively correlated with hair cell number in the zebrafish inner ear. In the mouse cochlea, the miR-183 family was shown to have dynamic expression gradients along the longitudinal axis and across the radial axis. Whether the miR-183 family is required for patterning hair cells located at different positions along and across the cochlea had not been determined. Here we show that the miR-183 family is expressed with both longitudinal and radial gradients in the hearing organ of the chicken, the basilar papilla (BP). However, we did not observe hair cell fate changes from basal (high-frequency) to apical (low-frequency) phenotypes, or from short to tall hair cells, after overexpressing the miR-183 family by Tol2 transposase-mediated stable transfection. Ectopic expression of the miR-183 family at the prosensory stage resulted in a higher percentage of hair cells midway along the BP. This suggests that higher levels of the miR-183 family biased progenitor cells toward a hair cell fate rather than the alternative supporting cell fate. These findings indicate that the miR-183 family can influence cell fate determination of progenitor cells, but may not be sufficient to instruct hair cells toward distinct phenotypic pathways.

October 2, 2014

Chandan Suresh (PhD student, SLHS, Krishnan Lab)

Cortical pitch response components show differential sensitivity to native and nonnative pitch contours

The aim of this study is to evaluate how nonspeech pitch contours of varying shape differentially influence the latency and amplitude of cortical pitch-specific response (CPR) components as a function of language experience. Stimuli included two time-varying contours, the high rising Mandarin Tone 2 (T2) and a linear rising ramp (Linear), and a steady-state contour (Flat). Both the latency and magnitude of CPR components were differentially modulated by (i) the overall trajectory of pitch contours (time-varying vs. steady-state), (ii) their pitch acceleration rates (changing vs. constant), and (iii) their linguistic status (lexical vs. nonlexical). T2 elicited larger amplitudes than Linear in both language groups, but the effect was larger for Chinese than for English listeners. At the right temporal electrode site, the magnitude of CPR components elicited by T2 was larger for Chinese than for English listeners. Using the CPR, we provide evidence for experience-dependent modulation of the processing of dynamic pitch contours at an early stage of sensory processing.

October 9, 2014

Jeff Gehlhausen (MD/PhD student (MSTP), Biochemistry and Molecular Biology, Clapp Lab-IUSOM [Heinz slot])

A mouse model of Neurofibromatosis Type 2 that acquires hearing loss and vestibular impairment

Neurofibromatosis type 2 (NF2) is an autosomal dominant genetic disorder resulting from germline mutations in the NF2 gene. Bilateral vestibular schwannomas, tumors on cranial nerve VIII, are pathognomonic for NF2 disease. Schwannomas also commonly develop in other cranial nerves, dorsal root ganglia, and peripheral nerves. These tumors are a major cause of morbidity and mortality, and medical therapies to treat them are limited. Animal models that accurately recapitulate the full anatomical spectrum of human NF2-related schwannomas, including the characteristic functional deficits in hearing and balance associated with cranial nerve VIII tumors, would allow systematic evaluation of experimental therapeutics prior to clinical use. Here, we present a genetically engineered NF2 mouse model generated through excision of the Nf2 gene driven by Cre expression under control of a tissue-restricted 3.9-kb Periostin promoter element. By 10 months of age, 100% of Postn-Cre; Nf2flox/flox mice develop spinal, peripheral, and cranial nerve tumors histologically identical to human schwannomas. In addition, the development of cranial nerve VIII tumors correlates with functional impairments in hearing and balance, as measured by auditory brainstem response and vestibular testing. Overall, the Postn-Cre; Nf2flox/flox tumor model provides a novel tool for future mechanistic and therapeutic studies of NF2-associated schwannomas.

October 16, 2014

Erica Hegland (PhD student, SLHS, Strickland Lab)

A tale of two gain reductions: The interaction of suppression and the medial olivocochlear reflex

Two-tone suppression, a nearly instantaneous reduction in cochlear gain and a by-product of the active process, has been extensively studied both physiologically and psychoacoustically. Some physiological data suggest that the medial olivocochlear reflex (MOCR), which reduces the gain of the active process in the cochlea, may also reduce suppression. This is counterintuitive: one might suppose that the two types of gain reduction would compound and result in even more gain reduction. The interaction of these two gain reduction mechanisms is complex and has been neither widely studied nor well understood. Functionally, these mechanisms are thought to perceptually reduce the level of background noise, helping speech stand out in noisy environments. Because of the complexity of this interaction, a model of the auditory periphery that includes the MOCR time course was used to investigate it systematically. The model was used to closely examine two-tone suppression at the level of the basilar membrane using suppressors lower in frequency than the signal tone. Results were compared with and without elicitation of the MOCR. Frequency components of the basilar membrane response at the characteristic frequency (CF) were separated to determine the responses produced by the signal and by the suppressor. Model results support the idea that, in some conditions, suppression is reduced when the MOCR is elicited. The interaction of these two mechanisms of gain reduction is complicated and varies with the level, frequency, and duration of the stimulus. This interaction of suppression and the MOCR creates a dynamic system in which gain changes according to fluctuations in the signal. For people with mild hearing loss or presbyacusis, who do not show effects of suppression, these results may provide further insight into why speech understanding in noise is so severely impaired even before auditory thresholds are greatly elevated.

October 23, 2014

Ed Bartlett, PhD (BIO/BME)

Multi-Level Analysis of Age-Related Declines in Auditory Temporal Processing

Hearing thresholds and wave amplitudes measured using auditory brainstem responses (ABRs) to brief sounds are the predominant clinical measures used to objectively assess auditory function. However, frequency-following responses (FFRs) to tonal carriers and to the modulation envelope (envelope-following responses, or EFRs) of longer, spectro-temporally modulated stimuli are rapidly gaining prominence as measures of complex sound processing in the brainstem and midbrain. Despite numerous studies reporting changes in hearing thresholds, ABR wave amplitudes, FFRs, and EFRs under neurodegenerative conditions, including aging, the relationships between these metrics are not clearly understood. In this study, the relationships between ABR thresholds, ABR wave amplitudes, and EFRs are explored in a rodent model of aging. ABRs to broadband click stimuli and EFRs to sinusoidally amplitude-modulated noise carriers were measured in young (3–6 months) and aged (22–25 months) Fischer-344 rats. ABR thresholds, the amplitudes of the different ABR waves, and the phase-locking amplitudes of EFRs were calculated. Age-related differences were observed in all these measures, primarily as increases in ABR thresholds and decreases in ABR wave amplitudes and EFR phase-locking capacity. No correlations were observed between ABR thresholds and ABR wave amplitudes. Significant correlations between EFR amplitudes and ABR wave amplitudes were observed across a range of modulation frequencies in young animals, but not in aged animals. The aged click ABR amplitudes were lower than would be predicted by a linear regression model of the young, suggesting altered gain mechanisms in the relationship between ABRs and FFRs with age.
These results suggest that ABR thresholds, ABR wave amplitudes, and EFRs measure complementary aspects of overlapping neurophysiological processes, and that the relationships between these measurements change asymmetrically with age. Hence, measuring all three metrics provides a more complete assessment of auditory function, especially under pathological conditions such as aging.

October 30, 2014

NO MEETING – ASA in Indy

November 6, 2014

Vidhya Munnamalai, PhD (BIO, Fekete lab)

Temporal manipulation of the Wnt pathway influences radial patterning in the developing mouse cochlea

There is growing interest in Wnts for their potential to regenerate sensory cells in a damaged cochlea. These regeneration studies will be informed by a better understanding of how Wnt-mediated gene regulatory networks function during cochlear development. By activating the Wnt pathway in a temporal manner, we can tease apart the immediate Wnt target genes from the secondary genes involved in other signaling pathways, as well as identify potential feedback and feed-forward loops.

We temporally manipulated the pathway in vitro in E12.5 murine cochleas by adding CHIR on different days and analyzed effects on cell fate and patterning after the 6th day. The drug was washed out the following day and replaced with DMSO control media. For all other days, cochleas were kept in DMSO control media. Cochleas were immunolabeled for Sox2, Prox1 and Myo6 to monitor the status of sensory formation. We performed RT-qPCR and in-situ hybridization (ISH) to assess changes in gene expression levels and expression patterns.

Profoundly different phenotypes were observed depending on the timing of Wnt activation, suggesting that the response to Wnt activation depends on developmental stage. Our data suggest that precise Wnt signaling activity regulates the specification and size of the medial (IHC) domain before it influences the lateral (OHC) domain. The changes in the appearance and size of sensory subdomains upon Wnt activation hint at the different genes important for specifying various cell types or domains.

November 13, 2014

Ann Hickox, PhD (SLHS, Heinz lab)

Cochlear recovery of speech-envelope cues in noise-damaged auditory-nerve responses

Listeners with hearing impairment have difficulties in speech perception that extend beyond the issue of reduced audibility of sound. Psychophysical evidence suggests that hearing-impaired listeners have a reduced ability to use rapidly varying "temporal fine structure" (TFS) cues of a target signal compared to normal-hearing individuals. Acoustic signals can be decomposed into TFS and more slowly varying "temporal envelope" (ENV) cues; however, cochlear filtering of acoustic, true-TFS information produces a neural "recovered" envelope that listeners may use to their advantage. Broadened cochlear filtering, which typically accompanies sensorineural hearing loss, is predicted to impair encoding of not only true-TFS but also recovered-ENV information. Here we test this prediction in a chinchilla model of noise-induced hearing loss. Auditory nerve responses to chimaeric speech (TFS from speech; ENV from noise) were analyzed using neural cross-correlation coefficients (Heinz and Swaminathan, 2009) representing the degree of speech-TFS and recovered-speech-ENV extracted from this modified speech signal. Overall, noise-induced cochlear damage resulted in significantly broadened tuning, yet modest, if any, change in true-TFS or recovered-ENV coding. These results support neither of the putative degradations primarily hypothesized to account for impaired TFS perception by hearing-impaired listeners, and thus suggest that simple interpretations of TFS speech studies may not be appropriate.

November 20, 2014

Kelly Ronald (BIO, Lucas lab)

The Role of Multimodal Sensory Reception in Female Mating Decisions

During courtship, male signalers often convey information to female receivers in multimodal courtship displays. While much is known about how male signals vary, variation in how females process these multimodal signals is relatively unexplored. We suggest that there is a critical, albeit undeveloped, link between multimodal sensory reception and individual variation in mate choice. How is sensory processing related across different sensory modalities? Does the correlation across sensory modalities affect a female’s mate-choice decisions? To answer these questions, we conducted mate preference tests and examined auditory and visual evoked potentials in female brown-headed cowbirds (Molothrus ater). This is the first study able to relate variation in mate choice to variation in multimodal sensory processing. By understanding the potential for covariation between sensory modalities and its influence on mate choice, we can begin to uncover the physiological mechanisms behind individual variation in mate choice.

November 27, 2014

THANKSGIVING

December 4, 2014

Varsha Hariram, AuD (SLHS, Alexander lab)

Motivation and Development of a Neural-Scaled Entropy Model for Perception of Frequency-Compressed Speech

Signal processing schemes used in hearing aids, such as nonlinear frequency compression (NFC), recode speech information by synthesizing high-frequency information in lower frequency regions. Perceptual studies have shown that, depending on the dominant speech sound, both where compression occurs and the amount of compression can significantly affect perception. Very little is understood about how frequency-lowered information is encoded by the auditory periphery, and existing measures of speech perception cannot adequately explain the perceptual results. We have developed a measure that is sensitive to information in the altered speech signal in an attempt to predict optimal hearing aid settings for individual hearing losses. Inspired by the Cochlear-Scaled Entropy (CSE) model [Stilp et al., 2010, J. Acoust. Soc. Am., 2112-2126], Neural-Scaled Entropy (NSE) examines the effects of frequency-lowered speech at the level of the inner hair cell synapse of an auditory nerve model [Zilany et al. 2013, Assoc. Res. Otolaryngol.]. NSE quantifies the information available in speech as the degree to which the pattern of neural firing across frequency changes relative to its past history (entropy). Nonsense syllables with different NFC parameters were processed in noise. Results from the NSE and CSE models are compared with perceptual data across NFC parameters as well as across different vowel-defining parameters and consonant features. We also explore various parameters for optimizing the predictive value of NSE and CSE.
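The idea shared by CSE and NSE, scoring information by how much the across-frequency pattern changes from one moment to the next, can be sketched roughly as follows. This is a simplified illustration, not the published CSE or NSE procedure: the frame representation, the use of plain Euclidean distance between successive frames, and the boxcar window length are assumptions, and `spectral_change` and `summed_change` are hypothetical names. `math.dist` requires Python 3.8 or later.

```python
import math


def spectral_change(frames):
    """Euclidean distance between each pair of successive frames.

    frames: list of equal-length lists, one per time slice, each holding
    activity across frequency channels (for example filter-bank energies or
    model firing rates across CF).
    """
    return [math.dist(prev, cur) for prev, cur in zip(frames, frames[1:])]


def summed_change(frames, window):
    """Sum successive-frame distances over a sliding boxcar of `window` distances."""
    d = spectral_change(frames)
    return [sum(d[i:i + window]) for i in range(len(d) - window + 1)]


# Hypothetical 3-channel pattern: steady, then an abrupt change, then steady again.
frames = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [3.0, 4.0, 0.0], [3.0, 4.0, 0.0]]
print(spectral_change(frames))   # [0.0, 5.0, 0.0]
print(summed_change(frames, 2))  # [5.0, 5.0]
```

Steady stretches contribute nothing; only the transition between frames 2 and 3 registers as "new" information, which is the intuition behind using spectral (or neural) change as a proxy for information content.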

December 11, 2014

Chris Soverns (PULSe, Bartlett lab)

Consonant Discrimination in the Rat Inferior Colliculus

Speech perception relies on our ability to discriminate between phonemes using specific acoustic cues. In this continuing study, we recorded extracellular spiking activity in the inferior colliculus (IC) of anesthetized rats in response to the human speech stimuli /ba/ and /pa/, which differ only in voice onset time (VOT). Across neurons, we have found diverse representations of the same stimulus, suggesting sparse and non-redundant perceptual coding. Distance measures of response timing across stimuli suggest that representations are not isomorphic to stimulus features across the population. This contrasts with the isomorphism seen in earlier auditory nuclei but is consistent with a temporal-to-rate code conversion that begins in the IC and continues in the auditory thalamus and cortex. However, the latencies and clustering of the most robust response features progress as a function of VOT. As this work continues, we are refining classifiers of VOT-specific responses from the data, and we hope to demonstrate effective discrimination of the stimuli based on response features at the single-neuron and population levels.

December 18-January 8th – WINTER BREAK

January 15, 2015

Alex Francis, PhD (Francis lab)

Psychophysiological measures of listening effort

In this talk I’ll present an interim report on progress in the development of a new line of research in my lab, applying physiological measures of autonomic nervous system response to investigate processing demands during speech perception under adverse or sub-optimal conditions.

January 22, 2015

Dave Axe (Heinz lab)

The effects of carboplatin-induced ototoxicity on temporal coding in the auditory nerve

The effects of sensorineural hearing loss (SNHL) on temporal coding within the auditory nerve (AN) are often discussed in terms of threshold shift and changes in tuning. In most cases, these changes are attributed to dysfunction of outer hair cells (OHCs). However, in many pathologies, including noise-induced hearing loss (NIHL), inner hair cells (IHCs) are disrupted as well, which may affect temporal coding. The chemotherapy drug carboplatin, administered in chinchillas, has been shown to selectively damage IHCs while leaving OHCs unaffected across much of the cochlear partition. Here, we used this model to evaluate effects of IHC dysfunction on temporal coding without the confound of broadened tuning. Distortion-product otoacoustic emissions (DPOAEs) and auditory brainstem responses (ABRs) were measured before and at least three weeks after a single dose of carboplatin to characterize the degree and configuration of hearing loss and to confirm the lack of effect on OHC function. Single-unit spike-train responses were recorded from barbiturate-anesthetized chinchillas and compared between the normal-hearing control group and the carboplatin-exposed group. Tuning curves, rate-level functions, and post-stimulus time histograms were used to characterize all auditory-nerve fibers. Quantitative analyses of the recorded spike trains evaluated temporal coding in terms of both fine structure and envelope. Temporal responses to sinusoidally amplitude-modulated (SAM) tones of varying modulation frequency, modulation depth, and background-noise level were measured. We estimated the ability of each nerve fiber to detect and discriminate between levels of amplitude modulation in quiet and in varying levels of background noise. Consistent with our ABR and DPOAE measurements, AN-fiber thresholds were only slightly elevated in the carboplatin group, and there was no significant change in tuning-curve bandwidth.
Rate-level functions demonstrated an average reduction in both spontaneous and stimulus-driven firing rates, such that the average slope of the rate-level functions did not change. In general, synchrony to the envelope and temporal fine structure of the stimuli was not significantly affected. However, estimated modulation detection thresholds based on phase locking were worse in the carboplatin group than in the normal-hearing control group. This result appears to be due largely to the reduced number of spikes in the carboplatin-group responses, which decreases the statistical detectability of the modulation. These results suggest that, although IHC damage does not degrade cochlear tuning, it often reduces the number of spikes available in the AN response and thus can degrade the representation accuracy of the temporal structure of perceptually relevant complex sounds.
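The statistical point above, that fewer spikes make the same degree of phase locking harder to detect, can be illustrated with a short sketch. It assumes vector strength and the Rayleigh test as the phase-locking metric (a common choice, not necessarily the analysis used in this study) and uses the large-N approximation p ≈ exp(-N·VS²); `make_train` builds a hypothetical, perfectly regular spike train rather than any recorded data.

```python
import cmath
import math


def vector_strength(spike_times, freq_hz):
    """Vector strength (0 to 1) of spike times (s) relative to a periodic stimulus."""
    if not spike_times:
        return 0.0
    phasor = sum(cmath.exp(2j * math.pi * freq_hz * t) for t in spike_times)
    return abs(phasor) / len(spike_times)


def rayleigh_p(spike_times, freq_hz):
    """Large-N approximation of the Rayleigh-test p-value: p ~ exp(-N * VS**2)."""
    n = len(spike_times)
    vs = vector_strength(spike_times, freq_hz)
    return math.exp(-n * vs ** 2)


def make_train(n_pairs, freq_hz=100.0):
    """Hypothetical spike train: each cycle has one spike at phase 0 and one at 90 degrees."""
    times = []
    for k in range(n_pairs):
        times += [k / freq_hz, (k + 0.25) / freq_hz]
    return times


few, many = make_train(2), make_train(20)  # 4 spikes vs. 40 spikes
vs_few, vs_many = vector_strength(few, 100.0), vector_strength(many, 100.0)
p_few, p_many = rayleigh_p(few, 100.0), rayleigh_p(many, 100.0)
print(vs_few, vs_many)  # both about 0.707: identical phase locking
print(p_few, p_many)    # about 0.135 (not significant) vs. about 2e-9
```

Both trains are equally phase-locked, yet only the 40-spike train yields a significant Rayleigh statistic, because the test statistic scales with the number of spikes.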

January 29, 2015

Katie Scott (Fekete lab)

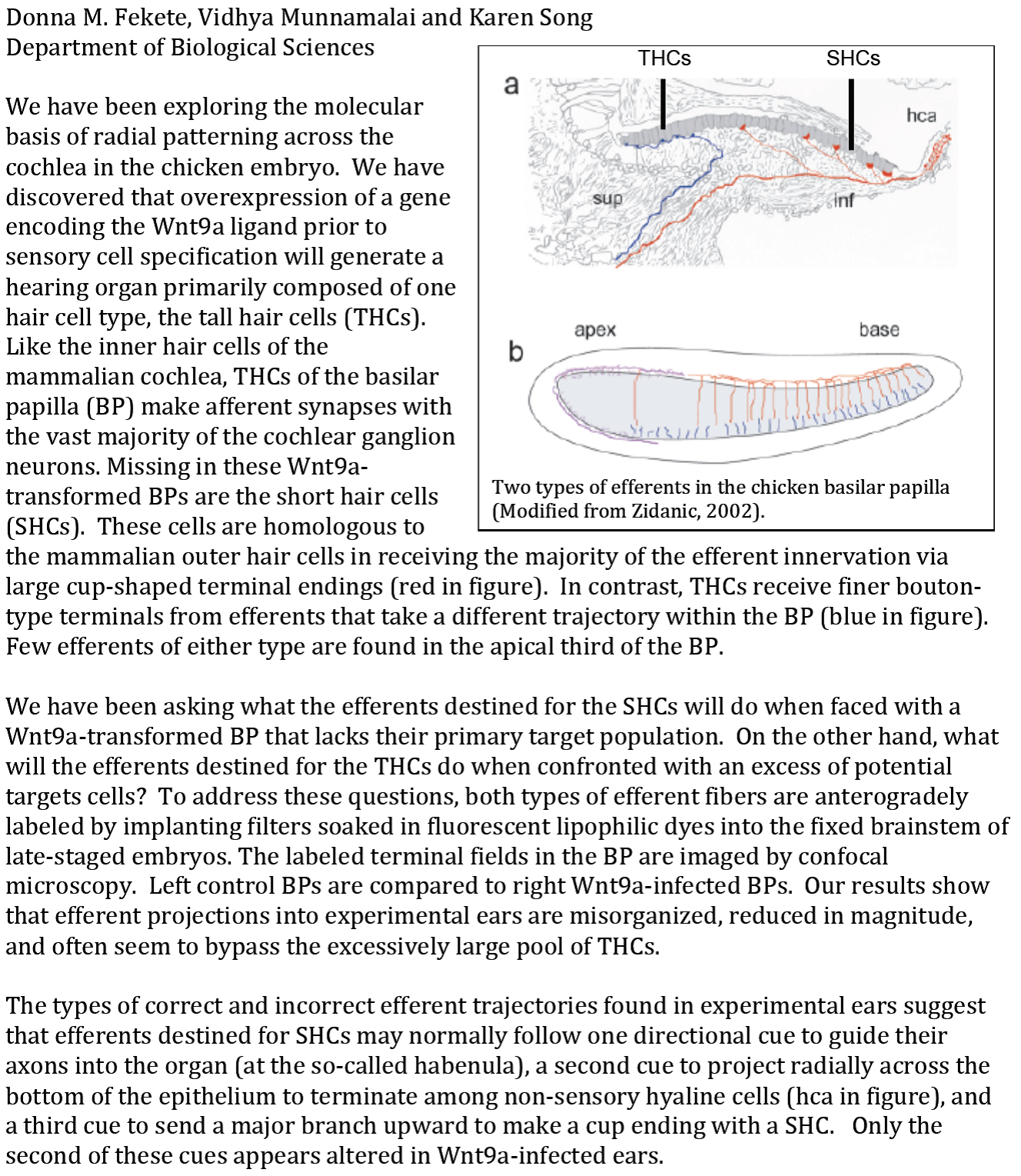

The Role of Wnt9a in the Development of the Chicken Basilar Papilla

The basilar papilla (BP) is the auditory organ of the chicken and is the equivalent of the organ of Corti in the mammalian cochlea. Sensory hair cells in the BP are classified into two types based on morphology: tall and short. Tall hair cells are located on the neural side of the BP and are innervated primarily by afferent neurons (the neural-side identity). Short hair cells are located on the abneural side of the BP and are innervated primarily by efferent neurons (the abneural-side identity). Early in embryonic development, asymmetric expression of Wnt9a mRNA suggests that there is a Wnt9a protein gradient across the sensory organ that is highest on the neural side. Overexpression of Wnt9a results in the development of the neural-side identity across the entire width of the BP. We hypothesize that high levels of Wnt9a are instructive for the neural-side identity. This raises two intriguing possibilities for patterning on the abneural side, which is exposed to low Wnt9a levels: (1) the abneural-side identity is the default phenotype and does not depend on Wnt9a, or (2) low levels of Wnt9a are instructive for the abneural-side identity. To distinguish between these possibilities, we have designed experiments to knock down Wnt9a transcripts in developing BPs. We aim to deliver short interfering RNAs against Wnt9a using a retroviral vector. Immunohistochemistry has shown effective infection in BPs injected with our gene delivery vector. In situ hybridization using an RNA probe to detect Wnt9a transcripts has shown weak knockdown of Wnt9a. To further test the effectiveness of our siWnt9a, we will infect chicken fibroblast (DF-1) cells and use qPCR to detect changes in Wnt9a transcripts.

February 5, 2015

Xin Luo

Performance Evaluation of a Cochlear Implant Processing Strategy with Partial Tripolar Mode Current Steering

In cochlear implants (CIs), partial tripolar (pTP) stimuli return part of the current to the two flanking electrodes (controlled by σ) to generate narrower excitation patterns than monopolar (MP) stimuli. To create additional spectral channels, current steering may be incorporated into focused pTP stimuli by varying the proportion of current returned to the basal and apical flanking electrodes (controlled by α and 1-α, respectively). This study compared the performance of experimental CI processing strategies (MP, pTP, and pTP-steering) to evaluate the benefits of current focusing and current steering for speech and music perception. Five post-lingually deafened adult CI users were tested. Experimental strategies were matched in the number of main electrodes, phase duration, pulse rate, and loudness, although they used fewer electrodes and lower pulse rates than clinical strategies. In the pTP-steering strategy, two steering ranges were tested, with α from 0.4 to 0.6 and from 0.2 to 0.8. Experimental and clinical strategies were tested in random order on psychoacoustic tests (pitch ranking and spectral ripple discrimination), speech tests (speech perception in noise and vocal emotion recognition), and music tests (melodic contour identification). Experimental strategies performed similarly to clinical strategies across outcome measures. Compared to the MP strategy, pTP and pTP-steering strategies significantly improved CI subjects’ pitch-ranking ability. However, improvements with pTP and pTP-steering over MP in the other tasks were not significant. Future studies will test more CI users to explore inter-subject variability and increase statistical power. A longer trial period with the experimental strategies may be necessary for CI users to achieve significantly better speech and music perception.
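The σ and α bookkeeping described above can be made concrete with a short sketch. It assumes one common convention: the two flanking electrodes together return a fraction σ of the main-electrode current, split α (basal) and 1-α (apical), and the remaining 1-σ returns to an extracochlear ground, so σ = 0 reduces to MP and σ = 1 to full tripolar. The function name and dictionary keys are hypothetical, and only the instantaneous amplitudes of one pulse phase are shown.

```python
def ptp_currents(main_current, sigma, alpha=0.5):
    """Per-electrode current amplitudes for one phase of a pTP pulse with steering.

    main_current: current on the main electrode (e.g. in microamps).
    sigma: fraction of the current returned through the two flanking electrodes.
    alpha: share of that returned current carried by the basal flanker
           (the apical flanker carries 1 - alpha); alpha = 0.5 is unsteered pTP.
    """
    if not (0.0 <= sigma <= 1.0 and 0.0 <= alpha <= 1.0):
        raise ValueError("sigma and alpha must lie in [0, 1]")
    return {
        "main": main_current,
        "basal": -alpha * sigma * main_current,
        "apical": -(1.0 - alpha) * sigma * main_current,
        # Whatever the flankers do not return goes to the extracochlear ground.
        "extracochlear": -(1.0 - sigma) * main_current,
    }


# Example: sigma = 0.8 with current steered toward the basal flanker (alpha = 0.6).
print(ptp_currents(100.0, sigma=0.8, alpha=0.6))
# Monopolar limit: everything returns through the extracochlear ground.
print(ptp_currents(100.0, sigma=0.0))
```

Sweeping α (for instance across the 0.4 to 0.6 or 0.2 to 0.8 ranges mentioned above) shifts return current between the flankers while the total always balances the main electrode.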

February 12, 2015

ARO practice/feedback

February 19, 2015

Prof. Chris Plack, University of Manchester (UK)

Hidden Hearing Loss due to Noise Exposure and Aging

Dramatic results from animal experiments suggest that moderate noise exposure can cause substantial permanent damage to the auditory nerve (cochlear neuropathy) that is not detectable by standard hearing tests. In humans, such damage has been described as “hidden hearing loss,” and may be a cause of speech perception difficulties and tinnitus. In the talk I will describe the rationale and research plan for our project, which aims to determine the physiological bases and perceptual consequences of hidden hearing loss in humans. I will present pilot data based on the “frequency-following response,” an electrophysiological measure of neural temporal coding. Animal experiments also suggest that aging is a cause of cochlear neuropathy, even in the absence of significant noise exposure. This may contribute to the temporal coding deficits experienced by the elderly. I will present electrophysiological and behavioral data from our group suggesting that aging affects both the neural coding of pitch and the perception of musical harmony.

February 26, 2015

ARO recap

March 5, 2015

Ed Bartlett, PhD (BIO/BME)

Age-related changes in the transformation of responses to amplitude modulated sounds in the inferior colliculus

Age-related hearing loss includes peripheral threshold loss caused by loss of OHCs, synaptic loss in the form of lost ribbon synapses, and central processing changes due to loss of inhibitory neurotransmitters. Studying central processing deficits is hindered by the difficulty of isolating them from inherited peripheral deficits.

Multiple studies have shown evidence of impaired neural coding of rapid temporal modulations (e.g., fine structure) alongside enhanced coding of slower modulations (e.g., envelope). We have previously shown that synaptic drive, measured indirectly by auditory brainstem responses (ABRs), is not correlated with temporal processing measured by envelope-following responses (EFRs) in aged animals [5]. Statistical modeling also suggested the presence of compensatory mechanisms that increase responses, resulting in larger EFRs relative to the ABRs.

Here we look for physiological evidence of these compensatory mechanisms by studying the transformations of responses to sinusoidally amplitude-modulated noise (nAM) in the inferior colliculus (IC). By measuring local field potentials (LFPs) and single-unit responses simultaneously from the same site, we can compare the synaptic input and the neuronal spiking output at each site, and better separate mechanistic changes generated in the IC from those inherited from lower nuclei in the auditory pathway.

March 12, 2015

Kristina Milvae, Au.D. (SLHS)

Psychoacoustic Measures of Cochlear Gain Reduction at Speech-Relevant Frequencies

Difficulty understanding speech in noisy environments is a common complaint of listeners with hearing loss. One physiological mechanism hypothesized to improve speech understanding in noise is the medial olivocochlear reflex (MOCR), which reduces cochlear gain in response to the acoustic environment. When gain is already reduced, as in cochlear hearing impairment, the MOCR may be less effective. In psychoacoustic studies, evidence of ipsilateral cochlear gain reduction has been measured predominantly at 4 kHz. However, other frequency regions are more ecologically relevant and likely play a larger role in speech-in-noise perception, so gain reduction at lower frequencies needs further exploration. In this experiment, forward and simultaneous masking techniques are used to measure changes in cochlear gain with preceding acoustic stimulation. These measurements were made at 2 and 4 kHz for listeners with normal hearing. The amount of gain change is compared to subject performance on a speech-in-noise task. It is hypothesized that the magnitude of gain change (estimated MOCR strength) will be positively correlated with speech-in-noise performance. Surprising results and implications will be discussed.

March 26, 2015

Bailey Yeager and Jennifer Simpson, Au.D. (SLHS)

Integrative Audiology Grand Rounds

Next semester, the Purdue Speech, Language, and Hearing Sciences program will launch an "Integrative Audiology Grand Rounds" course. This week in "Hearing Seminars," a clinical audiology case will be shared. The case concerns a teenage patient with septo-optic dysplasia and a unilateral hearing loss. The presentation will highlight the many components of patient care: clinical history, test results, a detailed description of the audiologic clinical test battery, and treatment recommendations.

April 9, 2015

Donna Fekete, Ph.D. (BIO)

Forlorn wallflowers at the country barn dance: what do outgrowing cochlear efferents do when they cannot find an appropriate hair cell partner?

April 16, 2015

Josh Alexander, Ph.D. (SLHS)

Information for Perception of Speech Distorted by Sensorineural Hearing Loss

April 23, 2015

Ed Bartlett, Ph.D. (BIO/BME)

Dynamic neural sensitivity adjustments of rat inferior colliculus neurons to spectral stimulus distributions

Stimulus-specific adaptation is the reduction of neural responses induced by stimulus repetition. However, stimulus-specific adaptation may be influenced by factors other than stimulus repetition. Recent recordings of responses from human auditory cortex suggest that stimulus-specific adaptation changes dynamically with the statistical context of stimulation. In the current study, we investigated whether such context effects are also present at an earlier stage of the auditory hierarchy. Spikes and local field potentials were recorded from inferior colliculus neurons of rats while tones were presented in oddball sequences with different statistical contexts. Stimulation consisted of two types of oddball sequences. Each consisted of a repeatedly presented tone (standard) and three rare spectral changes of different magnitudes (small, moderate, and large deviants). The critical manipulation was the relative probability of large spectral changes: in one context this probability was high (relative to all deviants), and in the other it was low. We observed larger responses to deviants than to standards, confirming previous reports of stronger response adaptation for frequently presented tones. Importantly, the statistical context in which tones were presented strongly modulated stimulus-specific adaptation. Physically and probabilistically identical stimuli (moderate deviants) elicited different response magnitudes in the two contexts. This is consistent with neural gain changes, and thus with neural sensitivity adjustments induced by the spectral range of the stimulus distribution. The data show that, already at the level of the inferior colliculus, stimulus-specific adaptation is dynamically altered by the statistical context in which stimuli are presented.

April 30, 2015

Michael Heinz, Ph.D. (SLHS/BME)

Neurophysiological effects of noise-induced hearing loss

Noise overexposure is known to cause sensorineural hearing loss (SNHL) through damage to sensory hair cells and subsequent cochlear neuronal loss. The same histopathological changes can result from other etiological factors (e.g., aging, ototoxic drugs). Audiologically, each is currently classified as SNHL, despite differences in etiology. Functionally, noise-induced hearing loss can differ significantly from other SNHL etiologies, but current audiological diagnostic tests do not reveal these differences. For example, noise-induced mechanical damage to stereocilia on inner and outer hair cells produces neurophysiological responses that complicate the common view of loudness recruitment. Mechanical effects of noise overexposure also appear to alter the tip-to-tail ratio of impaired auditory-nerve-fiber tuning curves. This degrades the cochlear tonotopic representation much more than a metabolic form of age-related hearing loss does. Even moderate noise exposure that produces only temporary threshold shifts can cause permanent loss of inner-hair-cell/auditory-nerve-fiber synapses. This synaptic loss reduces neural-coding redundancy for complex sounds and likely degrades listening in background noise. These pathophysiological results suggest that novel diagnostic measures are now needed to subdivide the single audiological category of SNHL. Such measures would allow better individual fitting of hearing aids to restore speech intelligibility in real-world environments. Supported by the NIH and the American Hearing Research Foundation.