Human-Technology Interaction

Gesture Recognition

Gestures provide a physical form of human-computer interaction (HCI) that supports unencumbered control and rehabilitative therapy. Unfortunately, gesture interfaces are typically designed for able-bodied individuals.

In our lab, we have designed a software engine that systematically converts standard gesture lexicons into gestures usable by individuals with upper extremity mobility impairments.

Related papers:

- Jiang, H., Duerstock, B., Wachs, J. (2016). User-Centered and Analytic-Based Approaches to Generate Usable Gestures for Individuals with Quadriplegia. IEEE Trans Human-Machine Systems, 46(3), 460-466.

- Jiang, H., Duerstock, B.S., Wachs, J.P. (2014). A Machine Vision-Based Gestural Interface for People with Upper Extremity Physical Impairments. IEEE Trans on Systems, Man, and Cybernetics: Systems, 44(5), 630-641.

- Jiang, H., Wachs, J.P., Duerstock, B.S. (2013). An Optimized Real-Time Hands Gesture Recognition Based Interface for Individuals with Upper-Level Spinal Cord Injuries. J Real-Time Image Processing, 1-14.

Assistive Robotics

Assistive robots have the potential to help persons with disabilities. However, persons with mobility impairments lack the number and precision of body effectors needed for the sophisticated control that most robot-assisted tasks require.

In our lab, we design technologies to assist individuals with mobility disabilities with daily living activities (DLA).

Related papers:

- Chen, K., Tang, S., Jiang, H., Wachs, J.P., Duerstock, B.S. (2014, Sept. 14). Practical Implications for the Design of Mobile Assistive Robots for Quadriplegics Using a Service Dog Model. In Proc. of IROS 2014 Workshop on Assistive Robotics for Individuals with Disabilities: HRI Issues and Beyond.

- Jiang, H., Zhang, T., Wachs, J., Duerstock, B.S. (2016). Enhanced Control of a Wheelchair-Mounted Robotic Manipulator Using 3-D Vision and Multimodal Interaction. Computer Vision and Image Understanding, 149, 21-31.

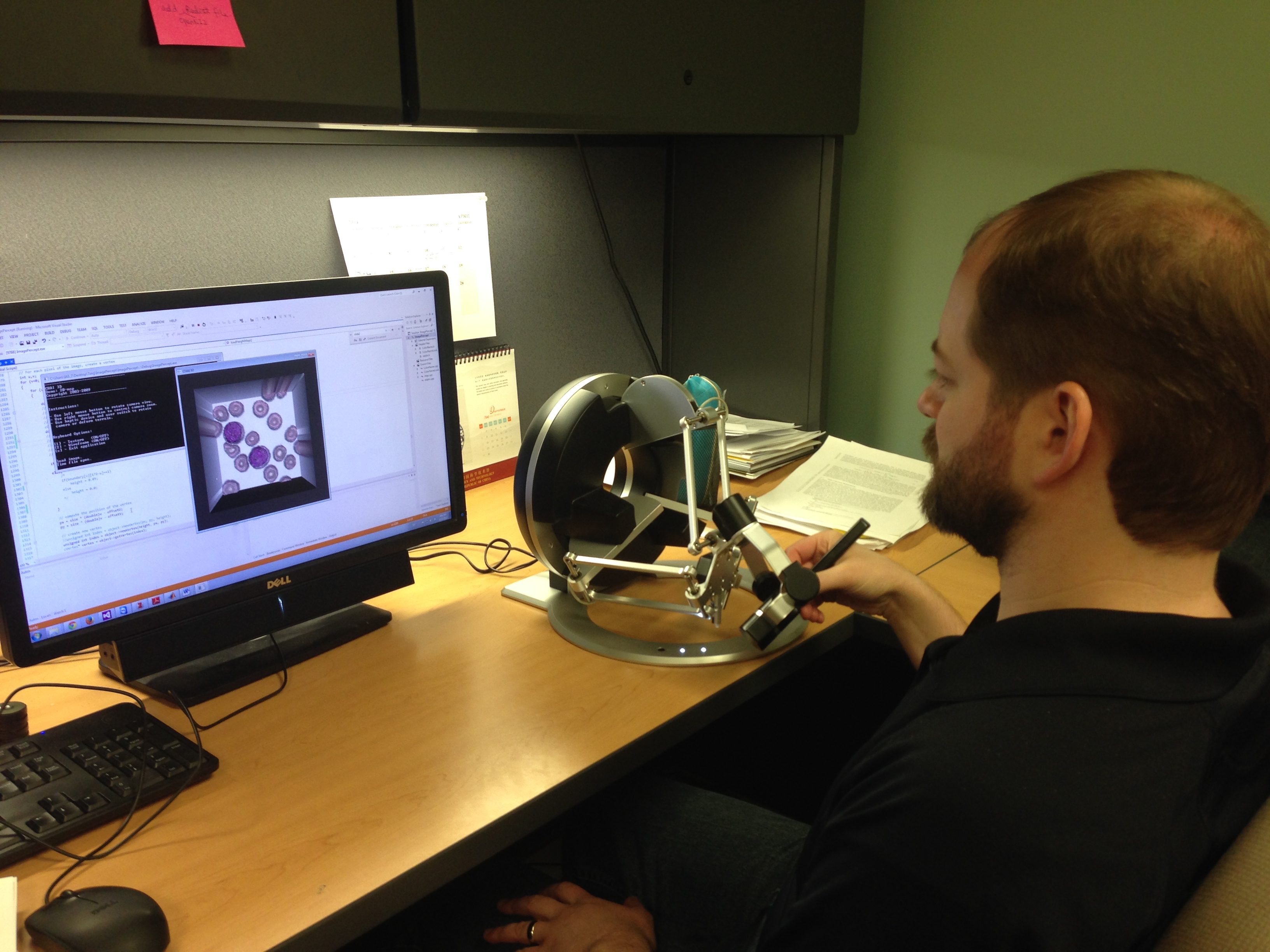

Multimodal Sensory Substitution

Multimodal sensory substitution enables blind or visually impaired individuals to perceive images through senses other than vision. Currently, it is challenging for blind or visually impaired people to interpret the real-time visual scientific data commonly generated during lab experimentation, such as when performing light microscopy or spectrometry or observing chemical reactions.

In our lab, we have developed a multimodal image perception system that substitutes visual information with other sensory modalities, such as tactile, auditory, and haptic feedback.

Related papers:

- Zhang, T., Williams, G.J., Duerstock, B.S., Wachs, J.P. (2014, Oct. 5-8). Multimodal Approach to Image Perception of Histology for the Blind or Visually Impaired. In Proc. of 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC2014).

- Zhang, T., Duerstock, B., Wachs, J. (2017). Multimodal Perception of Histological Images for Persons Who Are Blind or Visually Impaired. ACM Transactions on Accessible Computing (TACCESS), 9(3).

- Featured in New Scientist