Evaluation of an augmented reality platform for austere surgical telementoring: a randomized controlled crossover study in cricothyroidotomies |

||

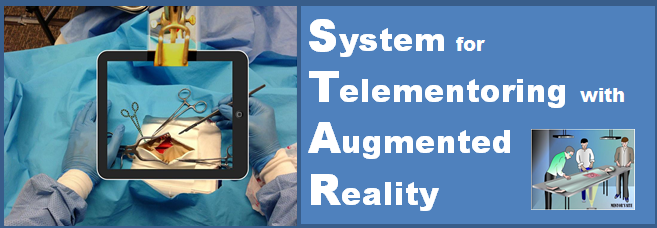

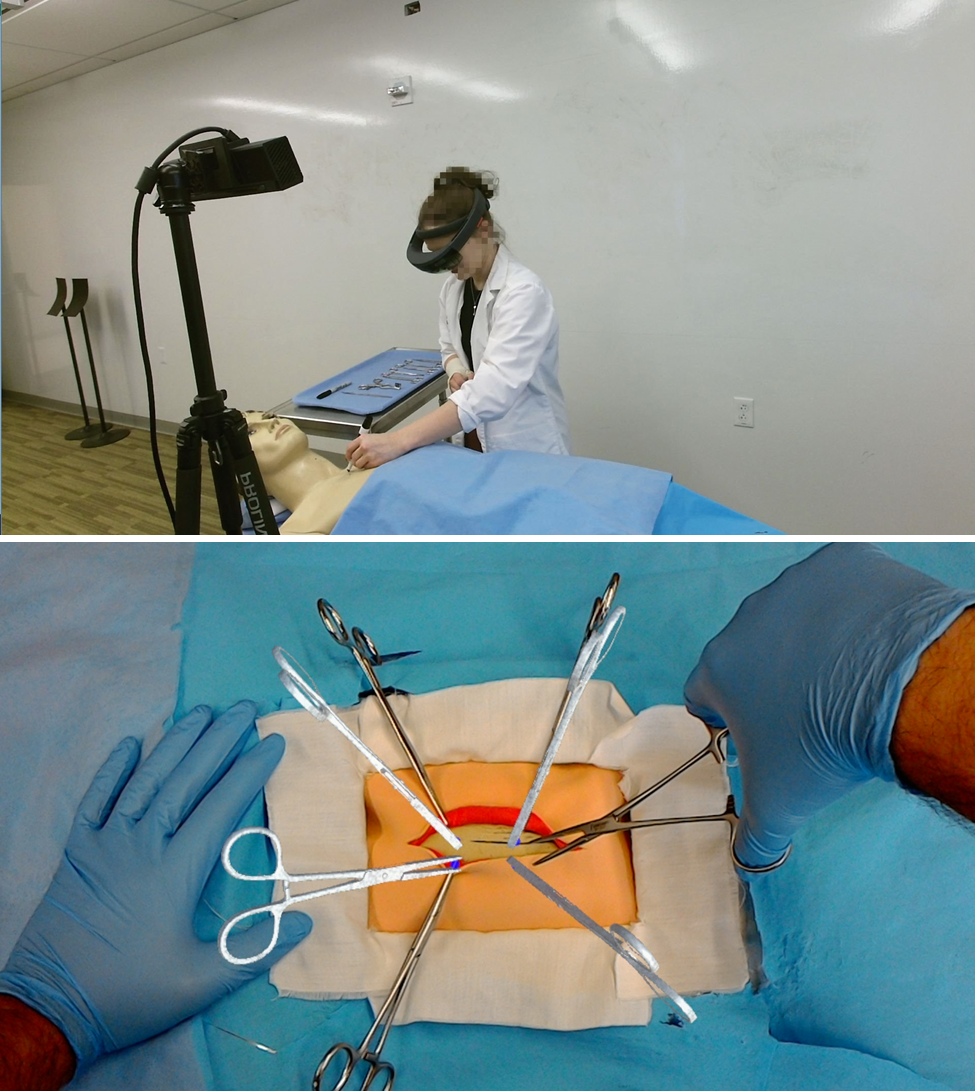

| Abstract: Telementoring platforms can help transfer surgical expertise remotely. However, most telementoring platforms are not designed to assist in austere, pre-hospital settings. This paper evaluates the System for Telementoring with Augmented Reality (STAR), a portable and self-contained telementoring platform based on an Augmented Reality Head-Mounted Display (ARHMD). The system is designed to assist in austere scenarios: a stabilized first-person view of the operating field is sent to a remote expert, who creates surgical instructions that a local first responder wearing the ARHMD can visualize as three-dimensional models projected onto the patient’s body. We tested the hypothesis that remote guidance with STAR leads to better performance of a surgical procedure than remote audio-only guidance. Remote expert surgeons guided first responders through training cricothyroidotomies in a simulated austere scenario, and on-site surgeons evaluated the participants using standardized evaluation tools. The evaluation covered completion time and technique performance of specific cricothyroidotomy steps. The analyses also considered the participants’ years of experience as first responders and their experience performing cricothyroidotomies. A linear mixed model analysis showed that using STAR was associated with higher procedural and non-procedural scores, and overall better performance. Additionally, a binary logistic regression analysis showed that using STAR was associated with safer and more successful executions of cricothyroidotomies. This work demonstrates that remote mentors can use STAR to provide first responders with guidance and surgical knowledge, and represents a first step towards the adoption of ARHMDs to convey clinical expertise remotely in austere scenarios. |  |

|

| Rojas-Muñoz E, Lin C, Sanchez-Tamayo N, Cabrera ME, Andersen D, Popescu V, Barragan JA, Zarzaur B, Murphy P, Anderson K, Douglas T, Griffis C, McKee J, Kirkpatrick A, Wachs J. Evaluation of an augmented reality platform for austere surgical telementoring: a randomized controlled crossover study in cricothyroidotomies. npj Digital Medicine. 2020; 3, 75 | Online PDF | |

How About the Mentor? Effective Workspace Visualization in AR Telementoring |

||

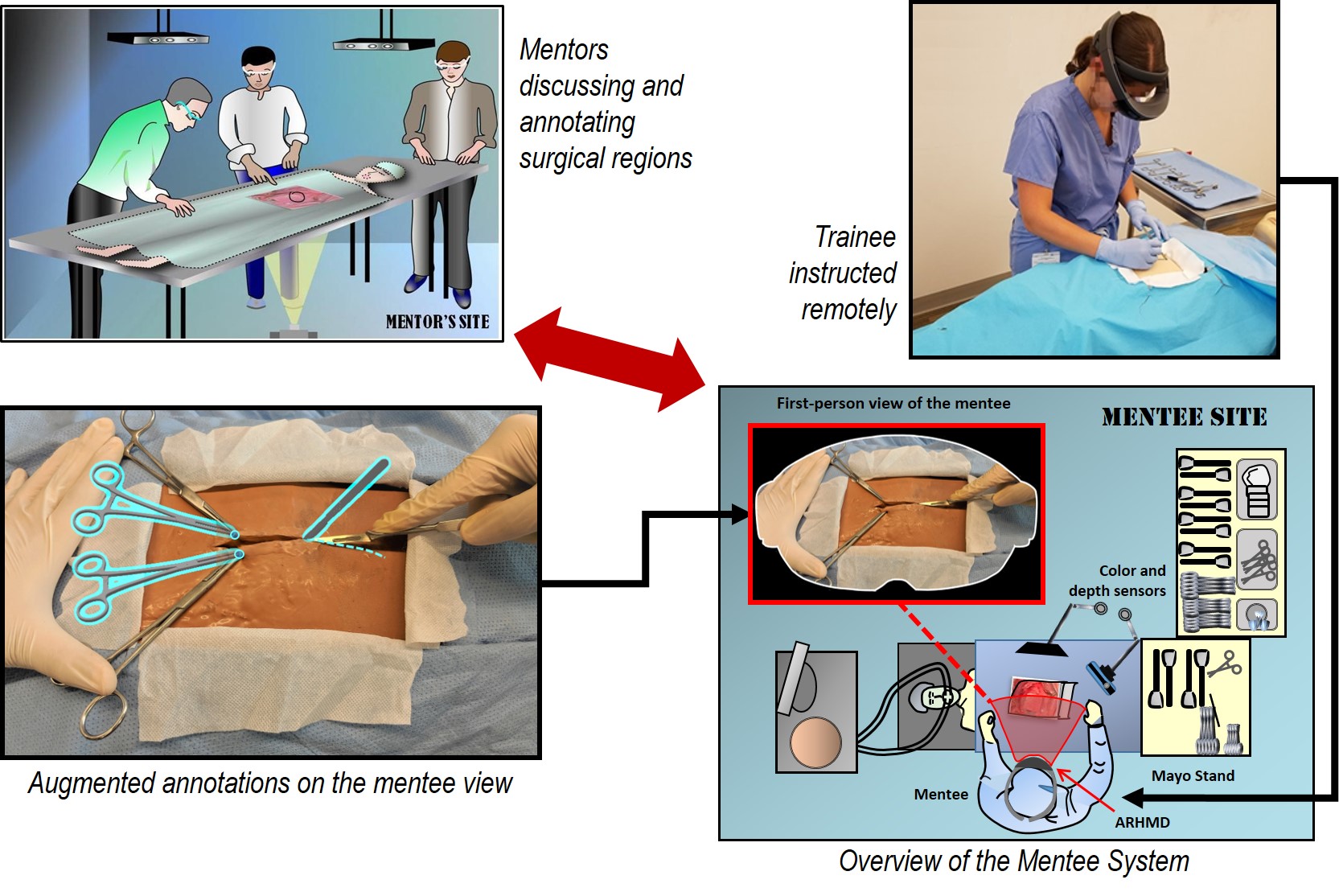

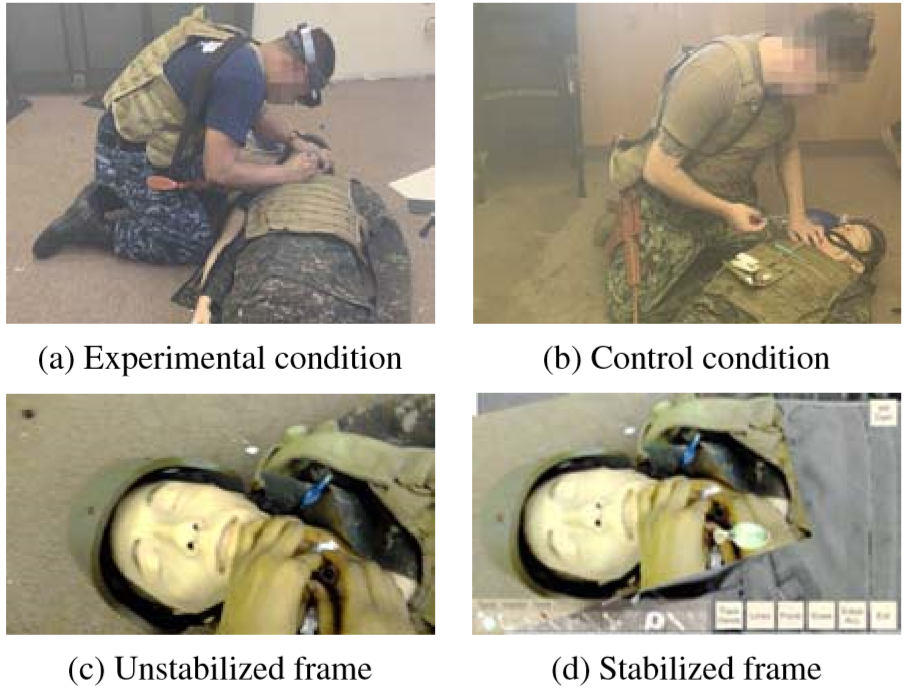

| Abstract: Augmented Reality (AR) benefits telementoring by enhancing the communication between the mentee and the remote mentor with mentor-authored graphical annotations that are directly integrated into the mentee’s view of the workspace. An important problem is conveying the workspace to the mentor effectively, such that they can provide adequate guidance. AR headsets now incorporate a front-facing video camera, which can be used to acquire the workspace. However, simply providing the mentor with this video acquired from the mentee’s first-person view is inadequate. As the mentee moves their head, the mentor’s visualization of the workspace changes frequently, unexpectedly, and substantially. This paper presents a method for robust high-level stabilization of a mentee’s first-person video to provide effective workspace visualization to a remote mentor. The visualization is stable, complete, up to date, continuous, distortion free, and rendered from the mentee’s typical viewpoint, as needed to best inform the mentor of the current state of the workspace. In one study, the stabilized visualization had significant advantages over unstabilized visualization, in the context of three number matching tasks. In a second study, stabilization showed good results, in the context of surgical telementoring, specifically for cricothyroidotomy training in austere settings. |  |

|

| Lin C, Rojas-Muñoz E, Cabrera ME, Sanchez-Tamayo N, Andersen D, Popescu V, Barragan JA, Zarzaur B, Murphy P, Anderson K, Douglas T, Griffis C, Wachs J. How About the Mentor? Effective Workspace Visualization in AR Telementoring. 27th IEEE Conference on Virtual Reality and 3D User Interfaces. 2020. | ||

The System for Telementoring with Augmented Reality (STAR): A Head-Mounted Display to Improve Surgical Coaching and Confidence in Remote Areas |

||

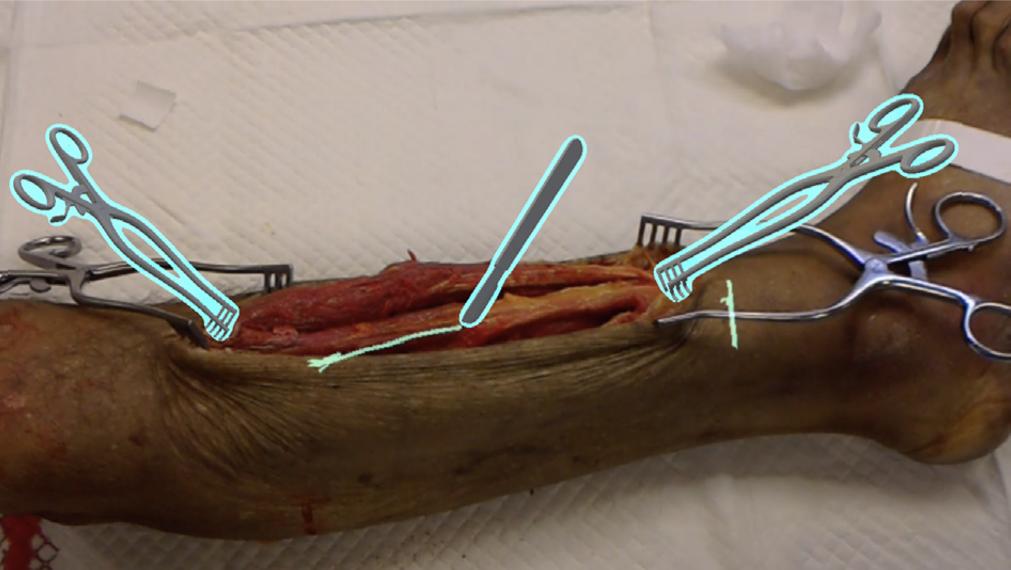

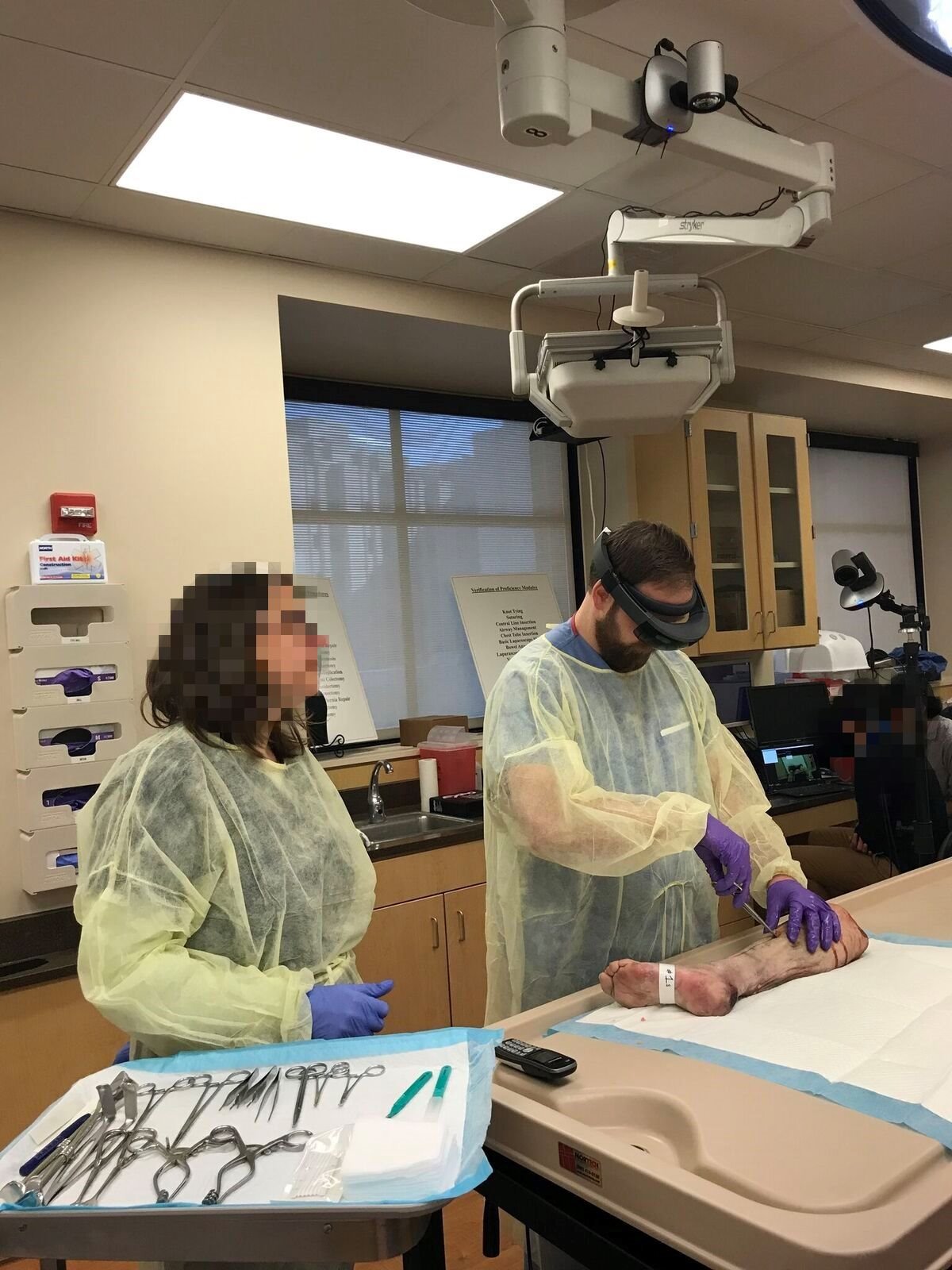

| Abstract: Background: The surgical workforce, particularly in rural regions, needs novel approaches to reinforce the skills and confidence of health practitioners. Although conventional telementoring systems have proven beneficial to address this gap, the benefits of augmented reality-based telementoring platforms in the coaching and confidence of medical personnel are yet to be evaluated. Methods: A total of 20 participants were guided by remote expert surgeons to perform leg fasciotomies on cadavers under one of two conditions: (1) telementoring (with our System for Telementoring with Augmented Reality) or (2) independently reviewing the procedure beforehand. Using the Individual Performance Score and the Weighted Individual Performance Score, two on-site, expert surgeons evaluated the participants. Postexperiment metrics included number of errors, procedure completion time, and self-reported confidence scores. A total of six objective measurements were obtained to describe the self-reported confidence scores and the overall quality of the coaching. Additional analyses were performed based on the participants’ expertise level. Results: Participants using the System for Telementoring with Augmented Reality received 10% greater Weighted Individual Performance Score (P < .03) and made 67% fewer errors (P < .04). Moreover, participants with lower surgical expertise that used the System for Telementoring with Augmented Reality received 17% greater Individual Performance Score (P < .04), 32% greater Weighted Individual Performance Score (P < .01) and made 92% fewer errors (P < .001). In addition, participants using the System for Telementoring with Augmented Reality reported 25% more confidence in all evaluated aspects (P < .03). On average, participants using the System for Telementoring with Augmented Reality received augmented reality guidance 19 times and received guidance for 47% of their total task completion time. 
Conclusion: Participants using the System for Telementoring with Augmented Reality performed leg fasciotomies with fewer errors and received better performance scores. In addition, participants using the System for Telementoring with Augmented Reality reported being more confident when performing fasciotomies under telementoring. Augmented Reality Head-Mounted Display based telementoring successfully provided confidence and coaching to medical personnel. |

|

|

| Rojas-Muñoz E, Cabrera ME, Lin C, Andersen D, Popescu V, Anderson K, Zarzaur B, Mullis B, Wachs J. The System for Telementoring with Augmented Reality (STAR): A Head-Mounted Display to Improve Surgical Coaching and Confidence in Remote Areas. Surgery. 2020. | ||

Telementoring in Leg Fasciotomies via Mixed-Reality: Clinical Evaluation of the STAR Platform. |

||

| Abstract: Introduction: Point-of-Injury (POI) care requires immediate specialized assistance, but delays and expertise lapses can lead to complications. In such scenarios, telementoring can benefit health practitioners by transmitting guidance from remote specialists. However, current telementoring systems are not appropriate for POI care. This paper clinically evaluates our System for Telementoring with Augmented Reality (STAR), a novel telementoring system based on an Augmented Reality Head-Mounted Display. The system is portable, self-contained, and displays virtual surgical guidance onto the operating field. These capabilities can facilitate telementoring in POI scenarios while mitigating limitations of conventional telementoring systems. Methods: Twenty participants performed leg fasciotomies on cadaveric specimens under one of two experimental conditions: telementoring using STAR, or no telementoring but reviewing the procedure beforehand. An expert surgeon evaluated the participants’ performance in terms of completion time, number of errors, and procedure-related scores. Additional metrics included a self-reported confidence score and post-experiment questionnaires. Results: STAR effectively delivered surgical guidance to non-specialist health practitioners: participants using STAR made fewer errors and obtained higher procedure-related scores. Conclusions: This work validates STAR as a viable surgical telementoring platform, which could be further explored to aid in scenarios where life-saving care must be delivered in a prehospital setting. |

|

|

| Rojas-Muñoz E, Cabrera ME, Lin C, Sanchez-Tamayo N, Andersen D, Popescu V, Anderson K, Zarzaur B, Mullis B, Wachs J. Telementoring in Leg Fasciotomies via Mixed-Reality: Clinical Evaluation of the STAR Platform. Military Medicine. 2019; 185, 513-520. | ||

Robust High-Level Video Stabilization for Effective AR Telementoring |

||

| Abstract: This poster presents the design, implementation, and evaluation of a method for robust high-level stabilization of the mentee’s first-person video in augmented reality (AR) telementoring. This video is captured by the front-facing built-in camera of an AR headset and stabilized by rendering, from a stationary view, a planar proxy of the workspace that is projectively texture mapped with the video feed. The result is stable, complete, up to date, continuous, distortion free, and rendered from the mentee’s default viewpoint. The stabilization method was evaluated in two user studies, in the context of number matching and for cricothyroidotomy training, respectively. Both showed a significant advantage of our method compared with unstabilized visualization. |
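The planar-proxy idea in this abstract can be illustrated with a plane-induced homography: for points on a plane, a single 3x3 matrix relates pixels in a stationary view to pixels in the current head-camera frame, so each stationary-view pixel can be textured from the live video. The following is a minimal sketch under stated assumptions, not the authors' implementation. The function names, the pinhole intrinsics `K`, the head pose `(R, t)`, and the plane parameters `(n, d)` are all hypothetical and chosen only for illustration.

```python
import numpy as np

def plane_homography(K, R, t, n, d):
    """Homography taking stationary-view pixels to current-frame pixels.

    Assumed conventions (not from the poster): a point X in the stationary
    view's frame maps to R @ X + t in the current camera frame, and the
    workspace plane satisfies n . X = d in the stationary frame.
    """
    return K @ (R + np.outer(t, n) / d) @ np.linalg.inv(K)

def to_current_pixel(H, uv):
    """Apply the homography to one pixel and dehomogenize."""
    p = H @ np.array([uv[0], uv[1], 1.0])
    return p[:2] / p[2]

def project(K, X):
    """Pinhole projection of a 3D point given in camera coordinates."""
    p = K @ X
    return p[:2] / p[2]

# Hypothetical intrinsics and head pose, for illustration only.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
angle = 0.1  # small head rotation about the vertical axis, in radians
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([0.05, -0.02, 0.10])
n = np.array([0.0, 0.0, 1.0])  # plane normal in the stationary frame
d = 1.0                        # plane offset, so the plane is z = 1

H = plane_homography(K, R, t, n, d)

# A workspace point on the plane, seen from both views.
X = np.array([0.2, -0.1, 1.0])
stationary_px = project(K, X)
current_px = project(K, R @ X + t)

# Looking up the live frame at H * pixel gives the color to draw at that
# stationary-view pixel, which keeps the mentor's image fixed while the
# mentee's head moves.
print(to_current_pixel(H, stationary_px), current_px)
```

Rendering the stabilized image then amounts to evaluating this lookup for every pixel of the stationary view; a real system would also need to estimate the head pose and plane for each frame, which this sketch takes as given.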

|

|

| Lin C, Rojas-Muñoz E, Cabrera ME, Sanchez-Tamayo N, Andersen D, Popescu V, Barragan Noguera JA, Zarzaur B, Murphy P, Anderson K, Douglas T, Griffis C, Wachs J. Robust High-Level Video Stabilization for Effective AR Telementoring. 26th IEEE Conference on Virtual Reality and 3D User Interfaces, Japan. 2019. | ||

Augmented Reality as a Medium for Improved Telementoring |

||

| Abstract: Combat trauma injuries require urgent and specialized care. When patient evacuation is infeasible, critical life-saving care must be given at the point of injury in real-time and under austere conditions associated with forward operating bases. Surgical telementoring allows local generalists to receive remote instruction from specialists thousands of miles away. However, current telementoring systems have limited annotation capabilities and lack direct visualization of the future result of the surgical actions by the specialist. The System for Telementoring with Augmented Reality (STAR) is a surgical telementoring platform that improves the transfer of medical expertise by integrating a full-size interaction table for mentors to create graphical annotations, with augmented reality (AR) devices to display surgical annotations directly onto the generalist’s field of view. Along with the explanation of the system’s features, this paper provides results of user studies that validate STAR as a comprehensive AR surgical telementoring platform. In addition, potential future applications of STAR are discussed, which are desired features that state-of-the-art AR medical telementoring platforms should have when combat trauma scenarios are in the spotlight of such technologies. |

|

|

| Rojas-Muñoz E, Andersen D, Cabrera ME, Popescu V, Marley S, Zarzaur B, Mullis B, Wachs J. Augmented Reality as a Medium for Improved Telementoring. Military Medicine. 2019; 184, 57-64. | ||

Augmented Reality Future Step Visualization for Robust Surgical Telementoring |

||

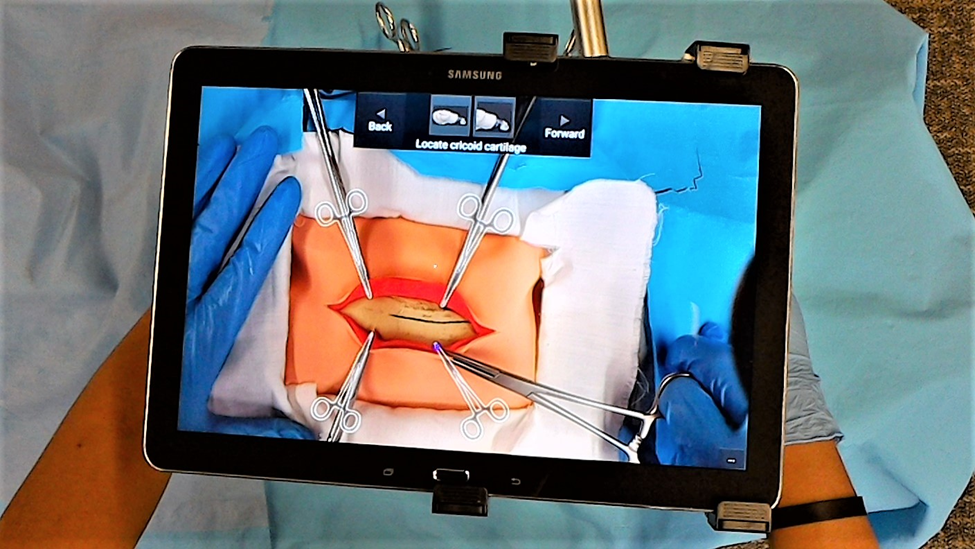

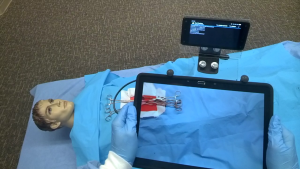

| Abstract: INTRODUCTION: Surgical telementoring connects expert mentors with trainees performing urgent care in austere environments. However, such environments impose unreliable network quality, with significant latency and low bandwidth. We have developed an augmented reality telementoring system that includes future step visualization of the medical procedure. Pregenerated video instructions of the procedure are dynamically overlaid onto the trainee’s view of the operating field when the network connection with a mentor is unreliable. METHODS: Our future step visualization uses a tablet suspended above the patient’s body, through which the trainee views the operating field. Before trainee use, an expert records a “future library” of step-by-step video footage of the operation. Videos are displayed to the trainee as semitransparent graphical overlays. We conducted a study where participants completed a cricothyroidotomy under telementored guidance. Participants used one of two telementoring conditions: conventional telestrator or our system with future step visualization. During the operation, the connection between trainee and mentor was bandwidth throttled. Recorded metrics were idle time ratio, recall error, and task performance. RESULTS: Participants in the future step visualization condition had 48% smaller idle time ratio (14.5% vs. 27.9%, P < 0.001), 26% less recall error (119 vs. 161, P = 0.042), and 10% higher task performance scores (rater 1 = 90.83 vs. 81.88, P = 0.008; rater 2 = 88.54 vs. 79.17, P = 0.042) than participants in the telestrator condition. CONCLUSIONS: Future step visualization in surgical telementoring is an important fallback mechanism when trainee/mentor network connection is poor, and it is a key step towards semiautonomous and then completely mentor-free medical assistance systems. |
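The relative differences quoted in this abstract follow from the paired values it reports. As a quick check, the sketch below recomputes the idle time ratio and recall error reductions; the helper name `relative_reduction` is hypothetical, and the inputs are the figures stated in the abstract.

```python
def relative_reduction(baseline, treatment):
    """Fractional reduction of the treatment value relative to the baseline."""
    return (baseline - treatment) / baseline

# Telestrator condition is the baseline; future step visualization is the treatment.
idle_time = relative_reduction(27.9, 14.5)   # idle time ratio, in percent of task time
recall_error = relative_reduction(161, 119)  # recall error, units as reported

print(f"idle time ratio reduced by {idle_time:.1%}")
print(f"recall error reduced by {recall_error:.1%}")
```

Rounded to whole percentages, both values match the reductions stated in the abstract.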

|

|

| Andersen D, Cabrera ME, Rojas-Muñoz E, Popescu V, Gonzalez G, Mullis B, Marley S, Zarzaur B, Wachs J. Augmented Reality Future Step Visualization for Robust Surgical Telementoring. Simulation in Healthcare. 2019, 59-66. | ||

MAGIC: A fundamental Framework for Gesture Representation, Comparison and Assessment |

||

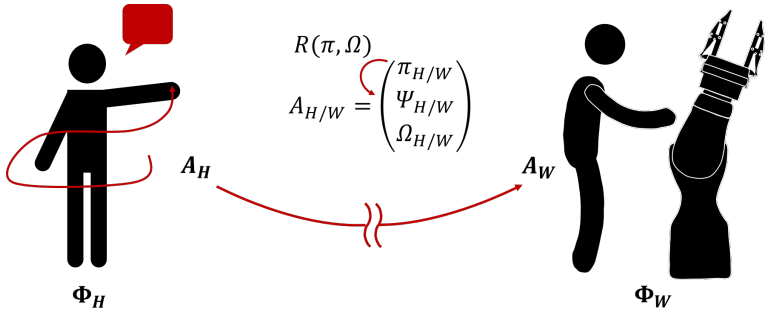

| Abstract: Gestures play a fundamental role in instructional processes between agents. However, effectively transferring this non-verbal information becomes complex when the agents are not physically co-located. Recently, remote collaboration systems that transfer gestural information have been developed. Nonetheless, these systems relegate gestures to an illustrative role: only a representation of the gesture is transmitted. We argue that further comparisons between the gestures can provide information about how well the tasks are being understood and performed. While gesture comparison frameworks exist, they rely only on the gestures’ appearance, leaving semantic and pragmatic aspects aside. This work introduces the Multi-Agent Gestural Instructions Comparer (MAGIC), an architecture that represents and compares gestures at the morphological, semantic and pragmatic levels. MAGIC abstracts gestures via a three-stage pipeline based on a taxonomy classification, a dynamic semantics framework and constituency parsing, and uses a comparison scheme based on subtree intersections to describe gesture similarity. This work shows the feasibility of the framework by assessing MAGIC’s gesture matching accuracy against other gesture comparison frameworks during a mentor-mentee remote collaborative physical task scenario. |  |

|

| Rojas-Muñoz E, Wachs J. MAGIC: A fundamental Framework for Gesture Representation, Comparison and Assessment. 14th IEEE International Conference on Automatic Face and Gesture Recognition. 2019; Lille, France. | ||

AR Guidance for Trauma Surgery in Austere Environments |

||

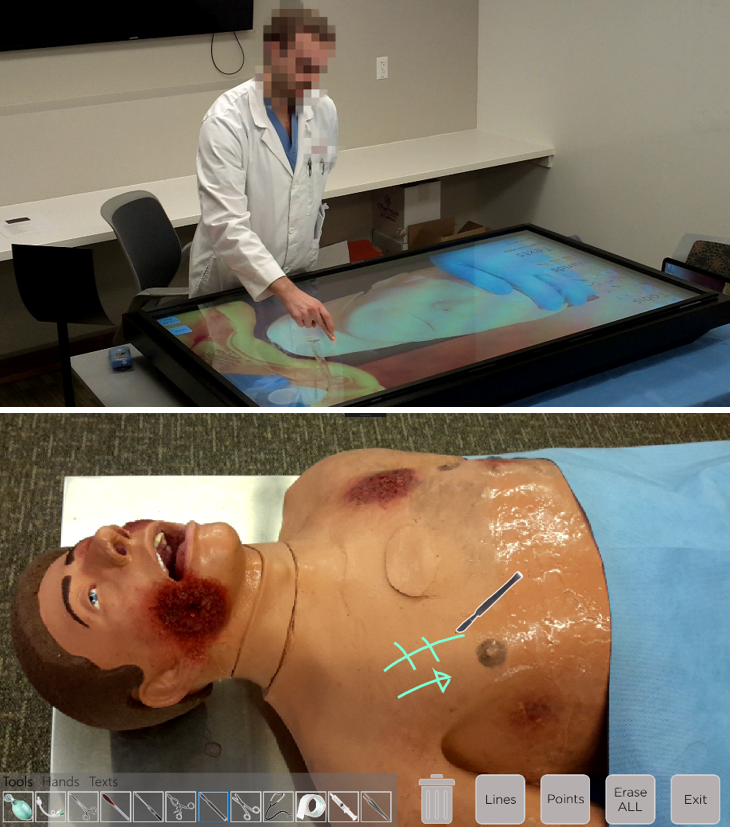

| Abstract: Trauma injuries in austere environments, such as combat zones, developing countries, or rural settings, often require urgent and subspecialty surgical expertise that is not physically present. Surgical telementoring connects local generalist surgeons with remote expert mentors during a procedure to improve patient outcomes. However, traditional telementoring solutions rely on telestrators, which display a mentor’s visual instructions onto a nearby monitor. This increases cognitive load for the local mentee surgeon who must shift focus from the operating field and mentally remap the viewed instructions onto the patient’s body. Our project, STAR (System for Telementoring with Augmented Reality), bridges this gap by taking advantage of augmented reality (AR), which enables in-context superimposed visual annotations directly onto the patient’s body. Our interdisciplinary team, comprised of computer scientists, industrial engineers, trauma surgeons, and nursing educators, has investigated, prototyped, and validated several AR-based telementoring solutions. Our first approach delivers mentor annotations using a conventional tablet, which offers pixel-level alignment with the operating field, while our second approach instead uses an AR HMD, which offers additional portability, surgeon mobility, and stereo rendering. |

|

|

| Andersen D, Rojas-Muñoz E, Lin C, Cabrera ME, Popescu V, Marley S, Anderson K, Zarzaur B, Mullis B, Wachs J. AR Guidance for Trauma Surgery in Austere Environments. EuroVR 2018 (Industrial Track). London, UK. Oct 2018. | ||

A First-person Mentee Second-person Mentor AR Interface for Surgical Telementoring |

||

| Abstract: This application paper presents the work of a multidisciplinary group in designing, implementing, and testing an Augmented Reality (AR) surgical telementoring system. The system acquires the surgical field with an overhead camera. The video feed is transmitted to the remote mentor, where it is displayed on a touch-based interaction table. The mentor annotates the video feed, and the annotations are sent back to the mentee, where they are displayed in the mentee’s field of view using an optical see-through AR head-mounted display (HMD). The annotations are reprojected from the mentor’s second-person view of the surgical field to the mentee’s first-person view. The mentee sees the annotations with depth perception, and the annotations remain anchored to the surgical field as the mentee moves their head. Average annotation display accuracy is 1.22 cm. The system was tested in the context of a user study where surgery residents (n = 20) were asked to perform a lower-leg fasciotomy on cadaver models. Participants who benefited from telementoring using our system received a higher Individual Performance Score, and they reported higher usability and self-confidence levels. |

|

|

| Lin C, Andersen D, Popescu V, Rojas-Muñoz E, Cabrera ME, Mullis B, Zarzaur B, Anderson K, Marley S, Wachs J. A first-person mentee second-person mentor AR interface for surgical telementoring. In Adjunct Proceedings of the IEEE and ACM International Symposium for Mixed and Augmented Reality. 2018. | ||

Augmented Visual Instruction for Surgical Practice and Training |

||

| Abstract: This paper presents two positions about the use of augmented reality (AR) in healthcare scenarios, informed by the authors’ experience as an interdisciplinary team of academics and medical practitioners who have been researching, implementing, and validating an AR surgical telementoring system. First, AR has the potential to greatly improve the areas of surgical telementoring and of medical training on patient simulators. In austere environments, surgical telementoring that connects surgeons with remote experts can be enhanced with the use of AR annotations visualized directly in the surgeon’s field of view. Patient simulators can gain additional value for medical training by overlaying the current and future steps of procedures as AR imagery onto a physical simulator. Second, AR annotations for telementoring and for simulator-based training can be delivered either by video see-through tablet displays or by AR head-mounted displays (HMDs). The paper discusses the two AR approaches by looking at accuracy, depth perception, visualization continuity, visualization latency, and user encumbrance. Specific advantages and disadvantages to each approach mean that the choice of one display method or another must be carefully tailored to the healthcare application in which it is being used. |  |

|

| Andersen D, Lin C, Popescu V, Rojas-Muñoz E, Cabrera ME, Mullis B, Zarzaur B, Marley S, Wachs J. Augmented Visual Instruction for Surgical Practice and Training. VAR4Good 2018 – Virtual and Augmented Reality for Good (workshop paper), Reutlingen, Germany, March 2018. | ||

Surgical Telementoring without Encumbrance: A Comparative Study of See-through Augmented Reality based Approaches |

||

| Abstract: Objective: This study investigates the benefits of a surgical telementoring system based on an augmented reality head-mounted display (ARHMD) that overlays surgical instructions directly onto the surgeon’s view of the operating field, without workspace obstruction. Summary Background Data: In conventional telestrator-based telementoring, the surgeon views annotations of the surgical field by shifting focus to a nearby monitor, which substantially increases cognitive load. As an alternative, tablets have been used between the surgeon and the patient to display instructions; however, tablets additionally obstruct the surgeon’s motions. Methods: Twenty medical students performed anatomical marking (Task1) and abdominal incision (Task2) on a patient simulator, in one of two telementoring conditions: ARHMD and telestrator. The dependent variables were placement error, number of focus shifts, and completion time. Furthermore, workspace efficiency was quantified as the number and duration of potential surgeon/tablet collisions avoided by the ARHMD. Results: The ARHMD condition yielded smaller placement errors (Task1: 45%, P < 0.001; Task2: 14%, P = 0.01), fewer focus shifts (Task1: 93%, P < 0.001; Task2: 88%, P = 0.0039), and longer completion times (Task1: 31%, P < 0.001; Task2: 24%, P = 0.013). Furthermore, the ARHMD avoided potential tablet collisions (4.8 for 3.2 s in Task1; 3.8 for 1.3 s in Task2). Conclusion: The ARHMD system promises to improve accuracy and to eliminate focus shifts in surgical telementoring. Because ARHMD participants were able to refine their execution of instructions, task completion time increased. Unlike a tablet system, the ARHMD does not require modifying natural motions to avoid collisions. |

|

|

| Rojas-Muñoz E, Cabrera ME, Andersen D, Popescu V, Marley S, Mullis B, Zarzaur B, Wachs J. Surgical Telementoring without Encumbrance: A Comparative Study of See-through Augmented Reality based Approaches. Annals of Surgery. 2018; 270(2), 384-389 | ||

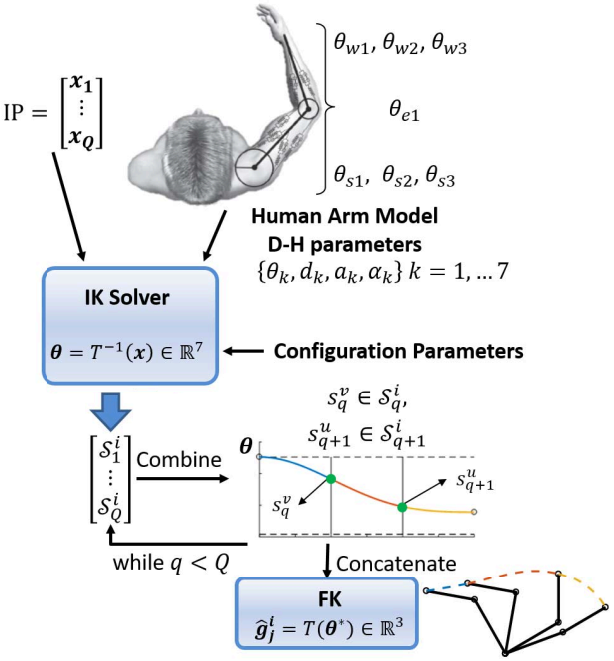

Biomechanical-Based Approach to Data Augmentation for One-Shot Gesture Recognition |

||

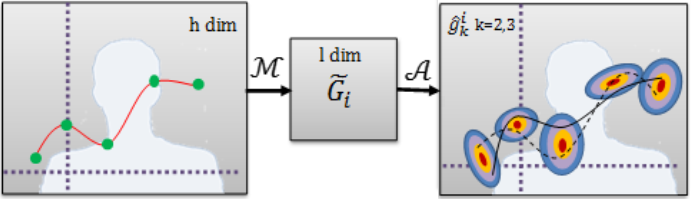

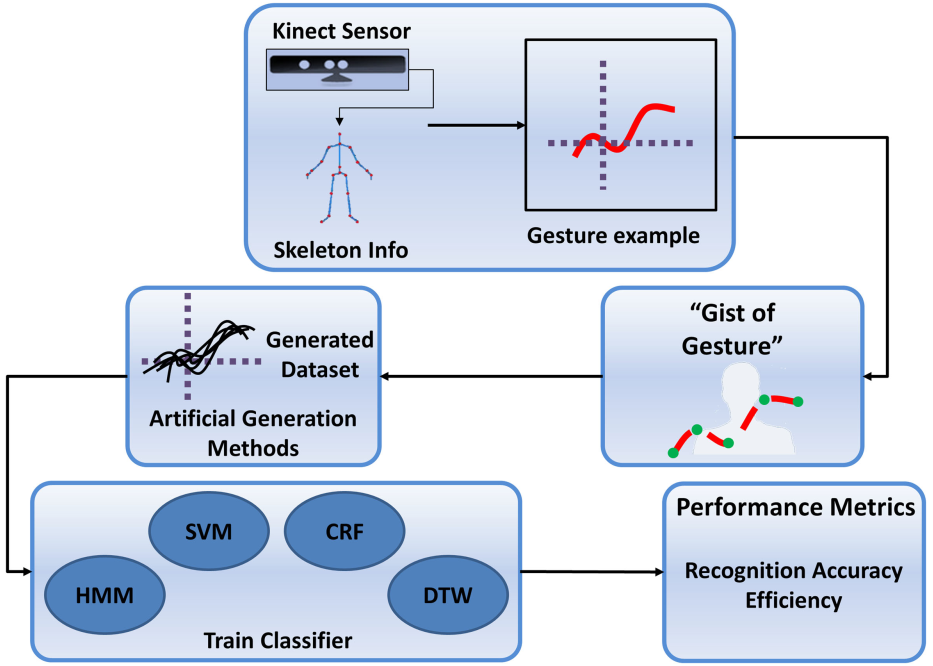

| Abstract: Most common approaches to one-shot gesture recognition have leveraged mainly conventional machine learning solutions and image-based data augmentation techniques, ignoring the mechanisms that are used by humans to perceive and execute gestures, a key contextual component in this process. The novelty of this work consists of modeling the process that leads to the creation of gestures, rather than observing the gesture alone. In this approach, the context considered involves the way in which humans produce the gestures: the kinematic and biomechanical characteristics associated with gesture production and execution. By understanding the main “modes” of variation we can replicate the single observation many times. Consequently, the main strategy proposed in this paper includes generating a data set of human-like examples based on “naturalistic” features extracted from a single gesture sample while preserving fundamentally human characteristics like visual saliency, smooth transitions and economy of motion. The availability of a large data set of realistic samples allows the use of state-of-the-art classifiers for further recognition. Several classifiers were trained and their recognition accuracies were assessed and compared to previous one-shot learning approaches. An average recognition accuracy of 95% among all classifiers highlights the relevance of keeping the human “in the loop” to effectively achieve one-shot gesture recognition. |  |

|

| Cabrera ME, Wachs JP. Biomechanical-Based Approach to Data Augmentation for One-Shot Gesture Recognition. 13th IEEE International Conference on Automatic Face and Gesture Recognition. 2018; Xi’an, China | ||

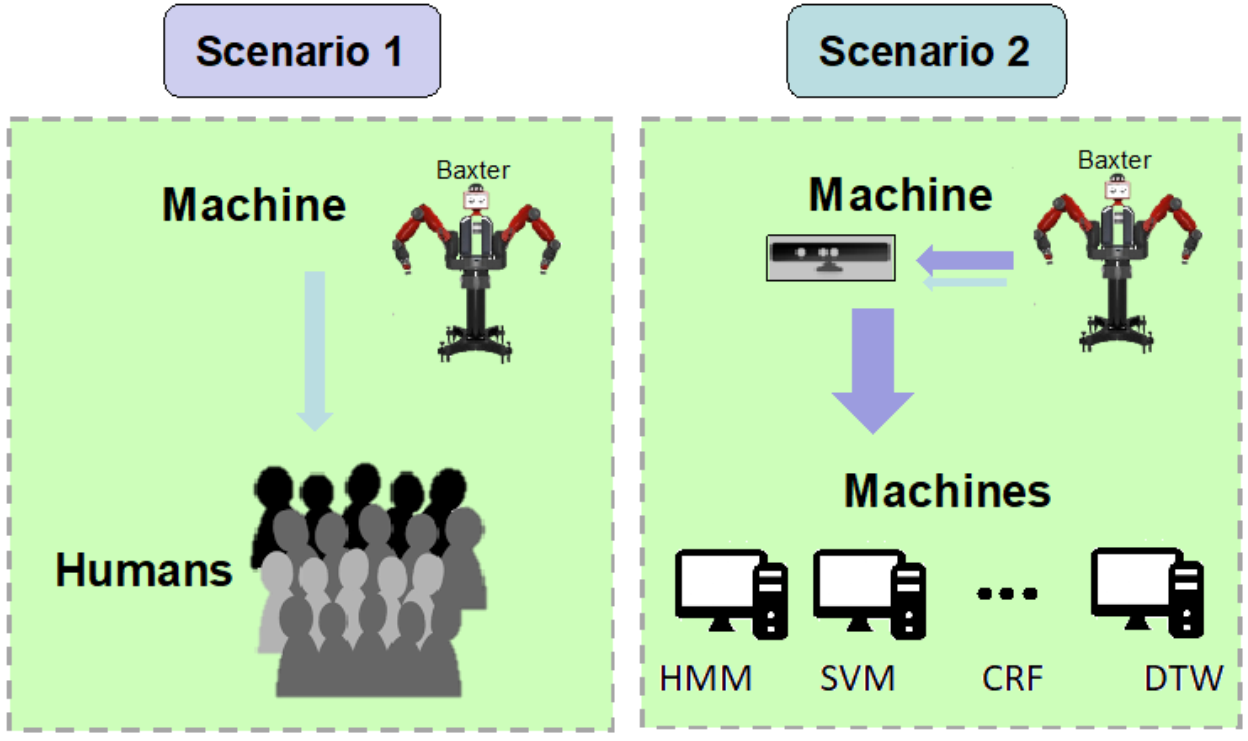

Coherence in One-Shot Gesture Recognition for Human-Robot Interaction |

||

| Abstract: An experiment was conducted in which a robotic platform performed artificially generated gestures, and both trained classifiers and human participants recognized them. Classification accuracy is evaluated through a new metric of coherence in gesture recognition between humans and robots. Experimental results showed an average recognition performance of 89.2% for the trained classifiers and 92.5% for the participants. Coherence in one-shot gesture recognition was determined to be γ = 93.8%. This new metric provides a quantifier for validating how realistic the robot-generated gestures are. |  |

|

| Cabrera ME, Voyles RM, Wachs J. Coherence in One-Shot Gesture Recognition for Human-Robot Interaction. Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction. 2018; Chicago, IL | ||

An Augmented Reality-Based Approach for Surgical Telementoring in Austere Environments |

||

| Abstract: Telementoring can improve treatment of combat trauma injuries by connecting remote experienced surgeons with local less-experienced surgeons in an austere environment. Current surgical telementoring systems force the local surgeon to regularly shift focus away from the operating field to receive expert guidance, which can lead to surgery delays or even errors. The System for Telementoring with Augmented Reality (STAR) integrates expert-created annotations directly into the local surgeon’s field of view. The local surgeon views the operating field by looking at a tablet display suspended between the patient and the surgeon that captures video of the surgical field. The remote surgeon adds graphical annotations to the video. The annotations are sent back and displayed to the local surgeon while being automatically anchored to the operating field elements they describe. A technical evaluation demonstrates that STAR robustly anchors annotations despite tablet repositioning and occlusions. In a user study, participants used either STAR or a conventional telementoring system to precisely mark locations on a surgical simulator under a remote surgeon’s guidance. Participants who used STAR completed the task with fewer focus shifts and with greater accuracy. STAR reduces the local surgeon’s need to shift attention during surgery, allowing him or her to continuously work while looking “through” the tablet screen. |  |

|

| Andersen D, Popescu V, Cabrera ME, Shanghavi A, Mullis B, Marley S, Gomez G, Wachs JP. An Augmented Reality-Based Approach for Surgical Telementoring in Austere Environments. Military Medicine. 2017; 182, 310-315. | ||

What makes a gesture a gesture? Neural signatures involved in gesture recognition |

||

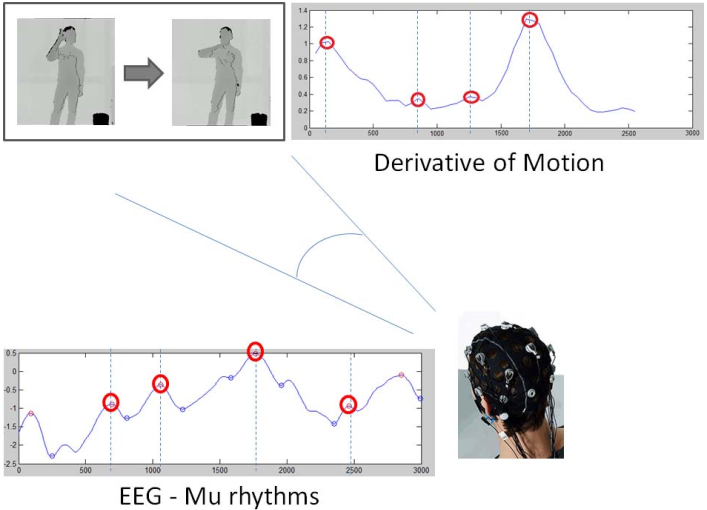

| Abstract: Previous work in the area of gesture production has made the assumption that machines can replicate humanlike gestures by connecting a bounded set of salient points in the motion trajectory. Those inflection points were hypothesized to also display cognitive saliency. The purpose of this paper is to validate that claim using electroencephalography (EEG). That is, this paper attempts to find neural signatures of gestures (also referred to as placeholders) in human cognition, which facilitate the understanding, learning and repetition of gestures. Further, it is discussed whether there is a direct mapping between the placeholders and kinematic salient points in the gesture trajectories. These are expressed as relationships between inflection points in the gesture trajectories and oscillatory mu rhythms (8-12 Hz) in the EEG. This is achieved by correlating fluctuations in mu power during gesture observation with salient motion points found for each gesture. Peaks in the EEG signal at central electrodes (motor cortex; C3/Cz/C4) and occipital electrodes (visual cortex; O3/Oz/O4) were used to isolate the salient events within each gesture. We found that a linear model predicting mu peaks from motion inflections fits the data well. Increases in EEG power were detected 380 and 500 ms after inflection points at occipital and central electrodes, respectively. These results suggest that coordinated activity in visual and motor cortices is sensitive to motion trajectories during gesture observation, and they are consistent with the proposal that inflection points operate as placeholders in gesture recognition. |  |

|

| Cabrera ME, Novak K, Foti D, Voyles R, Wachs J. What makes a gesture a gesture? Neural signatures involved in gesture recognition. 12th IEEE International Conference on Automatic Face & Gesture Recognition. 2017; Washington, DC | ||

One-shot gesture recognition: One step towards adaptive learning |

||

| Abstract: Users’ intentions may be expressed through spontaneous gestures, which have been seen only a few times or never before. Recognizing such gestures involves one-shot gesture learning. While most research has focused on the recognition of the gestures themselves, recently new approaches were proposed to deal with gesture perception and production as part of the recognition problem. The framework presented in this work focuses on learning the process that leads to gesture generation, rather than treating the gestures as the outcomes of a stochastic process only. This is achieved by leveraging kinematic and cognitive aspects of human interaction. These factors enable the artificial production of realistic gesture samples originating from a single observation, which in turn are used as training sets for state-of-the-art classifiers. Classification performance is evaluated in terms of recognition accuracy and coherency, the latter being a novel metric that determines the level of agreement between humans and machines. Specifically, the machines referred to are robots that perform artificially generated examples. Coherency in recognition was determined at 93.8%, corresponding to a recognition accuracy of 89.2% for the classifiers and 92.5% for human participants. A proof of concept was performed towards the expansion of the proposed one-shot learning approach to adaptive learning; the results are presented and their implications discussed. |  |

|

| Cabrera ME, Sanchez-Tamayo N, Voyles R, Wachs J. One-shot gesture recognition: One step towards adaptive learning. 12th IEEE International Conference on Automatic Face & Gesture Recognition. 2017; Washington, DC | ||

A human-centered approach to one-shot gesture learning |

||

| Abstract: This article discusses the problem of one-shot gesture recognition using a human-centered approach and its potential application to fields such as human–robot interaction where the user’s intentions are indicated through spontaneous gesturing (one shot). Casual users have limited time to learn the gesture interface, which makes one-shot recognition an attractive alternative to interface customization. With the aim of natural interaction with machines, a framework must be developed to include the ability of humans to understand gestures from a single observation. Previous approaches to one-shot gesture recognition have relied heavily on statistical and data-mining-based solutions and have ignored the mechanisms that are used by humans to perceive and execute gestures and that can provide valuable context information. This omission has led to suboptimal solutions. The focus of this study is on the process that leads to the realization of a gesture, rather than on the gesture itself. In this case, context involves the way in which humans produce gestures—the kinematic and anthropometric characteristics. In the method presented here, the strategy is to generate a data set of realistic samples based on features extracted from a single gesture sample. These features, called the “gist of a gesture”, are considered to represent what humans remember when seeing a gesture and, later, the cognitive process involved when trying to replicate it. By adding meaningful variability to these features, a large training data set is created while preserving the fundamental structure of the original gesture. The availability of a large data set of realistic samples allows classifiers to be trained for future recognition. The performance of the method is evaluated using different lexicons, and its efficiency is compared with that of traditional N-shot learning approaches. 
The strength of the approach is further illustrated through human and machine recognition of gestures performed by a dual-arm robotic platform. |  |

|

| Cabrera ME, Wachs J. A human-centered approach to one-shot gesture learning. Frontiers in Robotics and AI. 2017; 4, 8 | ||

A Hand-Held, Self-Contained Simulated Transparent Display |

||

| Abstract: Hand-held transparent displays are important infrastructure for augmented reality applications. Truly transparent displays are not yet feasible in hand-held form, and a promising alternative is to simulate transparency by displaying the image the user would see if the display were not there. Previous simulated transparent displays have important limitations, such as being tethered to auxiliary workstations, requiring the user to wear obtrusive head-tracking devices, or lacking the depth acquisition support that is needed for an accurate transparency effect for close-range scenes. We describe a general simulated transparent display and three prototype implementations (P1, P2, and P3), which take advantage of emerging mobile devices and accessories. P1 uses an off-the-shelf smartphone with built-in head-tracking support; P1 is compact and suitable for outdoor scenes, providing an accurate transparency effect for scene distances greater than 6 m. P2 uses a tablet with a built-in depth camera; P2 is compact and suitable for short-distance indoor scenes, but the user has to hold the display in a fixed position. P3 uses a conventional tablet enhanced with on-board depth acquisition and head-tracking accessories; P3 compensates for user head motion and provides accurate transparency even for close-range scenes. The prototypes are hand-held and self-contained, without the need for auxiliary workstations for computation. |  |

|

| Andersen D, Popescu V, Lin C, Cabrera ME, Shanghavi A, Wachs J. A Hand-Held, Self-Contained Simulated Transparent Display. ISMAR’16 – Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (poster), Merida, Mexico, September 2016. | ||

Medical Telementoring Using an Augmented Reality Transparent Display |

||

| Abstract: Background: The goal of this study was to design and implement a novel surgical telementoring system called STAR (System for Telementoring with Augmented Reality) that uses a virtual transparent display to convey precise locations in the operating field to a trainee surgeon. This system was compared to a conventional system based on a telestrator for surgical instruction. Methods: A telementoring system was developed and evaluated in a study which used a 1 x 2 between-subjects design with telementoring system, i.e. STAR or Conventional, as the independent variable. The participants in the study were 20 pre-medical or medical students who had no prior experience with telementoring. Each participant completed a task of port placement and a task of abdominal incision under telementoring using either the STAR or the Conventional system. The metrics used to test performance when using the system were placement error, number of focus shifts, and time to task completion. Results: When compared to the Conventional system, participants using STAR completed the two tasks with less placement error (45% and 68%) and with fewer focus shifts (86% and 44%), but more slowly (19% for each task). Conclusions: Using STAR resulted in decreased annotation placement error and fewer focus shifts, but greater times to task completion. STAR placed virtual annotations directly onto the trainee surgeon’s field of view of the operating field by conveying location with great accuracy; this technology helped to avoid shifts in focus, decreased depth perception, and enabled fine-tuning execution of the task to match telementored instruction, but led to greater times to task completion. |

|

|

| Andersen D, Popescu V, Cabrera M, Shanghavi A, Gomez G, Marley S, Mullis B, Wachs J. Medical Telementoring Using an Augmented Reality Transparent Display. Surgery. 2016; 159(6), 1646 – 1653. | ||

Avoiding Focus Shifts in Surgical Telementoring Using an Augmented Reality Transparent Display |

||

| Abstract: Conventional surgical telementoring systems require the trainee to shift focus away from the operating field to a nearby monitor to receive mentor guidance. This paper presents the next generation of telementoring systems. Our system, STAR (System for Telementoring with Augmented Reality), avoids focus shifts by placing mentor annotations directly into the trainee’s field of view using augmented reality transparent display technology. This prototype was tested with pre-medical and medical students. Experiments were conducted where participants were asked to identify precise operating field locations communicated to them using either STAR or a conventional telementoring system. STAR was shown to improve accuracy and to reduce focus shifts. The initial STAR prototype only provides an approximate transparent display effect, without visual continuity between the display and the surrounding area. The current version of our transparent display provides visual continuity by showing the geometry and color of the operating field from the trainee’s viewpoint. |  |

|

| Andersen, D.; Popescu, V.; Cabrera, M.; Shanghavi, A.; Gomez, G.; Marley, S.; Mullis, B. & Wachs, J. Avoiding Focus Shifts in Surgical Telementoring Using an Augmented Reality Transparent Display. NextMed/MMVR22 Proceedings, 2016; 22, 9-14. | ||

Virtual annotations of the surgical field through an augmented reality transparent display |

||

| Abstract: Existing telestrator-based surgical telementoring systems require a trainee surgeon to shift focus frequently between the operating field and a nearby monitor to acquire and apply instructions from a remote mentor. We present a novel approach to surgical telementoring where annotations are superimposed directly onto the surgical field using an augmented reality (AR) simulated transparent display. We present our first steps towards realizing this vision, using two networked conventional tablets to allow a mentor to remotely annotate the operating field as seen by a trainee. Annotations are anchored to the surgical field as the trainee tablet moves and as the surgical field deforms or becomes occluded. The system is built exclusively from compact commodity-level components: all imaging and processing are performed on the two tablets. |  |

|

| Andersen, D.; Popescu, V.; Cabrera, M.; Shanghavi, A.; Gomez, G.; Marley, S.; Mullis, B. & Wachs, J. Virtual annotations of the surgical field through an augmented reality transparent display. The Visual Computer, Springer Berlin Heidelberg, 2015, 1-18. | Video | |

An augmented reality approach to surgical telementoring |

||

| Abstract: Optimal surgery and trauma treatment integrates different surgical skills frequently unavailable in rural/field hospitals. Telementoring can provide the missing expertise, but current systems require the trainee to focus on a nearby telestrator, fail to illustrate coming surgical steps, and give the mentor an incomplete picture of the ongoing surgery. A new telementoring system is presented that utilizes augmented reality to enhance the sense of co-presence. The system allows a mentor to add annotations to be displayed for a mentee during surgery. The annotations are displayed on a tablet held between the mentee and the surgical site as a heads-up display. As the tablet moves, the system uses computer vision algorithms to track and align the annotations with the surgical region. Tracking is achieved through feature matching. To assess its performance, comparisons are made between SURF and SIFT detectors, brute-force and FLANN matchers, and Hessian blob thresholds. The results show that the combination of a FLANN matcher and a SURF detector with a Hessian threshold of 1500 can optimize this system across scenarios of tablet movement and occlusion. |  |

|

| Loescher T, Shih Yu Lee, Wachs JP. An augmented reality approach to surgical telementoring. 2014 IEEE International Conference on Systems, Man and Cybernetics (SMC). 2014; 2341-2346. | ||