Master theorem

Overview

In this assignment, you will practice using recurrence relations and the Master theorem to analyze the complexity of divide-and-conquer algorithms. You will be applying this method to algorithms we have not covered in class.

Full instructions are given below. In short, we will give a list of algorithms, many of which you may not have seen before. You will read descriptions of the algorithms and find one that fits each of the 3 (main) cases of the Master theorem.

No programming is required for HW04. (More details are below.) All instructions for this assignment are in this web page. You will complete the assignment in this PDF form.

Goals

- Practice analyzing divide-and-conquer algorithms using recurrence relations, recurrence trees, and the Master theorem..

- Practice reading pseudocode for unfamiliar algorithms.

- Get exposed to more algorithms, beyond what we cover in-depth in the class.

- Develop your intuition for thinking about and analyzing divide-and-conquer algorithms.

You will submit via Gradescope. (That will be opened up 48 hours prior to the deadline.)

Background

A recurrence relation is a mathematical function that is expressed in terms of itself, and defines a sequence in terms of the preceding elements in that same sequence, typically with one or more initial cases. Recurrence relations are not inherently tied to algorithms.

Examples:

| Factorial | Factorial(0) = 1 |

| Factorial(n) = n * Factorial(n - 1) , for n ≥ 1 | |

| Fibonacci | Fibonacci(0) = 0 |

| Fibonacci(1) = 1 | |

| Fibonacci(n) = Fibonacci(n - 1) + Fibonacci(n - 2) , for n ≥ 2 |

See Wikipedia: recurrence relations for more examples and a more thorough explanation of recurrence relations, in general.

Recurrence relations for recursive algorithms

We talk about recurrence relations in the context of algorithms because they are useful for characterizing the running time (and other characteristics) of divide-and-conquer algorithms. Since they are typically expressed as recursive functions, we can create a recurrence relation simply by looking at the work done by each function call.

The pseudocode below is a general outline that can be applied to a wide variety of divide-and-conquer algorithms.

Credit: Wikipedia-CC-BY-SA-4.0

The recurrence relation for the work done by procedure p is \(T(n) = aT(\frac{n}{b}) + f(n)\).

- $a$ is the number of subproblems created by each call to procedure p.

- $b$ is the factor by which the input is divided before each successive recursive call.

- We can treat $\frac{n}{b}$ as interchangeable with $\lceil\frac{n}{b}\rceil$ or $\lfloor\frac{n}{b}\rfloor$.

- $f(n)$ is the total amount of work required to create the subproblems and combine the results. Most of the time, we don't need an explicit expression for $f(n)$, since we will usually just refer to its complexity (i.e, $\mathcal{O}(f{\small (}n{\small )})$, $\Omega(f{\small (}n{\small )})$, or $\Theta(f{\small (}n{\small )})$).

Master theorem

The Master theorem provides a shortcut method of finding the asymptotic complexity of recurrence relations fitting the form above (\(T(n) = aT(\frac{n}{b}) + f(n)\)).

| $T(n)$ is $\Theta(n^{\log_b a})$ | if $f(n)$ is $\mathcal{O}(n^d)$ | and $d < \log_b a$. |

| $T(n)$ is $\Theta(n^{\log_b a} \log n)$ | if $f(n)$ is $\Theta(n^d)$ | and $d = \log_b a$. |

| $T(n)$ is $\Theta(f{\small(}n{\small)})$ | if $f(n)$ is $\Omega(n^d)$ | and $d > \log_b a$. |

Requirements for applying Master theorem

- $a$, $b$, and $d$ are constants.

- $a \ge 1$

- $b \gt 1$

- $d \ge 0$

Instructions

Below is a list of algorithms which are divide-and-conquer or can be interpreted as such.

For each of the three cases of the Master theorem, find one algorithm from the list that fits that case.

You can learn about the algorithm from the link provided or any other resource. You do not need to learn the algorithm well enough to code it.

Algorithms

- stooge sort

- quickselect (random pivot) – same as HW03 but choose random element as pivot to ensure average-case even splits

- k-way mergesort

- matrix multiplication » Strassen's algorithm

- Fast Fourier Transform (FFT) » Cooley-Tukey

- Towers of Hanoi

- Karatsuba multiplication

- Search an m'ary tree

- Quicksort tree

- GCD, Euclid's algorithm

More algorithms, contributed by students

- Toom Cook Multiplication Credit: JD

- Bently Ottman Algorithm Credit: CN

- Median of Medians Credit: YA

- Quickhull Credit: DS

- All Nearest Smaller Values Credit: RR

- Kasai's Algorithm for longest common prefix (LCP) in am array or stringCredit: BK

- Counting bits Credit: SC

- Tournament Tree Credit: RP

- Minimum subsequence sum Credit: JM

- Skyline Credit: NA

- Convex hull – Chan's algorithm Credit: AG

- Ancient Egyption Multiplication Credit: JC

- Closest Pair of Points Credit: HC

- Cascade (pairwise) summation Credit: SS

- Tim Sort Credit: RL

- Hilbert Curve Construction Credit: CS

- Saddleback Search on 2D Array Credit: BM

- Color quantization » Medium Cut algorithm Credit: KC

- All Nearest Smallest Values Credit: RR

- Graham Scan Credit: RB

- Barnes Hut Credit: MM

- Credit: AW

- Wavelet tree construction Credit: YY

- Binary exponentiation Credit: SN

- Counting Inversions Credit: PK

- Fast Inverse Square Root Credit: RL

- Convex hull » Kirkpatrick-Seidel Credit: HA

- Viterbi Credit:

- Max subarray sum / maximal subsequence Credit: MC

- Traveling salesman heuristic Credit: AC

- Voronoi diagram construction Credit: HH

- Credit:

You may suggest other algorithms to add to this list. See Participation Points below.

Acceptable use of GenAI (i.e., ChatGPT) and other resources

You may use the web, GenAI (i.e., ChatGPT, etc.) or any other resource to learn how these algorithms work.

You may ask ChatGPT questions like. In fact, you are encouraged to do so.

- ✔ "How does the ▒▒▒ algorithm work?"

- ✔ "How is the input data divided into subproblems at each step of the ▒▒▒ algorithm?"

Do NOT ask questions that lead to answers that could directly be used in HW04.

- ✘ “Provide a recurrence relation for the ▒▒▒ in a form suitable for the Master theorem.”

- ✘ “Use the Master theorem to derive the complexity for the ▒▒▒ algorithm.”

- ✘ “In the recurrence relation for the ▒▒▒ algorithm, why is the value of a = 5?”

✔ You may check that your final answer (running time complexity) is correct using any source you like, but you should make an effort to derive it yourself, if possible.

Questions and discussion about this on Piazza are encouraged.

⚠ If we find inconsistencies between your drawings, numeric answers, and textual answers that make us question whether you understood what you wrote, we may ask you to come and explain in-person, as a condition of receiving credit for the assignment.

⚠ In any case, we will expect you to have these skills after completing this assignment. Exam 2 (Fall 2023) could contain questions asking you to do the same for other algorithms, or could ask you about the analysis of these particular ones (in a way that lets you choose the one(s) you did for HW04).

Submission

You may fill out the form on your computer, or handwrite the answers. If you fill it out on your computer, be sure to save often.

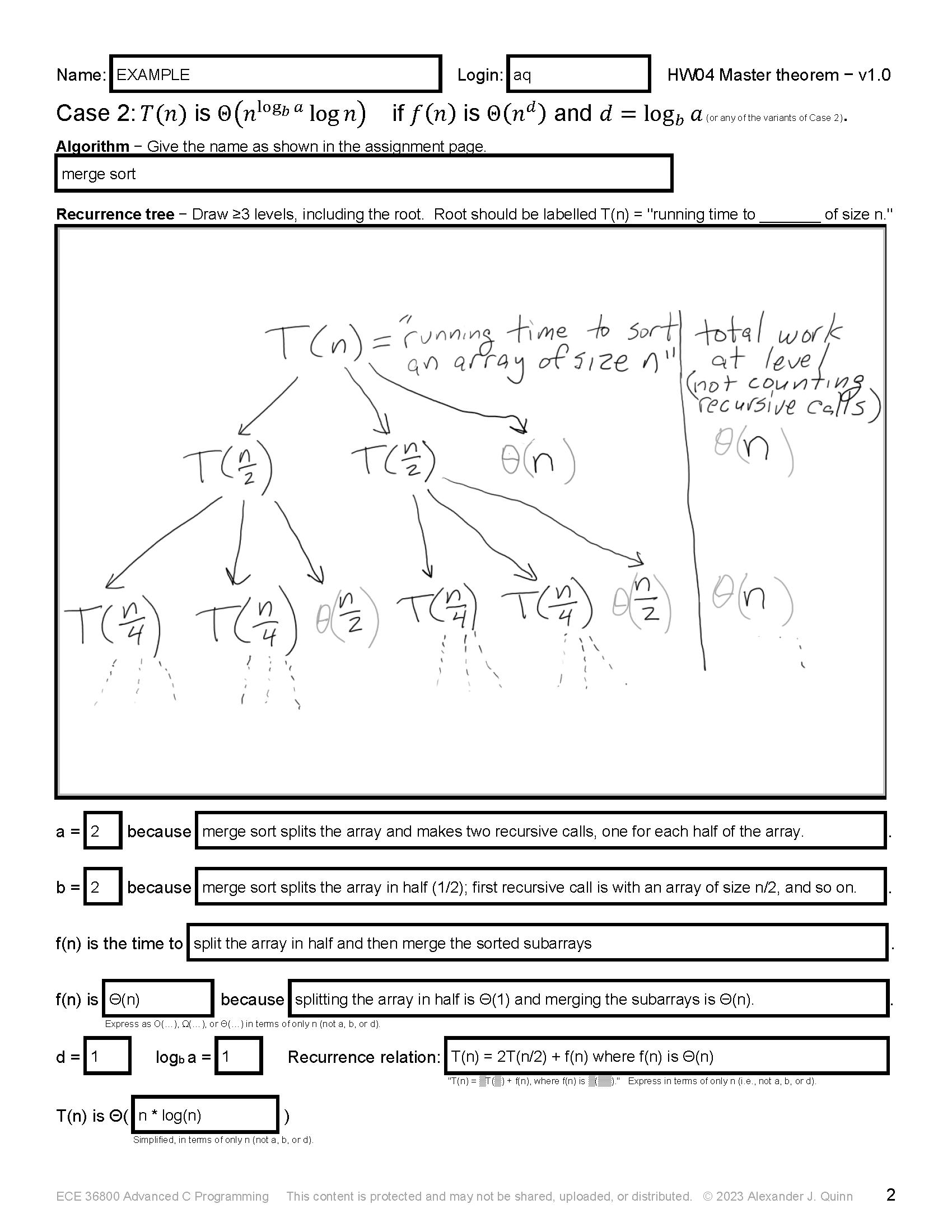

You should be able to add an image for the recurrence tree. It should be drawn by you, including a node labelled "T(n)" for the root. Each T(▒) should have one child node for each recursive call, plus one node for the amount of other work done at that node. On the right side, give a total for the amount of work (not counting the recursive calls) done at that level.

Your recurrence tree should include ≥3 levels (i.e., root + two levels of recursive calls).

Submit via Gradescope as "HW04". (This will be enabled at least 48 hours before the deadline.)

This assignment requires no programming. You do not need to use eceprog (or ececomp).

Q&A

-

What is the recurrence tree supposed to look like?

As stated in the instructions (above and on the form itself):- “Root should be labelled T(n) = ‘running time to ____ of size n’.” Ex: For merge sort, the root would be labelled, ❝T(n) = ‘running time to sort an array of size n’.❞

- “Each T(n) should have one child for each recursive call…”

Ex: For merge sort, the root (T(n)) has two recursive calls, T(n/2) and T(n/2). - “… plus one node for the amount of other work done at that node.”

Ex: For merge sort, the root node will have three children: two for the recursive calls plus one for the other work done at that node. The other work refers to the time to split the array and then merge the sorted arrays (after the recursive calls). In the case of the root, that other work is Θ(n). Thus, the three children of the root will be T(n), T(n), and Θ(n). - “On the right side, give a total for the amount of work (not counting the recursive calls) done at that level.”

Ex: For merge sort, the right column will say Θ(n) on the second and third levels. This is just the total at that level, excluding the recursive calls.

Updates

Corrections may be noted here.