Tracking Humans with a Wired Camera Network

For a machine to interact with humans easily and efficiently, it must be able to detect them and recognize their activities in the environment. Existing methods for detecting and analyzing human motion often require special markers attached to the subjects, which prevents widespread application of the technology. The goal of this project is to develop a system that can track articulated human motion using multiple cameras without relying on markers or specially colored clothing.

As a stepping stone in this research, we have developed a system that tracks multiple humans in real time using a network of 12 cameras. The 12 cameras independently acquire images and detect a person in the environment. To simplify the human detection process, we have attached two tracking lights on the A-eye that can easily be detected in the images. Each camera that detects a human in its image sends the server a packet containing the coordinates of the human in the local image coordinate frame, the camera ID, and the image capture time. The server then computes the current location of the human by integrating the information received from the different cameras. A movie clip that shows this system is available below.

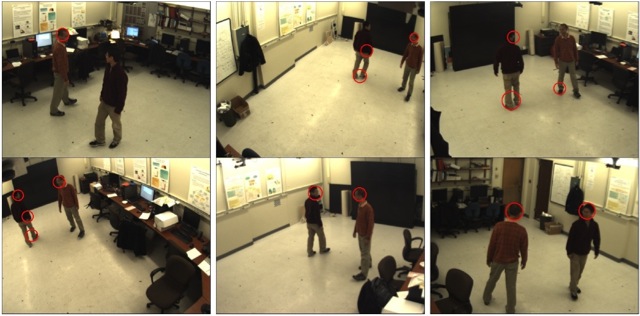
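The per-camera packet described above (image coordinates, camera ID, capture time) might be represented as in the sketch below. This is only an illustration under stated assumptions: the `Detection` class, the field widths and byte order in `PACKET_FORMAT`, and the `group_by_time` helper with its tolerance are all hypothetical and are not taken from the actual system. The sketch only shows how a server could collect packets that were captured at roughly the same time before integrating them.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire layout (an assumption, not the system's real protocol):
# camera ID (uint16), capture time in seconds (float64),
# image x and y in pixels (float32 each), network byte order.
PACKET_FORMAT = "!Hdff"


@dataclass(frozen=True)
class Detection:
    camera_id: int
    capture_time: float
    x: float  # column in the camera's local image frame
    y: float  # row in the camera's local image frame

    def pack(self) -> bytes:
        """Serialize the detection into a fixed-size packet."""
        return struct.pack(
            PACKET_FORMAT, self.camera_id, self.capture_time, self.x, self.y
        )

    @classmethod
    def unpack(cls, payload: bytes) -> "Detection":
        """Rebuild a detection from a packet produced by pack()."""
        return cls(*struct.unpack(PACKET_FORMAT, payload))


def group_by_time(detections, tolerance=0.05):
    """Group detections captured within `tolerance` seconds of the first
    detection in each group, so the server can integrate them together."""
    groups = []
    for det in sorted(detections, key=lambda d: d.capture_time):
        if groups and det.capture_time - groups[-1][0].capture_time <= tolerance:
            groups[-1].append(det)
        else:
            groups.append([det])
    return groups
```

Grouping by capture time rather than arrival time is a design choice in this sketch: it relies on the capture time carried in each packet, so network delay between a camera and the server does not affect which detections are combined.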

More recently, we have improved the system to track multiple people with no tracking markers. The system extracts the contours of moving objects in the scene and detects a person's head by analyzing the shape of each contour. Figure 2 shows some examples of the head detection algorithm, where the red circles represent the candidate head regions detected by the system. The false candidates are eliminated by integrating the data from multiple cameras. Combining this technology with our human posture classification algorithm, we put together a system that can monitor basic human activities in the environment. We have three movie clips below that show some parts of this system.

Figure 2. Six of the 12 camera views in the system. Red circles represent the candidate head regions detected by each camera. The false candidates are eliminated by integrating the data from multiple cameras.
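One simple way to picture how integrating data from multiple cameras can remove false head candidates is a voting scheme, sketched below. This is not the evidence accumulation framework used in the system. It is a swapped-in illustration that assumes each candidate has already been mapped from image coordinates into a shared world frame (which would require camera calibration), and the `filter_candidates` function, the grid cell size, and the `min_cameras` threshold are all hypothetical.

```python
from collections import defaultdict


def filter_candidates(candidates, cell_size=0.5, min_cameras=2):
    """Keep only locations supported by several distinct cameras.

    candidates: iterable of (camera_id, x, y) tuples, with x and y in
    metres in a shared world frame (an assumption of this sketch).
    Returns the sorted centres of the grid cells that received candidates
    from at least `min_cameras` different cameras.
    """
    support = defaultdict(set)
    for camera_id, x, y in candidates:
        cell = (int(x // cell_size), int(y // cell_size))
        support[cell].add(camera_id)  # a set counts each camera only once
    return sorted(
        ((cx + 0.5) * cell_size, (cy + 0.5) * cell_size)
        for (cx, cy), cameras in support.items()
        if len(cameras) >= min_cameras
    )
```

A candidate seen by only one camera, however often, is discarded here. A fixed grid can split one true head across neighbouring cells, so a real system would need something more robust than this binning.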

14 networked cameras tracking a person. When the system detects that the person has not moved for a while, the system asks the person, "You seem to be lost. If you want to know which zone you're in, please raise your right hand". The person raises the right hand. The system recognizes that the person's right hand is raised, so it replies to the person by saying, "I can help you with that. You're in Zone A4".

14 networked cameras tracking a person. When the system detects the person falling down on the floor, the system asks the person, "Are you OK? If you're OK, please sit up". The system detects that the person is still lying down, so it responds by saying, "Medical assistance is on its way. Alert! Medical assistance needed in Zone A2".
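The two interactions described in these clips can be summarized as a small state machine. The sketch below is only our illustration of that dialogue logic: the state names, the observation labels, and the `respond` function are hypothetical, the posture classifier that would produce the observations is not shown, and the silent transition for a person who sits up is an assumption. The spoken messages are the ones quoted above.

```python
# (state, observation) -> (next_state, message template or None)
# State and observation names are assumptions made for this sketch.
TRANSITIONS = {
    ("idle", "stationary"): (
        "offered_help",
        "You seem to be lost. If you want to know which zone you're in, "
        "please raise your right hand.",
    ),
    ("offered_help", "right_hand_raised"): (
        "idle",
        "I can help you with that. You're in Zone {zone}.",
    ),
    ("idle", "fallen"): (
        "asked_ok",
        "Are you OK? If you're OK, please sit up.",
    ),
    ("asked_ok", "lying_down"): (
        "idle",
        "Medical assistance is on its way. Alert! "
        "Medical assistance needed in Zone {zone}.",
    ),
    # Assumed behaviour: a person who sits up needs no further response.
    ("asked_ok", "sitting_up"): ("idle", None),
}


def respond(state, observation, zone):
    """Return (next_state, utterance); utterance is None when silent.

    Unknown (state, observation) pairs leave the state unchanged.
    """
    next_state, template = TRANSITIONS.get((state, observation), (state, None))
    utterance = template.format(zone=zone) if template else None
    return next_state, utterance
```

For example, calling `respond("idle", "fallen", "A2")` and then passing the returned state back in with the observation `"lying_down"` produces the medical alert for Zone A2.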

• Akio Kosaka

• Johnny Park

• Gaurav Srivastava

• Hidekazu Iwaki

Hidekazu Iwaki, Gaurav Srivastava, Akio Kosaka, Johnny Park, and Avinash Kak, “A Novel Evidence Accumulation Framework for Robust Multi Camera Person Detection,” ACM/IEEE International Conference on Distributed Smart Cameras, 2008. (accepted)

Ruth Devlaeminck, "Human Motion Tracking With Multiple Cameras Using a Probabilistic Framework for Posture Estimation," Master's Thesis, School of Electrical and Computer Engineering, Purdue University, August 2006.