A Vision-based Robot Navigation Architecture using Fuzzy Inference for Uncertainty-Reasoning

FUZZY-NAV is another robust vision-based control architecture for indoor mobile-robot navigation. On the one hand, this architecture takes advantage of the high throughput of neural networks for processing camera images. On the other, it employs fuzzy logic to deal with the uncertainty in the inferences drawn from the vision data. Note that the architecture we present in this paper allows our robot to navigate and avoid obstacles, both static and dynamic, at the same time. As indicated in the previous section, the FINALE system is heavily geometrical, in the sense that it requires that a 3D model of the hallways be known.

Our next architecture, FUZZY-NAV, is more human-friendly, in the sense that it uses topological models of hallways. From the topological models of space, the path planner in FUZZY-NAV outputs a sequence of navigation commands like [straight to the second T-junction, turn right, straight to the third door on the left]. In executing these commands, the robot invokes an ensemble of neural networks for steering control. With the same hardware as for FINALE, the FUZZY-NAV system is able to generate a steering command every three seconds.

• A topological model of the environment for a human-friendly system.

• Neural networks for vision-based motion commands.

• Fuzzy logic for high-level reasoning over motion commands.

Architecture of FUZZY-NAV

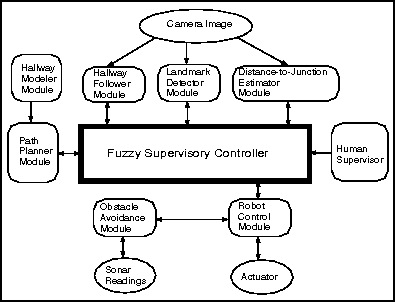

As depicted in the following figure, the heart of the FUZZY-NAV system is the Fuzzy Supervisory Controller connected to a human supervisor and several peripheral modules.

Figure: Architecture of FUZZY-NAV.

Hallway Follower (Neural Networks)

• Help the robot stay in the middle of the hallway.

• Provide an estimate of how far the robot's orientation has deviated, in terms of steering commands such as left-40, left-30, ..., right-40.

Distance-to-Junction Estimator (Neural Networks)

• Provide an estimate of the distance from the robot to the junction as one of: far, near, close.

Fuzzy Supervisory Controller (Fuzzy Logic)

• Perform high-level reasoning over the outputs from the neural networks using fuzzy rules.

• Generate actual motion commands (rotation and translation) within three seconds, even though they are derived from visual observations.

The Hallway Follower consists of two competing neural networks that help the robot stay in the middle of the hallway when no obstacles are present. Different regions of the Hough space of the camera image are fed into the input nodes of these neural networks. The two neural networks together have ten output nodes corresponding to the following steering commands:

left-40, left-30, left-20, left-10, go-straight, right-10, right-20, right-30, right-40, and no-decision.
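As a minimal sketch of how these ten output nodes could be turned into a steering command, the snippet below picks the most active node and maps its label to a signed angle. The winner-take-all selection, the sign convention (left negative), and the function names are assumptions made for illustration; the text does not say how the two competing networks are actually arbitrated.

```python
# Output-node labels in the order listed above.
STEERING_LABELS = [
    "left-40", "left-30", "left-20", "left-10", "go-straight",
    "right-10", "right-20", "right-30", "right-40", "no-decision",
]


def label_to_angle(label):
    """Return signed degrees (left negative, an assumed convention).

    Returns None for "no-decision".
    """
    if label == "no-decision":
        return None
    if label == "go-straight":
        return 0
    side, magnitude = label.split("-")
    return -int(magnitude) if side == "left" else int(magnitude)


def decode_steering(activations):
    """Pick the most active output node (winner-take-all, assumed)."""
    if len(activations) != len(STEERING_LABELS):
        raise ValueError("expected one activation per output node")
    best = max(range(len(activations)), key=lambda i: activations[i])
    label = STEERING_LABELS[best]
    return label, label_to_angle(label)
```

Returning None for no-decision keeps "the network could not decide" distinct from "steer by zero degrees", so a downstream controller can treat the two cases differently.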

The Distance-to-Junction Estimator gives the robot a sense of how far it is to a hallway junction. Certain regions of the Hough space representation of the camera image correspond to those straight lines in a hallway that are horizontal and perpendicular to the hallway in which the robot is traveling. By feeding these regions of the Hough space into an appropriately trained neural network, an estimate of the distance to the end of the hallway can be constructed. The output nodes of this network are far, near, close, and no-decision.

The Obstacle Avoidance Module consists of a semi-ring of ultrasonic sensors. Through these sensors, the module can estimate both the direction of and the distance to an obstacle. When the distance to the obstacle falls below a certain preset threshold, the Obstacle Avoidance Module interrupts the CPU and takes over the Robot Control Module. As long as an obstacle can be detected by any of the sonar sensors, the Robot Control Module remains completely under the control of the Obstacle Avoidance Module. When no obstacles are detected, control reverts to the Fuzzy Supervisory Controller.
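The hand-over rule described above has a small amount of hysteresis: the Obstacle Avoidance Module takes control only below the threshold, but gives it back only when no sonar detects anything. A minimal sketch of that arbitration follows; the function name, the use of None for "no echo", and the 2.0 threshold are hypothetical, since the text gives neither the threshold value nor the sensor interface.

```python
OBSTACLE = "obstacle-avoidance"
SUPERVISOR = "fuzzy-supervisory-controller"


def select_controller(sonar_ranges, avoiding_now, threshold=2.0):
    """Decide which module drives the Robot Control Module.

    sonar_ranges: one reading per sonar, None where nothing is detected.
    avoiding_now: True if obstacle avoidance already holds control.
    threshold:    hypothetical preset distance (same units as readings).
    """
    detections = [r for r in sonar_ranges if r is not None]
    if not detections:
        # No sonar sees an obstacle: control reverts to the supervisor.
        return SUPERVISOR
    if avoiding_now:
        # Already avoiding: keep control while anything is detected.
        return OBSTACLE
    # Not yet avoiding: take over only inside the preset threshold.
    return OBSTACLE if min(detections) < threshold else SUPERVISOR
```

A caller would feed the previous result back in as avoiding_now on each sonar cycle, for example `avoiding_now = (select_controller(ranges, avoiding_now) == OBSTACLE)`.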

The Landmark Detector contains neural networks, each specialized for a specific recognition task. One of the main neural networks is for recognizing door frames of closed doors from a certain range of perspectives. This module, obviously of critical importance if our robot is to succeed in simultaneous navigation and object finding, is still undergoing development and refinement in our laboratory.

The Path Planner uses a topological model of the hallways, together with the desired destination supplied by the human, to generate a sequence of navigational commands. For example, the path planner will issue the following commands to the Fuzzy Supervisory Controller: follow-corridor, stop-at-junction, turn-right-at-junction, follow-corridor, stop-at-deadend.
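To make the idea concrete, here is a minimal sketch of planning over a topological map, assuming the map is a graph whose nodes are junctions and dead ends and whose edges are labeled with compass headings. The map contents, the breadth-first search, and the command turn-left-at-junction (added by analogy with turn-right-at-junction) are all assumptions for illustration, not the planner described in the source.

```python
from collections import deque

# Hypothetical hallway map: node -> {compass heading: neighbouring node}.
HALLWAYS = {
    "lab": {"N": "T-junction"},
    "T-junction": {"S": "lab", "E": "east-end", "W": "office"},
    "east-end": {"W": "T-junction"},
    "office": {"E": "T-junction"},
}
# Hypothetical node kinds, used to name the stop command.
KINDS = {"lab": "deadend", "T-junction": "junction",
         "east-end": "deadend", "office": "deadend"}

HEADINGS = ["N", "E", "S", "W"]  # clockwise order
TURNS = {1: "turn-right-at-junction", 3: "turn-left-at-junction"}


def shortest_route(graph, start, goal):
    """Breadth-first search; returns [(heading, node), ...] hops."""
    came_from = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for heading, nxt in graph[node].items():
            if nxt not in came_from:
                came_from[nxt] = (node, heading)
                queue.append(nxt)
    if goal not in came_from:
        raise ValueError("goal is not reachable from start")
    hops, node = [], goal
    while came_from[node] is not None:
        prev, heading = came_from[node]
        hops.append((heading, node))
        node = prev
    hops.reverse()
    return hops


def plan_commands(graph, kinds, start, goal):
    """Translate a route into a list of navigation commands."""
    hops = shortest_route(graph, start, goal)
    commands = []
    for i, (heading, node) in enumerate(hops):
        if i > 0:
            prev_heading = hops[i - 1][0]
            delta = (HEADINGS.index(heading)
                     - HEADINGS.index(prev_heading)) % 4
            # Going straight through a junction emits no turn command.
            if delta in TURNS:
                commands.append(TURNS[delta])
        commands.append("follow-corridor")
        commands.append("stop-at-" + kinds[node])
    return commands
```

With this toy map, `plan_commands(HALLWAYS, KINDS, "lab", "east-end")` produces the same five-command sequence as the example in the text.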

The Fuzzy Supervisory Controller uses the information received from the Hallway Follower, the Distance-to-Junction Estimator, and the Landmark Detector, all of which consist of neural networks, to decide how to control the robot. This control must of course be in accordance with the sequence of top-level commands received initially from the Path Planner. The Fuzzy Supervisory Controller uses three linguistic variables: distance-to-junction, turn-angle, and distance-to-travel. Associated with these linguistic variables are a total of sixteen fuzzy terms.

Vision Processing in FUZZY-NAV

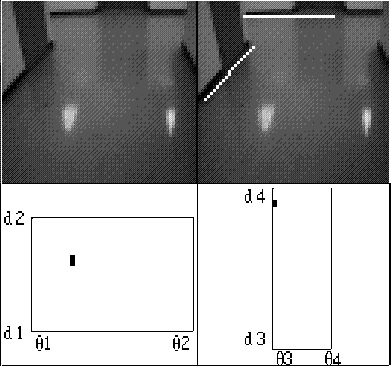

The following figure shows a downsampled camera image in the upper left corner; the extracted edges are shown in the upper right image. The edge image is then mapped into the Hough space. Different regions of the Hough space are used as input for the different neural networks in the system. The portion [d1, d2] x [theta1, theta2] is used as the input region for the Hallway Follower module, whereas the portion of the Hough space bounded by [d3, d4] x [theta3, theta4] is used as input for the Distance-to-Junction Estimator Module. The lower left of the figure shows the part of the Hough space, derived from the edge image at the upper right, that is used as input to the Hallway Follower Module. The lower right shows the part that is used as input to the Distance-to-Junction Estimator Module.

Figure: Vision processing in FUZZY-NAV.
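The mapping from edge pixels to Hough space, and the selection of a rectangular [d, theta] window as a network input, can be sketched as below. This is a generic Hough accumulator using the standard line model d = x cos(theta) + y sin(theta); the bin counts, the function names, and the window indices are assumptions, because the text does not give the actual resolution or the values of d1..d4 and theta1..theta4.

```python
import math


def hough_accumulator(edge_points, width, height, n_d=32, n_theta=36):
    """Vote each edge pixel (x, y) into an n_d x n_theta accumulator.

    Rows index the signed distance d, columns index theta in [0, pi).
    """
    d_max = math.hypot(width, height)  # largest possible |d|
    acc = [[0] * n_theta for _ in range(n_d)]
    for x, y in edge_points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            d = x * math.cos(theta) + y * math.sin(theta)
            # Map d from [-d_max, d_max] onto a row index.
            row = int((d + d_max) / (2 * d_max) * (n_d - 1))
            acc[row][t] += 1
    return acc


def hough_region(acc, d_lo, d_hi, t_lo, t_hi):
    """Flatten the window [d_lo, d_hi) x [t_lo, t_hi) of bin indices
    into a vector suitable for a network's input nodes."""
    return [acc[r][t]
            for r in range(d_lo, d_hi)
            for t in range(t_lo, t_hi)]
```

For example, the edge pixels of a vertical line all share the same d at theta = 0, so their votes pile up in a single bin of the first column; a trained network would see that peak in whichever window covers it.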

On the CRT display of the robot, shown in the robot hardware figure, composite images such as the one in the following figure pop up as the robot navigates down the hallway. As the vision data is processed by each of the three vision-based modules, one of the three iconic figures also pops up. For example, after the Hallway Follower has processed its part of the Hough space of the camera image, the iconic figure at the upper left pops up on the display. In this example, the output node right_10 lights up, meaning that the Hallway Follower is recommending that the robot turn to the right by 10 degrees in order to stay in the middle of the hallway. Subsequently, the iconic figure at the upper right shows up; here the output node far lights up, so the Distance-to-Junction Estimator is indicating that the distance to the junction is far. All of this information is fed into the Fuzzy Supervisory Controller. When this happens, the iconic figure at the bottom pops up on the screen. This figure shows that the Fuzzy Supervisory Controller takes in the recommendations far for distance to junction and right_10 for the turn angle and issues its decisions regarding what the robot actions should be.

As the iconic diagram shows, in this case a total of three rules fired that made assertions about how far the robot should continue to travel in a straight line: two of them asserted that the value of the linguistic variable distance-to-travel should be long, while one asserted that it should be medium. The same three rules also asserted that the value of the linguistic variable turn-angle should be zero, right-20, and right-10. Defuzzifying these assertions yields 5.9 feet as the latest estimate of how far the robot should travel before expecting to see the junction, and a turn angle of 9 degrees to bring the robot to approximately the middle of the hallway.

Figure: Fuzzy supervisory control for motion command.
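The defuzzification step can be illustrated with a weighted average of term centers, one common way to collapse several fired rules into a single crisp value. Everything numeric below is hypothetical: the source does not give the membership functions, the firing strengths, the pairing of distance and angle terms within each rule, or the defuzzification method used, so this sketch is not expected to reproduce the 5.9 feet and 9 degrees quoted above.

```python
# Hypothetical representative values (centers) for each fuzzy term.
TURN_CENTERS_DEG = {"zero": 0.0, "right-10": 10.0, "right-20": 20.0}
TRAVEL_CENTERS_FT = {"medium": 4.0, "long": 8.0}


def defuzzify(assertions, centers):
    """Weighted average of term centers.

    assertions: list of (term, firing_strength), one per fired rule.
    centers:    term -> crisp representative value.
    Returns None when no rule fired with non-zero strength.
    """
    total = sum(strength for _, strength in assertions)
    if total == 0:
        return None
    weighted = sum(centers[term] * strength
                   for term, strength in assertions)
    return weighted / total


# Three fired rules with hypothetical firing strengths.
turn_assertions = [("zero", 0.5), ("right-20", 0.25), ("right-10", 0.25)]
travel_assertions = [("long", 0.5), ("long", 0.25), ("medium", 0.25)]

turn_angle = defuzzify(turn_assertions, TURN_CENTERS_DEG)
distance_to_travel = defuzzify(travel_assertions, TRAVEL_CENTERS_FT)
```

The point of the sketch is only that the crisp output lands between the asserted terms, pulled toward the rules that fired most strongly, which is why three assertions of zero, right-20, and right-10 can yield an intermediate angle.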

J. Pan, D. J. Pack, and A. C. Kak, "Fuzzy-Nav: A Vision-Based Robot Navigation Architecture using Fuzzy Inference for Uncertainty Reasoning," Proceedings of the World Congress on Neural Networks, Vol. 2, pp. 602-607, July 17-21, Washington DC, 1995. [ps, 2.0MB]

J. Pan and A. C. Kak, "Design of a Large-Scale Expert System using Fuzzy Logic for Uncertainty Reasoning," Proceedings of the World Congress on Neural Networks, Vol. 2, pp. 703-708, July 17-21, Washington DC, 1995. [ps, 0.2MB]

A. Kosaka and J. Pan, "Purdue Experiments in Model-based Vision for Hallway Navigation," Proceedings of Workshop on Vision for Robots in IROS'95, pp. 87-96, 1995. [ps, 4.2MB]
