New research in media forensics is bringing automated algorithms to foil fake news and detect falsified scientific data.

Some of the work is aimed at helping the U.S. military and intelligence communities quickly and automatically distinguish faked photos and videos from the real thing. New applications harness deep learning, so named because it relies on neural networks with many layers that learn to perform tasks from training data.

A team of researchers from seven universities, led by Purdue, has developed an “end-to-end” system capable of handling the massive volume of media uploaded regularly to the Internet.

“The military collects a lot of open-source images and videos. The question they want to ask is, are they real or not? We built our end-to-end system to do that,” says Edward Delp, the Charles William Harrison Distinguished Professor of Electrical and Computer Engineering. “We are running it and testing it, and we are finding that it can detect forgeries really well.”

Such a system could also have commercial applications, giving news and social media platforms a tool to authenticate images and video before posting them.

“Since we started working on this project the term ‘fake news’ has taken on new mainstream importance,” says Delp, who is also director of Purdue’s Video and Image Processing Laboratory, or VIPER. “Many tools currently available cannot be used for the tens of millions of images that are out there on the Net. They take too long to run and just don’t scale up to this huge volume, so there is a real need for this capability.”

The project is funded over four years with a $4.9 million grant from the U.S. Defense Advanced Research Projects Agency (DARPA). Partner universities include the University of Notre Dame, New York University, University of Southern California, University of Siena in Italy, Politecnico di Milano in Italy, and University of Campinas in Brazil.

Extending this research focus, Delp’s team is also applying its expertise to identify tampered satellite imagery. And, in other research also funded by DARPA, his lab is developing a system that automatically screens scientific papers to determine whether they contain faked data.

“There are a number of scientific papers that are fake and that actually have to be retracted every year because the scientists lied,” he says. “In fact, there is a website called Retraction Watch, and it shows all the papers people have written that have had to be retracted because of faked data.”

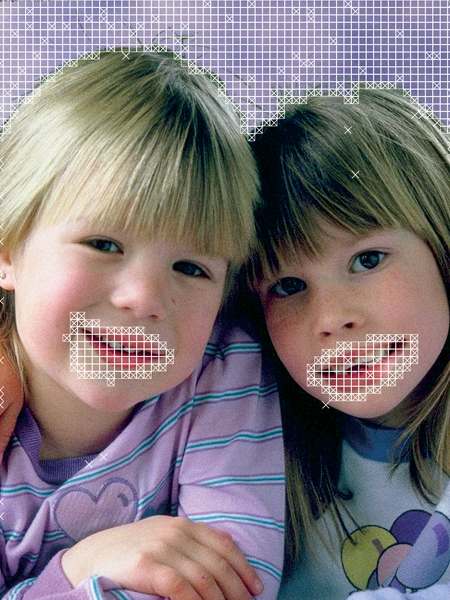

One way to falsify research is to doctor images such as microscope photos, and scrutinizing such images with deep-learning algorithms is a focus of Delp’s group. “We are building tools to look at scientific publications and see if the data, specifically the images in the scientific publications, are real or have been faked,” he says.

The research is innovative, in part because deep learning has not yet been applied extensively to media forensics.