Emerging advances stem from the realization that deep neural networks, a type of deep-learning architecture, could become much more powerful by using many layers of processing.

“I have never seen anything so disruptive and transformational as deep learning,” says Charles Bouman, Purdue’s Showalter Professor of Electrical and Computer Engineering and Biomedical Engineering.

For example, in the realm of voice recognition, consumers have seen the advent of Siri and Alexa, Bouman says. “At the same time, deep learning is very disruptive in the research field, changing a wide range of research topics in fundamental ways. We use deep learning now in almost everything we do. It’s been an incredibly energetic time. For example, our research is moving in the direction of laboratory experiments that can be controlled through artificial intelligence, a self-driving laboratory where we use AI to determine where to make the next measurement in a way that is most informative for either accepting or rejecting our hypothesis.”

Integrated Imaging Is Key to the Research

Central to the research is integrated imaging, where deep-learning algorithms are integrated within sensing systems. “Real sensors are not just hardware. They are a combination of hardware and algorithms, and that’s why we call it integrated imaging,” says Bouman, who leads an integrated imaging research cluster at Purdue.

The deep-learning algorithms promise to bring a sea change to many fields, including manufacturing and medical diagnostics.

“We are applying the techniques of data science, exploiting the newest methods in machine learning and artificial intelligence to extract more information from sensors,” he says. “Instead of just collecting huge quantities of data we collect some data, and then determine where to collect the next data in a way that’s optimal, so we can get the most information with the fewest measurements.”

Bouman says this can be achieved both by using prior information about the physics of the sensor and the objects being imaged and by acquiring data dynamically, much like what people do when they observe a scene. “People are dynamic in the sense that they look at something and based on what they see, they collect more information by adapting how they look at the scene,” he says.
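The idea of measuring a little and then deciding where to measure next can be illustrated with a toy example. The sketch below is only an assumption-laden illustration, not the researchers' method: `measure` is a hypothetical one-dimensional sensor with a single sharp edge, and `adaptive_sample` uses a simple greedy rule (sample the midpoint of the interval where the readings change the most) as a crude stand-in for "most informative."

```python
import math

def measure(x):
    """Hypothetical sensor reading: a flat signal with one sharp edge near x = 0.62."""
    return 1.0 / (1.0 + math.exp(-60.0 * (x - 0.62)))

def adaptive_sample(measure_fn, n_initial=5, n_total=15):
    """Greedy adaptive sampling on the interval [0, 1].

    Start from a coarse uniform grid, then repeatedly measure the midpoint of
    the interval whose endpoint readings differ the most (ties are broken by
    interval width). This is a crude proxy for the most informative location.
    """
    xs = [i / (n_initial - 1) for i in range(n_initial)]
    ys = [measure_fn(x) for x in xs]
    while len(xs) < n_total:
        # Score each interval by how much the signal changes across it.
        scores = [
            (abs(ys[i + 1] - ys[i]), xs[i + 1] - xs[i], i)
            for i in range(len(xs) - 1)
        ]
        _, _, i = max(scores)
        x_new = 0.5 * (xs[i] + xs[i + 1])
        xs.insert(i + 1, x_new)
        ys.insert(i + 1, measure_fn(x_new))
    return xs, ys

xs, ys = adaptive_sample(measure)
near_edge = sum(1 for x in xs if abs(x - 0.62) < 0.15)
print(f"{near_edge} of {len(xs)} samples fall within 0.15 of the edge")
```

With the same measurement budget, a uniform grid would spend most of its samples on the flat regions, while the greedy rule concentrates them where the signal actually changes.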

The algorithms are needed to reconstruct complete images from the “sparse data” collected by sensors such as the LIDAR (Light Detection and Ranging) units in self-driving cars and the cameras in cell phones.

“LIDAR can only measure at sparse locations, so you want to measure in locations that are most informative and then build a picture of what’s out in the world,” Bouman says. “Your cell phone camera doesn’t measure directly the image you get; it measures pixels at sparse locations and different wavelengths, and then algorithms assemble a photograph.”

Advances Are Speeding Computations with AI

The researchers are incorporating deep-learning algorithms into an approach called model-based iterative reconstruction, or MBIR.

“This is more-or-less how humans solve problems by trial and error, assessing probability and discarding extraneous information,” Bouman says.
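Model-based iterative reconstruction is commonly framed as repeatedly adjusting a candidate image so that it both matches the measurements (through a forward model of the sensor) and stays plausible under a prior model. The toy sketch below illustrates that framing under loud assumptions: the "sensor" simply reads every fourth position of a one-dimensional signal, the prior is plain smoothness, and the solver is basic gradient descent. The names `forward`, `reconstruct`, `lam`, and `step` are hypothetical, and real CT reconstruction uses far richer forward and prior models.

```python
import math
import random

def forward(x, measured_idx):
    """Assumed forward model: the sensor reads only a subset of positions."""
    return [x[i] for i in measured_idx]

def reconstruct(y, measured_idx, n, lam=1.0, step=0.1, n_iters=2000):
    """Minimize ||y - A x||^2 + lam * sum_j (x[j+1] - x[j])^2 by gradient descent."""
    x = [0.0] * n
    for _ in range(n_iters):
        grad = [0.0] * n
        # Data-fit term: compare predicted measurements with the actual ones.
        pred = forward(x, measured_idx)
        for k, i in enumerate(measured_idx):
            grad[i] += 2.0 * (pred[k] - y[k])
        # Prior term: penalize differences between neighbors (smoothness).
        for j in range(n - 1):
            d = x[j + 1] - x[j]
            grad[j] -= 2.0 * lam * d
            grad[j + 1] += 2.0 * lam * d
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x

def rmse(a, b):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a))

n = 41
x_true = [math.sin(2.0 * math.pi * j / 40.0) for j in range(n)]
measured_idx = list(range(0, n, 4))  # only every 4th position is measured
rng = random.Random(0)               # fixed seed so the noise is repeatable
y = [v + rng.gauss(0.0, 0.05) for v in forward(x_true, measured_idx)]

x_hat = reconstruct(y, measured_idx, n)

# Baseline for comparison: keep the raw readings and leave the gaps at zero.
zero_fill = [0.0] * n
for k, i in enumerate(measured_idx):
    zero_fill[i] = y[k]

print(f"zero-fill RMSE:      {rmse(zero_fill, x_true):.3f}")
print(f"reconstruction RMSE: {rmse(x_hat, x_true):.3f}")
```

The weight `lam` trades off trust in the noisy measurements against trust in the prior; in this sketch it is set by hand.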

MBIR could bring more affordable CT scanners for airport screening. In conventional scanners, an X-ray source rotates at high speed around a chamber, capturing cross-section images of luggage placed inside the chamber. However, MBIR enables the machines to be simplified by eliminating the need for the rotating mechanism. It also has been used in a new CT scanning technology that exposes patients to one-fourth the radiation of conventional CT scanners. MBIR reduces “noise” in the data, providing greater clarity that allows the radiologist or radiological technician to scan the patient at a lower dosage, Bouman says.

“It’s like having night-vision goggles,” he says. “They enable you to see in very low light, just as MBIR allows you to take high-quality CT scans with a low-power X-ray source.”

Deep learning also allows for faster computation. “If you wanted to, you could build complex physics models that are very accurate but can be computationally expensive and difficult to run,” he says. “But what we do is train machine-learning algorithms to approximate the behavior of the physics model, and it’s much faster. So, in some cases, we replace first-principle physics models with these machine-learning methods and they can effectively speed the computations dramatically.”
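The surrogate idea can be shown on a very small scale. In the sketch below, which is an illustration under stated assumptions rather than the team's approach, the "expensive" physics model is a fine-grained numerical integration of a pendulum's period, and the learned approximation is a small polynomial fitted by least squares, standing in for a neural network. All function names (`slow_period`, `train_surrogate`, and so on) are hypothetical.

```python
import math

def slow_period(theta0, n_steps=20000):
    """'Expensive' physics model: period in seconds of a 1 m pendulum released
    at angle theta0 (radians), computed by fine-grained numerical integration."""
    k = math.sin(theta0 / 2.0)
    h = (math.pi / 2.0) / n_steps
    # Midpoint rule for the complete elliptic integral of the first kind.
    total = sum(
        1.0 / math.sqrt(1.0 - (k * math.sin((i + 0.5) * h)) ** 2)
        for i in range(n_steps)
    )
    return 4.0 * math.sqrt(1.0 / 9.81) * total * h

def features(theta0, degree=3):
    """Polynomial features in theta0**2 (the period is an even function of theta0)."""
    u = theta0 * theta0
    return [u ** p for p in range(degree + 1)]

def solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def train_surrogate(train_angles):
    """Fit the polynomial to outputs of the slow model (least squares)."""
    rows = [features(t) for t in train_angles]
    targets = [slow_period(t) for t in train_angles]
    d = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(d)] for i in range(d)]
    atb = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(d)]
    weights = solve(ata, atb)
    return lambda theta0: sum(w * f for w, f in zip(weights, features(theta0)))

# Run the slow model 20 times to create training data, then fit the surrogate.
train_angles = [1.5 * i / 19 for i in range(20)]
fast_period = train_surrogate(train_angles)

test_angle = 0.8  # an angle that was not in the training set
print(slow_period(test_angle), fast_period(test_angle))
```

Once trained, `fast_period` evaluates a handful of multiplications instead of tens of thousands of integration steps, but it should only be trusted inside the range of angles it was trained on.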

The approach is being adapted to detect flaws in jet engine parts created with additive manufacturing and in concrete well bores for geothermal energy systems.

“An advantage of additive manufacturing is that you can get complex shapes that are unusual and allow for better performance and reduced cost,” he says. “But a problem is that you need to make sure the part has structural integrity, and it’s hard to look inside these parts to detect defects that could affect the structural integrity. We do CT imaging of the parts and are developing algorithms to do this.”

Adaptive Optics Produces Undistorted Images

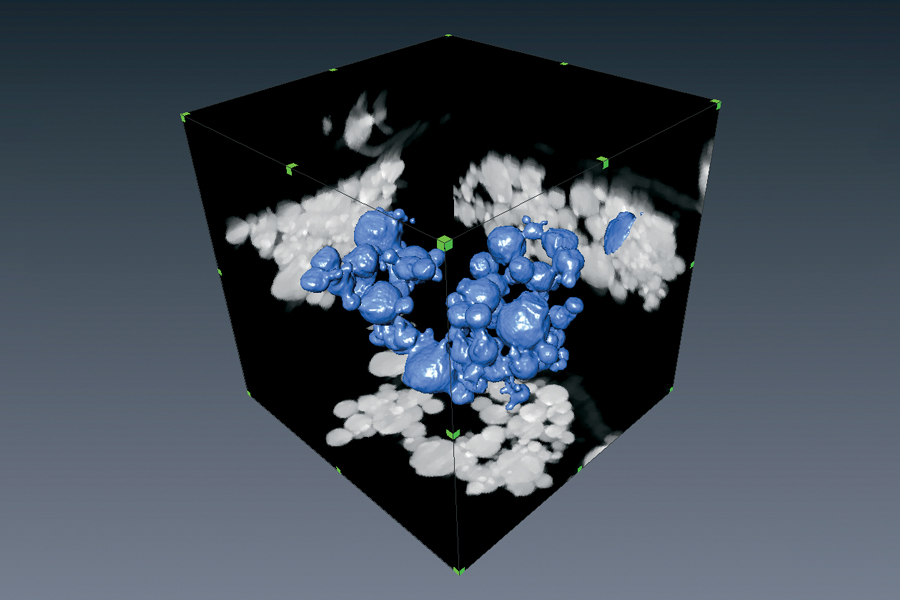

The advanced algorithms also are being harnessed for adaptive optics, making it possible to counteract the distortion caused by “deep turbulence” when imaging through the atmosphere, or in biological and chemical samples in a microscope.

“If you have ever seen the wavy distortion of light over a hot barbecue, that’s because of a phase distortion caused by temperature variations of the air,” he says.

“The same sort of distortion occurs in materials. Deep-learning algorithms are now being used to infer adaptively those optical phase distortions and correct for them in real time.”

The research also involves the use of large-scale supercomputing networks based on graphics processing units, or GPUs.

“The GPUs are at the cutting edge of running these algorithms for training,” he says. “The algorithms can be incorporated into all kinds of applications, from self-driving cars to speech recognition to the kinds of sensing technologies we are developing.”

Some of his research is affiliated with the Awareness and Localization of Explosives Related Threats center (ALERT). Also leading the ALERT team are Stephen Beaudoin, professor of chemical engineering and director of graduate admissions in the School of Chemical Engineering, and Steven Son, the Alfred J. McAllister Professor of Mechanical Engineering.

“As part of our work for ALERT, we are creating a new generation of algorithms for CT reconstruction in airport security scanners,” Bouman says. “These algorithms have the potential to both increase the safety of travel and also reduce inconvenience and cost to the public.”