Today, much of our business is conducted through web services. As users, we tend to assume that web transactions succeed, without considering the complex back-end processes involved. In reality, web requests do fail, and it is crucial to understand why they fail, how the failures manifest to the end user, and what can be done to detect, diagnose, and recover from them. This project aims to answer these questions through experiments on state-of-the-art web service applications and benchmarks.

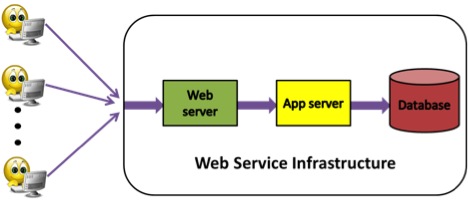

A typical web service architecture serving user requests is composed of a front-end web server, a middle-tier application server, and a back-end database. We set up our testbed infrastructure with this architecture (Figure 1). To simulate web users sending web requests, we set up the client layer with an HTTP request emulator called Grinder, and we use Glassfish, an application server, to provide the business functionality of a given web application. The application server executes the application logic and builds database requests, which are sent to the back-end database.

Figure 1. Web Request Flow

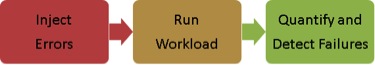

We employ a fault-injection-based methodology to evaluate a given web service. First, representative errors are injected into a web application. The workload is then run, and the failure manifestations are observed from an end-user perspective. We classify failure manifestations into three classes: non-silent, non-silent-interactive, and silent. A non-silent failure occurs when the user sees a visible failure in the web browser, e.g., an HTTP “500 Internal Server Error”. A non-silent-interactive failure occurs when the user finds the returned data unusual and concludes that something went wrong, e.g., the user searches for five valid items but only three are returned. A silent failure occurs when the user observes no visible sign of the failure.

To detect these failures at the server end, we identify system features that discriminate between failed and successful web requests. An example of such a feature is call-length, which, if monitored, can detect failed web requests with reasonable accuracy. So that a computer system can monitor them, these features are encoded as rules that raise an alert when violated. A rule can be application-specific, in which case some domain knowledge is required to learn its parameters, or application-generic, in which case it works across all applications. The rules are then embedded within the application. Embedding is made transparent by using standard technologies such as filters and interceptors, which keeps the application code from being polluted with monitoring logic.

The process flow (Figure 2) and major goals of this project are given below:

Figure 2. Process Flow Diagram

- Quantification of application failure modes (silent vs. non-silent) for a given web service.

- Derivation of rules that detect web service failures with reasonable accuracy.

- Analysis of basic design patterns that lead to robust web services.
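
The rule-based detection described above can be illustrated with a small sketch. It is not the project's implementation: the testbed runs on a Java application server, where a servlet filter or an interceptor would play the role that a Python decorator plays here. The names `CallLengthRule`, `learn`, `monitored`, and `search_items` are hypothetical, and so are the `slack` factor and the sample durations. The sketch also assumes that call-length means the elapsed time of a handled call, which the text does not define. It shows an application-specific rule whose bound is learned from fault-free runs and then attached to a handler without changing the handler's code.

```python
import functools
import time
from dataclasses import dataclass, field
from typing import List


@dataclass
class CallLengthRule:
    """Hypothetical rule: alert when a call takes longer than a learned bound.

    Assumption: "call-length" is taken to be the elapsed time of a call.
    """
    upper_bound_s: float
    alerts: List[str] = field(default_factory=list)

    @classmethod
    def learn(cls, fault_free_durations_s, slack=1.5):
        # Application-specific parameter: derive the bound from durations
        # observed in fault-free runs (slack factor is an assumed choice).
        return cls(upper_bound_s=max(fault_free_durations_s) * slack)

    def check(self, name, elapsed_s):
        # Record an alert when the rule is violated; return True otherwise.
        if elapsed_s > self.upper_bound_s:
            self.alerts.append(
                f"{name}: call-length {elapsed_s:.3f}s exceeds "
                f"bound {self.upper_bound_s:.3f}s"
            )
            return False
        return True


def monitored(rule, clock=time.perf_counter):
    """Interceptor-style decorator: time the handler, then check the rule."""
    def decorate(handler):
        @functools.wraps(handler)
        def wrapper(*args, **kwargs):
            start = clock()
            try:
                return handler(*args, **kwargs)
            finally:
                # Runs whether the handler returns or raises.
                rule.check(handler.__name__, clock() - start)
        return wrapper
    return decorate


# Learn the bound from made-up fault-free durations (in seconds).
rule = CallLengthRule.learn([0.10, 0.12, 0.08])

# A scripted clock keeps the example deterministic: the first call appears
# to take 0.05 s, the second 0.9 s.
ticks = iter([0.0, 0.05, 1.0, 1.9])


@monitored(rule, clock=lambda: next(ticks))
def search_items(query):
    # Business logic stays unaware of the monitoring rule.
    return [f"result for {query}"]


search_items("books")   # within the bound, no alert
search_items("music")   # exceeds the bound, one alert is recorded
print(rule.alerts)
```

Because the rule is attached from outside the handler, it can be replaced or removed without touching the business logic, which is the property the filter and interceptor approach is meant to provide. An application-generic rule would differ only in using a fixed bound instead of one learned per application.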