An Approach-Path Independent Framework for Place Recognition and Mobile Robot Localization in Interior Hallways

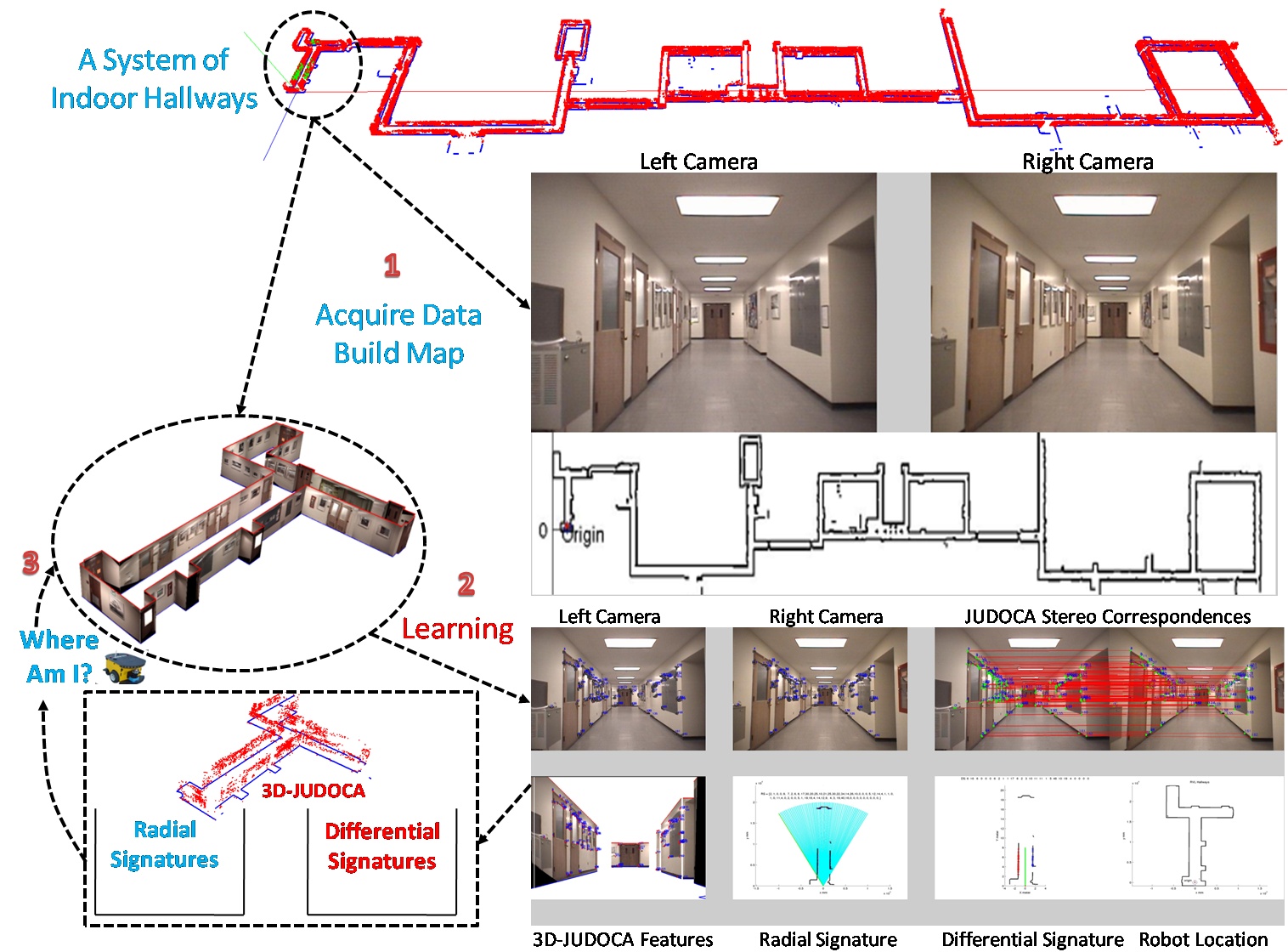

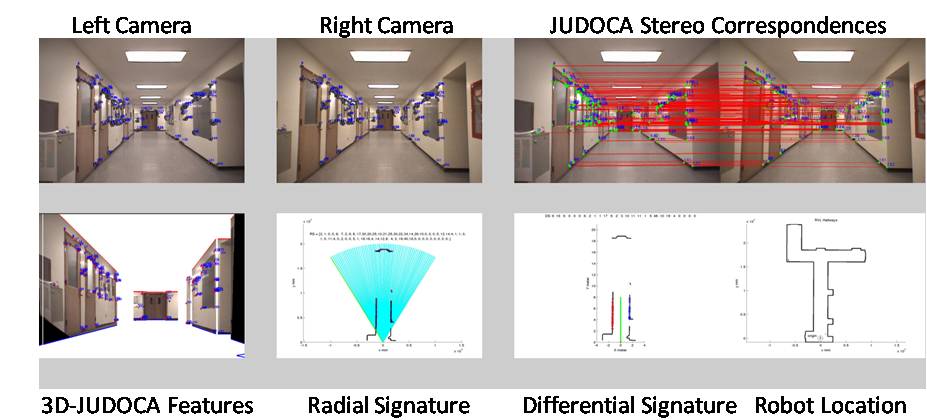

This work addresses robot self-localization from stereo images of the environment. We use 3D-JUDOCA features, shown in Figure 1, which are highly robust to viewpoint variations. We also present a novel cylindrical data structure, the Feature Cylinder, that expedites localization within two hypothesize-and-verify frameworks.
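
This page does not spell out how the Feature Cylinder or the two frameworks work, so the sketch below illustrates only the generic hypothesize-and-verify pattern (RANSAC-style), not the authors' method. It assumes feature correspondences have already been found and projected onto the floor plane as 2D points, and it estimates a planar robot pose (tx, ty, theta): each iteration hypothesizes a pose from two randomly chosen correspondences and verifies it by counting how many other correspondences that pose explains. The function names and the `tol` threshold are hypothetical.

```python
import math
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Match = Tuple[Point, Point]        # (point in robot frame, matched point in map frame)
Pose = Tuple[float, float, float]  # (tx, ty, theta): robot frame -> map frame

def apply_pose(pose: Pose, p: Point) -> Point:
    """Rotate p by theta, then translate by (tx, ty)."""
    tx, ty, th = pose
    c, s = math.cos(th), math.sin(th)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def pose_from_two(m1: Match, m2: Match) -> Pose:
    """Hypothesis step: align direction p1->p2 with q1->q2, then map p1 onto q1."""
    (p1, q1), (p2, q2) = m1, m2
    theta = (math.atan2(q2[1] - q1[1], q2[0] - q1[0])
             - math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    theta = math.atan2(math.sin(theta), math.cos(theta))  # wrap to [-pi, pi]
    c, s = math.cos(theta), math.sin(theta)
    # Translation t = q1 - R * p1
    return (q1[0] - (c * p1[0] - s * p1[1]), q1[1] - (s * p1[0] + c * p1[1]), theta)

def hypothesize_and_verify(matches: List[Match], iters: int = 200,
                           tol: float = 0.05, seed: int = 0
                           ) -> Tuple[Optional[Pose], List[Match]]:
    rng = random.Random(seed)
    best_pose: Optional[Pose] = None
    best_inliers: List[Match] = []
    for _ in range(iters):
        m1, m2 = rng.sample(matches, 2)
        if math.dist(m1[0], m2[0]) < 1e-6:
            continue  # two coincident robot-frame points cannot fix a heading
        pose = pose_from_two(m1, m2)
        # Verification step: keep correspondences this pose maps within tol of their match.
        inliers = [m for m in matches if math.dist(apply_pose(pose, m[0]), m[1]) < tol]
        if len(inliers) > len(best_inliers):
            best_pose, best_inliers = pose, inliers
    return best_pose, best_inliers
```

Because a hypothesis needs only two correspondences, a good one is very likely to be drawn within a few hundred iterations when most matches are correct; the verification count is what rejects poses produced by mismatched pairs.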

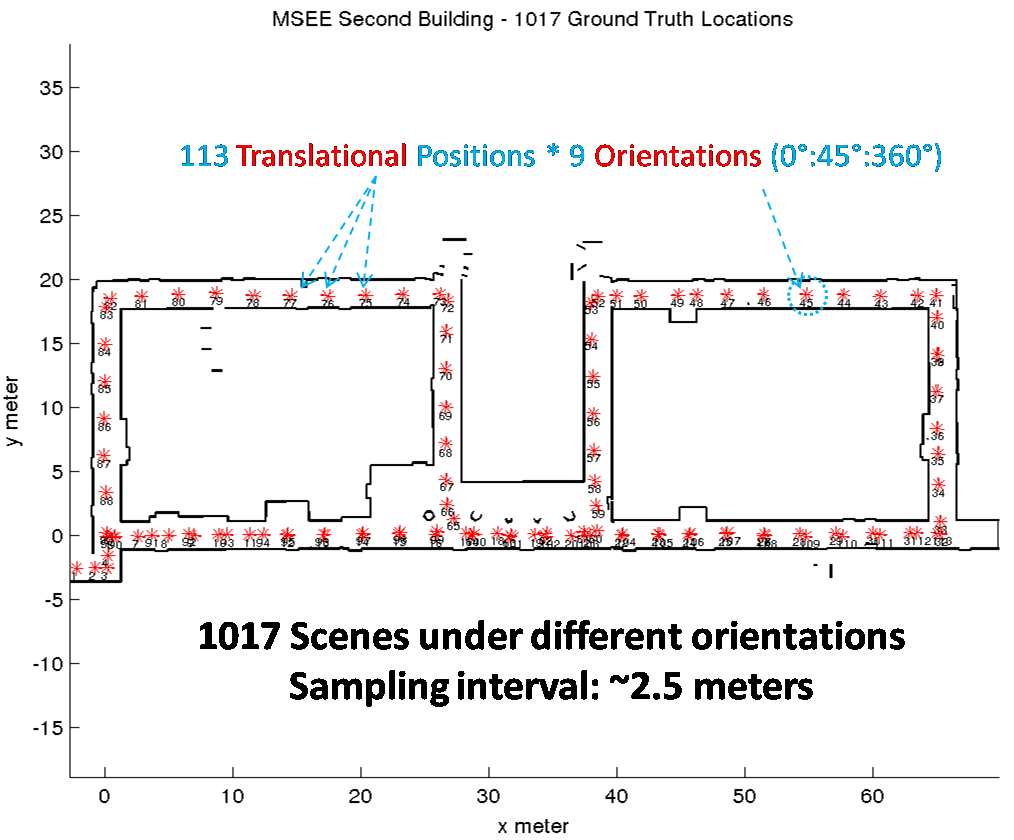

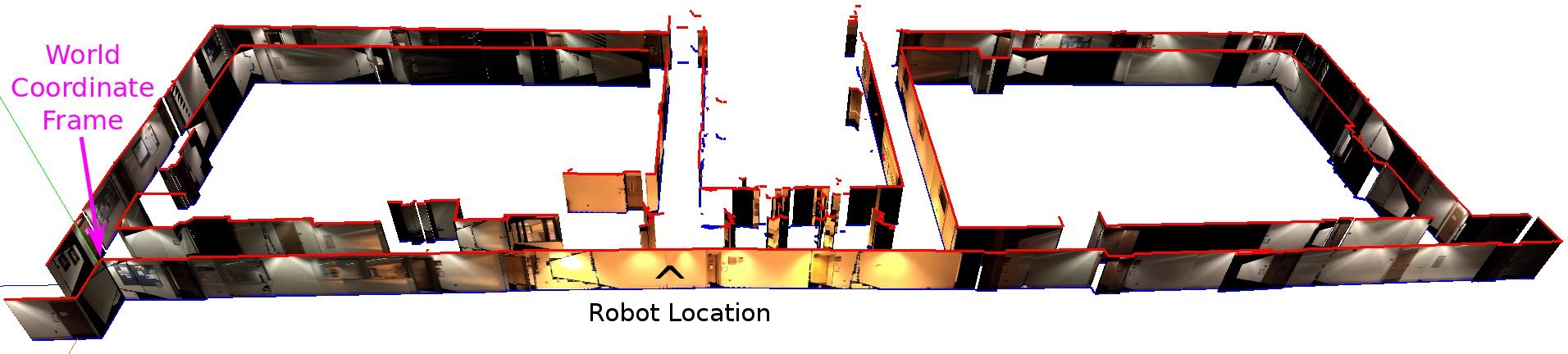

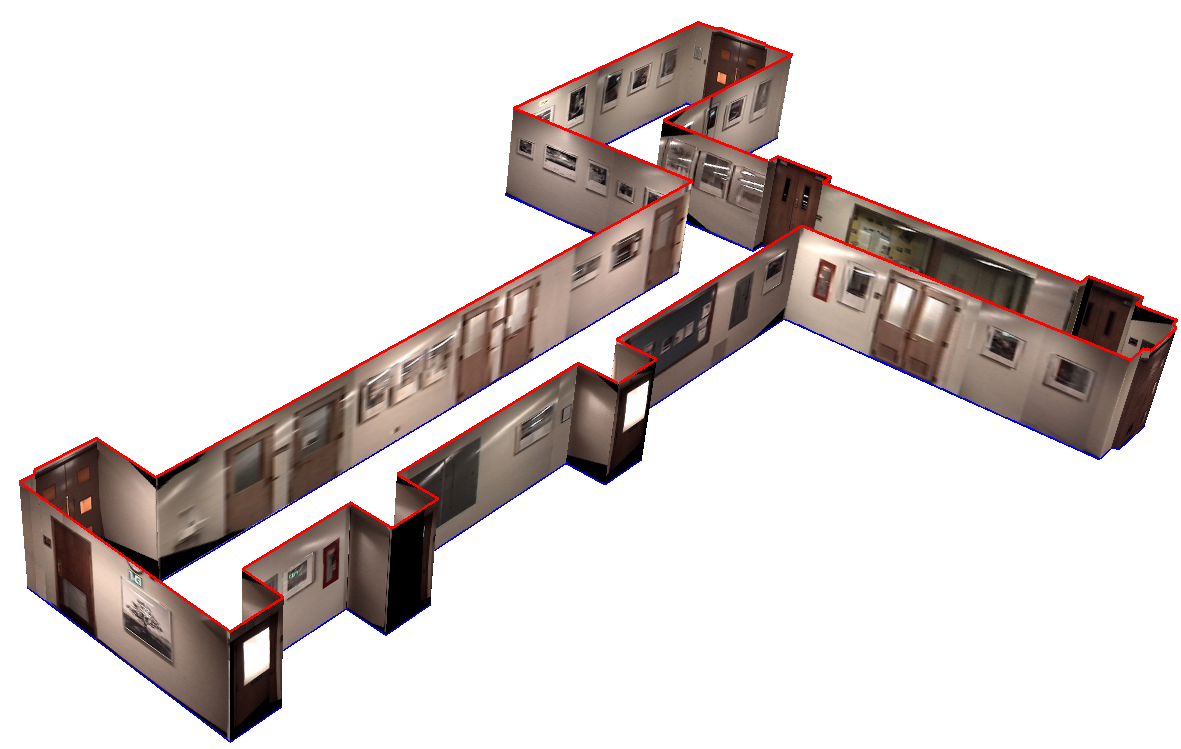

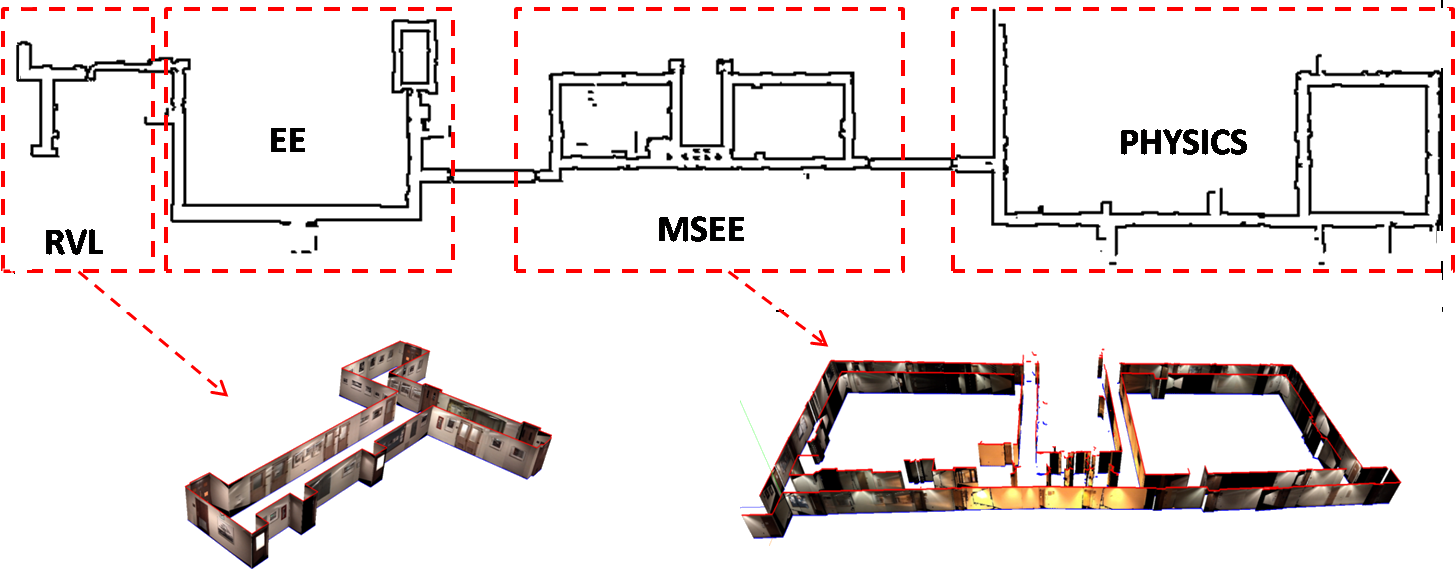

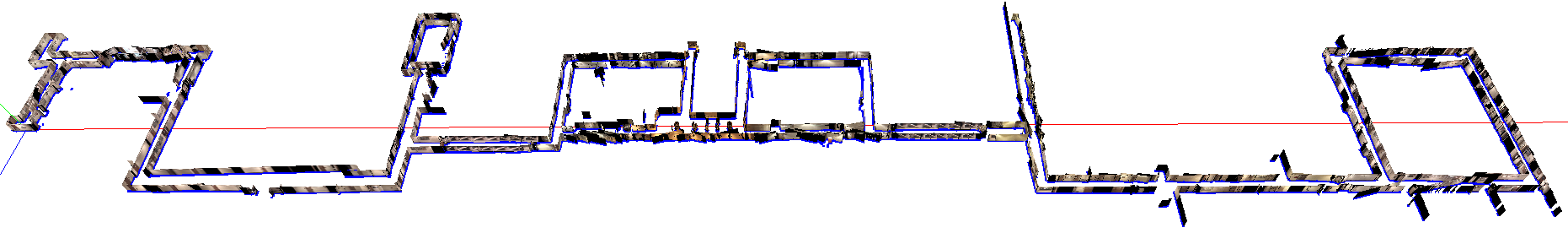

Our performance evaluation consists of two main phases: training (learning) and testing (recognition). During the training phase, a system of indoor hallways is learned by running our map-building system. The learned data consist of a 3D map of the interior hallways in which all of the robot's poses are registered; a set of 3D-JUDOCA features extracted from the stereo image pairs recorded by the robot; and, for the identified locales only, DS and RS signatures derived from those 3D-JUDOCA features. Figure 2 depicts the overall performance evaluation setup. Once training is complete, our matching algorithms run in real time: the robot is taken to a random place in the same environment and asked to localize itself. The experimental results are based on several databases of recorded data (stereo images captured by our robot).
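
The testing phase can be pictured as a cascade, in the spirit of the signature-based cascaded filtering framework cited below: a cheap comparison of per-locale signatures first prunes the locale database, and a more expensive feature-level check then runs only on the surviving locales. This is a minimal sketch under stated assumptions: each learned locale is assumed to store one signature vector and a list of descriptor tuples, which are hypothetical stand-ins because the exact definitions of the DS and RS signatures and of the 3D-JUDOCA descriptors are not given here. The class, the functions, and the `keep` and `max_dist` parameters are illustrative only.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vector = Tuple[float, ...]

@dataclass
class Locale:
    name: str
    signature: List[float]     # hypothetical stand-in for a locale's DS/RS signature
    descriptors: List[Vector]  # hypothetical stand-in for its 3D-JUDOCA descriptors

def count_matches(query: List[Vector], reference: List[Vector],
                  max_dist: float = 0.1) -> int:
    """Expensive stage: brute-force nearest-neighbour test for each query descriptor."""
    if not reference:
        return 0
    return sum(1 for q in query
               if min(math.dist(q, r) for r in reference) < max_dist)

def localize(query_sig: List[float], query_desc: List[Vector],
             database: List[Locale], keep: int = 2) -> str:
    # Stage 1 (cheap): keep only the `keep` locales with the closest signatures.
    shortlist = sorted(database,
                       key=lambda loc: math.dist(query_sig, loc.signature))[:keep]
    # Stage 2 (expensive): feature-level verification on the shortlist only.
    best = max(shortlist, key=lambda loc: count_matches(query_desc, loc.descriptors))
    return best.name
```

The point of the cascade is cost: the quadratic descriptor comparison in stage 2 touches only `keep` locales instead of the whole database.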

All of this work is based on the premise that a robot must carry out place recognition and self-localization with zero prior history. This is the worst-case scenario. In practice, once a robot has recognized a place and localized itself, any subsequent attempt to do the same would need to examine a smaller portion of the search space than in the zero-history case. Our goal is to create a complete framework that allows prior history to be taken into account when a robot tries to determine where it is in a complex indoor environment.
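
To illustrate how prior history could shrink the search space, the sketch below assumes that each locale has a known 2D position in the learned map and that an upper bound on the distance travelled since the last successful localization is available; both inputs, and the function name, are hypothetical. With no history it returns every locale, which is the zero-history case described above; otherwise it keeps only locales within reach.

```python
import math
from typing import Dict, List, Optional, Tuple

def candidate_locales(
    locale_xy: Dict[str, Tuple[float, float]],
    last_xy: Optional[Tuple[float, float]] = None,
    max_travel: float = math.inf,
) -> List[str]:
    """Return the locale names worth searching, given optional prior history."""
    everything = sorted(locale_xy)
    if last_xy is None:
        # Zero-history (worst) case: the whole learned map must be searched.
        return everything
    # With history, only locales reachable since the last fix remain candidates.
    nearby = sorted(name for name, xy in locale_xy.items()
                    if math.dist(xy, last_xy) <= max_travel)
    # If the history is stale or wrong and nothing qualifies, fall back to the full map.
    return nearby or everything
```

Falling back to the full map is one possible design choice here: it keeps a bad prior from making localization impossible, at the price of reverting to the worst-case search.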

• Khalil Ahmad Yousef

• Johnny Park

• Avinash Kak

The stereo-image datasets used in the performance evaluations of the publications listed below are available here:

• MSEE Building - Second Floor: [dataset, 96 MB]

• Robot Vision Lab Hallways: 333 Locales [dataset, 52 MB]

• The hallways on the second floor of Purdue’s EE, MSEE, and Physics buildings: 6209 Locales [dataset, 959 MB], [wmv, 1.9 MB]

Khalil Ahmad Yousef, Johnny Park, and Avinash C. Kak, "Place Recognition and Self-Localization in Interior Hallways by Indoor Mobile Robots: A Signature-Based Cascaded Filtering Framework," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, September 14-18, 2014 [pdf]

Khalil M. Ahmad Yousef, Johnny Park, and Avinash C. Kak, "An Approach-Path Independent Framework for Place Recognition and Mobile Robot Localization in Interior Hallways," IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, May 6-10, 2013 [pdf]

Hyukseong Kwon, Khalil M. Ahmad Yousef, and Avinash C. Kak, "Building 3D visual maps of interior space with a new hierarchical sensor fusion architecture," Robotics and Autonomous Systems, ISSN 0921-8890, doi:10.1016/j.robot.2013.04.016 [pdf]