One system allows devices to understand and immediately identify objects in a camera’s field of view, overlaying lines of text that describe items in the environment.

“It analyzes the scene and puts tags on everything,” says Eugenio Culurciello, associate professor of biomedical engineering with appointments in electrical and computer engineering, mechanical engineering, and health and human sciences.

The innovation could find applications in “augmented reality” technologies like Google Glass, facial recognition systems and autonomous cars. “When you give vision to machines, the sky’s the limit,” Culurciello says.

Internet companies already use deep-learning software to tag pictures and video with keywords so that users can search for them on the Web. Such tagging, however, has not been possible on portable devices and home computers. “The deep-learning algorithms that can tag video and images require a lot of computation, so it hasn’t been possible to do this in mobile devices,” Culurciello says.

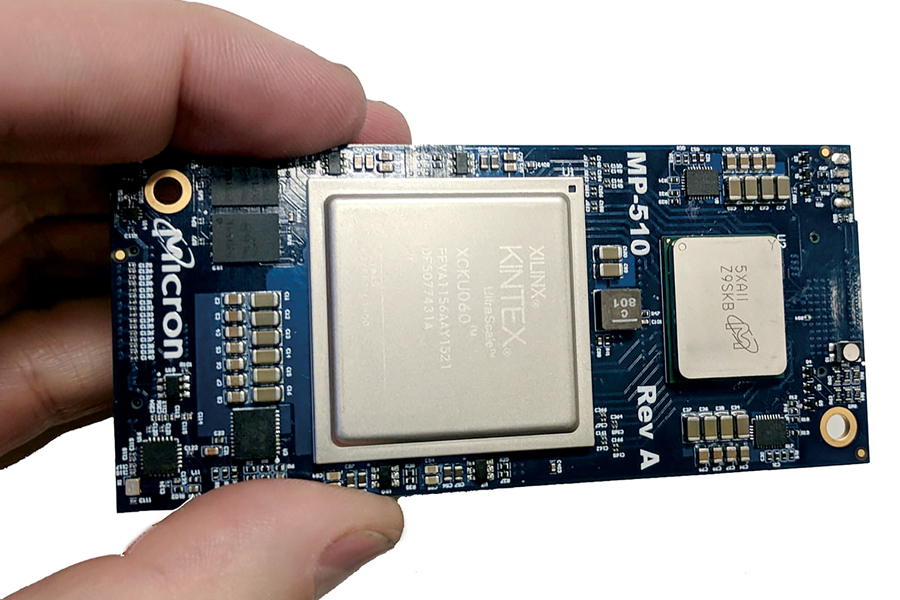

His research group has formed a startup, FWDNXT, based in the Purdue Research Park, that is designing next-generation hardware and software for deep learning. The team has developed Snowflake, a low-power mobile coprocessor that accelerates deep neural networks, which are effective at image recognition and classification. Snowflake was designed primarily to maximize computational efficiency: it processes multiple streams of information so that deep-learning and artificial intelligence techniques can be combined with augmented-reality applications.

New software and hardware might allow a conventional smartphone processor to run deep-learning applications. “We are kind of head-to-head with Google, Nvidia and Intel,” Culurciello says. “We have been focused on designing both very efficient neural network algorithms and very efficient hardware to run the algorithms. Snowflake is very efficient at running the deep-learning, or machine-learning, algorithms.”

In some cases, he says, Snowflake outperforms Nvidia’s GPUs, the standard hardware used for research and development in deep learning and machine learning.

While other research groups have focused on developing more accurate algorithms, Culurciello’s group has concentrated on efficiency. “Accuracy is not really the important metric by itself,” he explains. “What we try to do is maximize accuracy per watt or per operation.” Accuracy matters, but efficiency is critical for applications like autonomous cars. “What good is accuracy if you have to wait 10 seconds for your autonomous car to decide how to act on a critical decision?” he says.
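To make the “accuracy per watt” idea concrete, here is a minimal sketch, assuming a simple selection rule that is not described in the article: discard any model too slow for a latency deadline, then pick the one with the best accuracy per watt. The `ModelProfile` class, the `pick_model` helper and all of the numbers are hypothetical and only illustrate the trade-off Culurciello describes.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    """Hypothetical measurements for one network running on one device."""
    name: str
    accuracy: float     # fraction of test inputs handled correctly, 0.0-1.0
    power_watts: float  # average power drawn during inference
    latency_s: float    # seconds to process one camera frame


def accuracy_per_watt(m: ModelProfile) -> float:
    """Accuracy normalized by power, an efficiency-oriented figure of merit."""
    return m.accuracy / m.power_watts


def pick_model(models: list[ModelProfile], deadline_s: float) -> ModelProfile:
    """Among models fast enough to meet the deadline, return the one with the
    best accuracy per watt; a slow model is rejected however accurate it is."""
    fast_enough = [m for m in models if m.latency_s <= deadline_s]
    if not fast_enough:
        raise ValueError("no model meets the latency deadline")
    return max(fast_enough, key=accuracy_per_watt)


# Made-up numbers for illustration only.
large = ModelProfile("large", accuracy=0.80, power_watts=200.0, latency_s=0.5)
compact = ModelProfile("compact", accuracy=0.72, power_watts=5.0, latency_s=0.03)

choice = pick_model([large, compact], deadline_s=0.1)
print(choice.name, accuracy_per_watt(choice))
```

In this toy case the larger model is more accurate but misses a 0.1-second deadline and draws far more power, so the compact model wins on both counts.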

The goal of FWDNXT is to enable machines to understand their environment so that computers, phones, tablets, wearables and robots can help with daily activities.

Snowflake achieves a computational efficiency of more than 91 percent across an entire convolutional neural network, the deep-learning model of choice for object detection, classification, semantic segmentation and natural-language-processing tasks. On some individual layers, he says, it reaches 99 percent efficiency.
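The article does not define “computational efficiency”; a common definition for accelerators, assumed here, is the fraction of the hardware’s theoretical peak throughput that does useful work. The sketch below follows that assumption: it counts the useful operations in a convolution layer (each multiply-accumulate, or MAC, counted as two operations) and divides by what a hypothetical accelerator with 256 MAC units could do in the same number of cycles. The layer shape, cycle counts and MAC-unit count are invented for illustration and are not Snowflake’s measurements.

```python
def conv2d_ops(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """Useful operations in a dense k x k convolution layer, counting each
    multiply-accumulate (MAC) as two operations."""
    return 2 * h_out * w_out * c_out * c_in * k * k


def efficiency(useful_ops: int, cycles: int, num_macs: int) -> float:
    """Fraction of peak throughput used; each MAC unit can do 2 ops per cycle."""
    peak_ops = 2 * num_macs * cycles
    return useful_ops / peak_ops


def network_efficiency(layers: list[tuple[int, int]], num_macs: int) -> float:
    """Whole-network efficiency from (useful_ops, cycles) pairs per layer.
    Summing before dividing weights each layer by the time it takes."""
    total_ops = sum(ops for ops, _ in layers)
    total_cycles = sum(cycles for _, cycles in layers)
    return efficiency(total_ops, total_cycles, num_macs)


# Hypothetical accelerator with 256 MAC units; layer shape and cycles are invented.
NUM_MACS = 256
layer_ops = conv2d_ops(56, 56, 64, 64, 3)  # 231,211,008 ops
print(efficiency(layer_ops, 500_000, NUM_MACS))
print(network_efficiency([(layer_ops, 500_000), (layer_ops, 600_000)], NUM_MACS))
```

Under this definition, a whole-network figure averages over every layer, including ones that map poorly onto the hardware, which is one plausible reason a network-level number would sit below the best single-layer number.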

FWDNXT’s hardware and software innovations will be used to drive cars autonomously, to recognize faces for security, and for numerous day-to-day purposes, such as helping people find items on their shopping lists as they walk down a store aisle or helping smart appliances recognize a user’s preferences.

Culurciello says machine learning has advanced remarkably in the past five years as computer scientists have turned to specialized chips, rather than relying on a central processing unit, to handle more complex computing. Such hardware “can operate on large data very fast with low power consumption,” he says. “We want to have the maximum performance with the minimal energy.”

The FWDNXT team includes graduate students Ali Zaidy, the lead architect and designer of Snowflake and a deep-learning expert; Abhishek Chaurasia, the team’s lead deep-learning developer; Andre Chang, architect and developer of the deep-learning compiler; and Marko Vitez, neural network optimization wizard.