Teaching machines to pinpoint earthquake damage

Shirley Dyke and Chul Min Yeum are the first to combine computer vision and deep-learning technologies for rapid assessment of structural wreckage.

| Author: | Eric Bender |
|---|---|
| Magazine Section: | Main Feature |
| Article Type: | Feature |

In the first days after a major earthquake, like the one that struck Taiwan in February 2016, engineering teams fly in to assess the damage to the built environment. “The reconnaissance teams collect photographs and data about what’s happened, what structures survived, what structures failed, and they try to understand how to ensure the future performance of our structures can be improved,” says Shirley Dyke, professor of mechanical engineering and civil engineering.

Gathering these data is challenging, exhausting, dangerous and often sad work. It’s also extremely urgent, since the stricken community needs to repair or remove damaged structures as quickly as possible. Each night, the weary teams examine hundreds or thousands of photos and plan what to collect the next day. “But people can only look at so many,” Dyke says.

“Rather than having teams take several hours trying to organize their data to figure out how to pick up their work the next day, we’d prefer to have them spend less than an hour doing it with our algorithms,” she says.

She and her postdoctoral researcher, Chul Min Yeum, are bringing help in the form of automated image analysis technology that can classify images and zero in on regions of concern. Their work builds on recent advances in computer vision, “deep learning” based on neural network computation, and massively parallel graphics processing unit (GPU) systems that offer great power for analyzing images.

“These software tools are evolving in a way that shows great promise to facilitate and make more efficient the work of engineers and scientists interested in post-event damage assessment,” says Julio Ramirez, professor of civil engineering at Purdue. Ramirez also directs the Network Coordination Office of the National Science Foundation’s Natural Hazards Engineering Research Infrastructure initiative.

Systematic views of destruction

Reconnaissance teams follow a systematic procedure to collect data at an earthquake scene. The researchers take photos from the outside of buildings (and the inside if safe) and gather GPS coordinates, along with related information such as architectural drawings, dimension measurements and images from drones or other sources if those are available. These data are critical for the evolution of structural design procedures and building-design codes that enable infrastructure to withstand future hazards.

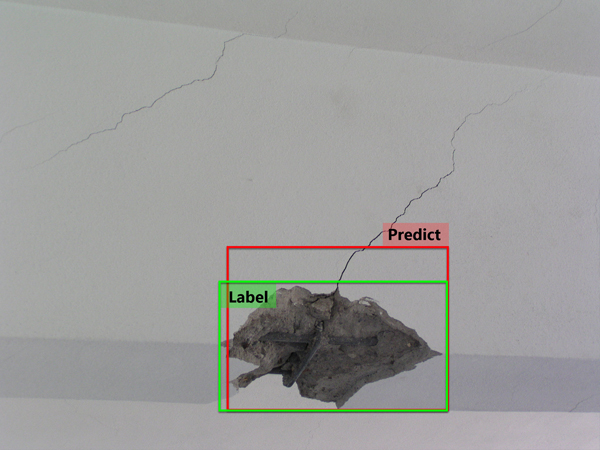

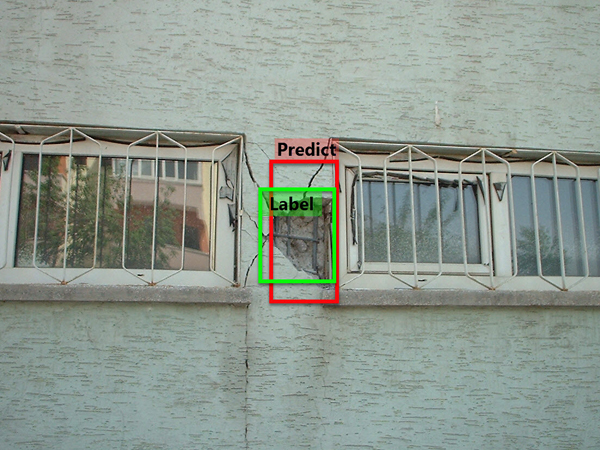

Dyke’s project builds on work by Yeum both in computer-vision technologies and in deep-learning methods to extend those technologies. Deep learning draws on neural network techniques and large data sets to create algorithms for assessing the data — in this case, the images of earthquake damage. “Deep learning has been applied to many everyday image classification situations, but as far as we know, we’re the first to employ it for the damage assessment problems we’re trying to solve,” Yeum says.

A three-year National Science Foundation grant of $299,000 funds the project. Bedrich Benes, professor of computer graphics technology, and Thomas Hacker, professor of computer and information technology, are co-investigators.

(Photo: Mark Simons, Purdue University)

Training for deep learning

The image analysis uses visual data to identify structures in which, for example, a column might be buckled or show spalling (in which pieces of concrete chip off structural elements due to large tensile stresses). “Mostly we want to automatically determine if an instance of structural damage is there and use that information to determine if people should be inside the building,” Dyke says.

Training the deep-learning system to do this requires a painstaking process of manually labeling tens of thousands of images (ideally from very diverse sets of data) according to a chosen schema. The computer system then learns about the contents and features of each class and trains the algorithms to find the best ways to identify the areas of interest.
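The label-then-train loop described above can be illustrated at toy scale. The sketch below is not the researchers' system and is not a deep network: it trains a single-layer logistic classifier by gradient descent, and each "image" is reduced to two made-up numeric features. The feature meanings, data set sizes and function names are all assumptions chosen for illustration.

```python
import math
import random

def make_dataset(n, seed=0):
    """Build hypothetical labeled examples: (features, label) pairs.

    Each example stands in for one hand-labeled photo, reduced to two
    invented features. Label 1 means "damaged", 0 means "intact".
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 0.7 if label else 0.3
        features = [rng.gauss(center, 0.1), rng.gauss(center, 0.1)]
        data.append((features, label))
    return data

def predict(weights, bias, features):
    """Return the estimated probability that the example shows damage."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=200, lr=0.5):
    """Fit weights by stochastic gradient descent on the log loss."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

def accuracy(weights, bias, data):
    """Fraction of examples whose thresholded prediction matches the label."""
    hits = sum((predict(weights, bias, f) >= 0.5) == bool(y) for f, y in data)
    return hits / len(data)

train_set = make_dataset(200, seed=1)   # stands in for the labeled images
test_set = make_dataset(100, seed=2)    # held out to check generalization
weights, bias = train(train_set)
print(f"held-out accuracy: {accuracy(weights, bias, test_set):.2f}")
```

A real system would learn its features directly from pixels and would need far more labeled data, which is why the manual labeling step is so costly.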

So far, Dyke and Yeum have gathered about 100,000 digital images for training the system, contributed by researchers and practitioners around the world. Most of these are from past earthquakes, but images from other hazards, such as tornadoes and hurricanes, have also been collected. Many images came, for instance, from the Taiwan earthquake, and were gathered by Santiago Pujol, a Purdue professor of civil engineering who brought a team of nine Purdue faculty and students to survey the damage.

“When we ask earthquake researchers for their image data from these missions, typically they give us about 50 images, and we go back to them and say, we really need all of your data,” Dyke says. “Eventually it trickles out that they have thousands of images sitting on their hard drive. Our research is trying to teach the computer to determine automatically why they took 5,000 images and pulled out those 50. Then all of the data can inform future decisions.

“We will seek opportunities to test our system in the field, perhaps working with researchers that are examining the tens of thousands of buildings exposed to significant ground motions in Italy in the past months,” Dyke says. “The classifiers trained using our database of past images would be applied to new images collected on site to directly support teams in the field.”
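One way a trained classifier might support a field team is by ranking each day's photos so the likeliest damage is reviewed first. The sketch below assumes the classifier has already produced a damage probability for each image. The file names, scores, threshold and the `triage` helper are hypothetical.

```python
def triage(scores, threshold=0.5, top_k=3):
    """Return up to top_k (file, score) pairs at or above the threshold,
    ordered from most to least likely to show damage."""
    flagged = [(name, p) for name, p in scores.items() if p >= threshold]
    flagged.sort(key=lambda item: item[1], reverse=True)
    return flagged[:top_k]

# Hypothetical classifier outputs for one day's photos.
scores = {
    "img_0001.jpg": 0.08,
    "img_0002.jpg": 0.91,
    "img_0003.jpg": 0.47,
    "img_0004.jpg": 0.76,
    "img_0005.jpg": 0.99,
}

for name, score in triage(scores):
    print(name, score)
```

The threshold and the number of images surfaced are choices a team would tune against how many photos its members can realistically inspect in an evening.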

(Image source: DataHub.)

Definitions for damage

In previous work, Dyke has explored using field disaster data sets maintained at Purdue’s Center for Earthquake Engineering and Disaster Data (CrEEDD), which is led by Pujol. She wants the new deep-learning tools to accelerate the progress being made around the world by the vast numbers of researchers who contribute to and work with CrEEDD data.

Building tools for common use by this diverse population of researchers has called for a parallel effort: creating common and rigorously defined terminology and annotation methods to describe structural damage.

Currently, engineering groups evaluating damage caused by the same event may employ a wide variety of terms to describe images of earthquake damage, and that impedes the identification and analysis of data sets with which they are not familiar. Keyword searches may not get the results that researchers expect, for example. “We want to unify the classifiers used to organize the visual data and make it very easy for a broad range of researchers to access, which no one else has done,” Yeum says.
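The keyword problem can be made concrete with a small sketch. Assume each team tags its photos with its own free-text term, and a shared lookup table maps those terms to one agreed label. The table, labels, file names and helper functions below are invented for illustration and do not come from the project.

```python
# Hypothetical synonym table: each team's free-text term maps to one shared label.
SHARED_LABELS = {
    "spalling": "spalling",
    "concrete chipping": "spalling",
    "buckled column": "column_buckling",
    "column buckling": "column_buckling",
    "collapsed": "collapse",
    "collapse": "collapse",
}

def normalize(term):
    """Map a free-text term to its shared label, or 'unmapped' if unknown."""
    key = " ".join(term.lower().split())  # ignore case and extra spaces
    return SHARED_LABELS.get(key, "unmapped")

def search(records, label):
    """Return files whose term normalizes to the requested shared label."""
    return [r["file"] for r in records if normalize(r["term"]) == label]

# Made-up records from three teams describing the same event.
records = [
    {"file": "team_a_001.jpg", "term": "Spalling"},
    {"file": "team_b_117.jpg", "term": "concrete  chipping"},
    {"file": "team_b_118.jpg", "term": "Buckled column"},
    {"file": "team_c_042.jpg", "term": "hairline crack"},
]

print(search(records, "spalling"))
```

A plain keyword search for "spalling" would miss the second photo. Searching on the shared label finds both, and the "unmapped" result shows which terms the common vocabulary still needs to cover.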

“As we develop the methodologies to organize the data, we are engaging researchers who deal with building codes, so that the classifiers actually can be used by people around the world,” Dyke adds.

With a common classification scheme in place, “using the same tools, I could look at an earthquake’s effects on reinforced concrete structures, and a social scientist might look at the impacts on housing and services,” Ramirez notes.

“We’re trying to learn the lessons from data collected at really high cost in human life and economic cost, and use that information to make structures that will be more resilient to future events,” he says. “We’re also trying to help the communities come back more quickly. At the end of the day, we want to help people who are in very difficult times.”

(Photo: Mark Simons, Purdue University)