Spotting and identifying satellites from Earth is difficult. Not only are they far away and very small, but getting a meaningful amount of detail requires a high-end telescope with a large opening (aperture). Never mind the pesky problems of atmospheric blurring, light pollution and clouds, the bane of every amateur astronomer. Spotting smaller pieces of orbital debris is even harder.

But what if you could send up a satellite to take pictures up close? And, rather than sending back just those pictures, which takes a lot of bandwidth, could it be smart enough to generate a 3D model of what it saw, calculate how that object is oriented in space and where it is headed, and beam that information down to Earth for people to study?

That’s the goal of the Boeing-funded Relative Navigation Project that Associate Professor Carolin Frueh has tackled for the past two years. Together with PhD student Daigo Kobayashi, Frueh worked to design a hardware-in-the-loop testbed that can calculate the shape, orientation (attitude motion) and trajectory of another space object, be it a live satellite, a derelict satellite or just space junk.

Their technique is a variation of Simultaneous Localization and Mapping (SLAM) — which is how autonomous vehicles figure out where they are and where they’re going. But space missions have strict power, size and weight requirements, meaning Frueh couldn’t rely on advanced sensors like LiDAR, infrared or multiple color cameras that capture depth information.

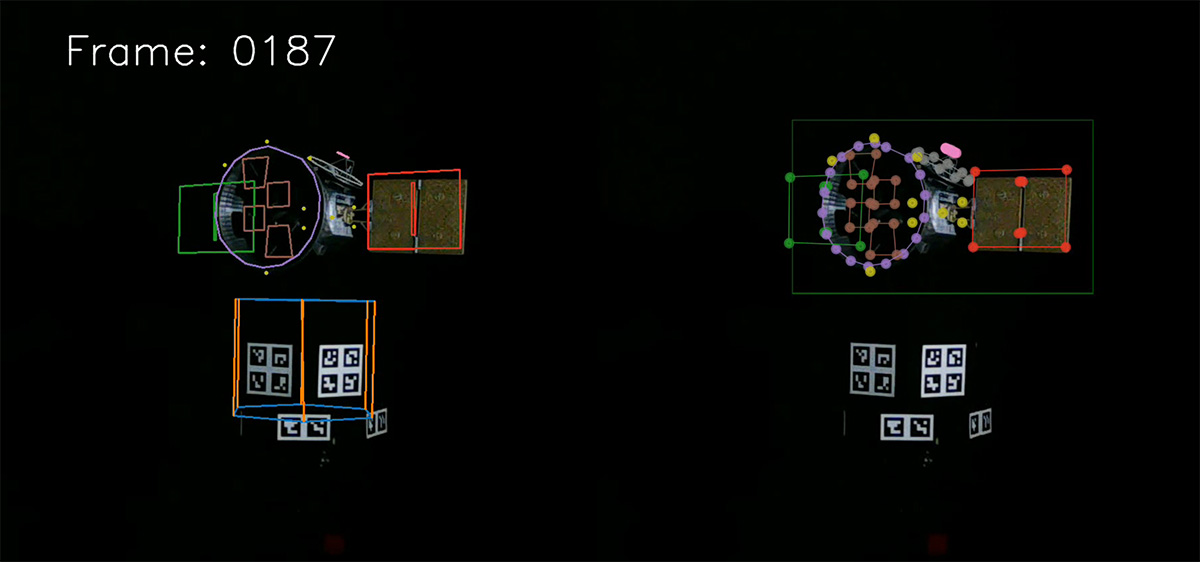

In lab experiments, the software devised by Frueh and Kobayashi was able to identify and follow the major shapes in the TESS satellite model as it rotated about a tilted vertical axis. ArUco markers attached to the model’s rotating support were used for a “ground truth” comparison. In some frames, the solution calculated from images alone appears more accurate than the ground truth solution.

Frueh and Kobayashi would have to get by with just one monochrome camera. The kicker was to design the software to be simple enough to run on a low-power microcomputer. The kind that might fit on a cubesat.

“The true application here is to check out satellites and get independent information about their operational state and operational health,” Frueh says. But she also envisions broader applications for a system like this.

“There is a lot of interest in collecting debris in space. This could be used to help identify what that debris is and if it’s worth collecting. One can tell a non-responsive satellite by whether its solar panels are deployed or pointed towards the sun, or if it’s tumbling.”

Frueh had a head start in designing the software: She specializes in orbital dynamics and debris tracking. Her previous work includes programs that identify and characterize objects in orbit based on their reflections as seen from Earth.

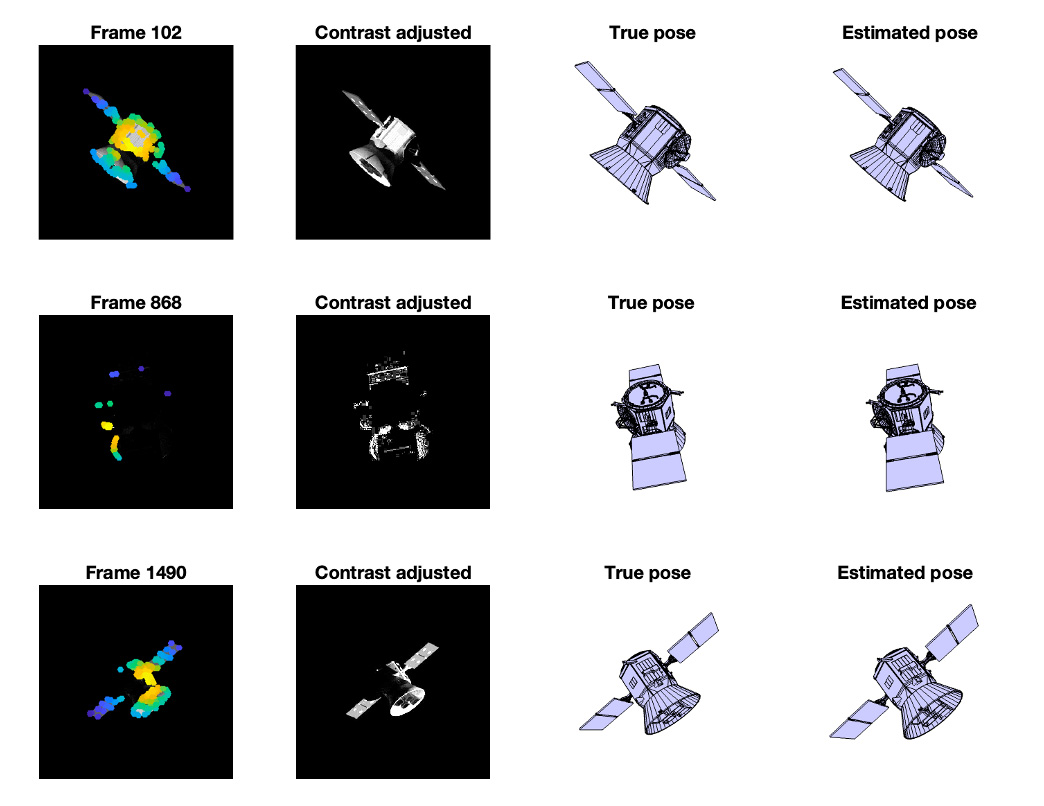

This image shows a satellite's estimated position based on low-quality pictures side-by-side with the actual position of the satellite.

That kind of expertise is what brings partners like Boeing to work with Purdue, says Joe Krok, executive director of the Office of Industry Partnerships.

“Boeing comes to Purdue because together we have built a long-term, mutually beneficial, productive partnership. Purdue has a deep bench of talent among students and faculty, we have a business-friendly approach to contracting, and we bring world-class capability in a broad range of disciplines,” Krok says.

Frueh’s novel challenge in this project was to develop her previous work into a similar, but scaled-down, image analysis tool for resolved images. In the first phase of this project, their software design was tested with simulated pictures.

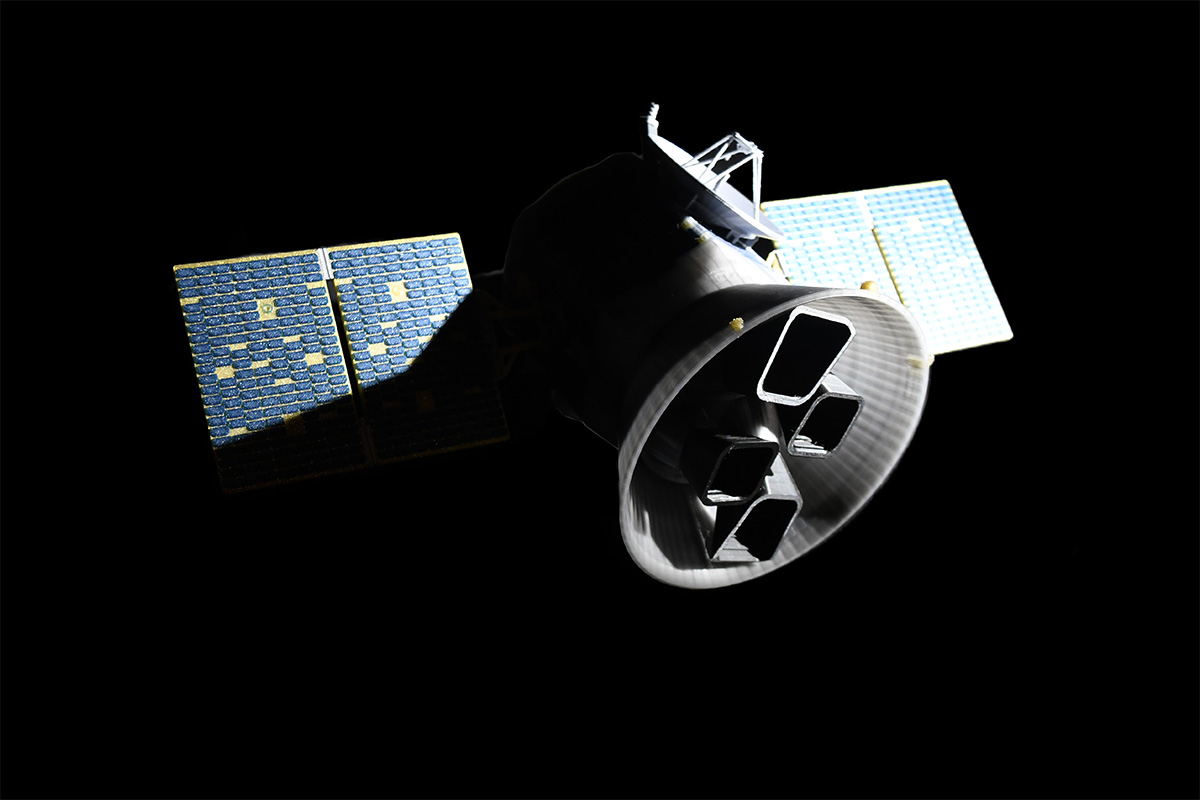

A detailed model of NASA’s TESS satellite is being used to refine lightweight software that can identify objects in low Earth orbit. This photo shows some of the challenges that the software faces: Dark shadows obscure some parts of the satellite, and brightly lit areas may get washed out and lose detail.

Phase 2 took her work into the realm of real-world experiments, making use of her Space Information Dynamics Lab.

The SID Lab space is a windowless room inside Armstrong Hall. With the door shut, it’s pitch black inside. Frueh and Kobayashi have precise control of all light sources in that room, making it the perfect place to simulate the depth of space, and a great photo studio.

A hyper-realistic satellite model would serve to test the system’s capabilities. Frueh reached out to Dailey Productions, experts in additive manufacturing, to 3D-print a scale model of NASA’s Transiting Exoplanet Survey Satellite (TESS).

Giving themselves no advantages, Frueh and Kobayashi got one of the cheapest webcam models they could find and connected it to a Raspberry Pi, an extremely low-cost, single-board computer about the size of a credit card.

Frueh’s Earth-bound webcam would take grainy, grayscale pictures of an extremely detailed satellite model.

Additional complicating factors were added to test the strength of the algorithm. Frueh’s program had to contend with hot and cold pixels, perspective projection errors, stray light from the sun and moon and more.
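One of those complications is easy to picture in code. The following is a minimal sketch, not the lab’s software: it forces a small share of pixels in a grayscale frame to full brightness (hot) or to black (cold). The function name `add_hot_cold_pixels`, the 2 percent fraction and the flat gray test frame are all assumptions made for illustration.

```python
import random


def add_hot_cold_pixels(image, fraction=0.01, seed=0):
    """Return a copy of a grayscale image with some stuck pixels.

    `image` is a list of rows of integers in 0..255. About `fraction`
    of the pixels are forced to 255 (hot) or 0 (cold), alternating.
    The fraction and the alternation are illustrative assumptions.
    """
    rng = random.Random(seed)            # seeded so runs are repeatable
    height, width = len(image), len(image[0])
    noisy = [row[:] for row in image]    # copy; the input stays untouched
    n_bad = int(fraction * height * width)
    # Pick distinct pixel positions, then alternate hot and cold.
    for k, idx in enumerate(rng.sample(range(height * width), n_bad)):
        r, c = divmod(idx, width)
        noisy[r][c] = 255 if k % 2 == 0 else 0
    return noisy


# Hypothetical test frame: 48 rows by 64 columns of flat mid-gray.
frame = [[128] * 64 for _ in range(48)]
degraded = add_hot_cold_pixels(frame, fraction=0.02)
changed = sum(p != 128 for row in degraded for p in row)
print(changed, "pixels were replaced")
```

Feeding frames degraded this way into an image analysis routine is one simple way to see how much sensor noise it tolerates before it loses track of a shape.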

Graduate student Daigo Kobayashi reviews the calculated ground truth based on their lab’s webcam output.

They attached helper shapes, called ArUco markers, to the model’s rotating platform to train and improve the software model and fuse it with gyro unit measurements. ArUco markers are common in augmented reality systems, where they help to correctly overlay renderings onto the real world by correcting for effects like camera position and lens distortion. Gyros are traditionally used in onboard attitude estimation. The attitude solution provides feedback to train their image analysis model against the ArUco-based “ground truth.”

Putting their computations to work, Frueh and Kobayashi’s software was successful in finding the satellite’s calculated key points and tracking the satellite’s major shapes through multiple rotations about vertical and tilted axes.
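The geometry of such a test, a key point on a model turning about a tilted axis while a single camera watches, can be sketched in a few lines of Python. This is an illustration under assumed values, not the researchers’ software: the 15-degree tilt, the focal length, the image center, the camera distance and the function names `rotate_about_axis` and `project` are all hypothetical. The rotation uses Rodrigues’ formula and the camera is an idealized pinhole with no lens distortion.

```python
import math


def rotate_about_axis(point, axis, angle):
    """Rotate a 3D point about a unit axis through the origin.

    Uses Rodrigues' formula:
    v' = v cos(a) + (k x v) sin(a) + k (k . v) (1 - cos(a)).
    """
    x, y, z = point
    ux, uy, uz = axis
    c, s = math.cos(angle), math.sin(angle)
    dot = ux * x + uy * y + uz * z
    cross = (uy * z - uz * y, uz * x - ux * z, ux * y - uy * x)
    return (
        x * c + cross[0] * s + ux * dot * (1 - c),
        y * c + cross[1] * s + uy * dot * (1 - c),
        z * c + cross[2] * s + uz * dot * (1 - c),
    )


def project(point, focal_px, cx, cy, distance):
    """Idealized pinhole projection onto pixel coordinates.

    The camera looks along +z and sits `distance` in front of the
    model's origin, so a point's depth is z + distance.
    """
    x, y, z = point
    depth = z + distance
    return (cx + focal_px * x / depth, cy + focal_px * y / depth)


# Assumed setup: a vertical axis tilted 15 degrees toward x.
tilt = math.radians(15)
axis = (math.sin(tilt), math.cos(tilt), 0.0)
corner = (0.2, 0.1, 0.0)   # hypothetical key point on the model, in meters

# Pixel track of that key point over one full turn, in 30-degree steps.
track = [
    project(rotate_about_axis(corner, axis, math.radians(d)),
            focal_px=800.0, cx=320.0, cy=240.0, distance=2.0)
    for d in range(0, 360, 30)
]
for u, v in track:
    print(f"{u:7.2f} {v:7.2f}")
```

An estimator like the one described here works in the opposite direction: it starts from the pixel track and tries to recover the shape and rotation that produced it.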

“We’re very pleased with these results,” Frueh says, noting that Boeing has renewed the project to continue into 2025.

“We have succeeded in producing a light and effective program that can estimate the shape, orientation, position and trajectory of space objects using very limited information and computational power. I’m excited to see how we can put this to use in our future research.”