Texture-based Video Coding and Motion Analysis for Video Compression

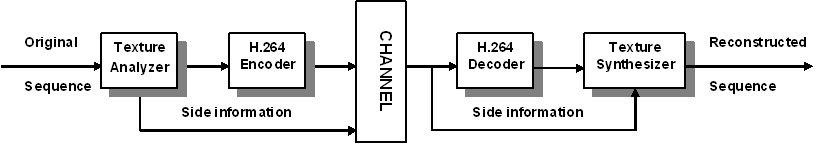

Video Coding techniques are a major research path on the image processing and visual communications fields. In recent years, a growing interest in developing novel techniques for increasing the coding efficiency of video compression methods has taken place in the video coding field. In this project we integrate several spatial texture tools into a texture-based video coding scheme in order to detect texture regions in video sequences. These textures are analyzed using temporal motion techniques and are labeled as skipped areas that are not encoded. After the decoding process, frame reconstruction is performed by inserting the skipped texture areas into the decoded frames. We investigated new techniques to prevent some segmentation errors caused in the texture-based performance. We studied the spatial and temporal properties of dynamic or non-rigid textures in order to detect a wider range of textures. Finally, we studied a novel approach inspired by the texture-based video coding scheme, we considered human eye motion perception properties and tracker detection properties instead of spatial properties of the video sequence.

This research is led by Prof. Edward J. Delp at the Video and Image Processing Laboratory (VIPER).

Example of texture (water) analysis and detection

- Feature extraction is used to measure local texture properties in an image. In this project we investigated the following techniques:

- Segmentation. Once the texture features are extracted and feature vectors are formed, texture segmentation then groups regions in an image that have similar texture properties.

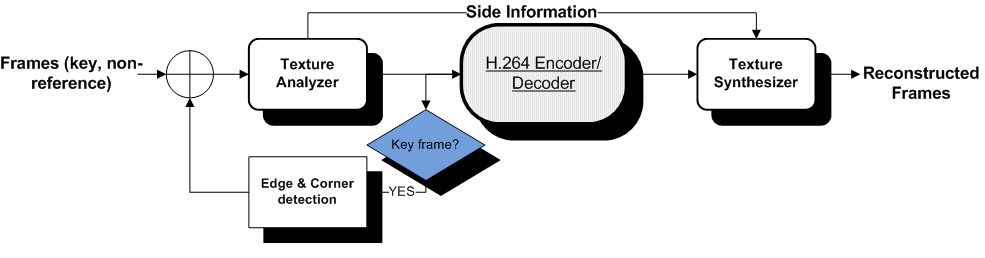

The spatial texture models referred above operate on each frame of a given sequence independently of the other frames of the same sequence. This may yield to an inconsistency segmentation across the sequence. To maintain temporal consistency of the texture regions, they are warped from frame-to-frame using a motion model to provide temporal consistency in the segmentation. The mapping is based on a global motion assumption for every texture region in the frame, i.e., the displacement of the entire region can be described by just one set motion parameters. We modified a 8-parameter (i.e. planar perspective) motion model to compensate the global motion. The motion parameters are estimated using a simplified implementation of a robust M-estimator for global motion estimation and sent as side information to the synthesizer.

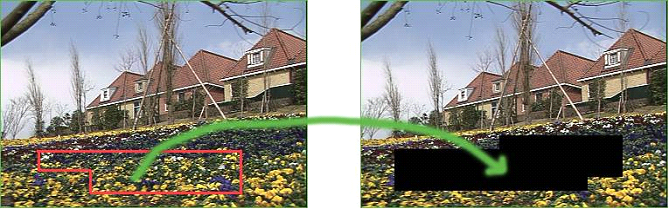

At the decoder, key frames and non-synthesizable parts of other frames are conventionally decoded. The remaining parts labeled as synthesizable regions are skipped by the encoder and their values remain blank in the conventional coding process. The texture synthesizer is then used to reconstruct the corresponding missing pixels. With the assumption that the frame to frame motion can be described using a planar perspective model, then given the motion parameter set and the control parameter that indicated which frame (first or last frame of the GoF) is used as the key frame, the texture regions can be reconstructed by warping the texture from the key frame toward each synthesizable texture region identified by the texture analyzer.

For every texture region in each of the middle frames, the texture analyzer looked for similar textures in both reference frames. The corresponding area (if it can be found in at least one of the reference frames) was then mapped into the segmented texture region based on a global motion model. When a B frame contained identified synthesizable texture regions, the corresponding segmentation masks, motion parameters as well as the control flag to indicate which reference frame was used were transmitted as side information to the decoder. All macroblocks belonging to a synthesizable texture region were handled as skipped macroblocks in the H.264/AVC reference software. Hence, all parameters and variables used for decoding the macroblocks inside the slice, in decoder order, were set as specified for skipped macroblocks. After all macroblocks of a current frame were completely decoded, texture synthesis was performed in which macroblocks belonging to a synthesizable texture region were replaced with the textures identified in the corresponding reference frame.

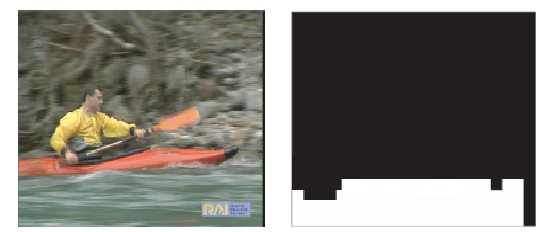

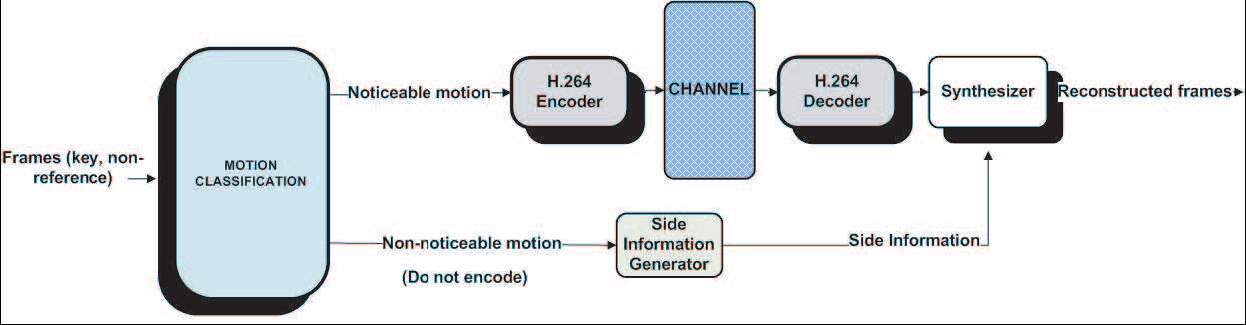

Inspired by the texture-based scheme, we consider human eye motion perception and tracker detection properties instead of spatial properties of the video sequence. We integrate a motion classification algorithm to separate foreground (noticeable motion) from background (non-noticeable motion) objects. These background areas are labeled as skipped areas that are not encoded. After the decoding process, frame reconstruction is performed by inserting the skipped background into the decoded frames. We are able to show an improvement over previous texture-based implementations in terms of video compression efficiency.

The complete list of recent publications in Image and Video Coding in the Video and Image Processing Laboratory (VIPER).

Address all comments and questions to Professor Edward J. Delp.