Abstract

An integrated, computer vision-based system was developed to operate a commercial wheelchair-mounted robotic manipulator (WMRM). In this paper, a gesture recognition interface developed specifically for individuals with upper-level spinal cord injuries (SCIs) was combined with object tracking and face recognition systems to form an efficient, hands-free WMRM controller. In this test system, two Kinect cameras were used synergistically to perform a variety of simple object retrieval tasks. One camera was used to interpret hand gestures, which were sent as commands to control the WMRM, and to locate the operator’s face for object positioning. The other was used to automatically recognize different daily living objects for test subjects to select. The gesture recognition interface incorporated hand detection, tracking, and recognition algorithms and achieved a recognition accuracy of 97.5% for an eight-gesture lexicon. An object recognition module employing the Speeded Up Robust Features (SURF) algorithm was implemented, and its recognition results were sent as commands for “coarse positioning” of the robotic arm near the selected daily living object. Automatic face detection was also provided as a shortcut for subjects to bring objects to the face using the WMRM. Timed task-completion experiments were conducted to compare manual (gestures only) and semi-manual (gestures, automatic face detection, and object recognition) WMRM control modes. The use of automatic face and object detection significantly improved the completion times for retrieving a variety of daily living objects.

System Architecture

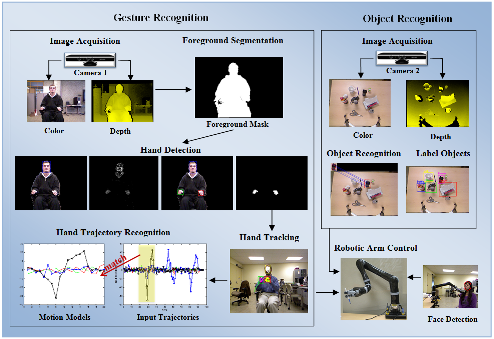

The architecture of the proposed system is illustrated in Figure 1. Two Kinect® video cameras were employed and served as inputs for the gesture recognition and object detection modules, respectively. The results of these two modules were then passed as commands to the execution module to control the JACO robotic arm (Kinova, Inc., Montréal, Canada). The modules are briefly described as follows:

A. Gesture Recognition Module

The video input from the Kinect camera was processed in four stages for gesture recognition-based WMRM control: foreground segmentation, hand detection, hand tracking, and hand trajectory recognition. Foreground segmentation was used to increase computational efficiency by reducing the search range for hand detection and the later stages. The face and hands were detected in the foreground, which provided an initialization region for the hand tracking stage. The tracked trajectories were then segmented, compared to pre-constructed motion models, and classified into gesture groups. The recognized gesture was then encoded and passed as a command to control the WMRM.
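
The final stage, in which a tracked trajectory is compared against pre-constructed motion models, can be illustrated with a minimal sketch. This is not the authors' classifier: it assumes a simple nearest-model rule on resampled, normalized 2D trajectories. The gesture labels in `MODELS` and the helper names are hypothetical.

```python
import math

def resample(points, n=16):
    """Resample a 2D trajectory to n points evenly spaced along its arc length."""
    dists = [0.0]  # cumulative arc length at each input point
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dists.append(dists[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dists[-1]
    if len(points) < 2 or total == 0:
        return [points[0]] * n
    out, j = [], 0
    for i in range(n):
        target = total * i / (n - 1)
        while j < len(points) - 2 and dists[j + 1] < target:
            j += 1
        seg = dists[j + 1] - dists[j]
        t = 0.0 if seg == 0 else (target - dists[j]) / seg
        out.append((points[j][0] + t * (points[j + 1][0] - points[j][0]),
                    points[j][1] + t * (points[j + 1][1] - points[j][1])))
    return out

def normalize(points):
    """Center the trajectory on its centroid and scale it to fit in [-1, 1]."""
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    centered = [(x - cx, y - cy) for x, y in points]
    scale = max(max(abs(x), abs(y)) for x, y in centered) or 1.0
    return [(x / scale, y / scale) for x, y in centered]

def mean_distance(a, b):
    """Average point-to-point distance between two equal-length trajectories."""
    return sum(math.hypot(ax - bx, ay - by)
               for (ax, ay), (bx, by) in zip(a, b)) / len(a)

def classify(trajectory, models, n=16):
    """Return (label, distance) of the motion model closest to the trajectory."""
    query = normalize(resample(trajectory, n))
    best_label, best_dist = None, float("inf")
    for label, model in models.items():
        d = mean_distance(query, normalize(resample(model, n)))
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label, best_dist

# Hypothetical motion models (y axis pointing up); not the paper's lexicon.
MODELS = {
    "right": [(0, 0), (1, 0)],
    "left": [(1, 0), (0, 0)],
    "up": [(0, 0), (0, 1)],
    "down": [(0, 1), (0, 0)],
}

label, dist = classify([(10, 5), (14, 5.2), (20, 4.9)], MODELS)
print(label)
```

Normalizing position and scale before comparison makes the match independent of where in the frame, and how large, the gesture was performed. A real system would also need a rejection threshold on the distance so that non-gesture motion is ignored.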

B. Object Recognition Module

The goal of the object recognition module was to detect the different daily living objects and assign a unique identifier to each of them. A template was created for each object to be recognized. These templates were compared to each frame in the video sequence to obtain the best-matching object. The results were then encoded and passed as commands to position the robotic manipulator.
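
The template-to-frame comparison can be sketched as follows. The sketch assumes that feature descriptors have already been extracted for each template and for the current frame, and it scores each template with a nearest-neighbour ratio test, a common choice for descriptor matching that the text above does not confirm. The object identifiers, the toy 3-dimensional descriptors, and the `ratio` and `min_matches` values are all hypothetical.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def count_good_matches(template_desc, frame_desc, ratio=0.7):
    """Count template descriptors whose nearest frame descriptor is
    clearly closer than the second nearest (ratio test)."""
    good = 0
    for d in template_desc:
        dists = sorted(euclidean(d, f) for f in frame_desc)
        if len(dists) >= 2 and dists[0] < ratio * dists[1]:
            good += 1
    return good

def best_matching_object(templates, frame_desc, min_matches=2):
    """Return (object_id, score) for the best template, or (None, score)
    when no template reaches min_matches."""
    best_id, best_score = None, 0
    for object_id, desc in templates.items():
        score = count_good_matches(desc, frame_desc)
        if score > best_score:
            best_id, best_score = object_id, score
    if best_score < min_matches:
        return None, best_score
    return best_id, best_score

# Toy descriptors for illustration only; real descriptors are much longer.
TEMPLATES = {
    "cup": [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    "phone": [(5.0, 5.0, 0.0), (5.0, 0.0, 5.0), (0.0, 5.0, 5.0)],
}
FRAME = [(1.02, 0.01, 0.0), (0.0, 0.98, 0.03), (0.01, 0.0, 1.01), (9.0, 9.0, 9.0)]

print(best_matching_object(TEMPLATES, FRAME))
```

Returning `None` below a minimum match count keeps the module from issuing a positioning command when none of the known objects is actually in view.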

C. Automatic Face Detection Module

A face detector was employed in this module to perform automatic face detection. The goal was to provide a shortcut for subjects to position objects in front of the face using the robotic arm.

D. Execution Module

Control of the robotic arm was implemented as a wrapper around the JACO API in C#, which was then called by the main program. The JACO robotic arm was mounted on the seat frame of a motorized wheelchair. The robotic arm was controlled by the encoded commands from the gesture recognition, automatic face detection, and object recognition modules.
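
How encoded commands from the three modules might be routed to the arm wrapper is sketched below. The original wrapper was written in C# against the JACO API; this Python sketch does not reproduce that API. `Command`, `Source`, `LoggingArm`, and its methods are hypothetical names, and the stand-in arm only records calls instead of moving hardware.

```python
from dataclasses import dataclass
from enum import Enum

class Source(Enum):
    """Module that produced a command."""
    GESTURE = "gesture"
    OBJECT = "object"
    FACE = "face"

@dataclass(frozen=True)
class Command:
    source: Source
    code: str = ""  # e.g. a gesture label or an object identifier

class LoggingArm:
    """Stand-in for the arm wrapper; logs each requested action."""
    def __init__(self):
        self.log = []

    def jog(self, direction):
        self.log.append(("jog", direction))

    def move_near_object(self, object_id):
        self.log.append(("move_near_object", object_id))

    def move_to_face(self):
        self.log.append(("move_to_face",))

def execute(command, arm):
    """Route one encoded command to the matching arm action."""
    if command.source is Source.GESTURE:
        arm.jog(command.code)               # manual control by gesture
    elif command.source is Source.OBJECT:
        arm.move_near_object(command.code)  # coarse positioning near an object
    elif command.source is Source.FACE:
        arm.move_to_face()                  # shortcut: bring object to the face
    else:
        raise ValueError(f"unknown command source: {command.source!r}")

arm = LoggingArm()
for cmd in (Command(Source.OBJECT, "cup"),
            Command(Source.GESTURE, "up"),
            Command(Source.FACE)):
    execute(cmd, arm)
print(arm.log)
```

Keeping the dispatch separate from the arm interface means the recognition modules can be exercised against a logging stand-in before being connected to the real manipulator.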

Fig. 1. System Architecture