WukLab

Datacenter Resource Disaggregation

Datacenters have used a monolithic server model for decades, where each server has a motherboard that hosts all types of hardware resources, usually including a processor, memory chips, storage devices, and network cards. This monolithic architecture is easy to deploy but is inflexible in terms of resource utilization, new hardware device integration, and failure handling. We are rethinking datacenter hardware and software systems, including disaggregated hardware architecture, disaggregated operating systems, remote memory (and non-volatile memory) systems, and distributed (non-volatile) memory systems.

Hardware Approach to Building OS Functionalities

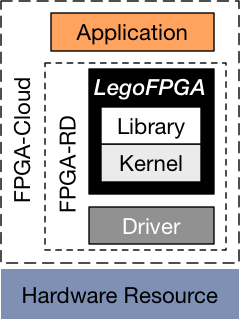

An operating system manages and virtualizes hardware, and it has traditionally been implemented in software. Two recent trends make it appealing to implement OS functionalities in hardware. First, datacenter resource disaggregation separates hardware resources into network-attached, stand-alone devices that applications access remotely. Second, many cloud providers now offer hardware-based accelerators such as FPGAs as a service. Applications in both cases need virtualized and protected access to hardware resources, and datacenters should support these applications with solutions that offer good performance per dollar.

We built LegoFPGA, an FPGA-based platform that manages and virtualizes hardware resources both for remote applications and for applications running locally on the FPGA. LegoFPGA delivers hardware-like performance and software-like flexibility at relatively low cost. We implemented a set of OS functionalities to manage on-device memory and the device network interface. Using these functionalities, we built a virtualized remote memory system and a remote key-value store system. These systems deliver performance at or close to network line rate with small FPGA area usage.

Network for Resource Disaggregation

The need to access remote resources and to access them fast demands a new network system for future disaggregated datacenters. We are building LegoNET, a new network system designed for adding disaggregated resources to existing datacenters in a non-disruptive way, while delivering low-latency, high-throughput performance and a flexible, easy-to-use interface.

Disaggregated Operating System

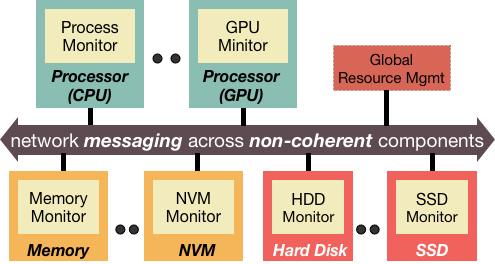

The monolithic server model where a server is the unit of deployment, operation, and failure is meeting its limits in the face of several recent hardware and application trends. To improve resource utilization, elasticity, heterogeneity, and failure handling in datacenters, we believe that datacenters should break monolithic servers into disaggregated, network-attached hardware components. Despite the promising benefits of hardware resource disaggregation, no existing OSes or software systems can properly manage it.

We propose a new OS model called the splitkernel to manage disaggregated systems. A splitkernel disseminates traditional OS functionalities into loosely-coupled monitors, each of which runs on and manages a hardware component. A splitkernel also performs resource allocation and failure handling for a distributed set of hardware components. Using the splitkernel model, we built LegoOS, a new OS designed for hardware resource disaggregation. LegoOS appears to users as a set of distributed servers. Internally, a user application can span multiple processor, memory, and storage hardware components. We implemented LegoOS on x86-64 and evaluated it by emulating hardware components using commodity servers. Our evaluation results show that LegoOS’ performance is comparable to monolithic Linux servers, while substantially improving resource packing and reducing the failure rate over monolithic clusters.
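As a rough illustration of the splitkernel idea, the Python sketch below models one monitor per hardware component and places an application across processor, memory, and storage components. It is a toy under stated assumptions, not LegoOS code: the `Monitor` class, the `place` function, the abstract "units", and the first-fit policy are all hypothetical names and choices made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Monitor:
    """Hypothetical monitor: runs on and manages exactly one hardware component."""
    kind: str        # "processor", "memory", or "storage"
    capacity: int    # abstract resource units (an assumption of this sketch)
    used: int = 0
    alive: bool = True

    def allocate(self, units):
        # A failed or full component refuses the request.
        if not self.alive or self.used + units > self.capacity:
            return False
        self.used += units
        return True


def place(monitors, demand):
    """First-fit placement of one application across independent components.

    Returns {kind: index of the chosen monitor}. If any kind cannot be
    satisfied, earlier allocations are rolled back and RuntimeError is raised.
    """
    placement = {}
    for kind, units in demand.items():
        for index, monitor in enumerate(monitors):
            if monitor.kind == kind and monitor.allocate(units):
                placement[kind] = index
                break
        else:
            # Undo the partial allocations made for this application.
            for done_kind, index in placement.items():
                monitors[index].used -= demand[done_kind]
            raise RuntimeError(f"no {kind} component can hold {units} units")
    return placement


if __name__ == "__main__":
    rack = [
        Monitor("processor", 4),
        Monitor("memory", 8),
        Monitor("memory", 16),
        Monitor("storage", 100),
    ]
    # The application spans three separate components.
    print(place(rack, {"processor": 2, "memory": 10, "storage": 5}))
```

Because each monitor tracks only its own component, marking one memory monitor as failed affects only placements that need that component; the other monitors keep serving requests.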

Disaggregated Persistent Memory

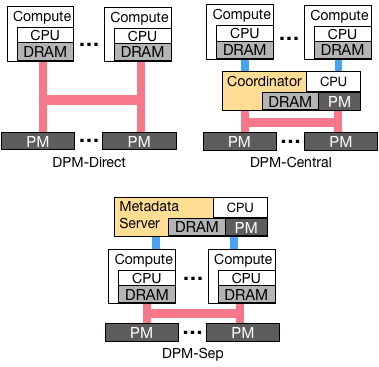

One viable approach to deploy persistent memory (PM) in datacenters is to attach PM as self-contained devices to the network as disaggregated persistent memory, or DPM. DPM requires no changes to existing servers in datacenters; without the need to include a processor, DPM devices are cheap to build; and by sharing DPM across compute servers, they offer great elasticity and efficient resource packing.

We propose three architectures of DPM: 1) compute nodes directly access DPM (DPM-Direct); 2) compute nodes send requests to a coordinator server, which then accesses DPM to complete a request (DPM-Central); and 3) compute nodes directly access DPM for data operations and communicate with a global metadata server for the control plane (DPM-Sep). Based on these architectures, we built three atomic, crash-consistent data stores.
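The difference between the three architectures is mainly who talks to whom. The Python sketch below traces the hops of a single write under each one. It is only an illustration: every class and function name is hypothetical, the DPM device is modeled as a plain dictionary, and the atomicity and crash-consistency mechanisms of the actual data stores are not modeled at all.

```python
class DPMDevice:
    """Toy network-attached persistent-memory device: storage only, no processor."""

    def __init__(self):
        self.cells = {}

    def write(self, addr, value):
        self.cells[addr] = value

    def read(self, addr):
        return self.cells.get(addr)


def put_direct(dpm, key, value):
    """DPM-Direct: the compute node performs the whole operation on DPM itself."""
    dpm.write(key, value)  # assumption: the key doubles as the address
    return ["compute node -> DPM"]


class Coordinator:
    """DPM-Central: a coordinator server accesses DPM on behalf of compute nodes."""

    def __init__(self, dpm):
        self.dpm = dpm

    def handle_put(self, key, value):
        self.dpm.write(key, value)


def put_central(coordinator, key, value):
    coordinator.handle_put(key, value)
    return ["compute node -> coordinator", "coordinator -> DPM"]


class MetadataServer:
    """DPM-Sep: a global metadata server handles the control plane only."""

    def __init__(self):
        self.index = {}  # key -> DPM address

    def locate(self, key):
        # Assign a fresh address the first time a key is seen.
        return self.index.setdefault(key, len(self.index))


def put_sep(metadata, dpm, key, value):
    addr = metadata.locate(key)  # control plane
    dpm.write(addr, value)       # data plane, directly from the compute node
    return ["compute node -> metadata server (control)",
            "compute node -> DPM (data)"]


if __name__ == "__main__":
    print(put_direct(DPMDevice(), 7, "a"))
    print(put_central(Coordinator(DPMDevice()), "k", "v"))
    print(put_sep(MetadataServer(), DPMDevice(), "x", 1))
```

In this toy model, DPM-Central adds a hop to every operation, while DPM-Sep keeps data on the direct path and pays the extra hop only for metadata.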

Kernel-Level Indirection Layer for RDMA

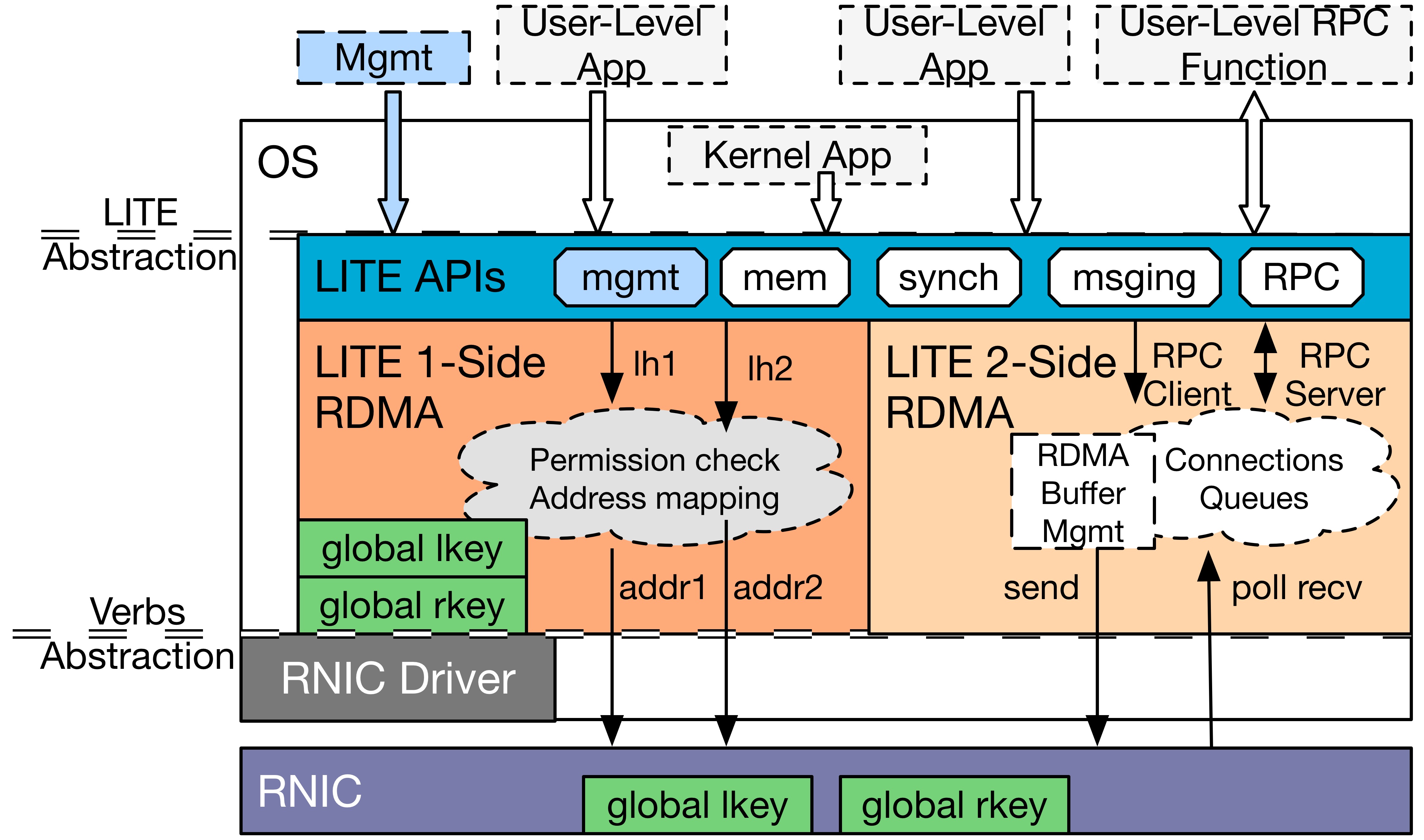

There has been increasing interest in building datacenter applications with RDMA because of its low-latency, high-throughput, and low-CPU-utilization benefits. However, RDMA is not readily suitable for datacenter applications. It lacks a flexible, high-level abstraction; its performance does not scale; and it does not provide resource sharing or flexible protection. Because of these issues, it is difficult to build RDMA-based applications and to exploit RDMA’s performance benefits.

To solve these issues, we built LITE, a Local Indirection TiEr for RDMA in the Linux kernel that virtualizes native RDMA into a flexible, high-level, easy-to-use abstraction and allows applications to safely share resources. Despite the widely held belief that kernel bypassing is essential to RDMA’s low-latency performance, we show that a kernel-level indirection can achieve flexibility and low-latency, scalable performance at the same time.
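To make the notion of an indirection tier concrete, the Python sketch below keeps a table that maps names to memory regions and checks permissions on every access, so applications share regions by name instead of handling raw addresses and keys. This is a conceptual toy, not LITE's interface: the `IndirectionTier` class and its `register`, `grant`, `read`, and `write` methods are hypothetical, and no RDMA or kernel code is involved.

```python
class IndirectionTier:
    """Toy table that mediates access to named memory regions."""

    def __init__(self):
        self._regions = {}  # name -> bytearray backing the region
        self._acl = {}      # (name, app) -> set of permissions, "r" and/or "w"

    def register(self, app, name, size):
        """Create a named region owned by `app` with read and write access."""
        if name in self._regions:
            raise ValueError(f"region {name!r} already exists")
        self._regions[name] = bytearray(size)
        self._acl[(name, app)] = {"r", "w"}

    def grant(self, name, app, perms):
        """Let another application use the region with the given permissions."""
        if name not in self._regions:
            raise KeyError(name)
        self._acl.setdefault((name, app), set()).update(perms)

    def _check(self, app, name, perm, offset, length):
        if perm not in self._acl.get((name, app), set()):
            raise PermissionError(f"{app} lacks {perm!r} on {name!r}")
        if offset < 0 or length < 0 or offset + length > len(self._regions[name]):
            raise IndexError("access outside the region")

    def write(self, app, name, offset, data):
        self._check(app, name, "w", offset, len(data))
        self._regions[name][offset:offset + len(data)] = data

    def read(self, app, name, offset, length):
        self._check(app, name, "r", offset, length)
        return bytes(self._regions[name][offset:offset + length])


if __name__ == "__main__":
    tier = IndirectionTier()
    tier.register("appA", "shared-log", 16)
    tier.write("appA", "shared-log", 0, b"hi")
    tier.grant("shared-log", "appB", "r")
    print(tier.read("appB", "shared-log", 0, 2))
```

Placing the table in one trusted layer is what allows several applications to share the same region safely: protection is enforced at the lookup rather than by each application.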

Distributed Shared Persistent Memory

NVMs have the potential to greatly improve the performance and reliability of large-scale applications in datacenters. However, it is still unclear how to best utilize them in distributed, datacenter environments.

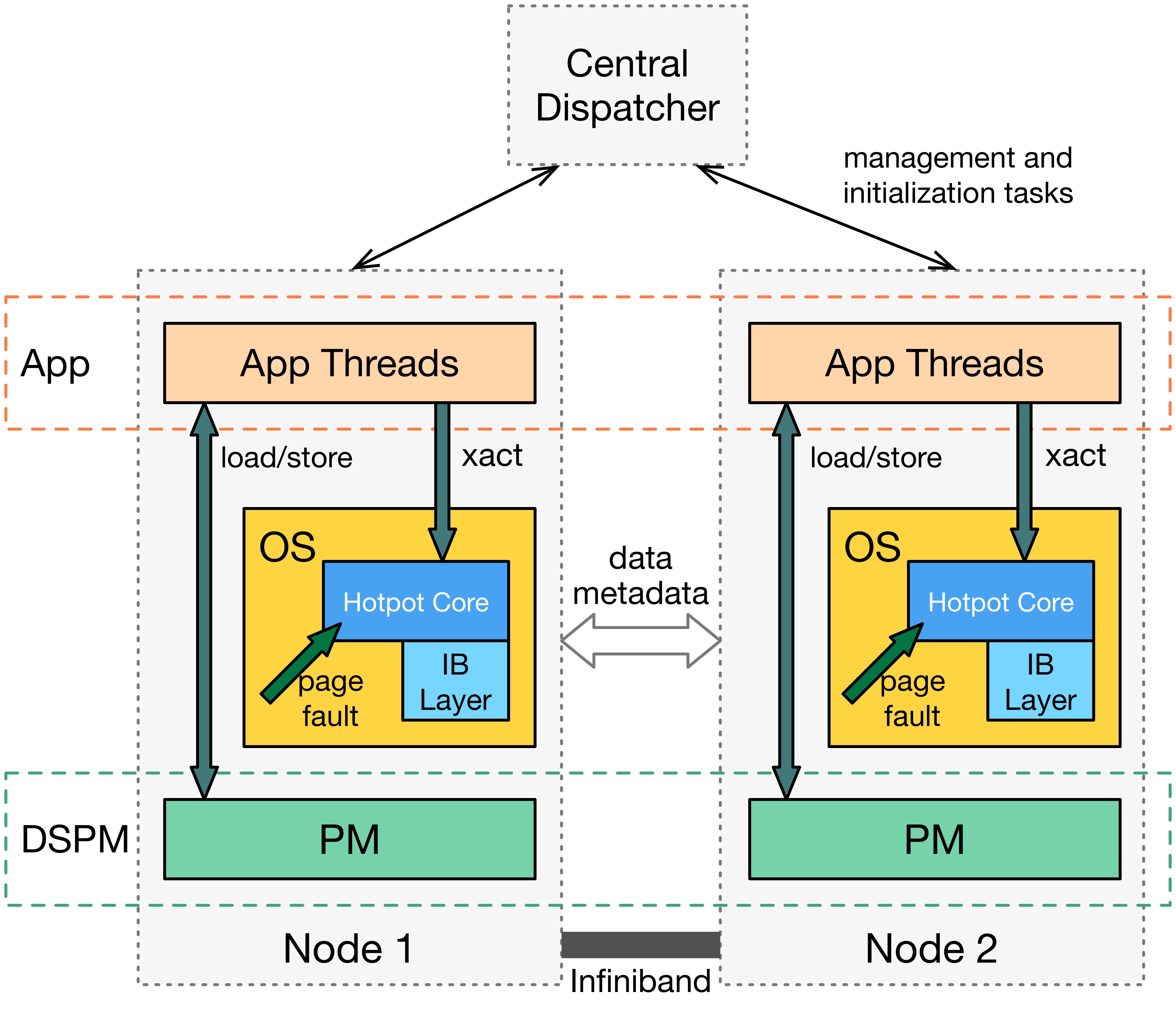

We introduce Distributed Shared Persistent Memory (DSPM), a new framework for using persistent memories in distributed datacenter environments. DSPM provides a new abstraction that allows applications both to access data with traditional memory load and store instructions and to name, share, and persist their data. We built Hotpot, a kernel-level DSPM system that provides low-latency, transparent memory accesses, data persistence, data reliability, and high availability.

Get Hotpot here.

Related Publications

Building Atomic, Crash-Consistent Data Stores with Disaggregated Persistent Memory

Shin-Yeh Tsai, Yiying Zhang

arxiv preprint

Challenges in Building and Deploying Disaggregated Persistent Memory

Yizhou Shan, Yutong Huang, Yiying Zhang

to appear at the 10th Annual Non-Volatile Memories Workshop (NVMW '19)

Building Atomic, Crash-Consistent Data Stores with Disaggregated Persistent Memory

Shin-Yeh Tsai, Yiying Zhang

to appear at the 10th Annual Non-Volatile Memories Workshop (NVMW '19)

LegoOS: A Disseminated, Distributed OS for Hardware Resource Disaggregation

Yizhou Shan, Yutong Huang, Yilun Chen, Yiying Zhang

Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI '18) (Best Paper Award)

Disaggregating Memory with Software-Managed Virtual Cache

Yizhou Shan, Yiying Zhang

the 2018 Workshop on Warehouse-scale Memory Systems (WAMS '18) (co-located with ASPLOS '18)

MemAlbum: an Object-Based Remote Software Transactional Memory System

Shin-Yeh Tsai, Yiying Zhang

the 2018 Workshop on Warehouse-scale Memory Systems (WAMS '18) (co-located with ASPLOS '18)

Split Container: Running Containers beyond Physical Machine Boundaries

Yilun Chen, Yiying Zhang

the 2018 Workshop on Warehouse-scale Memory Systems (WAMS '18) (co-located with ASPLOS '18)

Distributed Shared Persistent Memory

Yizhou Shan, Shin-Yeh Tsai, Yiying Zhang

the 9th Annual Non-Volatile Memories Workshop (NVMW '18)

LITE Kernel RDMA Support for Datacenter Applications

Shin-Yeh Tsai, Yiying Zhang

Proceedings of the 26th ACM Symposium on Operating Systems Principles (SOSP '17)

Distributed Shared Persistent Memory

Yizhou Shan, Shin-Yeh Tsai, Yiying Zhang

Proceedings of the ACM Symposium on Cloud Computing 2017 (SoCC '17)

Lego: A Distributed, Decomposed OS for Resource Disaggregation

Yizhou Shan, Yilun Chen, Yutong Huang, Sumukh Hallymysore, Yiying Zhang

Poster at the 26th ACM Symposium on Operating Systems Principles (SOSP '17)

Disaggregated Operating System

Yizhou Shan, Sumukh Hallymysore, Yutong Huang, Yilun Chen, Yiying Zhang

Poster at the ACM Symposium on Cloud Computing 2017 (SoCC '17)

Disaggregated Operating System

Yiying Zhang, Yizhou Shan, Sumukh Hallymysore

the 17th International Workshop on High Performance Transaction Systems (HPTS '17)

Rockies: A Network System for Future Data Center Racks

Shin-Yeh Tsai, Linzhe Li, Yiying Zhang

WIP and Poster at the 14th USENIX Conference on File and Storage Technologies (FAST '16)