Hyperdimensional (HD) Computing

As an AI paradigm beyond neural networks, HD computing draws inspiration from how the human brain computes with patterns of neural activity in high-dimensional space, with the goal of energy-efficient, streamlined, lifelong learning.

A community website: https://www.hd-computing.com/community

Neuro-inspired computing, in which the hardware fabric is implemented naturally and in close concert with deployed learning algorithms inspired by neuroscience, offers unique opportunities for a new class of AI hardware with new functionalities, versatility, security, and energy efficiency.

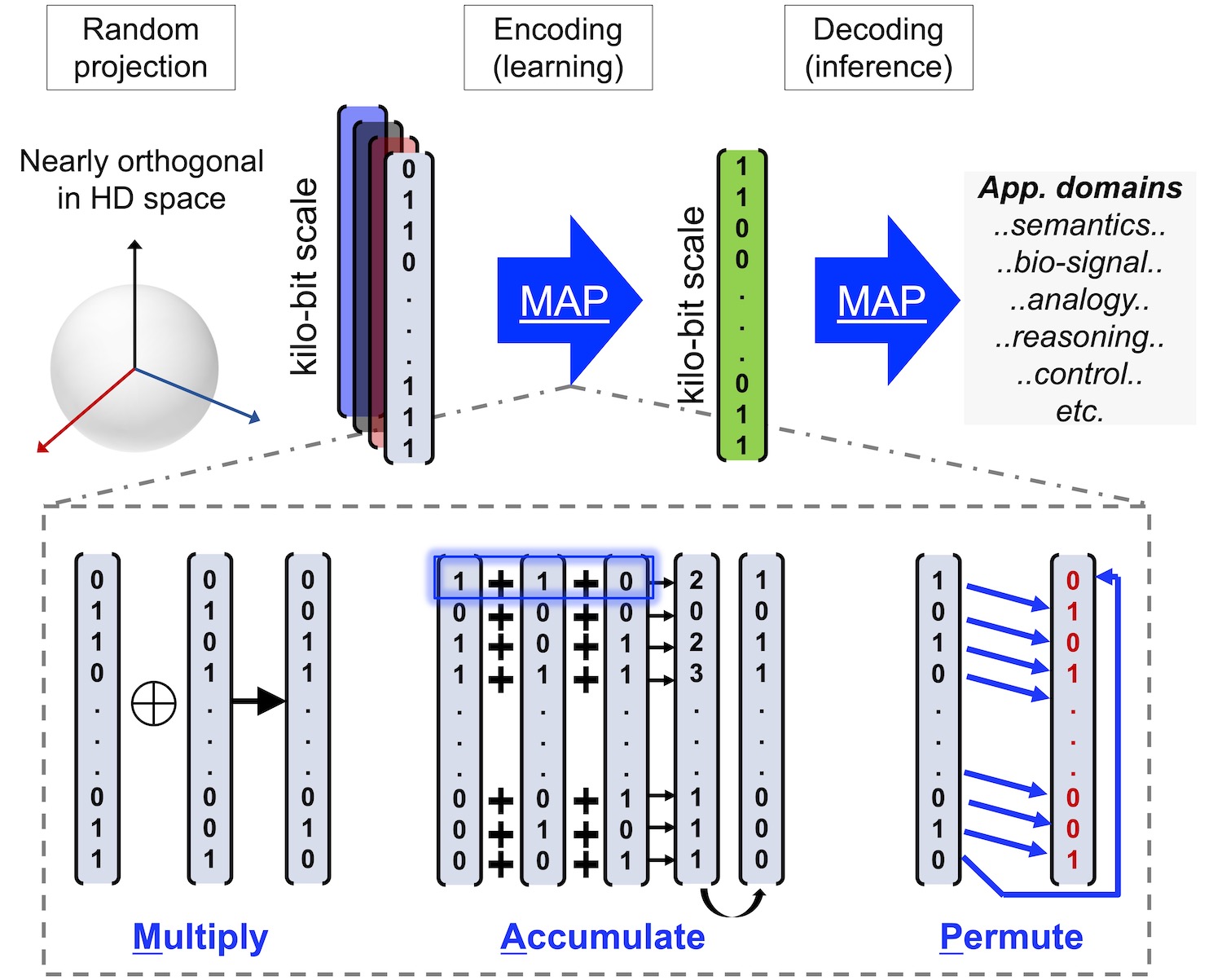

HD computing provides a cognitive computational framework that can be applied to a wide range of application domains, such as language recognition, analogical reasoning, bio-signal processing, robotic vision and control, and drug discovery. Multi-task learning can also be implemented within the HD computing framework. HD computing is based on the understanding that brains compute with patterns of neural activity that are not readily associated with scalar numbers. Because of the scale of the brain’s circuits, neural activity patterns can be modeled as points in an HD space, that is, as HD vectors. Information is represented and distributed across HD vectors encoded as ultra-wide binary words (thousands of bits). These HD vectors can then be manipulated mathematically to perform cognitive operations in real time and in a streaming fashion, with high energy efficiency and ultra-low training cost.
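As an illustration of how such manipulation can work, here is a minimal software sketch, assuming dense binary HD vectors with XOR for binding and bitwise majority for bundling (one common choice in the HD literature, not necessarily the encoding used in our hardware). It stores a small key-value record in a single 10,000-bit vector and retrieves a value by unbinding and nearest-neighbor cleanup. The symbol names and helper functions are hypothetical.

```python
import random

D = 10_000               # dimensionality: "thousands of bits" per HD vector
rng = random.Random(7)   # fixed seed so runs are reproducible

def random_hv():
    """Dense random binary HD vector: each bit is an independent fair coin flip."""
    return [rng.getrandbits(1) for _ in range(D)]

def bind(a, b):
    """Bind by element-wise XOR; binding with the same vector again undoes it."""
    return [x ^ y for x, y in zip(a, b)]

def bundle(hvs):
    """Bundle by bitwise majority; the result stays similar to each input (use an odd count)."""
    threshold = len(hvs) / 2
    return [1 if sum(bits) > threshold else 0 for bits in zip(*hvs)]

def hamming(a, b) -> float:
    """Normalized Hamming distance: about 0.5 for unrelated vectors, 0.0 for identical ones."""
    return sum(x != y for x, y in zip(a, b)) / D

# Hypothetical item memory: three roles and three fillers, each a random HD vector.
symbols = {name: random_hv()
           for name in ["color", "shape", "size", "red", "circle", "large"]}

# A single HD vector encodes the whole record {color: red, shape: circle, size: large}.
record = bundle([bind(symbols["color"], symbols["red"]),
                 bind(symbols["shape"], symbols["circle"]),
                 bind(symbols["size"], symbols["large"])])

def lookup(record, role) -> str:
    """Unbind a role, then clean up the noisy result against the item memory."""
    noisy = bind(record, symbols[role])
    return min(symbols, key=lambda name: hamming(noisy, symbols[name]))

print(lookup(record, "shape"))
```

Unbinding `shape` leaves a noisy copy of `circle` at a normalized Hamming distance near 0.25, while every other stored symbol sits near 0.5, so the nearest-neighbor lookup should recover `circle`. The wide margin comes from the dimensionality itself rather than from any trained parameters.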

Our ongoing work explores:

- In-memory HD computing fabrics with nanoscale embedded memories (e.g., 3D RRAM, MRAM, FeFET).

- Secure learning, inference, and communication on distributed HD hardware fabrics.

- Interactions between neuroscience and the HD framework that inspire new hardware architectures.

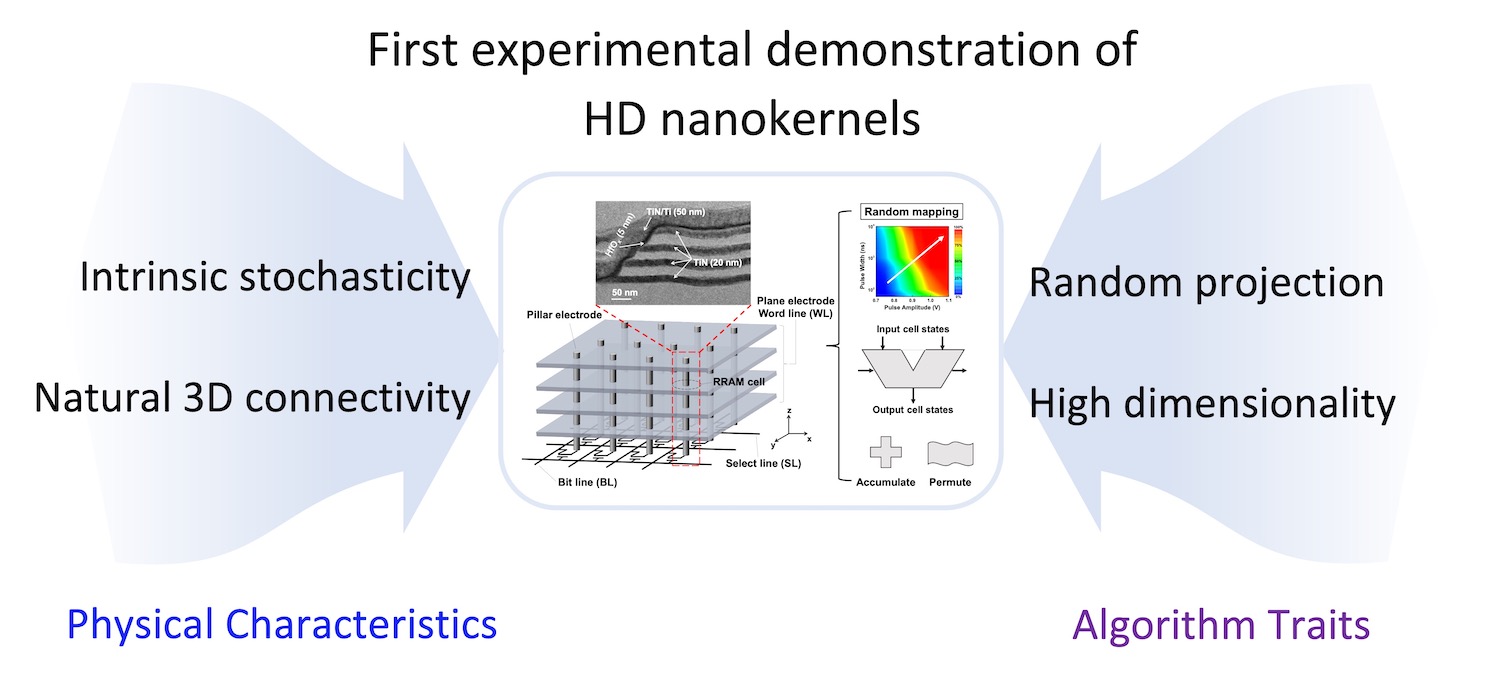

From the algorithm perspective, HD computing embraces randomness and high dimensionality. From the hardware perspective, resistive memories built in a three-dimensional vertical structure possess intrinsic stochasticity and native 3D connectivity. This convergence of physical and algorithmic characteristics leads to a natural hardware realization of 3D nano-kernels for HD computing, in which the vertical integration and interplay of RRAM and silicon transistors together serve as the enabling factors. This work is one of the earliest experimental demonstrations of HD computing.
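Why randomness is an asset rather than a nuisance can be checked in a few lines: independently drawn random binary HD vectors are, with overwhelming probability, nearly orthogonal (normalized Hamming distance close to 0.5), and the spread around 0.5 shrinks as 0.5/√D. The sketch below is an illustrative assumption, not a model of the 3D VRRAM devices: each bit comes from Python's pseudo-random generator as a stand-in for one stochastic switching event, and the function names are hypothetical.

```python
import random
import statistics

def random_item_memory(n, dim, seed=0):
    """Draw n random binary hypervectors of width dim. Each bit is an independent
    fair draw, standing in (hypothetically) for one stochastic device event."""
    rng = random.Random(seed)
    return [[rng.getrandbits(1) for _ in range(dim)] for _ in range(n)]

def hamming(a, b):
    """Normalized Hamming distance between two equal-length bit lists."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

def pairwise_distances(vectors):
    """Hamming distance for every unordered pair of vectors."""
    return [hamming(vectors[i], vectors[j])
            for i in range(len(vectors)) for j in range(i + 1, len(vectors))]

# The mean stays near 0.5 at every width, while the min/max range narrows as dim grows.
for dim in (100, 1_000, 10_000):
    d = pairwise_distances(random_item_memory(n=20, dim=dim))
    print(f"D={dim:>6}: mean={statistics.mean(d):.3f}  min={min(d):.3f}  max={max(d):.3f}")
```

At D = 10,000 the standard deviation of a pairwise distance is about 0.005, so unrelated vectors almost never come close to one another. This is one way to see why an intrinsically stochastic source of bits can be sufficient for generating HD vectors.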

Electrical measurements capture the stochastic switching behavior of RRAM, which is characterized by a nonlinear voltage-time relationship in the sub-threshold regime. Leveraging this intrinsic stochasticity, we realize in situ random projection of HD vectors. Reconfigurable logic immersed in memory, which capitalizes on the shared vertical pillar structure, native 3D connectivity, and non-volatility, is developed and validated by pulse measurements on 3D vertical RRAM (VRRAM). Using these in-memory operation schemes as primitives, full multiply-add-permute (MAP) kernels are then experimentally demonstrated within 3D VRRAM, with robust operation and immunity to device-to-device (D2D) variations, eliminating the need to move data out of high-density 3D memories. Algorithm-hardware co-design offers a knob for energy-accuracy trade-offs, and we further show that memory-centric HD systems can be extremely resilient to hardware errors.
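To make the MAP primitives and the error-resilience claim more concrete, here is a minimal software sketch (not a model of the VRRAM circuits) that takes multiply as bitwise XOR, add as bitwise majority, and permute as a cyclic rotation, and composes them into a trigram encoder for a toy two-class text task. The training phrases, the labels `A` and `B`, the 20% error rate, and all function names are hypothetical choices for illustration. Independent random bit flips are only a simplified stand-in for real hardware errors.

```python
import random

D = 10_000                # hypervector width in bits
rng = random.Random(42)   # fixed seed so the sketch is reproducible

def random_hv():
    """Dense random binary hypervector with independent fair bits."""
    return [rng.getrandbits(1) for _ in range(D)]

def multiply(a, b):
    """M: bind by element-wise XOR."""
    return [x ^ y for x, y in zip(a, b)]

def add(hvs):
    """A: bundle by bitwise majority (an exact tie resolves to 0)."""
    threshold = len(hvs) / 2
    return [1 if sum(bits) > threshold else 0 for bits in zip(*hvs)]

def permute(a, k):
    """P: rotate cyclically by k positions to mark sequence order."""
    k %= D
    return a[-k:] + a[:-k]

# Hypothetical item memory: one random hypervector per character.
ITEM = {ch: random_hv() for ch in "abcdefghijklmnopqrstuvwxyz "}

def encode(text, n=3):
    """Bundle all n-grams; each n-gram XORs its characters, rotated by position."""
    grams = []
    for i in range(len(text) - n + 1):
        gram = ITEM[text[i + n - 1]]                      # last character, no rotation
        for j in range(n - 1):                            # earlier characters, rotated
            gram = multiply(gram, permute(ITEM[text[i + j]], n - 1 - j))
        grams.append(gram)
    return add(grams)

def hamming(a, b):
    """Normalized Hamming distance between two hypervectors."""
    return sum(x != y for x, y in zip(a, b)) / D

def classify(hv, prototypes):
    """Return the label whose prototype is nearest in Hamming distance."""
    return min(prototypes, key=lambda label: hamming(hv, prototypes[label]))

def flip_bits(hv, rate):
    """Crude error model: flip each stored bit independently with probability rate."""
    return [b ^ (rng.random() < rate) for b in hv]

# Toy two-class "training set" (hypothetical phrases).
prototypes = {"A": encode("the cat sat on the mat"),
              "B": encode("a dog ran in the park")}
noisy = {label: flip_bits(hv, 0.2) for label, hv in prototypes.items()}

sample = encode("cat sat")
print(classify(sample, prototypes), classify(sample, noisy))
```

The sample shares all of its trigrams with phrase A and none with phrase B, so it is expected to remain nearest to prototype A both before and after the bit flips. Flipping a fraction f of stored bits moves a Hamming distance d toward 0.5 as d + f(1 - 2d), so a clear margin between classes shrinks gradually rather than collapsing.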

Related publications:

VLSI 2016, IEDM 2016, VLSI-TSA 2017, TCAS-I 2017, ISSCC 2018, JSSC 2018, SPIE Spintronics XII 2019