AI Hardware-Algorithm Co-Design

To scale AI models for multi-modal, lifelong learning scenarios, the underlying hardware must play a key role in shaping hardware-aware model architectures and learning algorithms, drawing inspiration from neuroscience.

To scale today’s AI models toward future lifelong learning scenarios with more sophisticated workloads, the underlying hardware must play a bigger role in shaping hardware-aware neural network architectures and learning algorithms. This motivates us to explore two directions:

- AI systems that naturally tolerate hardware non-idealities without incurring overhead.

- Tailored or re-designed learning algorithms that directly benefit from certain hardware behaviors not seen on conventional hardware.
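As a minimal sketch of what "tolerating hardware non-idealities" can mean in practice, the snippet below perturbs each weight of a dot product with multiplicative Gaussian noise and measures how far the output drifts from its ideal value. The noise model, the function names (`noisy_dot`, `output_spread`), and the value `sigma=0.05` are illustrative assumptions, not measured device behavior or a method described here.

```python
import random

def noisy_dot(weights, inputs, sigma, rng):
    """Dot product with each weight scaled by (1 + Gaussian noise).

    sigma is a hypothetical relative standard deviation standing in for
    device-to-device variation; it is not taken from any measured device.
    """
    return sum(w * (1.0 + rng.gauss(0.0, sigma)) * x
               for w, x in zip(weights, inputs))

def output_spread(weights, inputs, sigma, trials=2000, seed=0):
    """Estimate the mean and standard deviation of the noisy output."""
    rng = random.Random(seed)
    samples = [noisy_dot(weights, inputs, sigma, rng) for _ in range(trials)]
    mean = sum(samples) / trials
    var = sum((s - mean) ** 2 for s in samples) / trials
    return mean, var ** 0.5

# Toy weights and inputs (assumed values for illustration only).
weights = [0.5, -1.0, 0.25, 2.0]
inputs = [1.0, 0.5, -2.0, 0.1]

ideal = sum(w * x for w, x in zip(weights, inputs))
mean, std = output_spread(weights, inputs, sigma=0.05)
print(f"ideal={ideal:.4f} noisy mean={mean:.4f} noisy std={std:.4f}")
```

A model or training procedure could be called tolerant, in this simplified sense, if its task accuracy changes little when outputs spread by this amount.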

ML techniques themselves may be leveraged for co-designing AI hardware and algorithms:

- Training statistical machine learning models on hardware simulations, CAD data, and metrology and measurement data can enable large-scale design space exploration and may uncover hidden mechanisms.

- Meta-models and teacher models can be constructed to generate efficient, hardware-aware model architectures in reverse, using techniques such as neural architecture search (NAS) and knowledge distillation (KD).
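To make the knowledge distillation idea concrete, here is a minimal sketch of the commonly used temperature-scaled distillation loss: the KL divergence between softened teacher and student output distributions, scaled by the square of the temperature. The function names, the example logits, and the default temperature are assumptions for illustration, not the specific setup used in this work.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)  # teacher (target) distribution
    q = softmax(student_logits, temperature)  # student distribution
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return temperature ** 2 * kl

# Hypothetical logits from a large teacher and a compact student.
teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.2]
print(distillation_loss(student, teacher))
```

In a hardware-aware setting, the student would typically be the compact architecture intended for the target device, and this term would be combined with the ordinary task loss.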

Lessons and inspiration from theoretical neuroscience, if used judiciously and combined with the unique characteristics of nanoscale semiconductor devices, may guide the design of a new class of AI models and hardware.

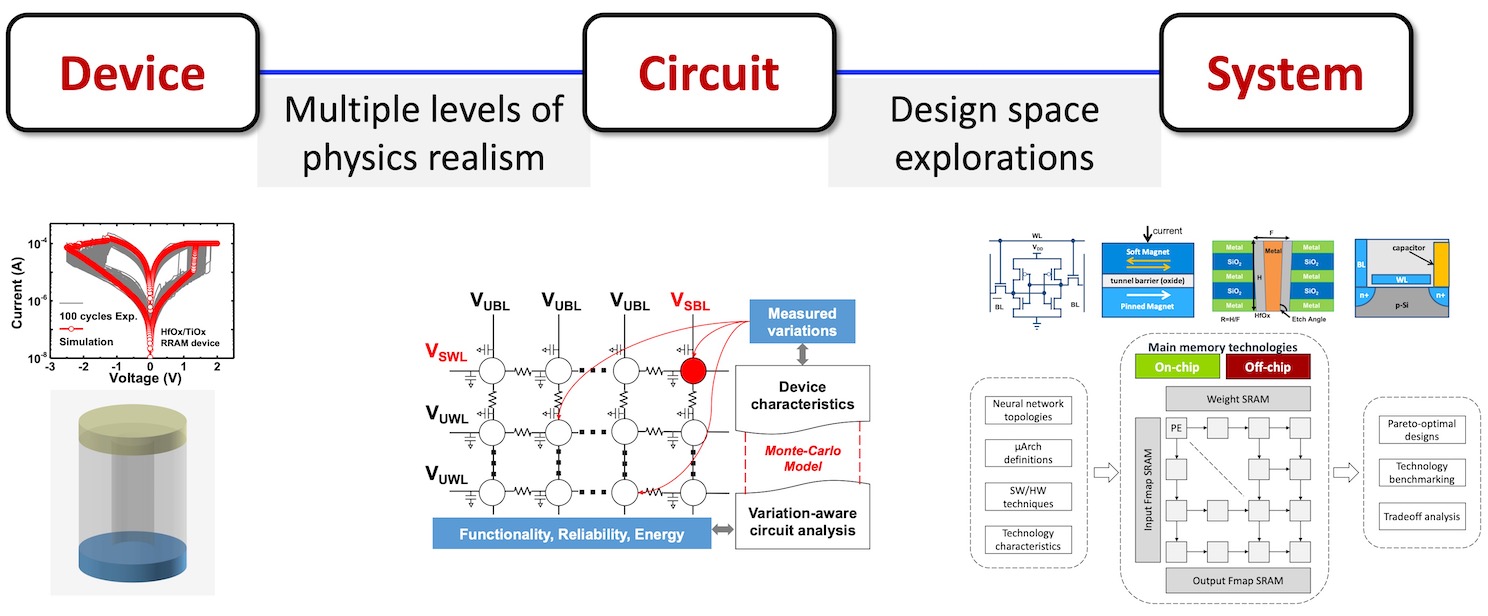

We work on cross-layer modeling for emerging semiconductor device technologies to understand the interactions across layers of abstraction, down to device physics. Modeling serves as a “common ground” while both technologies and applications keep evolving, and it enables circuit and system design exploration. This becomes particularly important when new device physics is involved. We have done extensive case studies with non-volatile memories, in particular resistive RAM (RRAM).

At the device level, we develop an RRAM SPICE model with a three-level model hierarchy. Calibrated experimentally on metal-oxide RRAM devices, the model supports circuit design and analysis across different design needs without loss of physical realism. At the circuit level, we develop a full-array Monte Carlo modeling framework for variation-aware RRAM circuit analysis, leading to a deeper understanding of device-circuit interaction and co-optimization. At the system level, we develop a technology-system design space exploration framework to evaluate on-chip memory technologies (SRAM, eDRAM, MRAM, 3D RRAM) for edge DNN accelerators in mobile SoCs. The analysis indicates that dense memory-compute integration provides ample opportunities for energy and area efficiency through optimal partitioning of chip resources.
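The flavor of variation-aware Monte Carlo analysis can be illustrated with a deliberately simplified sketch: sample a resistance for every cell in one array column, sum the read currents, and repeat to obtain a distribution. This is not the framework described above. It ignores wire resistance, sneak paths, and selector devices, and the lognormal variation model, the function names, and all parameter values (`r_nominal`, `sigma_log`, `v_read`) are assumptions for illustration.

```python
import random
import statistics

def sample_column_current(n_rows, r_nominal, sigma_log, v_read, rng):
    """Total read current of one column with lognormally varying resistances."""
    total = 0.0
    for _ in range(n_rows):
        # Hypothetical variation model: resistance = nominal * lognormal factor.
        r = r_nominal * rng.lognormvariate(0.0, sigma_log)
        total += v_read / r  # Ohm's law per cell, ideal wires assumed
    return total

def monte_carlo_currents(trials, n_rows=64, r_nominal=1e5,
                         sigma_log=0.3, v_read=0.2, seed=1):
    """Repeat the column sampling to build a distribution of read currents."""
    rng = random.Random(seed)
    return [sample_column_current(n_rows, r_nominal, sigma_log, v_read, rng)
            for _ in range(trials)]

currents = monte_carlo_currents(trials=1000)
mean_i = statistics.mean(currents)
std_i = statistics.stdev(currents)
print(f"mean={mean_i:.3e} A, std={std_i:.3e} A")
```

The spread of the sampled currents gives a rough sense of the sensing margin a read circuit would need; a physically calibrated model would replace the lognormal factor with device-level behavior.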

Related publications:

DAC 2019, TCAS-I 2017, TED 2017, DATE 2015