Temporal Analysis

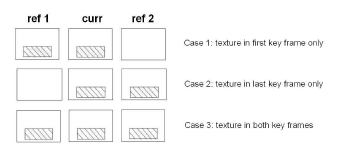

In order to maintain temporal consistency of the texture regions, the video sequence is first divided into groups of frames (GoFs). Each GoF consists of two reference frames (the first and last frames of the GoF) and several middle frames between them. The reference frames are coded as either I or P frames. For every texture region in each middle frame, we look for similar textures in both reference frames. If a corresponding region can be found in at least one of the reference frames, it is mapped onto the segmented texture region. There are three possible cases: the texture is found only in the first reference frame, only in the last reference frame, or in both reference frames. In most cases, similar textures can be found in both reference frames.
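The GoF structure and the three matching cases can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the helper names `split_into_gofs` and `choose_reference` are hypothetical, consecutive GoFs are assumed to share their boundary reference frame, and the per-reference error values are placeholders for the error metrics defined later in this section. When both errors are available, the sketch keeps the reference with the smaller error, following the rule stated in the text.

```python
from typing import List, Optional, Tuple


def split_into_gofs(num_frames: int, gof_length: int) -> List[Tuple[int, List[int], int]]:
    """Split frame indices 0..num_frames-1 into GoFs of gof_length frames each.

    Each GoF is returned as (first_reference, middle_frames, last_reference).
    Assumptions (hypothetical): consecutive GoFs share their boundary
    reference frame, and trailing frames that do not fill a complete GoF
    are left out.
    """
    if gof_length < 3:
        raise ValueError("a GoF needs two reference frames and at least one middle frame")
    step = gof_length - 1  # the last reference of one GoF is the first of the next
    gofs = []
    start = 0
    while start + step < num_frames:
        end = start + step
        gofs.append((start, list(range(start + 1, end)), end))
        start = end
    return gofs


def choose_reference(err_first: Optional[float], err_last: Optional[float]) -> Optional[str]:
    """Return which reference frame a texture region is taken from.

    An error of None means no similar texture was found in that reference
    frame; the numeric errors stand in for the paper's own metrics.
    """
    if err_first is None and err_last is None:
        return None      # found in neither reference frame: region is not mapped
    if err_last is None:
        return "first"   # found only in the first reference frame
    if err_first is None:
        return "last"    # found only in the last reference frame
    # found in both: keep the match with the smaller error
    return "first" if err_first <= err_last else "last"
```

For example, `split_into_gofs(9, 5)` yields `(0, [1, 2, 3], 4)` and `(4, [5, 6, 7], 8)`, so frame 4 acts as the last reference of the first GoF and the first reference of the second.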

In this case, the the texture that results in the smallest error will be considered. The details of the

metrics for the error will be described later in this section. The texture

regions are warped from frame-to-frame using a motion model to provide temporal

consistency in the segmentation. The mapping is based on a global motion

assumption for every texture region in the frame i.e. the displacement of the

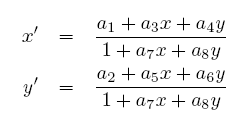

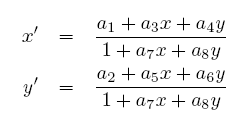

entire region can be described by just one set motion parameters. We modified a

8-parameter (i.e. planar perspective) motion model to compensate the global

motion. This can be expressed as:
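For reference, the 8-parameter planar perspective model is commonly written in the form below. This is the standard homography parameterization, given here as an assumption; the paper's own equation may order, index, or normalize the parameters differently (the text names them a0 to a8).

```latex
x' = \frac{a_1 x + a_2 y + a_3}{a_7 x + a_8 y + 1}, \qquad
y' = \frac{a_4 x + a_5 y + a_6}{a_7 x + a_8 y + 1}
```

With a7 = a8 = 0 the denominator equals 1 and the model reduces to a six-parameter affine transform.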

Where (x, y) is the location of the pixel in the current frame and (x', y') are the corresponding mapped coordinates. The planar perspective model is suitable for describing arbitrary rigid object motion if the camera operation is restricted to rotation and zoom.
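The mapping from (x, y) to (x', y') can be written as a small Python function. This sketch assumes the common homography-style parameterization with parameters a1 to a8 (stored in that order) rather than the paper's exact equation; the name `perspective_map` is hypothetical.

```python
from typing import Sequence, Tuple


def perspective_map(params: Sequence[float], x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) of the current frame to (x', y').

    Assumed parameterization, params = (a1, ..., a8):
        x' = (a1*x + a2*y + a3) / (a7*x + a8*y + 1)
        y' = (a4*x + a5*y + a6) / (a7*x + a8*y + 1)
    """
    if len(params) != 8:
        raise ValueError("expected 8 motion parameters")
    a1, a2, a3, a4, a5, a6, a7, a8 = params
    denom = a7 * x + a8 * y + 1.0
    if denom == 0.0:
        raise ZeroDivisionError("the point maps to infinity under this model")
    return (a1 * x + a2 * y + a3) / denom, (a4 * x + a5 * y + a6) / denom
```

For example, the parameters (1, 0, 3, 0, 1, -2, 0, 0) describe a pure translation, so `perspective_map((1, 0, 3, 0, 1, -2, 0, 0), 5.0, 7.0)` returns `(8.0, 5.0)`.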

It is also suitable for arbitrary camera operation if the rigidly moving objects are planar. In practice, these assumptions often hold over the short duration of a GoF. When an identified texture region in one of the middle frames (the current frame) is warped towards a reference frame of the GoF, only the pixels of the warped texture region that lie within the corresponding texture region of that reference frame are used for synthesis.
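The conservative rule above amounts to intersecting the warped region with the reference region. The following is a minimal Python sketch, assuming that regions are sets of integer pixel coordinates, that warped positions are rounded to the nearest pixel, and that the homography-style parameterization (a1 to a8) applies; `usable_pixels` is a hypothetical helper name.

```python
import math
from typing import Sequence, Set, Tuple

Pixel = Tuple[int, int]


def perspective_map(params: Sequence[float], x: float, y: float) -> Tuple[float, float]:
    """Assumed homography-style model with params = (a1, ..., a8)."""
    a1, a2, a3, a4, a5, a6, a7, a8 = params
    denom = a7 * x + a8 * y + 1.0
    return (a1 * x + a2 * y + a3) / denom, (a4 * x + a5 * y + a6) / denom


def usable_pixels(region: Set[Pixel], params: Sequence[float], ref_region: Set[Pixel]) -> Set[Pixel]:
    """Keep current-frame pixels whose warped positions fall inside the reference region."""
    kept = set()
    for x, y in region:
        xw, yw = perspective_map(params, x, y)
        # Nearest-pixel rounding (half up) is an assumption of this sketch.
        target = (math.floor(xw + 0.5), math.floor(yw + 0.5))
        if target in ref_region:
            kept.add((x, y))
    return kept
```

Shifting the row {(0, 0), (1, 0), (2, 0)} one pixel to the right and intersecting it with the same row keeps only (0, 0) and (1, 0): the pixel whose warped position leaves the reference region is dropped, which is the reduction of the current-frame region described in the text.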

Although this reduces the texture region in the current frame, it is more conservative and usually gives better results. The motion parameters (a0, a1, . . . , a8) are estimated using a simplified implementation of a robust M-estimator for global motion estimation.
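The text does not detail its simplified M-estimator, so the following is a stand-in rather than the authors' method. It is a minimal Python sketch that fits the eight parameters of the assumed homography-style model to point correspondences by iteratively reweighted least squares (IRLS) on the linearized equations, with Huber weights computed from each pair's transfer error and a median-based scale. Working from point correspondences (rather than, for example, pixel intensities), the tuning constants, and all function names are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def perspective_map(a: Sequence[float], x: float, y: float) -> Point:
    """Assumed homography-style model with a = (a1, ..., a8)."""
    d = a[6] * x + a[7] * y + 1.0
    return (a[0] * x + a[1] * y + a[2]) / d, (a[3] * x + a[4] * y + a[5]) / d


def solve(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve m @ a = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    a = [0.0] * n
    for r in range(n - 1, -1, -1):
        a[r] = (aug[r][n] - sum(aug[r][c] * a[c] for c in range(r + 1, n))) / aug[r][r]
    return a


def huber_weight(err: float, k: float) -> float:
    """Huber IRLS weight: 1 inside the threshold k, k/err outside it."""
    return 1.0 if err <= k else k / err


def estimate_global_motion(src: Sequence[Point], dst: Sequence[Point], iters: int = 10) -> List[float]:
    """Fit (a1, ..., a8) mapping src to dst by IRLS with Huber weights."""
    if len(src) != len(dst) or len(src) < 4 or iters < 1:
        raise ValueError("need at least 4 matching point pairs and iters >= 1")
    weights = [1.0] * len(src)
    a: List[float] = []
    for _ in range(iters):
        # Weighted normal equations (A^T W A) a = A^T W b of the linearized model
        # x'(a7 x + a8 y + 1) = a1 x + a2 y + a3, and likewise for y'.
        ata = [[0.0] * 8 for _ in range(8)]
        atb = [0.0] * 8
        for (x, y), (xp, yp), w in zip(src, dst, weights):
            for row, b in (([x, y, 1.0, 0.0, 0.0, 0.0, -x * xp, -y * xp], xp),
                           ([0.0, 0.0, 0.0, x, y, 1.0, -x * yp, -y * yp], yp)):
                for i in range(8):
                    atb[i] += w * row[i] * b
                    for j in range(8):
                        ata[i][j] += w * row[i] * row[j]
        a = solve(ata, atb)
        # Transfer error of every pair under the current estimate.
        errs = [math.dist(perspective_map(a, x, y), q) for (x, y), q in zip(src, dst)]
        scale = 1.4826 * sorted(errs)[len(errs) // 2]  # robust, median-based scale
        if scale < 1e-9:
            break  # the majority of pairs already fit (almost) exactly
        weights = [huber_weight(e, 1.345 * scale) for e in errs]
    return a
```

Because the Huber weight decays as k/err for large errors, a grossly mismatched pair keeps only a small influence on the fit, whereas with a single iteration (plain least squares) it pulls every parameter.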
