Dependable Computing Systems Lab: Research Overview

Prepared by: Saurabh Bagchi

https://engineering.purdue.edu/dcsl/

Last Update: September 18, 2012

1 Executive Summary

The Dependable Computing Systems Lab (DCSL) comprises students (graduate and undergraduate), post-doctoral researchers, and programming staff members within the School of Electrical and Computer Engineering, the Department of Computer Science, and two institute-wide centers: CERIAS (the Center for Education and Research in Information Assurance and Security) and the NSF-supported NEEScomm (Network for Earthquake Engineering Simulation).

The broad goal of our research is to design practical dependable distributed systems. Our work is motivated by the fact that systems are increasing in scale, both in the number of executing elements and in the amount of data they need to process, and existing dependability techniques increasingly fail to meet the demands of such scaling. The faults that bedevil such systems may have accidental (or natural) causes or malicious (or induced) causes. Our work deals with faults by providing detection (determining quickly that something is wrong), diagnosis (identifying the root cause of the failure), containment (preventing the failure from propagating through the system), and, in some cases, prediction (anticipating an impending failure so that proactive mitigation actions can be triggered). The dependability mechanisms must not unduly affect the application or the execution environment, either in performance or in the extent of changes they require. Our work focuses primarily on the application and middleware software layers; with embedded wireless devices, we also delve into low-level firmware.

Changing execution environments

We observe that execution environments are changing: from ones in which all components are developed in-house or are open source, and are well understood, to ones built from third-party software components that are at least partially opaque to the system owner and whose interactions follow many patterns, some of which cannot be enumerated a priori (i.e., before deployment of the system). There is a growing amount of non-determinism in system behavior, due to several factors. First, execution environments are intrinsically noisy, especially at large scales, due to interference from other applications executing in the same environment and unanticipated interactions among the application’s own components. Second, the number of possible usage scenarios is increasing, with end users interacting with the system under a variety of conditions (consider, for example, a smartphone on which the user is talking while sending text messages, while the gyroscope records a fast upward acceleration). Third, the variety of programming languages and runtime environments in use results in different kinds of faults, some subtly different and some radically so. For example, in programming environments for heterogeneous computing, an incorrect mapping of functional blocks of the application to system blocks (such as mapping a memory-intensive part of the code to a GPU) can degrade performance. For malicious faults, these different usage scenarios give rise to different attack paths.

The dependability solution has to handle these sources of non-determinism, increasing the fraction of failures that it handles correctly while keeping a lid on the number of correct executions that it incorrectly flags as faulty (false positives). Different distributed systems impose different resource constraints on the dependability solution. For example, large-scale computing clusters require that most communication be kept local, with only rare use of global communication operations, while embedded wireless networks limit how much communication, and other energy-heavy operations, the dependability solution may perform.

Broad characteristics of our work

Our work fits within this broad universe of applications and execution environments and can be characterized as “systems-y”: it starts from solid theoretical underpinnings, adapts them to domain-specific challenges, and then develops them for use in practical scenarios. We evaluate our solutions with synthetic fault injection and then test them, as far as practicable, in real deployments and on real workloads. Our collaborations take two broad forms: with academic experts in the sub-fields of Computer Science or Computer Engineering that we draw on to build our dependability solutions, and with practitioners who face dependability challenges in their respective domains. Examples of the former include our explorations of data mining, machine learning, static analysis, scientific computing, and wireless software development. The latter comprise collaborations with colleagues in industry and federal labs. Our work has been supported and adopted by partners at Avaya (Voice over IP security), Emnet LLC (wireless mesh network for waste water monitoring), Motorola (multi-hop wireless network for emergency responders), Lockheed Martin (intrusion tolerance for zero-day attacks), Northrop Grumman (distributed intrusion detection for enterprise-class systems), and IBM (mobile computing workloads migrated to the cloud).

Our research is structured around three thrusts:

1. Dependability in large-scale applications

2. Strengthening enterprise-class distributed systems

3. Dependability of embedded wireless devices and networks

2 Research Thrust 1: Dependability in large-scale applications

2.1 Problem Statement

Current techniques for resilience are insufficient for exascale systems (i.e., systems capable of executing 10^18 floating point operations per second), and unless radical changes are made across the entire software stack, exascale systems may never compute reliable scientific results. The available parallelism on exascale systems is expected to increase by 3-5 orders of magnitude over today's petascale systems, driven by increases in on-node concurrency and power density. At that point in the design space, hard and soft failures will be commonplace. The model in which hardware is correct all the time, on all regions of the chip, and forever, will become prohibitively expensive to maintain in terms of both manufacturing and energy cost.

Current resilience techniques will also be too costly at exascale. Approaches based on checkpoint/restart require enough I/O bandwidth to record checkpoints faster than faults occur, but I/O is not expected to keep pace with processing power, and the gap between the two is already widening [1,2,3]. Pure checkpointing approaches will thus spend all of their time in I/O instead of performing useful calculations. Replication-based approaches hold promise, but blindly replicating all tasks will at best halve the available performance on exascale machines, wasting CPU cycles and energy on redundant work.
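To make the checkpoint/restart argument concrete, the sketch below uses Young's well-known first-order approximation for the optimal checkpoint interval, tau = sqrt(2 * delta * M), where delta is the time to write one checkpoint and M is the system mean time between failures (MTBF). It is an illustrative back-of-envelope model, not part of our tools; the checkpoint cost and MTBF values are hypothetical.

```python
import math


def young_interval(ckpt_cost_s: float, mtbf_s: float) -> float:
    """Young's first-order optimal checkpoint interval, sqrt(2 * delta * M)."""
    return math.sqrt(2.0 * ckpt_cost_s * mtbf_s)


def wasted_fraction(ckpt_cost_s: float, mtbf_s: float) -> float:
    """Approximate fraction of machine time lost at Young's interval.

    First-order model: delta / tau (time spent writing checkpoints) plus
    tau / (2 * M) (expected rework after a failure), which simplifies to
    sqrt(2 * delta / M). Only meaningful when delta is much smaller than M.
    """
    tau = young_interval(ckpt_cost_s, mtbf_s)
    return ckpt_cost_s / tau + tau / (2.0 * mtbf_s)


if __name__ == "__main__":
    ckpt = 600.0  # hypothetical: 10 minutes to write one checkpoint
    for mtbf_hours in (24, 4, 1):
        mtbf = mtbf_hours * 3600.0
        print(f"MTBF {mtbf_hours:>2} h: checkpoint every "
              f"{young_interval(ckpt, mtbf) / 60:.1f} min, "
              f"~{wasted_fraction(ckpt, mtbf):.0%} of time wasted")
```

With a hypothetical 10-minute checkpoint, shrinking the MTBF from a day to an hour raises the modeled waste from roughly 12% to more than half of the machine time; the first-order model is itself only rough once delta is a sizable fraction of M, but the trend is what makes pure checkpointing untenable as failure rates rise.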

A targeted approach is needed to allow exascale runtime systems to isolate regions where faults occur and replicate only those parts of the system. To enable this, we need runtime systems that monitor and analyze their own behavior to determine when to take preventive action. This type of analysis has been investigated before, but existing approaches aggregate system-wide data to a central point for analysis, which is unscalable and time-consuming. Further, existing analyses assume that parallel application behavior is relatively homogeneous across nodes and tasks. Such approaches will be ill-equipped to cope with the pervasive adaptive behavior of exascale applications and heterogeneity of exascale platforms.

2.2 Solution Approach

We have developed AutomaDeD [4], a tool that detects errors based on runtime information about the control paths that the parallel application follows and the time spent in each control block. AutomaDeD suggests possible root causes of detected errors by pinpointing, in a probabilistic rank-ordered manner, the erroneous process and the code region in which the error arose. Intuitively, erroneous tasks usually form a small minority of the full set of tasks; hence, they appear as outliers when we cluster tasks based on features related to control flow and timing. Further, in the time dimension, executions in the first few iterations are more likely to be correct than those in later iterations, which we also leverage to assign correct or erroneous labels; alternatively, we use labeled correct runs, if available.
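The outlier intuition can be sketched as follows. This is a simplified illustration, not AutomaDeD's implementation and not its behavioral models: it assumes each task is summarized by a numeric feature vector (for example, time spent in each code region), scores each task by its median distance to all other tasks, and then names the feature (code region) where the top-ranked task deviates most from its peers. The region names are hypothetical.

```python
import math
from statistics import median


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_suspicious_tasks(features):
    """Return task ids ordered from most to least anomalous.

    features: dict task_id -> feature vector. A task's score is its median
    distance to every other task, so a small minority of outliers scores high.
    """
    scores = {}
    for t, f in features.items():
        scores[t] = median([distance(f, g) for u, g in features.items() if u != t])
    return sorted(scores, key=scores.get, reverse=True)


def suspicious_region(features, task, region_names):
    """Name the feature (code region) where `task` deviates most from its peers."""
    peers = [g for u, g in features.items() if u != task]
    deviations = [abs(features[task][i] - median([g[i] for g in peers]))
                  for i in range(len(region_names))]
    return region_names[max(range(len(deviations)), key=deviations.__getitem__)]


if __name__ == "__main__":
    regions = ["init", "halo_exchange", "recv_loop"]  # hypothetical code regions
    # Seconds spent per region for 8 tasks; task 5 is stuck in recv_loop.
    feats = {r: [1.0, 2.0, 0.5] for r in range(8)}
    feats[5] = [1.0, 2.0, 9.0]
    ranking = rank_suspicious_tasks(feats)
    print(ranking[0], suspicious_region(feats, ranking[0], regions))  # prints: 5 recv_loop
```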

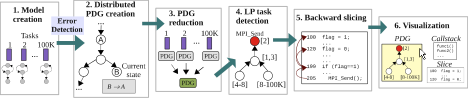

All existing parallel debugging tools (including our first version of AutomaDeD [4]) failed to scale to the process counts of today’s state-of-the-art systems. Three main factors impede scalability. First, the tools include a centralized component that performs the data analysis. Thus, tools must stream behavioral information from all processes to this central component so that it can determine the error and, possibly, its location. Second, the tools require huge amounts of data. While many tools optimize the monitoring part quite well, the cost of shipping all information to the analysis engine and the cost of analyzing the full volume of data remain. While tools such as STAT [5] reduce the data volume that the central component must handle, they still must process the full data in their communication structure. Third, the data structures used to maintain the information are not fully optimized for the operations needed for error detection and localization, such as comparing information from processes that belong to the same equivalence class. Small differences in the cost of one operation, though insignificant for hundreds of processes, become significant at larger scales. We address each of these concerns and have developed a scalable version of AutomaDeD [6] that runs at scales of tens of thousands of application processes on LLNL’s largest clusters and is able to detect and diagnose application problems. Most recently, we have demonstrated the power of AutomaDeD on real bugs [7], including a hard-to-crack bug in a molecular dynamics code [8]. For this, we augmented AutomaDeD with the ability to perform backward slicing, starting from the point where the fault manifests. The fault manifested only with 7,996 or more processes, and our tool quickly found it: a sophisticated deadlock condition.
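One common way to remove the central bottleneck is to merge per-process summaries inside a reduction tree, so that the data volume depends on the number of distinct behaviors rather than on the number of processes. The sketch below illustrates that idea under simplifying assumptions: each process reports a single state string (hypothetical, e.g. the call site where it is blocked), and processes in the same state form an equivalence class stored as compressed rank ranges. It is not the data structure used in the scalable AutomaDeD.

```python
from typing import Dict, List, Tuple

# state -> sorted, non-overlapping inclusive rank ranges in that state
Summary = Dict[str, List[Tuple[int, int]]]


def leaf(rank: int, state: str) -> Summary:
    """Summary contributed by a single process."""
    return {state: [(rank, rank)]}


def merge_ranges(a, b):
    """Union of two range lists, coalescing adjacent or overlapping ranges."""
    out = []
    for lo, hi in sorted(a + b):
        if out and lo <= out[-1][1] + 1:
            out[-1] = (out[-1][0], max(out[-1][1], hi))
        else:
            out.append((lo, hi))
    return out


def merge(x: Summary, y: Summary) -> Summary:
    """Combine two child summaries at an interior node of the tree."""
    out = {k: list(v) for k, v in x.items()}
    for state, ranges in y.items():
        out[state] = merge_ranges(out.get(state, []), ranges)
    return out


def tree_reduce(summaries: List[Summary]) -> Summary:
    """Merge a non-empty list of summaries pairwise, level by level."""
    level = summaries
    while len(level) > 1:
        nxt = [merge(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])  # odd node out is promoted unchanged
        level = nxt
    return level[0]


if __name__ == "__main__":
    ranks = [leaf(r, "recv" if r == 3 else "allreduce") for r in range(8)]
    print(tree_reduce(ranks))
```

However many processes participate, the root here receives only two equivalence classes and a handful of ranges, which is the property that lets the analysis itself stay cheap at scale.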

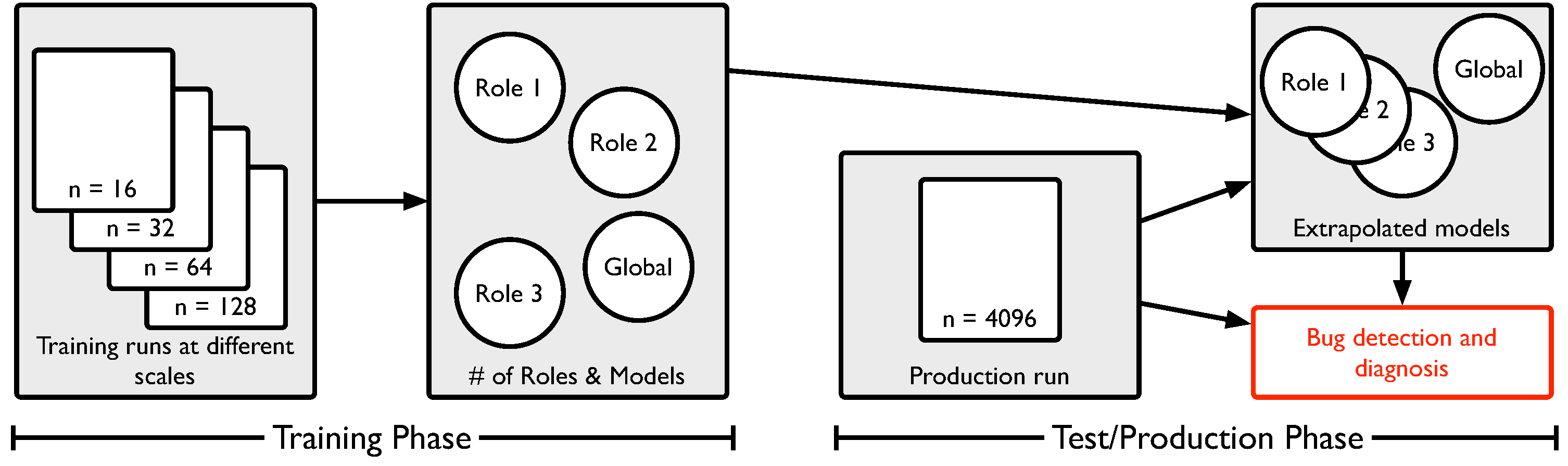

Figure 1. Problem localization with AutomaDeD

Figure 2. Overview of the workflow for problem localization

An especially subtle class of bugs in large-scale applications is that of scale-dependent bugs: while small-scale test cases may not exhibit the bug, it arises in large-scale production runs and can change the result or performance of an application. A simple example is a data type overflow that occurs, say, when a large amount of data is exchanged or when data is exchanged among a large number of processes. A popular approach to finding bugs is statistical bug detection, in which abnormal behavior is detected through comparison with bug-free behavior. Unfortunately, for scale-dependent bugs there may be no bug-free runs at large scales, so traditional statistical techniques are not viable. We have developed Vrisha [9], a statistical approach to detecting and localizing scale-dependent bugs. Vrisha detects bugs in large-scale programs by building models of behavior from bug-free runs at small scales. These models are constructed using kernel canonical correlation analysis (KCCA) and exploit scale-determined properties, whose values depend predictably on application scale. If the property predicted for the large scale deviates from the observed property, an error is detected. By reverse-mapping the deviation to the original behavioral feature, we can pinpoint the bug to a behavioral feature and, from that, to a region of code.
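The train-small, check-large idea behind Vrisha can be illustrated with a deliberately simpler model. The sketch below replaces KCCA with an ordinary least-squares fit of each behavioral feature against the process count, which captures only linear scale dependence; the feature names and the 32-bit counter overflow are hypothetical. Reporting the most deviant feature stands in for the reverse mapping from deviation to behavioral feature.

```python
def int32(v: int) -> int:
    """Emulate wrap-around of a 32-bit signed counter (the hypothetical bug)."""
    return (v + 2**31) % 2**32 - 2**31


def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return a, my - a * mx


def train(scales, observations):
    """observations: feature name -> values measured at each training scale."""
    return {f: fit_linear(scales, ys) for f, ys in observations.items()}


def check(model, scale, observed, rel_tol=0.05):
    """Compare observed features at a production scale with the extrapolation.

    Returns (bug_suspected, most_deviant_feature).
    """
    worst, worst_dev = None, 0.0
    for f, (a, b) in model.items():
        predicted = a * scale + b
        dev = abs(observed[f] - predicted) / max(abs(predicted), 1e-9)
        if dev > worst_dev:
            worst, worst_dev = f, dev
    return worst_dev > rel_tol, worst


if __name__ == "__main__":
    scales = [4, 8, 16, 32]      # bug-free training runs at small scale
    bytes_per_peer = 4_000_000   # hypothetical bytes received per peer
    obs = {
        "bytes_recv": [int32(bytes_per_peer * n) for n in scales],
        "msgs_per_rank": [2 * n for n in scales],
    }
    model = train(scales, obs)
    n = 1024  # production scale: the 32-bit byte counter now overflows
    prod = {"bytes_recv": int32(bytes_per_peer * n), "msgs_per_rank": 2 * n}
    print(check(model, n, prod))  # prints (True, 'bytes_recv')
```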

Figure 3. Overview of the operation of Vrisha divided into training runs at small scales, followed by production runs at large scales

2.3 What’s Coming Up

We are developing techniques that can operate with different kinds of data at large scales. Sometimes the behavior of the application changes in a hard-to-predict manner when the size of data exceeds a certain threshold (e.g., thrashing sets in because the cache is no longer large enough), and we are equipping our current system to determine how correct behavior should change in such cases. In another direction, we are improving the ability of our technique to localize the bug to a point in time and to a region of the code. This problem is challenging because the behavioral feature set is high-dimensional and our analysis reduces its dimensionality; as a result, the reverse mapping becomes one-to-many. We believe that while it is likely infeasible to identify a single region of code for the developer to examine, it is possible to identify several candidate regions, each annotated with a probability value.
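One simple way to realize such a one-to-many reverse mapping is to back-project the deviation observed in the reduced space onto the original features, sum the contributions per code region, and normalize them. The sketch assumes a linear reduction such as PCA, where back-projection is the transposed loading matrix applied to the deviation, and a hypothetical feature-to-region map; the normalized scores are heuristic weights rather than calibrated probabilities.

```python
from collections import defaultdict


def region_scores(deviation, loadings, feature_region):
    """Rank candidate code regions for a deviation seen in the reduced space.

    deviation: length-k vector (observed minus predicted, reduced space).
    loadings: k x p matrix (list of rows) of the linear reduction.
    feature_region: length-p list naming the code region behind each feature.
    Returns [(region, normalized score)] sorted from most to least likely.
    """
    k, p = len(deviation), len(feature_region)
    # Back-project: contribution of original feature j is |(W^T d)_j|.
    contrib = [abs(sum(deviation[i] * loadings[i][j] for i in range(k)))
               for j in range(p)]
    per_region = defaultdict(float)
    for j, region in enumerate(feature_region):
        per_region[region] += contrib[j]
    total = sum(per_region.values()) or 1.0
    ranked = sorted(per_region.items(), key=lambda kv: kv[1], reverse=True)
    return [(region, score / total) for region, score in ranked]


if __name__ == "__main__":
    loadings = [[0.8, 0.6, 0.0],   # component 1 mixes two pack_halo features
                [0.0, 0.0, 1.0]]   # component 2 tracks a reduce_sum feature
    regions = ["pack_halo", "pack_halo", "reduce_sum"]  # hypothetical regions
    print(region_scores([2.0, 0.5], loadings, regions))
```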

3 Research Thrust 2: Strengthening enterprise-class distributed systems

3.1 Problem Statement

Today's enterprise IT systems mostly run distributed applications. These applications are built from a large number of software components and run on a variety of hardware platforms. Many of them require continuous availability despite being built from unreliable components. Therefore, system administrators need efficient techniques and practical tools for error detection that operate online (as the application runs) and detect errors and anomalies with small delay; that is, the time between error manifestation and detection should be short. A further desirable characteristic is preventing an error from becoming a user-visible failure. Automatically predicting impending failures from observed patterns of measurements can trigger prevention techniques, such as microrebooting [10], redirecting further requests to a healthy server, or simply starting a backup service for the data. A third necessary functionality for reliable execution of distributed applications is problem localization, whereby automated techniques determine whether the fault lies in the program or in the infrastructure on which it executes. If the program is at fault, the system can localize the fault to a region of the code, which the developer can then inspect to implement a fix.

We make our problem concrete by focusing on the application systems of our industrial collaborators. The first kind is web services, which are often large, complex, and dynamic systems with many hardware and software components, structured as front-end web servers, a middle tier of application servers running the business logic, and a back end of data stores. Additionally, some web services need to run analytics on large amounts of data. Analytics toolkits such as IBM's BigInsights [11] are geared toward extracting patterns from large volumes of data. We consider representative failures that arise in such web services.

The second kind we are currently working with is storage systems, in which hard disk drives have multiple layers of fault tolerance mechanisms that protect against data loss. However, a few failures occasionally breach the entire set of mechanisms. To prevent such scenarios, we rely on failure prediction mechanisms that raise alarms with sufficient warning to allow the at-risk data to be copied to a safe location. A common failure prediction technique monitors the occurrence of soft errors and triggers an alarm when the soft error rate exceeds a specified threshold. We are investigating more sophisticated failure prediction techniques based on the patterns of soft errors.
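The common threshold-based predictor described above can be sketched as a sliding-window counter: counting soft errors in a fixed-length window is equivalent to thresholding the average rate over that window. The window length and error budget are hypothetical parameters, and soft errors are abstracted as a stream of timestamps.

```python
from collections import deque


class SoftErrorRateAlarm:
    """Alarm when more than `max_errors` soft errors fall in a sliding window."""

    def __init__(self, window_hours: float, max_errors: int):
        self.window_hours = window_hours
        self.max_errors = max_errors
        self.times = deque()  # timestamps (hours) of recent soft errors

    def record(self, t_hours: float) -> bool:
        """Log a soft error at time t (non-decreasing); return True to alarm."""
        self.times.append(t_hours)
        # Drop errors that have slid out of the window (t - window, t].
        while self.times and self.times[0] <= t_hours - self.window_hours:
            self.times.popleft()
        return len(self.times) > self.max_errors


if __name__ == "__main__":
    alarm = SoftErrorRateAlarm(window_hours=24.0, max_errors=3)  # hypothetical policy
    for t in (1.0, 30.0, 31.0, 32.0, 33.0):
        print(t, alarm.record(t))
```

Because it sees only a count, this baseline ignores which kinds of soft errors occur and in what order, which is exactly the information that pattern-based predictors try to exploit.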

In this sphere, we also consider errors maliciously injected into enterprise systems. We focus on attacks on distributed enterprise systems that involve multiple steps, known as multi-stage attacks. In these, adversaries compromise outward-facing services and use them as stepping stones to progressively compromise other services, with the ultimate goal of compromising a critical asset. An example would be compromising a web server, then achieving a series of intermediate steps (such as compromising a developer’s box through a vulnerable PHP module and connecting to an FTP server with the gained credentials), to ultimately connect to a database where user credentials are stored. Current detection systems cannot analyze such multi-step attack scenarios because they focus only on single steps of the chain and perform all their inferencing in a “greedy” manner, based on the manifestation at the single place where the detector is installed. Further, the security posture is essentially reactive: once a detector finds something, an equally “greedy” response is taken, such as disconnecting a TCP connection. The essential problem with this approach is three-fold: by the time the reactive response is taken, damage has already been done; it depends too heavily on the fidelity of the detectors; and this strategy often fails against hitherto unknown attacks, also known as zero-day attacks.

3.2 Solution Approach

Today's enterprise-class distributed systems routinely collect a plethora of metrics by monitoring at various layers: system level, middleware level, and application level. Many commercial and open-source tools exist for collecting these metrics, such as HP OpenView, Sysstat, and Ganglia. Examples of useful metrics are: at the system level, CPU, memory, storage, and network-bandwidth usage; at the middleware level, resource usage in a Java EE container (such as Tomcat or JBoss) or time spent in an MPICH library call; at the application level, number of servlet requests and exceptions, number of JDBC connections, or time spent in a region of the code. A common class of error-detection techniques works as follows. From metric values collected during training runs, a model is built of how the metrics should behave during normal operation. At runtime, the values indicated by the trained model are compared with the metric values observed in the system. If the two diverge sufficiently, an error is flagged. Further, the metrics that cause the divergence are mapped back to the code regions that affect them, thus providing a level of fault localization. However, existing approaches to error detection within a node based on statistical analysis of runtime metrics suffer from one or more of the following problems. First, their models do not consider multiple metrics simultaneously [12,13]. Many software bugs and performance faults manifest by breaking the correlations between measurements of different metrics, and such bugs are then missed. Second, some models do not treat the observations of a metric as a sequence of measurements [14,15]. Many software bugs, for example those related to performance problems, develop a distinctive temporal pattern that can only be captured by analyzing measurements sequentially rather than through instantaneous snapshots of the metric values.
Third, the overwhelming majority of techniques do not offer failure prediction. They operate reactively, flagging alarms when a failure occurs, rather than proactively anticipating a failure. Failure prediction has been a hot topic in the past few years [16,17,18]; however, to the best of our knowledge, all existing failure-prediction systems suffer from the first or the second problem (or both). Finally, existing approaches often consider a restricted set of metrics for modeling [14]: a reduced set of metrics is cherry-picked so that the online performance of the technique can keep pace with the distributed application. These approaches do not work in general, because a fault can manifest in a metric that was not initially selected.

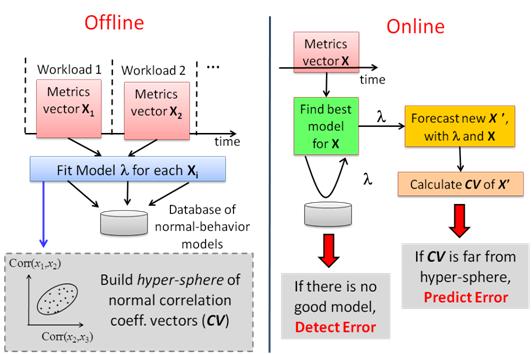

With these insights, we have developed a system called Augury that performs error detection within a node using three progressively more sophisticated schemes: first, it checks individual metric values against thresholds (both lower and upper bounds); next, it checks that the temporal patterns of the metric values follow the models of normality; and finally, it checks that the dependencies between metrics are maintained. Also, depending on which metrics cause the deviation from normality, we can localize the fault to the program (application-level metrics) or to the infrastructure (system-level or middleware-level metrics).
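A minimal sketch of the three checks, assuming each metric is a numeric series sampled at a fixed interval. For brevity, the temporal check here only bounds the step between consecutive samples (Augury's temporal models are richer), and the dependency check fits a straight line between each pair of metrics during training. Metric names, the slack factor, the tolerance, and the metric-to-layer map are hypothetical.

```python
from itertools import combinations


def fit_line(xs, ys):
    """Least-squares fit of y = a*x + b (a = 0 if x is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        return 0.0, my
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return a, my - a * mx


def train(normal, slack=1.5, rel_tol=0.05):
    """normal: metric -> samples (at least two) from fault-free training runs."""
    model = {"bounds": {}, "max_step": {}, "pairs": {}}
    for m, xs in normal.items():
        model["bounds"][m] = (min(xs), max(xs))
        model["max_step"][m] = slack * max(abs(b - a) for a, b in zip(xs, xs[1:]))
    for x, y in combinations(sorted(normal), 2):
        a, b = fit_line(normal[x], normal[y])
        worst = max(abs(yv - (a * xv + b)) for xv, yv in zip(normal[x], normal[y]))
        span = max(normal[y]) - min(normal[y])
        model["pairs"][(x, y)] = (a, b, slack * worst + rel_tol * span)
    return model


def detect(model, window):
    """window: metric -> recent samples (all trained metrics). Returns alerts."""
    alerts = []
    for m, xs in window.items():  # 1. per-metric lower/upper thresholds
        lo, hi = model["bounds"][m]
        if any(v < lo or v > hi for v in xs):
            alerts.append(("threshold", m))
    for m, xs in window.items():  # 2. temporal pattern (bounded step size)
        if any(abs(b - a) > model["max_step"][m] for a, b in zip(xs, xs[1:])):
            alerts.append(("temporal", m))
    for (x, y), (a, b, tol) in model["pairs"].items():  # 3. dependencies
        if any(abs(yv - (a * xv + b)) > tol for xv, yv in zip(window[x], window[y])):
            alerts.append(("dependency", f"{x}->{y}"))
    return alerts


LAYER = {"cpu_ms": "infrastructure", "servlet_reqs": "application"}  # hypothetical


def localize(alerts, layer_of=LAYER):
    """Map the metrics behind the alerts to the layer they were collected from."""
    return {layer_of[m] for _, name in alerts for m in name.split("->")}


if __name__ == "__main__":
    reqs = [10, 20, 30, 40, 50, 40, 30, 20]
    model = train({"servlet_reqs": reqs, "cpu_ms": [2 * r + 5 for r in reqs]})
    # CPU keeps rising while requests stay flat: the CPU/request dependency breaks.
    print(detect(model, {"cpu_ms": [65, 85, 105], "servlet_reqs": [30, 30, 30]}))
```

Each value in the faulty window lies within its trained bounds and step limits, so only the dependency check fires, illustrating why models that consider one metric at a time can miss such faults.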

Figure 4. Overview of Augury for detection and prediction of errors in distributed enterprise systems

Failure prediction is only a small add-on to the steps described above for detecting anomalous behavior. Augury keeps a per-metric observation window and uses it to forecast future measurements with a selected ARIMA model. ARIMA models require moderately long series to produce reliable forecasts; some authors recommend a minimum of 100 observations [6]. To avoid the overhead of fitting forecasting models at runtime, Augury uses pre-computed ARIMA models and selects the one that best explains the data in the observation window. Model selection is fast because it tests only a few candidate models, and each test takes just a few operations. Once a model has been selected, Augury can perform s-step-ahead forecasts and run error detection on the forecast values, thereby providing prediction. In work with IBM, we have shown [19] how web services that execute on cloud computing infrastructures can use such prediction to enable failover and load balancing. In this work, we used the OSGi framework and built fault-tolerant services in it.
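The select-then-forecast step can be sketched with a few fixed-coefficient members of the ARIMA family standing in for Augury's pre-computed models: a random walk (ARIMA(0,1,0)), a constant trend (ARIMA(0,2,0)), and an AR(1) around the window mean with a hypothetical coefficient of 0.5. The candidate set, the selection criterion (one-step-ahead squared error), and the threshold are assumptions; in practice the window would be much longer than in the example, in line with the 100-observation guideline above.

```python
from typing import Callable, Dict, List, Optional

Model = Callable[[List[float]], float]  # history -> next value


def _ar1_half(h: List[float]) -> float:
    """AR(1) around the window mean with a fixed, hypothetical phi = 0.5."""
    mu = sum(h) / len(h)
    return mu + 0.5 * (h[-1] - mu)


# Pre-computed candidates: nothing is estimated at runtime.
CANDIDATES: Dict[str, Model] = {
    "ARIMA(0,1,0)": lambda h: h[-1],              # random walk
    "ARIMA(0,2,0)": lambda h: 2 * h[-1] - h[-2],  # constant trend
    "AR(1) phi=0.5": _ar1_half,
}


def select_model(window: List[float]) -> str:
    """Pick the candidate with the smallest one-step-ahead squared error."""
    def sse(model: Model) -> float:
        return sum((model(window[:t]) - window[t]) ** 2 for t in range(2, len(window)))
    return min(CANDIDATES, key=lambda name: sse(CANDIDATES[name]))


def forecast(window: List[float], steps: int) -> List[float]:
    """s-step-ahead forecast by feeding predictions back in (window >= 2 samples)."""
    model = CANDIDATES[select_model(window)]
    history, out = list(window), []
    for _ in range(steps):
        nxt = model(history)
        out.append(nxt)
        history.append(nxt)
    return out


def predict_violation(window: List[float], steps: int, upper: float) -> Optional[int]:
    """First future step (1-based) whose forecast exceeds `upper`, else None."""
    for i, v in enumerate(forecast(window, steps), start=1):
        if v > upper:
            return i
    return None


if __name__ == "__main__":
    heap_mb = list(range(1, 21))  # hypothetical steadily leaking metric
    print(select_model(heap_mb), forecast(heap_mb, 5), predict_violation(heap_mb, 5, 23.5))
```

Running detection on the forecast values rather than only on the latest sample is what turns the same threshold into an early warning.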

For protecting a distributed enterprise system against multi-stage attacks (MSAs), we have developed a solution called the Distributed Intrusion and Attack Detection System (DIADS) [19,21]. DIADS has a central inferencing engine that models MSAs as attack graphs. DIADS creates a Bayesian Network (BN) from an attack graph, and sensor alerts are mapped to the observable (or evidence) nodes of the attack graph (typical sensors are network-based intrusion detection sensors such as Snort and Bro, and host-based intrusion detection sensors such as Tripwire). The engine receives inputs from the sensors and performs inferencing to determine whether the sensors need to be reconfigured, i.e., whether a new sensor should replace an existing one, whether a sensor should be placed elsewhere, or whether certain rules within a sensor should be turned on or off. Thus, the inferencing engine has a two-way communication path with the sensors: it obtains alerts from them and then reconfigures them once the inferencing is done. DIADS determines changes made to the protected system by parsing changes to firewall rules at network points as well as at individual hosts, and updates the BN accordingly. If, on the basis of current evidence, it determines that a critical asset (also referred to as a “crown jewel”) will imminently be compromised, it determines which further sensors close to the asset should be enabled or, equivalently, which further rules in an already active sensor should be turned on. Critical assets are designated by the system owner, so DIADS takes relevant input from the owner into account.
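To illustrate the kind of inference involved, the sketch below builds a tiny Bayesian network for a hypothetical three-step attack path (web server, then developer box, then database) and computes the posterior probability that the database is compromised given sensor alerts, by exhaustive enumeration. All probabilities, sensor names, and the 0.3 escalation threshold are made up for illustration; DIADS's own attack graphs and inference engine are more elaborate.

```python
from itertools import product

# Hypothetical attack path: web server -> developer box -> database (crown jewel).
P_WEB = 0.10
P_DEV_GIVEN_WEB = {True: 0.60, False: 0.01}
P_DB_GIVEN_DEV = {True: 0.50, False: 0.001}
# Sensor model: observed node, P(alert | compromised), P(alert | not compromised).
SENSORS = {"snort_web": ("web", 0.90, 0.05), "tripwire_dev": ("dev", 0.80, 0.05)}


def bernoulli(p: float, value: bool) -> float:
    return p if value else 1.0 - p


def posterior_db(evidence: dict) -> float:
    """P(database compromised | evidence) by enumerating all hidden states.

    evidence: sensor name -> True (alert seen) or False (no alert);
    sensors absent from the dict are unobserved.
    """
    num = den = 0.0
    for web, dev, db in product((True, False), repeat=3):
        state = {"web": web, "dev": dev, "db": db}
        p = (bernoulli(P_WEB, web)
             * bernoulli(P_DEV_GIVEN_WEB[web], dev)
             * bernoulli(P_DB_GIVEN_DEV[dev], db))
        for sensor, alerted in evidence.items():
            node, tpr, fpr = SENSORS[sensor]
            p *= bernoulli(tpr if state[node] else fpr, alerted)
        den += p
        if db:
            num += p
    return num / den


def needs_more_sensing(evidence: dict, threshold: float = 0.3) -> bool:
    """Hypothetical policy: enable extra sensors near the crown jewel."""
    return posterior_db(evidence) > threshold


if __name__ == "__main__":
    for ev in ({}, {"snort_web": True}, {"snort_web": True, "tripwire_dev": True}):
        print(ev, round(posterior_db(ev), 3), needs_more_sensing(ev))
```

Each additional alert along the path raises the posterior for the crown jewel, and only the combination of evidence crosses the escalation threshold, which is the behavior a single greedy detector cannot reproduce.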

DIADS can operate incrementally when the configuration of the protected system changes, for example through the addition of new hosts or changes to connectivity between existing hosts. DIADS can also perform incremental inferencing when attack paths change, either because their probabilities change or because some paths are deactivated altogether. Our system is being used in an internal cyber test range at Northrop Grumman and for intrusion detection in the IT infrastructure of our NSF center NEEScomm [23].

Figure 5. Overview of the approach of DIADS to placing and configuring intrusion detection sensors in a changing enterprise environment

3.3 What’s Coming Up

In continuing work, we are developing more sophisticated machine learning techniques for detecting and predicting subtle software bugs related to resource leaks and resource exhaustion. Since the number of possible features is large, we are developing partially automated techniques for selecting the features fed into our models. For predicting hard disk drive failures, we have shown [22] that the number and frequency of soft errors alone have poor predictive power. We believe that more intricate patterns of soft errors, considering, among other things, their different severity levels, are needed to improve predictability.

In the security work, we are building proactive techniques to prevent novel (zero-day) attacks from breaching the security of multiple connected components in a distributed system. Our solution will involve learning from prior attacks so that variants of these attacks can be thwarted; the learning involves generalizing the specific attack steps. A complementary strategy hides the locations of key services through judicious use of randomization. By judicious, we mean that legitimate clients will still be able to use the services, but suspect clients will not.

4 Research Thrust 3: Dependability of embedded wireless devices and networks

4.1 Problem Statement

In recent years, advances in hardware and software tools have led to many real-world deployments of multi-hop wireless networks, i.e., wireless networks that require little fixed infrastructure. Management of already deployed multi-hop networks is an important issue, and one of the crucial management tasks is software reconfiguration. During the lifetime of a multi-hop network, the software running on the nodes may need to be changed for various reasons, such as correcting software bugs, modifying the application to match changing environmental conditions in the deployment, and adapting to evolving user requirements. Software updates are often frequent in multi-hop networks for several reasons: the harsh and unpredictable environments in which the nodes are deployed, the time-varying nature of the wireless channel, the lack of robust software development tools, interference in the unlicensed ISM band, topology changes caused by node mobility, battery outages, etc. Since a multi-hop network may consist of hundreds or even thousands of nodes, situated at places that are difficult or sometimes impossible to access physically, remote reprogramming is essential. The two most critical metrics for such reprogramming are energy efficiency and speed. Multi-hop networks are often battery-powered and must operate unattended for long periods of time. Radio transmission is often the most energy-expensive operation in these networks, and since energy is a very scarce resource, conserving it during reprogramming is essential. Also, since the performance of the network may be degraded, or even reduced to zero, during the software update process, the technique must reduce reprogramming time significantly compared to existing reprogramming protocols.

It is important to know what events occur in an embedded wireless node once it is deployed in the field, away from the convenience of an interactive debugger. Such visibility is useful for post-deployment testing [24], replay-based debugging [25,26], and performance and energy profiling of software components [27,28]. Prior software-based solutions to this problem have incurred high execution overhead and intrusiveness [25,27]. The intrusiveness changes the intrinsic timing behavior of the application, reducing the fidelity of the collected profile. Prior hardware-based solutions [29,30] have used dedicated ASICs or other tightly coupled changes to the embedded node’s processor, which significantly limits their applicability. Therefore, our goal is to design novel hardware-software approaches that can be deployed at scale (and therefore must be low-cost and energy-frugal) and that collect traces of different kinds of events without perturbing the timing of the application.

We are also motivated by the trend toward more resource-rich mobile devices that we carry on our bodies, such as smartphones. As more critical applications run on smartphones, it is important to analyze the failure characteristics of these platforms: how the different components fail, and how these failures differ from traditional software failures, given that the software has an event-driven flavor and needs to handle multiple input devices, sometimes providing inputs concurrently. This investigation should uncover vulnerabilities exposed either by careless programming or by maliciously crafted inputs sent in from the outside, for example in the form of text messages or inputs fed to a sensor on the smartphone.

4.2 Solution Approach

We have developed a suite of reprogramming techniques and currently hold a US patent for the fastest multi-hop reprogramming protocol. One of our solutions, Zephyr [31], is an incremental reprogramming protocol that exploits the fact that, in real-world scenarios, the software running on sensor nodes evolves through incremental changes to functionality. Zephyr significantly reduces reprogramming time and energy by wirelessly transferring only the difference between the old and new versions of the software rather than the entire new software. The wireless nodes then build the new image from the difference and the old image.
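Zephyr's actual differencing algorithm is not reproduced here. As a hedged sketch of the general idea, the code below computes an rsync-style delta: the new image is expressed as COPY operations referencing fixed-size blocks of the old image plus literal bytes for anything not found, and a node rebuilds the new image from the old one and the delta. The block size is an arbitrary assumption, and a dictionary lookup replaces rsync's rolling checksum for clarity.

```python
from typing import List, Tuple, Union

Op = Union[Tuple[str, int, int], Tuple[str, bytes]]  # ("COPY", off, n) | ("LIT", data)


def make_delta(old: bytes, new: bytes, block: int = 4) -> List[Op]:
    """Express `new` as copies of aligned blocks of `old` plus literal bytes."""
    index = {}
    for i in range(0, len(old) - block + 1, block):
        index.setdefault(old[i:i + block], i)  # block contents -> offset in old
    ops: List[Op] = []
    lit = bytearray()
    j = 0
    while j < len(new):  # scan `new` at every offset, as rsync does
        chunk = new[j:j + block]
        if len(chunk) == block and chunk in index:
            if lit:
                ops.append(("LIT", bytes(lit)))
                lit = bytearray()
            ops.append(("COPY", index[chunk], block))
            j += block
        else:
            lit.append(new[j])  # no match here: ship this byte verbatim
            j += 1
    if lit:
        ops.append(("LIT", bytes(lit)))
    return ops


def apply_delta(old: bytes, ops: List[Op]) -> bytes:
    """What a node runs: rebuild the new image from its old image and the delta."""
    out = bytearray()
    for op in ops:
        if op[0] == "COPY":
            _, off, n = op
            out += old[off:off + n]
        else:
            out += op[1]
    return bytes(out)


if __name__ == "__main__":
    old_image = bytes(range(64))  # stand-in for the currently installed image
    new_image = old_image[:20] + b"\xff\xfe" + old_image[20:]  # a 2-byte insertion
    delta = make_delta(old_image, new_image)
    literal = sum(len(op[1]) for op in delta if op[0] == "LIT")
    print(len(delta), "ops,", literal, "literal bytes")
```

Only the literal bytes and the short COPY descriptors would need to cross the radio, which is where the time and energy savings of incremental reprogramming come from.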

Information dissemination protocols used in wireless multi-hop networks expend energy not only during the data-item dissemination phase but also during the steady state, when no dissemination is actually taking place. Energy expenditure in the steady state is needed because of transient wireless link failures, incremental node deployment, and node mobility. To keep all nodes up to date at all times, existing dissemination protocols have wireless nodes periodically advertise their metadata (e.g., the version number of the data item the node currently has) in the steady state. Thus, the steady-state energy cost grows linearly with the duration of the steady state, which is the dominant phase of a node’s lifetime. We have developed Varuna [32], a maintenance algorithm that incurs a fixed maintenance cost, independent of the steady-state duration, at the price of maintaining a small amount of state (and hence memory) at each node.

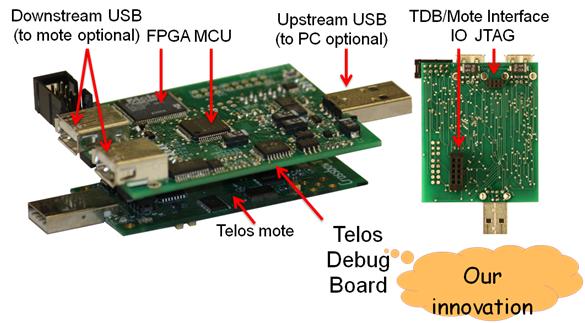

We have designed and prototyped Aveksha [27], a hardware-software approach for tracing applications running on an embedded wireless node in a non-intrusive manner. Our approach is based on the key insight that most embedded processors have an on-chip debug module (traditionally used for interactive debugging) that provides significant visibility into the internal state of the processor. We designed a debug board that interfaces with the on-chip debug module of an embedded node’s processor through the JTAG port and provides three modes of event logging and tracing: breakpoint, watchpoint, and program counter polling. Using the expressive triggers that the on-chip debug module supports, Aveksha can watch for, and record, a variety of programmable events of interest. A key feature of Aveksha is that the target processor does not have to be stopped during event logging (in the last two of the three modes), subject to a limit on the rate at which logged events occur. Aveksha also monitors the power of the embedded wireless node and, importantly, enables power consumption data to be correlated with events of interest.
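As an illustration of how program counter polling and power monitoring can be combined on the host side, the sketch below maps each sampled PC value to the function containing it through a sorted symbol table and attributes the energy of each sample to that function. The symbol table, sampling period, and supply voltage are hypothetical, and the sketch assumes each function extends to the start of the next one, so samples past the last listed start are attributed to the last function.

```python
import bisect
from collections import Counter, defaultdict

# Hypothetical symbol table from the firmware image: (start address, function).
SYMBOLS = [(0x4000, "main"), (0x4120, "radio_send"),
           (0x4300, "sense_task"), (0x4480, "lpl_check")]
STARTS = [addr for addr, _ in SYMBOLS]


def function_at(pc: int) -> str:
    """Function whose start address is the greatest one not above `pc`."""
    i = bisect.bisect_right(STARTS, pc) - 1
    return SYMBOLS[i][1] if i >= 0 else "<unknown>"


def profile(samples, period_s: float, supply_v: float = 3.0):
    """samples: (pc, current_amps) pairs taken every `period_s` seconds.

    Returns (sample counts per function, energy in joules per function).
    """
    hits = Counter()
    energy_j = defaultdict(float)
    for pc, amps in samples:
        fn = function_at(pc)
        hits[fn] += 1
        energy_j[fn] += supply_v * amps * period_s  # E = V * I * dt per sample
    return hits, dict(energy_j)


if __name__ == "__main__":
    polled = [(0x4010, 0.002), (0x4150, 0.020), (0x4160, 0.020), (0x4310, 0.004)]
    counts, energy = profile(polled, period_s=1e-3)
    print(counts.most_common(), energy)
```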

Aveksha is an operating-system-agnostic solution. We demonstrate its functionality and performance using applications in TinyOS and Contiki, and show that Aveksha can trace tasks and other generic events at function-level and task-level granularity. We have also used Aveksha to find a subtle bug in the TinyOS low power listening protocol.
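Function-level granularity from program counter polling can be obtained by mapping each sampled PC value to the function whose address range contains it. The sketch below assumes a symbol table of `(start_addr, size, name)` entries, such as one extracted from the firmware image; the symbol names and addresses are hypothetical, and this is not a description of Aveksha’s own post-processing.

```python
from bisect import bisect_right
from collections import Counter
from typing import Iterable, List, Optional, Tuple

Symbol = Tuple[int, int, str]  # (start_addr, size_in_bytes, function_name), hypothetical


def build_symbol_index(symbols: Iterable[Symbol]) -> Tuple[List[int], List[Symbol]]:
    """Sort symbols by start address so lookups can use binary search."""
    ordered = sorted(symbols)
    return [start for start, _, _ in ordered], ordered


def pc_to_function(pc: int, index: Tuple[List[int], List[Symbol]]) -> Optional[str]:
    """Return the function containing pc, or None if pc lies outside every symbol."""
    starts, ordered = index
    i = bisect_right(starts, pc) - 1          # last symbol starting at or before pc
    if i >= 0:
        start, size, name = ordered[i]
        if pc < start + size:
            return name
    return None


def function_profile(pc_samples: Iterable[int], symbols: Iterable[Symbol]) -> Counter:
    """Count how many polled PC samples fall inside each function."""
    index = build_symbol_index(symbols)
    return Counter(pc_to_function(pc, index) for pc in pc_samples)


if __name__ == "__main__":
    syms = [(0x4000, 0x40, "main"), (0x4040, 0x20, "send_packet"),
            (0x4060, 0x10, "timer_fired")]
    print(function_profile([0x4004, 0x4042, 0x4050, 0x4062, 0x5000], syms))
```

Because polling samples the PC rather than logging every instruction, the resulting counts approximate where time is spent; samples that fall outside every known symbol are reported under `None`.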

Figure 6. Aveksha’s debug board interfaced with a TI microcontroller

For the smartphone study, we have analyzed the bug reports of two open-source OSes, Android and Symbian, and derived a failure characterization across their modules [33]. Our study indicates that development tools, web browsers, and multimedia applications are the most error-prone modules in both systems. We further categorized the types of code modifications required for the fixes. The analysis shows that 78% of errors required only minor code changes, the largest share of which were modifications to attribute values and conditions. Our final analysis focuses on the relation between customizability, code complexity, and reliability in Android and Symbian. We find that despite high cyclomatic complexity, the bug densities in Android and Symbian are surprisingly low. However, support for customizability does impact the reliability of mobile OSes, which offers cautionary lessons for their further development.
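The two metrics compared above can be stated precisely. The sketch below uses McCabe’s standard definition of cyclomatic complexity, M = E − N + 2P over a control-flow graph, and a common definition of bug density as bugs per thousand lines of code (KLOC); the exact normalization used in the study may differ, and the inputs shown are hypothetical.

```python
def cyclomatic_complexity(edges: int, nodes: int, components: int = 1) -> int:
    """McCabe's metric M = E - N + 2P for a control-flow graph with
    E edges, N nodes, and P connected components."""
    return edges - nodes + 2 * components


def bug_density_per_kloc(bug_count: int, lines_of_code: int) -> float:
    """Reported bugs per thousand lines of code (one common normalization)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return bug_count / (lines_of_code / 1000)


if __name__ == "__main__":
    # A single if/else: nodes {cond, then, else, join}, edges
    # cond->then, cond->else, then->join, else->join.
    print(cyclomatic_complexity(edges=4, nodes=4))            # one decision point
    print(bug_density_per_kloc(bug_count=30, lines_of_code=150_000))
```

A module can therefore score high on cyclomatic complexity (many decision points) while still having few reported bugs per KLOC, which is the combination the study observed.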

In more recent work [34], we have developed a software fault injector to test the robustness of Android’s Inter-Process Communication (IPC) mechanism, whose messages Android calls “Intents”. We have used the injector to discover vulnerabilities exploitable through random (or crafted) Intents, and we then provide recommendations for hardening Android IPC. During our experiments, we sent more than 6 million Intents to 800+ application components across 3 versions of Android (2.2, 2.3.4, and 4.0) and discovered a significant number of input validation errors. In general, fewer than 10% of the components tested crashed, and all crashes were caused by unhandled exceptions. Our results suggest that Android has a sizable number of components with unhandled NullPointerExceptions across all versions. Our most striking finding is the ability to run privileged processes from user-level applications without the user-level application being granted any special permission at install time. We found three instances in which our injector, an unprivileged app, could crash the Android runtime. Such a crash renders the Android device unusable until it is rebooted. This creates considerable potential for denial-of-service attacks and may even lead to further security vulnerabilities, if an adversary could find a way to have these malformed (or “fuzzed”) Intents sent in response to some external message.
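The core of Intent fuzzing is generating semi-valid Intents in which each field is independently well-formed, random, or missing. The sketch below is a host-side illustration, not the injector from [34] (which ran as an unprivileged app on the device). The action and URI pools and the component name are hypothetical; the generated argument lists use the `-n`, `-a`, `-d`, and `--es` options of Android’s `am start` command, and the sketch only builds them without executing anything.

```python
import random
import string
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical pools; a real campaign would harvest actions and components
# from each application's manifest.
KNOWN_ACTIONS = ["android.intent.action.VIEW", "android.intent.action.SEND"]
URI_SCHEMES = ["http://", "content://", "file://", "tel:"]


@dataclass
class FuzzedIntent:
    component: str                       # e.g. "com.example/.MainActivity" (hypothetical)
    action: Optional[str] = None
    data_uri: Optional[str] = None
    extras: Dict[str, str] = field(default_factory=dict)

    def to_am_args(self) -> List[str]:
        """Arguments for `adb shell am start`, one way to deliver this Intent."""
        args = ["am", "start", "-n", self.component]
        if self.action is not None:
            args += ["-a", self.action]
        if self.data_uri is not None:
            args += ["-d", self.data_uri]
        for key, value in self.extras.items():
            args += ["--es", key, value]   # string extra
        return args


def random_token(rng: random.Random, max_len: int = 16) -> str:
    alphabet = string.ascii_letters + string.digits
    return "".join(rng.choice(alphabet) for _ in range(rng.randint(1, max_len)))


def fuzz_intents(component: str, count: int, seed: int = 0) -> List[FuzzedIntent]:
    """Semi-valid fuzzing: each field is independently valid, random, or omitted."""
    rng = random.Random(seed)            # seeded so that any crash can be replayed
    intents = []
    for _ in range(count):
        action = rng.choice([None, rng.choice(KNOWN_ACTIONS), random_token(rng)])
        data_uri = rng.choice([None, rng.choice(URI_SCHEMES) + random_token(rng)])
        extras = {random_token(rng, 8): random_token(rng)
                  for _ in range(rng.randint(0, 2))}
        intents.append(FuzzedIntent(component, action, data_uri, extras))
    return intents


if __name__ == "__main__":
    for intent in fuzz_intents("com.example/.MainActivity", 3, seed=1):
        print(" ".join(intent.to_am_args()))
```

Omitted fields (`None`) are deliberate: a component that dereferences a missing action or data URI without checking is exactly the kind of unhandled NullPointerException the study reports.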

4.3 What’s Coming Up

We are now designing a software-only solution for tracing events of interest in an embedded wireless node. Conventional wisdom holds that a pure software solution introduces too much overhead to be feasible for near-real-time systems, such as our target embedded wireless networks. We observe, however, that for record and replay, the event streams are highly compressible. So, although the raw data rate of non-deterministic events is high, understanding their inherent redundancies leaves significant scope for compression.
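One generic way to exploit such redundancy is to delta-encode timestamps and then run-length encode repeated `(delta, event_id)` pairs, so that a periodic event such as a timer interrupt collapses to a single record. The sketch below illustrates that generic technique under a hypothetical `(timestamp_ticks, event_id)` event format; it is not the compression scheme the lab is designing.

```python
from typing import List, Tuple

Event = Tuple[int, int]          # (timestamp_ticks, event_id), hypothetical format
Run = Tuple[int, int, int]       # (timestamp_delta, event_id, run_length)


def compress(events: List[Event]) -> List[Run]:
    """Delta-encode timestamps, then run-length encode identical
    consecutive (delta, event_id) pairs."""
    runs: List[Run] = []
    prev_t = 0
    for t, eid in events:
        delta = t - prev_t
        prev_t = t
        if runs and runs[-1][0] == delta and runs[-1][1] == eid:
            d, e, n = runs[-1]
            runs[-1] = (d, e, n + 1)     # extend the current run
        else:
            runs.append((delta, eid, 1))
    return runs


def decompress(runs: List[Run]) -> List[Event]:
    """Invert compress(): expand runs and re-accumulate absolute timestamps."""
    events: List[Event] = []
    t = 0
    for delta, eid, n in runs:
        for _ in range(n):
            t += delta
            events.append((t, eid))
    return events


if __name__ == "__main__":
    # A timer interrupt (id 7) firing every 100 ticks, 1000 times.
    timer = [(100 * k, 7) for k in range(1, 1001)]
    print(len(timer), "events ->", compress(timer))
```

The round trip is lossless, which record and replay requires; irregular events simply produce shorter runs and less compression.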

We are extending our information dissemination work from static networks to mobile networks, using vehicular networks as the target. We are finding that current data dissemination algorithms, which are designed for static or low-mobility ad hoc networks, become ineffective in a mixed network of static and mobile nodes (i.e., static infrastructure and mobile vehicles). Existing algorithms propagate information from a single source to a single destination, whereas in our problem scenario many nodes are interested in a particular piece of information (say, weather or traffic) and these nodes are not geographically clustered in the same region. We are developing a protocol that uses the mobility of vehicles to disseminate useful data beyond the reach of the existing infrastructure. Every vehicle would be equipped with a radio transceiver that can communicate both with fixed infrastructure transmitters and with radios installed in other vehicles on the road. Each vehicle will collect new information while in range of the infrastructure transmitters and exchange the most up-to-date information with vehicles it encounters outside this range. It will also estimate the mobility of other vehicles to determine which of them are good candidates for data transfer.
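The two per-vehicle steps described above, keeping the most up-to-date copy of each piece of information and choosing good carriers, can be sketched as follows. Everything here is a hypothetical illustration rather than the protocol under development: items are versioned per topic, and mobility is estimated with a deliberately simple constant-velocity model that favors neighbors expected to end up farthest from any infrastructure transmitter.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Item = Tuple[int, str]            # (version, payload), hypothetical representation


def merge_newest(mine: Dict[str, Item], theirs: Dict[str, Item]) -> Dict[str, Item]:
    """Keep the higher-versioned copy of every topic (e.g. 'weather', 'traffic')."""
    merged = dict(mine)
    for topic, item in theirs.items():
        if topic not in merged or item[0] > merged[topic][0]:
            merged[topic] = item
    return merged


@dataclass
class Vehicle:
    vid: str
    x: float                      # position in metres
    y: float
    vx: float                     # estimated velocity in m/s
    vy: float


def predicted_reach(v: Vehicle, towers: List[Tuple[float, float]],
                    horizon_s: float) -> float:
    """Distance from the nearest infrastructure transmitter after horizon_s,
    assuming the vehicle keeps its current velocity."""
    px, py = v.x + v.vx * horizon_s, v.y + v.vy * horizon_s
    return min(math.hypot(px - tx, py - ty) for tx, ty in towers)


def rank_carriers(neighbors: List[Vehicle], towers: List[Tuple[float, float]],
                  horizon_s: float = 60.0) -> List[Vehicle]:
    """Prefer neighbors expected to carry data farthest beyond coverage."""
    return sorted(neighbors, key=lambda v: predicted_reach(v, towers, horizon_s),
                  reverse=True)


if __name__ == "__main__":
    towers = [(0.0, 0.0)]
    neighbors = [Vehicle("b", 100, 0, -1, 0), Vehicle("a", 100, 0, 10, 0)]
    print([v.vid for v in rank_carriers(neighbors, towers)])
    print(merge_newest({"weather": (3, "rain")}, {"weather": (5, "sun")}))
```

A constant-velocity estimate is the simplest possible mobility model; road topology or historical traces would likely give better carrier choices.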

With smartphones, we are implementing the suggested changes to improve the software reliability of the Android IPC mechanism, and we aim to determine how much each mechanism improves robustness and how much change it requires to the existing code base. In future work, we will look at other aspects of the reliability of the Android OS, such as the Binder at the kernel level, and examine how far lessons from software reliability architecture apply there.

5 References

2. Hewlett-Packard, “IO Accelerator,” At: http://h71028.www7.hp.com/enterprise/us/en/solutions/storage-io-accelerator.html. Last accessed: May 14, 2012.

11. IBM, “Infosphere BigInsights,” At: http://www.ibm.com/software/data/infosphere/biginsights/. Last accessed: May 14, 2012.

19. Jan Rellermeyer and Saurabh Bagchi, “Dependability as a Cloud Service - A Modular Approach,” In: Proceedings of the 2nd International Workshop on Dependability of Clouds, Data Centers, and Virtual Machine Technology (DCDV 2012, in conjunction with DSN 2012), pp. 1-6, Boston, MA, June 2012.

20. Gaspar Modelo-Howard, Saurabh Bagchi, and Guy Lebanon, “Determining Placement of Intrusion Detectors for a Distributed Application through Bayesian Network Modeling,” At the 11th International Symposium on Recent Advances in Intrusion Detection (RAID), pp. 271-290, Boston, MA, September 15-17, 2008.

23. Purdue University, “NEES – Cybersecurity,” At: http://nees.org/explore/security. Last accessed: May 16, 2012.

30. Green Hills Software Inc., “Processor Probes,” At: http://www.ghs.com/products/debugdevices.html. Last accessed: May 17, 2012.