The Intelligent Shelf Project

Faces are central to the visual component of human interaction. Classic computer vision tasks that build on this include face recognition and visual focus of attention (V-FOA) analysis. For a computer vision system to perform these tasks in a generic environment, it must not only locate the face within the image but also estimate the pose of the face, that is, the orientation of the face in some coordinate frame.

As an example of a face pose estimation system, we introduce the Intelligent Shelf project. In this project, we envision a grocery-store shelf lined with small, unobtrusive cameras. As a person peruses the contents of the shelf, the cameras would detect the pose of the person's face and estimate where on the shelf they are looking. This information can be used to improve the ergonomic design of stores and the layout of items within them, making things easier for customers to find.
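To make the idea of "where on the shelf they are looking" concrete, the following is a minimal geometric sketch, not the project's actual method. It assumes a hypothetical shelf coordinate frame in which the shelf front is the plane z = 0, the person stands at z > 0, and the face orientation is given as yaw and pitch angles, with (0, 0) meaning the face points squarely at the shelf. The function name `gaze_point_on_shelf` and the angle convention are illustrative assumptions, and the facing direction is used as a stand-in for the true gaze direction.

```python
import math


def gaze_point_on_shelf(face_pos, yaw_deg, pitch_deg):
    """Intersect the face's forward direction with the shelf plane z = 0.

    face_pos  -- (x, y, z) of the face in the assumed shelf frame, z > 0
    yaw_deg   -- rotation about the vertical axis; 0 faces the shelf
    pitch_deg -- upward (+) or downward (-) tilt of the face
    Returns (x, y) on the shelf plane, or None if the face does not
    point toward the shelf.
    """
    x, y, z = face_pos
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)

    # Unit vector along the facing direction (assumed convention).
    dx = math.sin(yaw) * math.cos(pitch)
    dy = math.sin(pitch)
    dz = -math.cos(yaw) * math.cos(pitch)

    # A face turned sideways or away never reaches the plane z = 0.
    if dz >= -1e-9:
        return None

    # Solve z + t * dz = 0 for the ray parameter t, then step along the ray.
    t = -z / dz
    return (x + t * dx, y + t * dy)


if __name__ == "__main__":
    # A face 1 m in front of the shelf, turned 20 degrees and tilted down 10.
    print(gaze_point_on_shelf((0.5, 1.6, 1.0), 20.0, -10.0))
```

In a real deployment the pose estimate is noisy, so the intersection point would more plausibly be treated as the center of a region of interest on the shelf than as an exact location.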

Figure 1: Camera frame representing the Intelligent Shelf

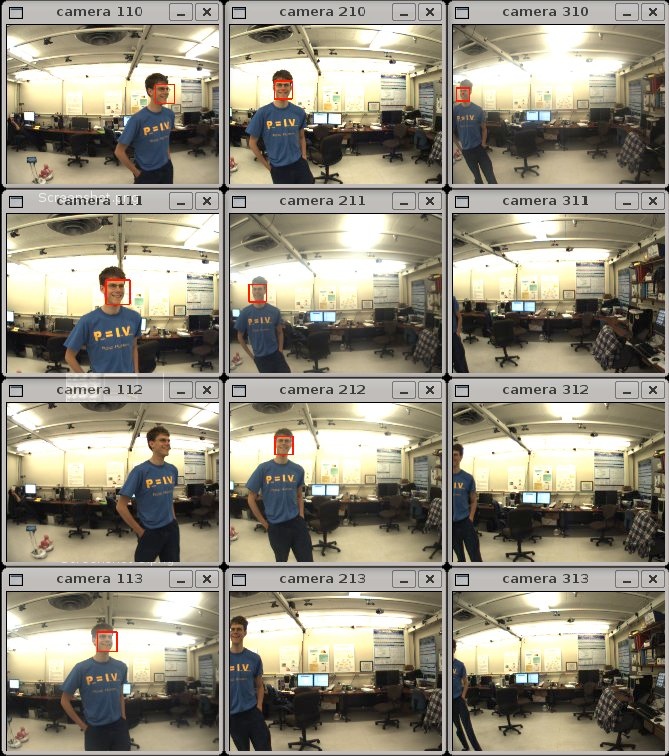

As an initial prototype, we have constructed a vertical frame representing the shelf, shown in Figure 1, on which we have mounted twelve cameras. These cameras capture multi-view image sequences of people in front of the camera wall; example images are shown in Figure 2.

Figure 2: Example images from the cameras

In a large camera network like the one required for the Intelligent Shelf project, distributed processing of the camera images is essential. In this project, we are experimenting with techniques for efficiently estimating the pose of each person's face that minimize bandwidth requirements while maintaining the accuracy of the detections.

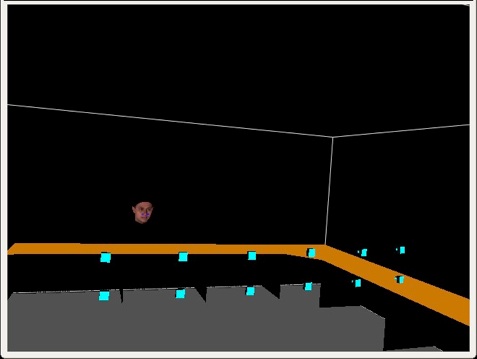

In our current system, estimates of each face's position and orientation are sent to a central evidence accumulation coordinator, which produces a rough pose estimate of the head. This evidence accumulation framework eliminates false detections and improves the accuracy of the pose estimates. The accumulated head positions are displayed in a graphical user interface (GUI) (see Figure 3).

Figure 3: Graphical user interface showing the estimated face position for the images shown in Figure 2.
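The evidence accumulation step can be illustrated with a small sketch. The page does not describe the coordinator's algorithm, so the following is only one plausible scheme under stated assumptions: each camera reports a head position already expressed in a shared world frame, nearby reports are grouped greedily, and a group is kept only if at least `min_cameras` distinct cameras support it. The function name `accumulate`, the `radius` and `min_cameras` parameters, and their default values are hypothetical, and orientation is omitted for brevity.

```python
import math


def accumulate(detections, radius=0.15, min_cameras=2):
    """Fuse per-camera head position reports into head estimates.

    detections  -- list of (camera_id, (x, y, z)) in a shared world frame
    radius      -- reports closer than this to a cluster centroid are merged
    min_cameras -- clusters seen by fewer distinct cameras are discarded
    Returns a list of (x, y, z) centroids, one per accepted head.
    """
    clusters = []
    for camera_id, pos in detections:
        for cluster in clusters:
            if math.dist(pos, cluster["centroid"]) <= radius:
                cluster["points"].append(pos)
                cluster["cameras"].add(camera_id)
                n = len(cluster["points"])
                # Recompute the centroid as the mean of all merged reports.
                cluster["centroid"] = tuple(
                    sum(p[i] for p in cluster["points"]) / n for i in range(3)
                )
                break
        else:
            # No existing cluster is close enough: start a new one.
            clusters.append(
                {"points": [pos], "cameras": {camera_id}, "centroid": tuple(pos)}
            )

    # A report seen by only one camera is treated as a likely false detection.
    return [c["centroid"] for c in clusters if len(c["cameras"]) >= min_cameras]


if __name__ == "__main__":
    reports = [
        (1, (0.00, 1.60, 1.00)),
        (2, (0.02, 1.62, 1.00)),
        (3, (2.00, 0.50, 1.00)),  # isolated report, dropped by the filter
    ]
    print(accumulate(reports))
```

Because only a camera identifier and a few numbers per face are sent to the coordinator in this sketch, it also reflects the bandwidth motivation: image data stays at the cameras and only compact estimates travel over the network.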

• Josiah Yoder

• Hidekasu Iwaki

• Johnny Park

[ogg] [avi]

This video demonstrates the range of positions and orientations which can be detected by our system.

The GUI uses a generic model, based on the average face from the USF Human ID Morphable Faces Database.

Real-time face pose estimation